In brief

- Vision Processing Units (VPUs) are specialized AI accelerators optimized for real-time image and video analysis on edge devices.

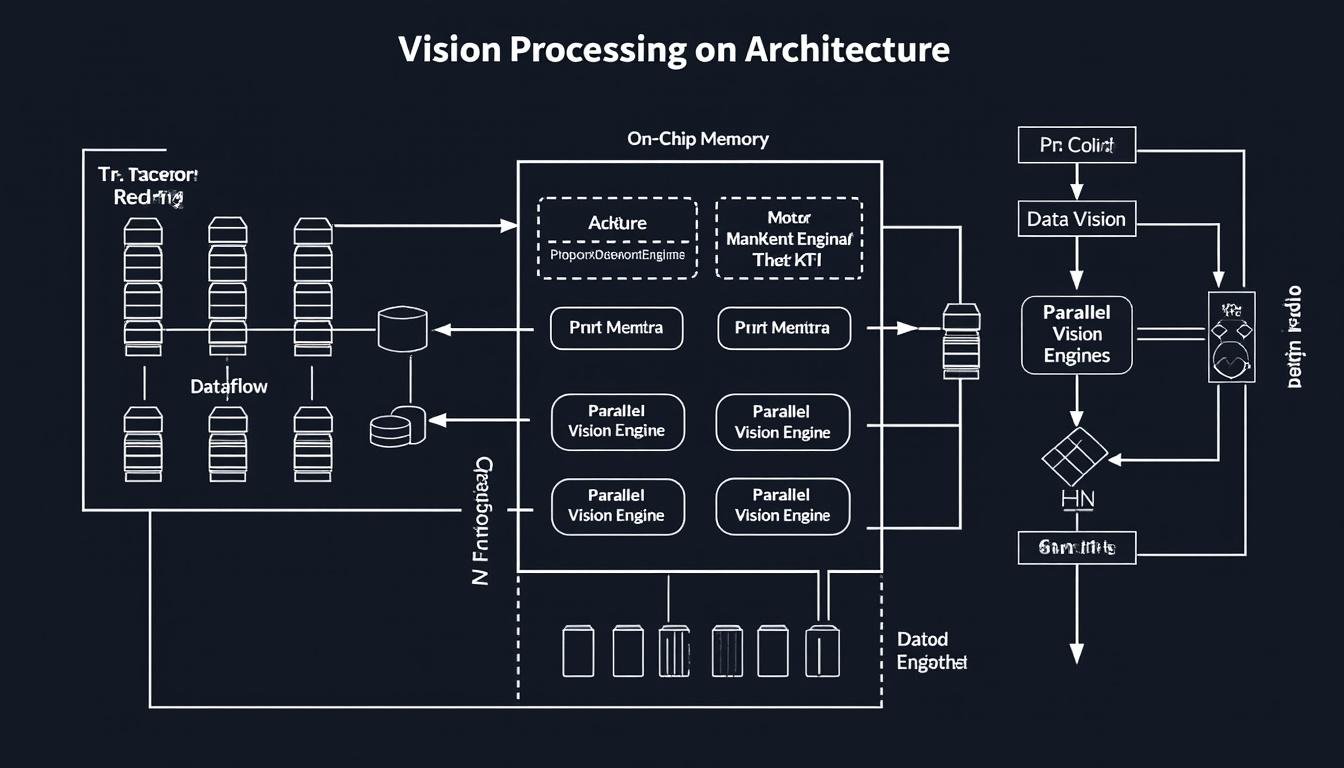

- VPUs use on-chip dataflow, scratchpad memory and parallel execution units to minimize data movement and power draw, outperforming traditional CPUs for vision pipelines.

- They differ from GPUs (graphics-oriented) and video processing units (less suited for dense machine-vision workloads).

- Key players in 2025 include Intel (Movidius lineage), NVIDIA, Google, Apple, Qualcomm, AMD, Samsung, Hikvision, and ARM.

- Applications span robotics, smart cameras, IoT, and autonomous devices.

As of 2025, VPUs sit at the intersection of edge AI and real-time vision applications, delivering deterministic latency with high energy efficiency. This article surveys what VPUs are, how they work, who the major vendors are, and which trends shape their development. It also looks at how Intel’s Movidius lineage, alongside established vendors and new entrants, is redefining on-device perception within a broader AI landscape that spans enterprise adoption and education.

Unleashing the Power of Vision Processing Units: Revolutionizing Image and Video Analysis

What exactly is a VPU, and why does it matter for image and video analysis? A Vision Processing Unit is a purpose-built AI accelerator for machine-vision tasks that prioritizes on-chip dataflow and dedicated scratchpad memory. Unlike GPUs, which are geared toward 3D graphics and general-purpose throughput, VPUs are optimized for the mix of sequential and parallel work in vision pipelines: preprocessing, feature extraction, object detection, and neural inference all pass through tightly coupled hardware blocks. They also differ from video processing units by providing deterministic latency and on-device intelligence, which are essential for real-time decision-making in robotics, smart cameras, and mobile devices. In 2025, the VPU market is marked by an expanding set of ecosystems and silicon families that address edge AI demands across automotive, security, and consumer electronics. For deeper context on AI hardware trends and enterprise perspectives, see resources on the landscape of artificial intelligence enterprises and the role of pioneering AI companies, which help frame how VPUs fit into broader AI strategy. Discussions of how Gemini is shaping modern AI leadership and of the impact of AI on education address the societal dimension of on-device intelligence.

| Aspect | VPUs | GPUs | Video Processing Units |
|---|---|---|---|
| Architecture focus | On-chip dataflow, scratchpad memory, dedicated vision blocks | Massively parallel cores for general compute | Video streams transformation, decoding/encoding |
| Latency | Low, deterministic for vision pipelines | High throughput, variable depending on workload | Moderate, optimized for streaming tasks |
| Power efficiency | High efficiency for edge devices | Higher power budget, desktop/server scales | Optimized for video throughput, moderate efficiency |
| Best-use cases | Real-time object detection, feature extraction, on-device inference | General AI workloads, 3D rendering, training/inference at scale | Video decoding/encoding, streaming analytics |

In contemporary deployments, VPUs sit alongside NVIDIA platforms for edge AI and are complemented by ARM and Qualcomm solutions in mobile and embedded contexts. Companies are increasingly building VPUs into security cameras, such as those from Hikvision, and into smart devices, where on-device vision reduces bandwidth and preserves privacy. For broader business context, consult the resources on linear normal models and linear mixed models, which can help analysts compare edge AI performance across modeling approaches, and on the future of AI enterprises.

Beyond the technical specifics, VPUs enable a new class of applications in which devices must interpret the world locally and instantly. This shift reduces cloud dependency, improves privacy, and lowers latency, advantages highlighted in industry discussions about the future of AI and education and in analyses of the power of VPUs in modern devices. For broader strategic context, analyses of Gemini’s insights on AI leadership and of the role of AI in education offer complementary perspectives.

Architectural nuances and performance indicators

- On-chip scratchpad memory minimizes data movement, reducing energy per inference.

- Dedicated vision accelerators handle convolution, normalization, and pooling with low latency.

- Edge deployments prioritize deterministic timing for safety-critical tasks (e.g., robotics).

- Tabulated performance metrics across common datasets and tasks can guide procurement decisions.

- Trade-offs between power, area, and accuracy depend on the target application (robotics vs. surveillance).

- Vendor ecosystems influence software tooling and SDK availability, impacting integration speed.
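
The first point in the list above, that scratchpad memory cuts data movement, can be illustrated with a rough counting model. The sketch below is not a description of any vendor's hardware: it assumes a single-channel 2D convolution with no padding and stride 1, and the function names and the 66x66 image, 3x3 kernel, and 16x16 tile sizes are hypothetical values chosen only for illustration. It compares how many pixels must be fetched from external memory when every output refetches its full window versus when each tile's input patch is loaded once into a scratchpad and reused.

```python
def naive_external_reads(height: int, width: int, kernel: int) -> int:
    """External-memory reads when every output pixel refetches its whole window."""
    out_h = height - kernel + 1
    out_w = width - kernel + 1
    return out_h * out_w * kernel * kernel


def tiled_external_reads(height: int, width: int, kernel: int, tile: int) -> int:
    """External-memory reads when each output tile's input patch is loaded
    once into a scratchpad and reused for every window inside the tile."""
    out_h = height - kernel + 1
    out_w = width - kernel + 1
    reads = 0
    for top in range(0, out_h, tile):
        tile_h = min(tile, out_h - top)
        for left in range(0, out_w, tile):
            tile_w = min(tile, out_w - left)
            # A tile of tile_h x tile_w outputs needs a halo of kernel - 1 pixels.
            reads += (tile_h + kernel - 1) * (tile_w + kernel - 1)
    return reads


if __name__ == "__main__":
    # Hypothetical sizes: 66x66 input, 3x3 kernel, 16x16 output tiles.
    naive = naive_external_reads(66, 66, 3)
    tiled = tiled_external_reads(66, 66, 3, tile=16)
    print(f"naive reads: {naive}, tiled reads: {tiled}")
```

With these illustrative sizes the model counts 36,864 external reads for the naive scheme and 5,184 for the tiled one. Real accelerators add caches, multiple channels, and weight traffic, so the actual savings depend on the architecture and workload.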

To broaden the technical and strategic context, explore resources on the AI landscape and the role of pioneering AI companies, which discuss modern AI infrastructure choices and enterprise adoption strategies.

Note: For deeper technical dives into VPU architectures and edge AI trends, see industry discussions and case studies that compare VPU architectures with contemporary GPUs and dedicated image processors.

Intel (including its Movidius line), NVIDIA, Google, Apple, Qualcomm, AMD, Samsung, Hikvision, and ARM continue to expand their VPU-related offerings, reinforcing on-device intelligence across consumer and industrial devices. To gain broader industry context, read about innovative AI leaders and the power of VPUs in processing technology.

Applications and use cases across industries

VPUs enable a spectrum of real-time vision capabilities across multiple sectors. In robotics, VPUs power autonomous navigation, grasping, and human-robot interaction with predictable latency and low power. In digital and smart cameras, VPUs deliver edge inference for facial recognition, scene understanding, and activity detection without cloud round-trips. In IoT and mobile devices, VPUs run on-device perception tasks that preserve privacy and reduce bandwidth. Healthcare and retail also benefit from on-device analytics, including patient monitoring and product analytics on edge devices. For a deeper look at the AI landscape and enterprise adoption, refer to the resources on AI enterprises and pioneering AI companies, and explore how education is reshaping AI literacy and workforce readiness.

| Industry | Representative VPUs/Use-cases | Value Delivered |
|---|---|---|
| Robotics | Object detection, SLAM, edge inference | Lower latency, reduced energy, safer autonomous operation |
| Smart cameras | Facial recognition, activity detection | Local processing, privacy preservation |
| IoT/mobile | Edge inference, sensor fusion | Bandwidth savings, faster responses |
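
The bandwidth savings listed in the table can be made concrete with back-of-the-envelope arithmetic. The sketch below compares streaming uncompressed frames to the cloud against sending only detection metadata produced on the device. Every number in it is an assumption chosen for illustration (1080p RGB video at 30 fps, 10 detections per frame, 32 bytes per detection record), and the function names are hypothetical.

```python
def raw_stream_bytes_per_second(width: int, height: int,
                                bytes_per_pixel: int, fps: int) -> int:
    """Bytes per second needed to ship every uncompressed frame off the device."""
    return width * height * bytes_per_pixel * fps


def metadata_bytes_per_second(detections_per_frame: int,
                              bytes_per_detection: int, fps: int) -> int:
    """Bytes per second needed to ship only on-device detection results."""
    return detections_per_frame * bytes_per_detection * fps


if __name__ == "__main__":
    # Assumed values for illustration only.
    raw = raw_stream_bytes_per_second(1920, 1080, bytes_per_pixel=3, fps=30)
    meta = metadata_bytes_per_second(detections_per_frame=10,
                                     bytes_per_detection=32, fps=30)
    print(f"raw video:  {raw / 1e6:.1f} MB/s")
    print(f"metadata:   {meta / 1e3:.1f} kB/s")
    print(f"reduction:  {raw // meta}x")
```

In practice cameras stream compressed video rather than raw frames, so the real gap is smaller than this model suggests. The point is the order of magnitude: sending results instead of pixels shrinks the uplink requirement substantially.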

For readers seeking broader industry context, the following resources provide complementary perspectives: AI concepts and applications, neural networks overview.

The synergy between VPUs and established hardware ecosystems accelerates time-to-market for vision-enabled products. Major vendors continue to enhance software ecosystems, SDKs, and developer tooling to ease integration with existing AI stacks from Google and Apple, while hardware partners like NVIDIA, AMD, and ARM contribute accelerators and compiler support. See additional context on AI leadership and the evolving enterprise landscape for a fuller picture.

To explore more about VPUs, consider these supplementary readings: power of VPU unlocking new frontiers, embracing AI opportunities, AI concepts and applications.

Movidius-based and other VPUs are also reshaping camera ecosystems, from Hikvision products to consumer devices, highlighting the practical value of on-device vision. For those exploring the broader implications of AI infrastructure, additional reads on neural networks and Alexandr Wang’s work offer strategic context.

ARM and Samsung continue to push edge AI capabilities, emphasizing low-power, compact VPUs for mobile and embedded devices. This complements the existing hardware mix from Intel and its Movidius lineage, NVIDIA platforms, and the broader hardware-software stack that powers modern vision applications. For deeper industry context, consult the industry and leadership analyses referenced above.

Intel and its hardware ecosystem, including Movidius, remain central to on-device vision development, while partnerships with camera vendors, automotive suppliers, and smartphone OEMs expand VPUs’ reach into new domains. See related analyses on AI enterprise landscapes and education to understand the broader impact of vision-enabled analytics.

Future trends, challenges and opportunities in VPU technology

- On-device intelligence will expand, with VPUs becoming embedded in more automotive, camera, and wearables contexts.

- Software ecosystems will mature, easing deployment and cross-vendor interoperability.

- Privacy and data sovereignty will boost demand for edge-only vision pipelines.

- Energy efficiency and silicon specialization will drive smaller, cheaper, and faster vision accelerators.

To keep a broader perspective, check out resources on AI education and enterprise strategy.

Roadmap considerations and practical adoption tips

- Define latency budgets tightly for critical tasks like object detection in robotics.

- Evaluate the software ecosystem and available SDKs when choosing a VPU vendor.

- Consider on-device privacy requirements and bandwidth constraints to justify edge inference strategies.
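
The first tip above, defining a tight latency budget, can be checked against measurements once a candidate device is on the bench. The following is a minimal sketch that assumes you have already collected per-frame inference latencies in milliseconds. The function names, the nearest-rank percentile method, and the sample numbers are illustrative choices, not part of any vendor SDK.

```python
import math
from typing import Sequence


def percentile_nearest_rank(samples: Sequence[float], percentile: float) -> float:
    """Return the nearest-rank percentile of the samples (0 < percentile <= 100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < percentile <= 100:
        raise ValueError("percentile must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(percentile * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_budget(latencies_ms: Sequence[float], budget_ms: float,
                         percentile: float = 99.0) -> bool:
    """True if the chosen tail percentile of measured latency fits the budget."""
    return percentile_nearest_rank(latencies_ms, percentile) <= budget_ms


if __name__ == "__main__":
    # Hypothetical measurements: four steady frames and one slow outlier.
    measured = [10.0, 12.0, 11.0, 13.0, 30.0]
    print("median ms:", percentile_nearest_rank(measured, 50))
    print("fits 20 ms budget at p99:", meets_latency_budget(measured, 20.0))
```

Checking a tail percentile rather than the average reflects the emphasis on deterministic timing: a device with a good mean but occasional slow frames can still miss a safety-critical deadline.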

For further context on AI leadership and the broader landscape, consult the following resources: pioneering AI companies, AI concepts.

FAQ

What is the main difference between a VPU and a GPU?

A VPU is optimized for on-device vision pipelines with dataflow architecture and scratchpad memory to minimize data movement and latency, whereas a GPU emphasizes broad parallel compute for generic AI and graphics workloads.

Why are VPUs important for edge devices in 2025?

VPUs provide deterministic latency and energy efficiency, enabling real-time vision tasks without relying on cloud processing, which improves privacy and reduces bandwidth requirements.

Which brands are leading in VPU development?

Key players include Intel (Movidius), NVIDIA, Google, Apple, Qualcomm, AMD, Samsung, Hikvision, ARM, and others that offer various edge AI accelerators and vision-specific blocks.

How do VPUs differ from video processing units?

VPUs are optimized for machine-vision workloads with complex pipelines and AI inference, while video processing units focus primarily on video decode/encode and streaming tasks.