In brief

- The GDPR landscape for Large Language Models (LLMs) in 2025 is characterized by fundamental tensions between data privacy rights, model training paradigms, and regulatory expectations.

- Key challenges include data diffusion across billions of parameters, practical data erasure, limited transparency, and the tension with data minimization and anonymization principles.

- Practical compliance requires a blend of governance, privacy-preserving techniques, and regulatory adaptation that balances innovation with user rights.

- Industry actors such as Microsoft, Google, OpenAI, and AWS are actively exploring technical controls and policy frameworks that align LLM development with privacy requirements, while vendors like IBM, Meta, Salesforce, SAP, Oracle and Palantir contribute to ecosystem standards and auditability.

- For organizations aiming to adopt LLMs responsibly, a layered approach that combines data provenance, risk assessments, and user-centric transparency is essential, complemented by accessible resources such as advisory content on business applications.

In the digital era, the General Data Protection Regulation (GDPR) remains a foundational shield for personal data, but the advent of large language models (LLMs), including OpenAI's GPT-family systems and the transformer-based models developed by Google, Microsoft, and others, complicates enforcement. LLMs learn from vast, heterogeneous corpora and generate text by predicting likely next tokens rather than retrieving stored records. This property reshapes how rights such as erasure and access, and obligations such as documenting data provenance, operate in practice. The regulatory challenge is not merely technical; policy design, machine learning theory, and real-world deployment all meet here. As of 2025, policymakers, auditors, and engineers need to collaborate on frameworks that protect individuals while enabling responsible AI innovation. The following sections dissect these dynamics, offer actionable guidance for practitioners, and illustrate how industry players are translating GDPR principles into concrete controls and processes. The aim is to show a path where privacy-by-design is integral to the deployment and governance of LLM-based solutions rather than an afterthought layered atop fragile deployments.

GDPR compliance in the age of large language models: deciphering data flows, rights, and responsibilities

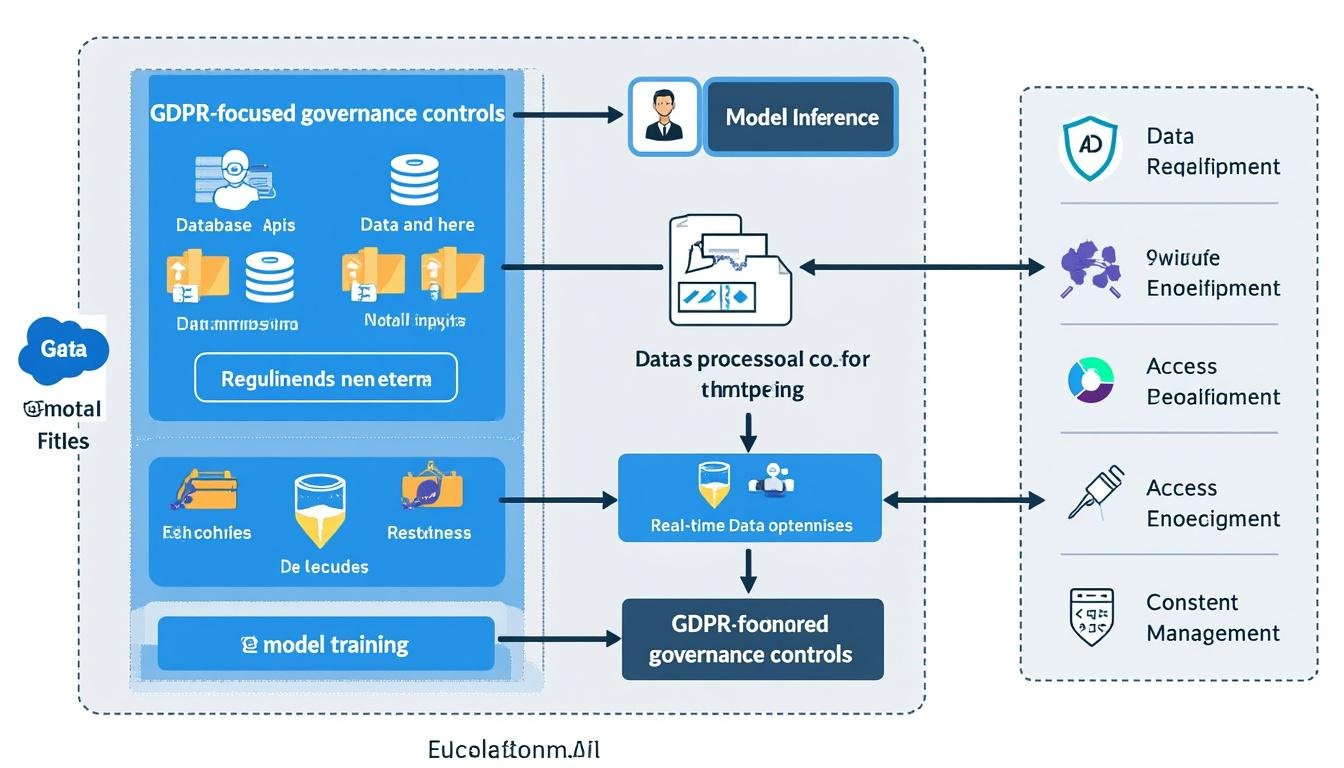

The core difficulty of enforcing GDPR against LLMs lies in the diffuse way large models represent data. Unlike traditional databases, where data is stored in retrievable entries, LLMs encode information in billions of parameters shaped during training on massive, often imperfectly scoped datasets. In practice, a personal detail from a user or a dataset contributor may be distributed across the model with no direct pointer to erase. The right to erasure (the “right to be forgotten”, Article 17) therefore becomes a technically demanding obligation, pushing organizations to rethink how they plan data collection, model training, and post-training updates. In 2025, several governance practices have emerged in response: rigorous vetting of data sources, explicit model-sharing agreements, and privacy-preserving training regimes that aim to limit memorization of sensitive content. This section outlines the dominant data-flow patterns, the GDPR obligations most affected, and the practical steps that enterprises can adopt to reduce risk while maintaining useful model performance.

To build an actionable picture, consider a hypothetical enterprise that leverages an open-ended text generation tool integrated with business workflows. The data lifecycle begins with raw user inputs and corporate documents, some of which may contain personal data. During preprocessing, organizations can implement automated redaction and token-level filtering, aiming to minimize exposure. Yet even with filtration, the model may retain sensitive cues in its internal representations. This reality forces firms to adopt a combination of strategies: (1) restrict data collection to necessity-driven inputs, (2) encapsulate training data provenance with robust metadata, (3) use differential privacy or other privacy-preserving training methods where feasible, and (4) implement post-training auditing to monitor memorization risks. These layered controls align with GDPR’s demands for data minimization, purpose limitation, and accountability.
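The automated redaction step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a production detector: the two regular expressions and the `redact` helper are hypothetical, cover only e-mail addresses and phone-like digit runs, and would need locale-aware, tested detectors in a real pipeline.

```python
import re

# Illustrative patterns only (assumptions); real detectors need broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with typed placeholders and count what was removed."""
    counts: dict[str, int] = {}
    text, counts["email"] = EMAIL_RE.subn("[EMAIL]", text)
    text, counts["phone"] = PHONE_RE.subn("[PHONE]", text)
    return text, counts


sample = "Contact jane.doe@example.com or +44 20 7946 0958 about the invoice."
clean, stats = redact(sample)
print(clean)   # Contact [EMAIL] or [PHONE] about the invoice.
print(stats)   # {'email': 1, 'phone': 1}
```

Returning the counts alongside the cleaned text gives the ingestion stage something to log, which supports the accountability records discussed in this section. Pattern-based redaction misses names, addresses, and indirect identifiers, so it is one layer among several rather than a guarantee.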

In practice, transparency requirements present another layer of complexity. GDPR emphasizes the need to explain data processing and decisions that affect individuals. Yet LLMs are often described as “black boxes” because tracing a specific output back to a single input or a precise portion of training data is not straightforward. Industry practices are evolving: organizations are adopting curated, auditable training datasets; they publish privacy notices that explain data usage in accessible terms; and they implement model cards that describe capabilities, limitations, and the safeguards in place. The combination of user-facing explanations and internal governance records contributes to a more comprehensible data-use narrative, even if the underlying model remains technically opaque in certain aspects.

From a regulatory perspective, 2025 has seen regulators emphasize risk management, DPIAs (Data Protection Impact Assessments), and the need for robust data-protection measures during both training and deployment. The role of third-party providers is increasingly scrutinized, with expectations around contractual safeguards, data processing agreements, and evidence of data-subject rights handling. In this context, major technology players like Microsoft, Google, and AWS exhibit evolving frameworks that pair technical controls with governance and auditability. These efforts aim to create a more predictable environment for enterprise users who want to balance the benefits of LLM-enabled automation with GDPR compliance. Organizations should also recognize the value of external guidance: professional communities and industry associations are coalescing around best practices for data governance in AI systems, offering practical roadmaps that complement formal regulations.

Key considerations for practitioners include the following:

- Data provenance matters: Document every data source and its purpose to support accountability and potential risk analyses.

- Controls at training time: Apply data minimization, consent management, and selective data inclusion to reduce exposure.

- Memorization versus generation: Distinguish verbatim reproduction of training data from newly generated text, since the two call for different compliance strategies.

- Transparency built-in: Provide explanations about data handling and model behavior to data subjects where appropriate.

- Auditing readiness: Maintain logs, model-card disclosures, and traceable decision pathways to facilitate audits.

- Data subject rights process: Create procedures for access, correction, and deletion requests that align with model governance.

- Vendor governance: Cover vendor processing in your DPIAs and sign data processing agreements with providers, including clauses for retraining or model updates that affect privacy posture.

- Differential privacy adoption: Explore differential privacy and related techniques to limit memorization without sacrificing utility.
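For the data subject rights process in the list above, a small tracker can make response deadlines visible. The sketch below assumes the general GDPR timeline of one month from receipt, extendable by two further months for complex requests (Article 12(3)); the `RightsRequest` class and its fields are hypothetical, and how a deadline is counted in a given case is a legal question this code does not settle.

```python
import calendar
from dataclasses import dataclass
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of the target month."""
    idx = d.month - 1 + months
    year, month = d.year + idx // 12, idx % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


@dataclass
class RightsRequest:
    request_id: str          # hypothetical internal ticket ID
    kind: str                # e.g. "access", "rectification", "erasure"
    received: date
    extended: bool = False   # set when an extension has been justified and notified

    def due(self) -> date:
        # One month by default; three in total when extended (simplified).
        return add_months(self.received, 3 if self.extended else 1)

    def overdue(self, today: date) -> bool:
        return today > self.due()


req = RightsRequest("dsr-0001", "erasure", date(2025, 1, 31))
print(req.due())                        # 2025-02-28
print(req.overdue(date(2025, 3, 1)))    # True
```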

| Aspect | GDPR Challenge | Practical Mitigation | Real-World Example |
|---|---|---|---|
| Data diffusion in parameters | Personal data embedded in model weights makes erasure difficult. | Use privacy-preserving training; validate memorization risk via probes. | Large language model deployments in enterprise support systems with restricted data scopes. |
| Right to be forgotten | Deleting from the model is practically intractable. | Adopt data minimization, de-identification, and model retraining on updated data only when feasible. | Contractual obligations requiring data removal during model updates by cloud providers. |
| Transparency and explainability | Regulatory expectations for explanations collide with black-box nature. | Model cards, auditing logs, and post-hoc explanations where possible. | Public disclosures from major cloud vendors about privacy safeguards and data handling policies. |
| Data minimization | Training on vast data runs counter to minimization goals. | Structured data governance, synthetic data use, and controlled corpora curation. | Use-case-driven data collection with consented data subsets. |

For a deeper dive into practical governance patterns, see how major cloud providers frame privacy-friendly AI policies and how enterprises map their data flows to regulatory requirements. Community resources such as business-apps guidance can also provide actionable tactics for integrating privacy into daily operations and show how privacy-by-design translates into real-world tooling. In parallel, leading technology players including Microsoft, Google, OpenAI, Amazon Web Services, IBM, and Meta continue to publish frameworks that help organizations implement privacy controls without stifling innovation. These efforts are reinforced by ecosystem participants like Salesforce, SAP, Oracle, and Palantir, who contribute to interoperable standards and practical auditability across business contexts.

Transitioning from theory to practice requires careful sequencing: start with governance design, then adopt privacy-preserving training, and finally implement robust user-rights workflows. In 2025, a growing cadre of organizations is implementing “privacy-by-design” playbooks that map data domains to model use cases, define retention and deletion policies, and establish clear lines of accountability for data protection officers and engineering teams. This approach aligns with GDPR’s core principles while accommodating the unique capabilities and constraints of LLMs. It also helps organizations respond to regulatory inquiries with a structured audit trail that demonstrates responsible AI usage and a commitment to data protection. As the regulatory environment continues to evolve, those who invest early in governance maturity and technical safeguards will be better positioned to scale AI initiatives responsibly and sustainably.

Key takeaways for GDPR-aware LLM deployments

In practice, the successful alignment of GDPR with LLMs rests on three pillars: disciplined data governance, privacy-preserving machine learning techniques, and transparent stakeholder communication. The following operational considerations help organizations move beyond compliance checkboxing toward a resilient privacy posture that adapts to evolving threats and regulatory expectations.

- First, institute a formal DPIA process for any new LLM project, with explicit consideration of purposes, data categories, retention periods, and potential risks to data subjects.

- Second, implement data minimization by default, leveraging synthetic data where possible and restricting real data usage to clearly defined use cases under consent or legitimate interest.

- Third, invest in experimental evaluation of memorization risks, using red-teaming and model-probing techniques to identify and mitigate unintended data retention.

- Fourth, establish observable, interpretable decision traces that enable regulators and stakeholders to understand how outputs are generated.

- Fifth, foster cross-functional collaboration between privacy, security, and product teams to ensure privacy controls are embedded in the product development lifecycle.

These steps, when taken together, create a practical pathway to GDPR-aligned LLM deployment that balances user rights with business value.
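One way to make the memorization evaluation mentioned above concrete is a canary test: plant unique synthetic strings in the training data, then check whether the model completes them. The sketch below is an assumption-laden illustration. `canary_leak_rate` is a hypothetical helper, and `toy_generate` merely stands in for a real model call so the example runs on its own.

```python
from typing import Callable


def canary_leak_rate(generate: Callable[[str], str],
                     canaries: list[tuple[str, str]]) -> float:
    """Fraction of canaries whose secret suffix appears in the completion.

    Each canary is (prompt_prefix, secret_suffix), where the full string was
    deliberately planted in the training data.
    """
    if not canaries:
        return 0.0
    leaked = sum(1 for prefix, secret in canaries if secret in generate(prefix))
    return leaked / len(canaries)


# Stand-in for a real model: this toy "model" has memorized one canary.
def toy_generate(prompt: str) -> str:
    memorized = {"The access code for vault 7 is": " 4821-ALPHA"}
    return memorized.get(prompt, " [no completion]")


canaries = [
    ("The access code for vault 7 is", "4821-ALPHA"),
    ("The access code for vault 9 is", "7730-OMEGA"),
]
print(canary_leak_rate(toy_generate, canaries))  # 0.5
```

Exact substring matching is the simplest possible criterion. A fuller probe would sample several completions per prompt and might also score near-matches, since a model can leak content without reproducing it verbatim.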

Data governance and anonymization in LLM pipelines: turning privacy theory into practice

As LLMs scale, the governance questions intensify: how to govern data input, how to audit model behavior, and how to maintain privacy while unlocking value from language-based automation. This section delves into the architecture of data governance for AI, focusing on data minimization, anonymization, and the lifecycle controls that ensure ongoing privacy protection. It explores how enterprises can architect pipelines that minimize privacy risk at every stage—from data intake to model refinement—without sacrificing functionality. The discussion is grounded in real-world constraints, including data access controls, consent management, and vendor oversight, and is enriched by concrete examples and practical guidelines for implementation.

One central concept is data provenance—a traceable lineage that records the origin, transformation, and usage of every data item used in model training or inference. By maintaining provenance metadata, organizations can demonstrate compliance with purpose limitation and data retention policies, while also enabling targeted data subject rights requests. Equally important is the use of anonymization and pseudonymization techniques aimed at reducing identifiability. However, the anonymization landscape is complex: even carefully sanitized datasets can sometimes reveal sensitive information when combined with auxiliary data. Therefore, robust testing and verification are essential, including re-identification risk assessments and statistical disclosure control methodologies. The goal is not to erase all traces of data, but to reduce the risk of re-identification to an acceptable level while preserving the model’s utility for its intended tasks.
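A provenance ledger of the kind described above can start as a small structured record per data item. The following is a minimal in-memory sketch; the field names, the `ProvenanceLedger` class, and the sample identifiers are all hypothetical, and a real ledger would be persistent, access-controlled, and append-only.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ProvenanceRecord:
    item_id: str                           # hypothetical internal identifier
    source: str                            # where the item came from
    purpose: str                           # declared processing purpose
    legal_basis: str                       # e.g. "consent", "contract"
    collected: date
    subject_ids: frozenset = frozenset()   # pseudonymous IDs of people referenced
    transformations: tuple = ()            # ordered processing steps applied


class ProvenanceLedger:
    """In-memory ledger keyed by item ID (illustration only)."""

    def __init__(self) -> None:
        self._records: dict[str, ProvenanceRecord] = {}

    def add(self, record: ProvenanceRecord) -> None:
        self._records[record.item_id] = record

    def items_for_subject(self, subject_id: str) -> list[str]:
        """Items that a rights request for this subject would need to cover."""
        return sorted(r.item_id for r in self._records.values()
                      if subject_id in r.subject_ids)

    def items_outside_purposes(self, allowed: set[str]) -> list[str]:
        """Items whose declared purpose is not allowed for a given use case."""
        return sorted(r.item_id for r in self._records.values()
                      if r.purpose not in allowed)


ledger = ProvenanceLedger()
ledger.add(ProvenanceRecord("doc-001", "support-tickets", "customer-support",
                            "contract", date(2025, 1, 10),
                            frozenset({"subj-42"}), ("redacted",)))
ledger.add(ProvenanceRecord("doc-002", "newsletter-signups", "marketing",
                            "consent", date(2025, 2, 3),
                            frozenset({"subj-42", "subj-77"})))
print(ledger.items_for_subject("subj-42"))                  # ['doc-001', 'doc-002']
print(ledger.items_outside_purposes({"customer-support"}))  # ['doc-002']
```

The two queries correspond to the two uses named in the text: locating data for a targeted rights request, and checking a training set against purpose limitation before it is used.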

A practical framework for governance combines policy, people, and technology. On the policy side, organizations define explicit privacy-by-design commitments, data handling rules, and accountability structures. On the people side, roles such as Data Protection Officers, Privacy Engineers, and Product Privacy Advocates coordinate across legal and engineering teams. On the technology side, a suite of controls is deployed: access management, secure data storage, tokenization, synthetic data generation, and privacy-preserving training algorithms. These controls are not one-off; they require ongoing management, updating as data sources evolve, as new use cases emerge, and as the threat landscape shifts. The interplay of governance and technology creates an adaptive privacy ecosystem that can scale with business needs and regulatory expectations.
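Among the technology controls listed above, tokenization is easy to illustrate. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library) so that the same identifier always maps to the same token without the raw value being stored. The `pseudonymize` helper, the token format, and the key handling are assumptions for illustration. Output of this kind is pseudonymized rather than anonymized, so it remains personal data under GDPR, and the key has to be stored separately from the tokens.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes, prefix: str = "tok") -> str:
    """Map an identifier to a stable token using a keyed hash.

    The value is normalized (trimmed, lower-cased) so that trivially different
    spellings of the same identifier produce the same token.
    """
    normalized = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:16]}"   # truncated for readability (assumption)


# Placeholder key for the example; a real key would come from a secrets manager.
key = b"example-key-do-not-use"
a = pseudonymize("Jane.Doe@example.com", key)
b = pseudonymize(" jane.doe@example.com ", key)
print(a == b)   # True: same person, same token
```

A keyed hash is used instead of a plain hash because identifiers such as e-mail addresses are guessable; without the key, an attacker could hash candidate values and compare them with the tokens.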

In practice, anonymization is never a silver bullet. Even when data is de-identified, the sheer volume and variety of data used to train LLMs can inadvertently enable re-identification under certain conditions. Therefore, a conservative approach to anonymization, combined with privacy-preserving learning techniques such as differential privacy, can help reduce risks. When combined with strong data access controls and continuous auditing, anonymization becomes part of a broader risk management strategy rather than a standalone solution. Enterprises should also consider the governance implications of data generated by LLMs, including user-generated inputs, model outputs, and any logs that capture interactions. Each data artifact should be categorized, protected, and retained according to policy, with clear rules about who can access it and for what purpose.
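A simple, widely used check for the re-identification risk discussed above is k-anonymity: every combination of quasi-identifier values should be shared by at least k records. The sketch below is a minimal version with hypothetical column names. Which columns count as quasi-identifiers is an assumption the analyst has to make, and passing this check does not by itself establish anonymity.

```python
from collections import Counter


def k_anonymity(rows: list[dict], quasi_identifiers: list[str]) -> int:
    """Smallest group size among rows sharing the same quasi-identifier values."""
    if not rows:
        return 0
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


def risky_groups(rows: list[dict], quasi_identifiers: list[str], k: int) -> list[tuple]:
    """Quasi-identifier combinations shared by fewer than k rows."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return sorted(combo for combo, n in groups.items() if n < k)


rows = [
    {"age_band": "30-39", "postcode": "SW1", "issue": "billing"},
    {"age_band": "30-39", "postcode": "SW1", "issue": "login"},
    {"age_band": "40-49", "postcode": "N7", "issue": "refund"},
]
qi = ["age_band", "postcode"]
print(k_anonymity(rows, qi))        # 1
print(risky_groups(rows, qi, k=2))  # [('40-49', 'N7')]
```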

For readers seeking hands-on guidance, the following steps offer a practical roadmap: (1) map data flows to GDPR obligations using a data-provenance ledger; (2) implement rigorous input filtering and data minimization at the ingestion stage; (3) adopt privacy-preserving training where possible; (4) establish a robust audit trail for model outputs; (5) design user-centric explanation capabilities to satisfy transparency requirements; (6) create a process for handling data subject rights requests; (7) continuously monitor and test the system for memorization and leakage; (8) engage with cloud and software vendors through clear data processing agreements and governance audits. These steps translate privacy theory into actionable, repeatable operations that support compliance while enabling AI-driven innovation.

| Governance Area | Privacy Objective | Practical Controls | Operational Benefit |
|---|---|---|---|
| Data provenance | Traceability of data sources and usage | Metadata tagging, lineage tracking, access logs | Clear audit trails and faster rights management |
| Anonymization/pseudonymization | Reduce identifiability in training data | De-identification, differential privacy techniques | Lower re-identification risk while preserving utility |
| Data minimization | Limit data collection to essential inputs | Consent-based data collection, synthetic data where possible | Smaller privacy surface and easier compliance |
| Transparency | Explainability of data usage and decisions | Model cards, post-hoc explanations, user notices | Regulatory clarity and user trust |

Access to high-quality, privacy-preserving data resources is essential for effective LLM deployment. Enterprises can leverage trusted partners and platforms that emphasize privacy controls, and they should consider how relationships with providers like Microsoft, Google, OpenAI, and Amazon Web Services shape their risk posture. The ecosystem also includes enterprise software players such as IBM, Meta, Salesforce, SAP, Oracle, and Palantir, whose governance frameworks can inform internal practices and help standardize cross-organizational privacy hygiene. Teams seeking practical reading on modern privacy tooling can also consult curated lists of business apps, such as “best business apps for growth”.

Illustrative case study: anonymization challenges in a customer support application

A hypothetical scenario illustrates how anonymization approaches can fail and which mitigations help. Consider a customer support assistant trained on transcripts containing email addresses and order numbers. Even after redaction, patterns in the data might enable re-identification when combined with public data. A practical remedy is to segment training data by sensitivity level, use synthetic datasets for testing, and maintain a separate, private fine-tuning workflow with restricted data access. The scenario underscores the need for end-to-end privacy design, from ingestion to deployment, and reinforces the principle that anonymization is a spectrum rather than a binary state. Stakeholders should also maintain an ongoing risk register that captures evolving threats and the effectiveness of applied controls.
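Segmenting transcripts by sensitivity level, as suggested above, could start with a simple router. The sketch below is illustrative only: the tier names, the `ORD-` order-number format, and the rule that an e-mail address makes a transcript "restricted" are all assumptions, not conventions from any particular system.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
ORDER_RE = re.compile(r"\bORD-\d{6}\b")   # hypothetical order-number format


def sensitivity_tier(transcript: str) -> str:
    """Assign the most restrictive tier that any detected pattern requires."""
    if EMAIL_RE.search(transcript):
        return "restricted"   # direct identifier: private fine-tuning workflow only
    if ORDER_RE.search(transcript):
        return "internal"     # indirect identifier: limited access
    return "general"          # no pattern detected (not proof of safety)


def segment(transcripts: list[str]) -> dict[str, list[str]]:
    """Group transcripts by tier so each tier can follow its own access policy."""
    buckets: dict[str, list[str]] = {"restricted": [], "internal": [], "general": []}
    for text in transcripts:
        buckets[sensitivity_tier(text)].append(text)
    return buckets


batch = [
    "Please reply to sam@example.com about my refund.",
    "Order ORD-204881 arrived damaged.",
    "How do I reset my password?",
]
for tier, items in segment(batch).items():
    print(tier, len(items))
```

The "general" tier only means that no pattern fired, so it still benefits from the re-identification checks and access controls described in this section.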

Regulatory frameworks and the path to practical GDPR-aligned compliance for LLMs

Regulatory frameworks around AI and GDPR continue to evolve, and 2025 has seen sustained momentum toward clarifying responsibilities, auditing standards, and risk management practices for LLMs. This section examines how regulations translate into concrete compliance programs, including the role of Data Protection Authorities (DPAs), the use of DPIAs for high-risk AI deployments, and the alignment with broader international privacy regimes. It discusses how standards such as ISO-based privacy controls and new AI governance guidelines intersect with GDPR, and how organizations can implement a pragmatic, auditable, and repeatable compliance program that scales with model complexity. The discussion also highlights the balance between innovation and protection, noting how major cloud and AI players are shaping industry norms through transparency reports, ethical guidelines, and collaborative research on privacy-preserving technologies.

GDPR alignment requires more than a checkbox exercise; it demands a culture of accountability and robust governance across the model lifecycle. A DPIA helps identify data protection risks early, enabling organizations to design privacy safeguards into system architecture, data handling processes, and user interactions. This approach aligns with GDPR’s emphasis on accountability and the need to demonstrate compliance to regulators and customers alike. In practice, DPIAs should be iterative and revisited whenever data sources change or new use cases emerge. The resulting documents should describe risk assessment methodologies, mitigation strategies, and residual risks, each with a named owner and a remediation timeline. When done well, a DPIA becomes a living artifact: a dynamic map of privacy risk and control efficacy that informs both regulatory reporting and internal decision-making.
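The risk entries a DPIA produces can be kept in a small structured register so that owners and timelines stay visible. The sketch below is one possible shape, under explicit assumptions: the 1 to 4 scales, the likelihood-times-severity score, and the threshold of 9 are illustrative choices, not values prescribed by GDPR or by any regulator.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Risk:
    description: str
    likelihood: int   # assumed scale: 1 (remote) to 4 (likely)
    severity: int     # assumed scale: 1 (minor) to 4 (severe)
    owner: str
    mitigation: str
    due: date

    def __post_init__(self) -> None:
        for name in ("likelihood", "severity"):
            if not 1 <= getattr(self, name) <= 4:
                raise ValueError(f"{name} must be between 1 and 4")

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def high_risks(register: list[Risk], threshold: int = 9) -> list[Risk]:
    """Risks at or above the threshold, highest score first."""
    flagged = [r for r in register if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    Risk("Model reproduces customer e-mail addresses", 3, 4,
         "privacy-engineering", "Redact inputs; run canary probes", date(2025, 9, 30)),
    Risk("Chat logs kept longer than the retention policy", 2, 3,
         "platform-team", "Automate log expiry", date(2025, 10, 15)),
]
for risk in high_risks(register):
    print(risk.score, risk.owner, risk.due.isoformat())
# 12 privacy-engineering 2025-09-30
```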

Regulators have also encouraged industry collaboration to establish common vocabulary and best practices. In 2025, efforts to harmonize privacy expectations across jurisdictions help reduce friction for multinational deployments. For organizations, engaging with DPAs, industry consortia, and independent auditors can yield valuable feedback on policy alignment and technical adequacy. The synergy between policy and technology is critical: regulators need to understand how LLMs operate and the constraints of privacy-preserving methods, while developers need clear guidance on acceptable risk thresholds and auditability requirements. As a result, the path to practical GDPR-aligned compliance is iterative and collaborative, rooted in transparent governance, robust testing, and documented accountability.

From a corporate governance perspective, the accountability chain should extend to board-level oversight for AI privacy risk, with clear escalation paths and decision rights. The roles of Chief Privacy Officer (CPO) and Chief Compliance Officer (CCO) become crucial in ensuring that privacy controls are not merely technical features but integral parts of strategic planning. Enterprises can also adopt cross-functional privacy councils to review model changes, data flows, and external partnerships. When applied consistently, these governance mechanisms create a durable compliance posture that can adapt to new regulatory developments and emerging threat models. Industry actors such as Microsoft, Google, OpenAI, and Amazon Web Services continue to contribute to governance best practices through transparent reporting and collaborative standards, while enterprise technology ecosystems from IBM, Meta, Salesforce, SAP, Oracle, and Palantir expand the pool of governance patterns available to practitioners.

| Regulatory Topic | GDPR Relevance | Compliance Mechanism | Example(s) in 2025 |
|---|---|---|---|
| DPIA for AI systems | Required where processing is likely to result in high risk (Article 35) | Structured DPIA templates, risk scoring, mitigation plans | Industry DPIA playbooks published by cloud vendors and partners |
| Data minimization & purpose limitation | Core GDPR principles (Article 5) | Input filtering, consent frameworks, purpose-based data handling | Use-case-driven data governance policies across platforms |
| Transparency requirements | Information and access duties (Articles 13-15); safeguards for automated decisions (Article 22) | Model cards, user-facing privacy notices, explainability tooling | Public disclosures from major providers on privacy safeguards |
| Cross-border data transfers | Adequacy decisions and transfer mechanisms (Chapter V) | Standard Contractual Clauses, data localization strategies | Multinational deployments with compliant data routing |

For readers seeking practical regulatory insights, one point is crucial: regulatory guidance increasingly favors a risk-based, proactive stance over reactive compliance. This shift encourages organizations to implement privacy-by-design in product development, maintain robust data governance, and keep open channels with regulators. Industry leaders such as Microsoft, Google, OpenAI, IBM, and Palantir contribute to a collaborative privacy ecosystem by sharing frameworks and evaluation methodologies. Organizations should also remain engaged with practitioner-oriented resources and case studies, including accessible guidance on business apps. The end goal is a transparent, auditable, and defensible privacy program that earns user trust and regulatory confidence, even as technologies and regulatory expectations evolve.

Checklist for a GDPR-aligned AI program

Organizations can use a practical checklist to guide their journey toward GDPR-compliant LLM deployment. This list covers governance, data handling, auditing, and stakeholder communication to ensure a holistic approach. Each item connects to day-to-day operations and strategic planning.

- Establish an AI privacy charter with clear roles and responsibilities across privacy, security, and product teams.

- Define purpose-specific data collection and employ consent mechanisms for data usage where required.

- Maintain an auditable data lineage and robust access controls around training data, inputs, and logs.

- Implement privacy-preserving training methods and test memorization risks through red-teaming exercises.

- Publish model cards and user-friendly privacy notices that explain data usage and protections.

- Conduct DPIAs early in project lifecycles and update them as data sources or use cases evolve.

- Engage with data protection authorities and external auditors to validate governance controls and regulatory alignment.

- Choose cloud and software partners with transparent privacy frameworks and enforceable data processing agreements.

As you consider external resources and ecosystem guidance, remember that privacy is as much about culture as it is about technology. The 2025 privacy landscape rewards organizations that embed privacy considerations early, document decisions comprehensively, and maintain a culture of continuous improvement. To that end, practical business-applications guidance and case studies can illuminate how privacy controls translate into everyday operations, as can the broader ecosystem of privacy-driven AI governance among industry leaders.

Technical routes to GDPR-aligned models: privacy-by-design, explainability, and controllable inference

The technical dimension of GDPR compliance for LLMs centers on privacy-preserving training, controllable inference, and enhanced explainability. Tech teams ask: can we build models that respect user privacy by design while still delivering high-quality language understanding and generation? The answer lies in a combination of algorithmic innovation, architectural choices, and robust evaluation protocols. This section surveys the most prominent techniques, their trade-offs, and practical considerations for deployment in 2025. We examine differential privacy, federated learning, data sanitization, synthetic data generation, and interpretability frameworks, highlighting how each method contributes to GDPR goals such as minimization, purpose limitation, and transparency.

Differential privacy offers a principled way to bound the influence of any single data point on a trained model and its outputs. In practice, applying differential privacy in LLM training reduces memorization risk but introduces a privacy-utility trade-off: the noise that protects individuals can also degrade model quality. Organizations often adopt tiered privacy strategies, applying stronger protections for sensitive domains (e.g., health or financial data) while preserving performance for generic language tasks. Federated learning can help by training models locally on user devices or in isolated environments, reducing raw data exposure. However, federated settings introduce challenges in aggregation, drift, and communication overhead. Hybrid approaches mix centralized training with privacy controls, ensuring that sensitive data usage remains constrained and auditable.
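The mechanism behind differentially private training can be sketched in a few lines: clip each example's gradient to a maximum L2 norm, sum the clipped gradients, add Gaussian noise scaled to that norm, and average. The pure-Python sketch below shows only this aggregation step, in the style of DP-SGD. The function names and parameter values are illustrative assumptions, and it does not compute the resulting privacy budget, which requires a separate privacy accountant.

```python
import math
import random


def clip(grad: list[float], max_norm: float) -> list[float]:
    """Scale a gradient down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads: list[list[float]],
                          max_norm: float,
                          noise_multiplier: float,
                          rng: random.Random) -> list[float]:
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm   # noise scale tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]


rng = random.Random(0)   # fixed seed so the demo is repeatable
grads = [[3.0, 4.0], [0.0, 0.5], [-1.0, 0.2]]
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping is what bounds any single example's influence, and the noise then masks what remains. A larger `noise_multiplier` strengthens the privacy guarantee and weakens the gradient signal, which is the trade-off the paragraph above describes.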

Explainability and interpretability are not merely academic concerns; they are actionable requirements for regulatory scrutiny. Techniques like feature attribution, counterfactual explanations, and model-agnostic explanations can help stakeholders understand why outputs are produced in particular ways. While perfect transparency remains elusive for many LLMs, progress in interpretability can build trust and satisfy accountability requirements for sensitive applications. Additionally, post-hoc auditing tools allow organizations to inspect model behavior and identify patterns that may indicate bias, leakage, or privacy risk. The practical upshot is that a privacy-friendly AI stack blends the right mix of privacy-preserving learning, explainability, and governance tooling to deliver regulated yet useful systems.

From a governance perspective, engineering teams should partner with privacy and legal professionals to ensure that privacy controls are present from design to deployment. Model cards should be updated to reflect new capabilities and privacy safeguards; usage policies should guide data handling; and data processing agreements with vendors should spell out data-privacy guarantees and incident response expectations. As with the other sections, a strong emphasis on documentation and auditability is essential. The goal is not merely to “pass” a GDPR check but to create a living privacy infrastructure that adapts to evolving data landscapes, regulatory expectations, and emerging threats.

| Technical Route | GDPR Alignment | Pros | Cons/Trade-offs |
|---|---|---|---|
| Differential privacy | Minimizes memorization risk | Strong theoretical privacy guarantees; less data leakage | Potential utility loss; tuning required for high-quality results |
| Federated learning | Reduces raw data exposure | Improved data locality; reduces central data collection | Communication overhead; challenges in aggregation and drift control |
| Model explainability | Supports transparency and accountability | Actionable insights for regulators and users | Explanations may be approximations; complexity persists for deep models |
| Data sanitization and synthetic data | Supports minimization and privacy | Improved privacy; safer test environments | Quality and representativeness of synthetic data can vary |

In practice, 2025 dashboards and governance tools increasingly integrate privacy metrics into CI/CD pipelines, enabling continual validation of GDPR-related safeguards. Enterprises should pursue a pragmatic balance: apply strong privacy controls where the risk is high, while preserving model performance for broad use cases. Collaboration with ecosystem vendors—such as Microsoft, Google, IBM, and Palantir—helps organizations access up-to-date privacy-preserving technologies and validated audit methodologies. The relationship between technical controls and regulatory expectations is not static; it evolves with new attack surfaces, data sources, and enforcement practices. A robust privacy program recognizes this dynamic, implementing repeatable processes, continuous improvement loops, and transparent reporting to stakeholders.
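Integrating privacy metrics into a CI/CD pipeline can be as simple as a gate that compares measured values with agreed limits and fails the build on any violation. The sketch below is hypothetical throughout: the metric names, the `PrivacyLimits` defaults, and the `gate` function are placeholders that a team would replace with values agreed between its privacy and engineering leads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacyMetrics:
    canary_leak_rate: float     # fraction of planted canaries reproduced
    epsilon_spent: float        # differential-privacy budget consumed, if tracked
    unredacted_pii_hits: int    # detector hits in sampled model outputs


@dataclass(frozen=True)
class PrivacyLimits:
    max_canary_leak_rate: float = 0.0   # placeholder limits, not recommendations
    max_epsilon: float = 8.0
    max_pii_hits: int = 0


def gate(metrics: PrivacyMetrics, limits: PrivacyLimits) -> list[str]:
    """Return a list of violations; an empty list means the gate passes."""
    failures = []
    if metrics.canary_leak_rate > limits.max_canary_leak_rate:
        failures.append(f"canary leak rate {metrics.canary_leak_rate:.2f} exceeds limit")
    if metrics.epsilon_spent > limits.max_epsilon:
        failures.append(f"epsilon {metrics.epsilon_spent:.1f} exceeds budget")
    if metrics.unredacted_pii_hits > limits.max_pii_hits:
        failures.append(f"{metrics.unredacted_pii_hits} unredacted PII hits in outputs")
    return failures


violations = gate(PrivacyMetrics(0.02, 6.5, 0), PrivacyLimits())
print(violations)   # ['canary leak rate 0.02 exceeds limit']
```

In a pipeline, a wrapper script would exit with a non-zero status whenever the returned list is non-empty, so that a privacy regression blocks a release in the same way a failing unit test would.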

One practical path is to pilot privacy-focused iterations of LLM deployments in controlled environments, measure performance and privacy impact, and then scale successful configurations. This approach helps organizations learn where privacy protections can be tightened without compromising essential functionality. It also supports regulatory dialogues by providing concrete evidence of how privacy controls are implemented and monitored. As the field advances, the best practice is to treat privacy as a core architectural requirement rather than a post-launch add-on. The goal is to build systems that are both privacy-conscious and performance-driven, enabling organizations to deliver compelling language-based services while respecting user rights and regulatory obligations.

- Integrate privacy-preserving algorithms into the core training and inference pipelines from day one.

- Document data provenance, retention, and access policies with clear ownership and accountability.

- Adopt explainability and auditing capabilities that produce usable insights for regulators and users alike.

- Employ privacy metrics in development reviews and continuous monitoring cycles.

- Engage with the broader AI governance community to stay current on best practices and standards.

For readers seeking concrete guidance on privacy-centric AI development, industry case studies and practitioner resources are a good starting point. The advisory content on business applications mentioned earlier provides practical context for applying these principles to day-to-day operations and product strategies. In parallel, collaborations with cloud providers and AI platforms, such as Amazon Web Services, Microsoft, and Google, offer access to privacy-centric tooling and governance templates that can accelerate compliant deployment while enabling innovation. As the GDPR landscape continues to adapt to AI realities, technical teams that couple strong privacy methods with transparent governance will be well-positioned to deliver responsible, trustworthy AI experiences at scale.

Industry readiness and cross-sector collaboration: GDPR, AI, and the 2025 enterprise playbook

With LLM adoption accelerating across industries, the 2025 enterprise playbook for GDPR-aligned AI emphasizes cross-sector collaboration, risk-aware governance, and practical deployment strategies. The challenges are not only technical; they are organizational, legal, and strategic. Enterprises must align privacy practices with business objectives, ensure that procurement and partner ecosystems reflect privacy commitments, and establish clear lines of responsibility for AI-enabled processes. This section examines how large organizations integrate GDPR considerations into their enterprise architecture, the role of vendors and platform ecosystems, and the cultural shifts required to sustain privacy protections as models evolve and expand into new domains.

In practice, large organizations rely on a mix of internal policies, third-party assurances, and external audits to maintain GDPR compliance in AI deployments. Data protection officers (DPOs) work alongside product teams to map data flows, define retention schedules, and identify high-risk use cases that demand heightened privacy safeguards. For enterprises relying on cloud and AI platforms from leading providers, governance agreements and service-level commitments must explicitly address data handling, model updates, and responsibility for data subject rights. This degree of clarity helps reduce ambiguity and fosters trust among customers and regulators. The dialogue among industry players is increasingly cooperative, with shared best practices and evolving standards aimed at harmonizing privacy expectations across geographies and sectors.

From a practical standpoint, the enterprise playbook includes several recurring themes. First, risk-based prioritization: identify high-risk applications (health, finance, child-focused services) and apply stronger privacy controls there. Second, procurement discipline: require privacy-by-design commitments in vendor contracts, with clear accountability for data handling and incident response. Third, transparency with users: provide clear notices about how data is used, how outputs are generated, and what rights users have. Fourth, ongoing monitoring and testing: implement continuous evaluation of privacy safeguards, including memorization checks and auditability verifications. Fifth, workforce training: equip teams with privacy literacy and incident response capabilities. This multi-pronged approach reflects the reality that GDPR compliance in AI cannot be achieved by a single policy or tool; it requires sustained, coordinated effort across the organization.
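The memorization checks mentioned under ongoing monitoring can be sketched in a few lines. The example below assumes a canary-style test: known marker strings are associated with prompts, and the check reports any marker that the model reproduces verbatim. The `generate` callable stands in for whatever inference interface a team actually uses, and the canary values and stub model are invented for illustration.

```python
from typing import Callable, Iterable, List, Tuple


def memorization_check(
    generate: Callable[[str], str],
    canaries: Iterable[Tuple[str, str]],
) -> List[str]:
    """Return the secrets that the model reproduced verbatim.

    Each canary is a (prompt_prefix, secret) pair. The model is prompted
    with the prefix and its output is searched for the secret.
    """
    leaked = []
    for prefix, secret in canaries:
        output = generate(prefix)
        # Case-insensitive substring match: this catches exact regurgitation
        # only, not paraphrases or partial leaks.
        if secret.lower() in output.lower():
            leaked.append(secret)
    return leaked


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; leaks one canary on purpose."""
    if "account number" in prompt:
        return "The account number is ZX-4417-CANARY."
    return "I cannot help with that."


CANARIES = [
    ("Complete: the account number is", "ZX-4417-CANARY"),
    ("Complete: the patient id is", "PT-9920-CANARY"),
]

print("Leaked canaries:", memorization_check(stub_model, CANARIES))
```

An empty result does not prove the absence of memorization; it only shows that these particular markers were not reproduced for these particular prompts, so such checks work best as one signal among several.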

Major technology and services ecosystems serve as accelerators for these practices. Microsoft, Google, and OpenAI contribute to the development of privacy-preserving tools and governance frameworks; Amazon Web Services provides privacy-aware cloud infrastructure and compliance resources; IBM, Meta, Salesforce, SAP, Oracle, and Palantir expand the range of use cases and integration scenarios that organizations can adopt. In parallel, industry associations and cross-sector coalitions publish guidelines and reference architectures that help standardize privacy expectations and improve interoperability. For practitioners, following these collaborative developments—and adapting them to specific regulatory environments—will be essential to sustained GDPR compliance in AI-enabled enterprises.

- Adopt a cross-functional governance model that includes privacy, security, legal, and product leadership.

- Prioritize high-risk use cases for privacy protection and regulatory alignment.

- Use vendor governance frameworks and data processing agreements (DPAs) to ensure accountability across the stack.

- Embed privacy-by-design into product development cycles and deployment pipelines.

- Engage with industry benchmarks and best-practice materials to stay current with standards and expectations.

Beyond the corporate audience, individuals and civil society play a crucial role in shaping a privacy-centric AI future. Public discourse, consumer advocacy, and transparency initiatives contribute to normative expectations about how AI systems should behave. The evolving GDPR ecosystem thus benefits from a broad community that includes policymakers, researchers, practitioners, and end users. The ongoing collaboration among technology giants, enterprise users, and policymakers fosters a more mature privacy culture and a clearer regulatory roadmap for GDPR-compliant AI. Over the 2025-2026 horizon, this collaborative momentum offers a pragmatic path to harness the benefits of LLMs while protecting individual privacy and fundamental rights. The journey is ongoing, but the direction is increasingly defined by collective responsibility and continuous improvement across the AI lifecycle.

Closing reflections: answering questions about GDPR, LLMs, and the future of privacy

The GDPR-compliance debate in the era of large language models has technical, legal, and organizational dimensions. The technical challenges, such as data diffusion across parameters, the difficulty of honoring the right to erasure, and limited model explainability, pose concrete hurdles, while the regulatory response increasingly favors structured governance, data provenance, and privacy-preserving methodologies. A practical, scalable compliance program will combine policy commitments, auditable processes, and transparent user communication with a robust technical stack that includes differential privacy, synthetic data, and model auditing capabilities. Across the industry, collaboration among technology leaders such as Microsoft, Google, OpenAI, IBM, Meta, Salesforce, SAP, Oracle, and Palantir, as well as cloud platforms like Amazon Web Services, is accelerating the maturation of privacy practices and governance standards. The practical takeaway for organizations is clear: privacy cannot be treated as a compliance checkbox but must be embedded in product design, governance, and culture. The 2025 privacy landscape rewards those who build durable, auditable, and user-centric AI systems that respect rights while enabling responsible innovation.

What is the most significant GDPR-related challenge posed by large language models?

The diffusion of personal data across billions of parameters makes it effectively impossible to erase a specific data point from a trained model, which complicates the right to be forgotten and calls for privacy-preserving training and governance strategies.

How can organizations demonstrate GDPR compliance for LLM deployments?

By combining data provenance, model cards, DPIAs, auditable logs, and transparent user notices, alongside privacy-by-design practices and ongoing testing for memorization risk and explainability.
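One item in the answer above, auditable logs, lends itself to a short sketch. The Python example below shows a hash-chained log in which each entry commits to the previous one, so that later edits to earlier entries become detectable. The event fields and function names are hypothetical, and this is an illustration of the general technique rather than a description of any particular vendor's audit tooling.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, event):
    """Hash the previous entry's hash together with the event payload."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event to the log, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)}
    log.append(entry)
    return entry


def verify_log(log):
    """Return True if every entry still matches its recorded hash chain."""
    prev_hash = GENESIS
    for entry in log:
        expected = _digest(prev_hash, entry["event"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical events from handling a data subject request.
audit_log = []
append_entry(audit_log, {"action": "erasure_request_received", "request_id": "R-001"})
append_entry(audit_log, {"action": "training_data_purged", "request_id": "R-001"})
print("Log intact:", verify_log(audit_log))
```

A chain like this is tamper-evident rather than tamper-proof: someone able to rewrite the entire log could recompute every hash, so in practice the latest hash would also need to be stored somewhere the log's operator cannot alter.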

Which industry players are leading the privacy governance conversation around LLMs?

Major technology companies, including Microsoft, Google, OpenAI, IBM, Meta, Salesforce, SAP, Oracle, and Palantir, together with cloud providers such as Amazon Web Services, are shaping frameworks, tools, and standards for privacy in AI.

What practical steps can an enterprise take to start GDPR-aligned LLM projects?

Begin with a DPIA, implement data minimization, establish data provenance and access controls, pilot privacy-preserving training techniques, publish model cards, and maintain an auditable governance loop with regulators and auditors.