In brief:

– A comprehensive journey through the language of artificial intelligence, from fundamental terms to cutting-edge architectures and deployment practices.

– Clear explanations of ML paradigms, neural models, and major platform ecosystems used in industry in 2025.

– Practical examples, real-world case studies, and a curated glossary of terms tied to OpenAI, DeepMind, IBM Watson, Google AI, Microsoft Azure AI, Amazon Web Services AI, NVIDIA AI, Anthropic, Hugging Face, and DataRobot.

– Actionable insights on how terminology translates into product decisions, governance, and future developments in AI research and enterprise adoption.

– A structured set of resources and reading to deepen understanding with easily accessible links to curated guides and glossaries.

In the rapidly evolving field of artificial intelligence, the language we use to describe models, data, and outcomes shapes how teams design, deploy, and govern AI systems. This guide serves as a practical compass for professionals, developers, managers, and researchers who want to navigate key terms, understand how concepts connect, and keep pace with the latest advancements across leading tech ecosystems such as OpenAI, DeepMind, IBM Watson, Google AI, Microsoft Azure AI, Amazon Web Services AI, NVIDIA AI, Anthropic, Hugging Face, and DataRobot. Each section treats a distinct angle of the AI language, offering in-depth explanations, concrete examples, and structured summaries to reinforce learning and application in 2025.

Foundational Terminology in Understanding the Language of Artificial Intelligence: Core Concepts, Key Terms, and Practical Examples

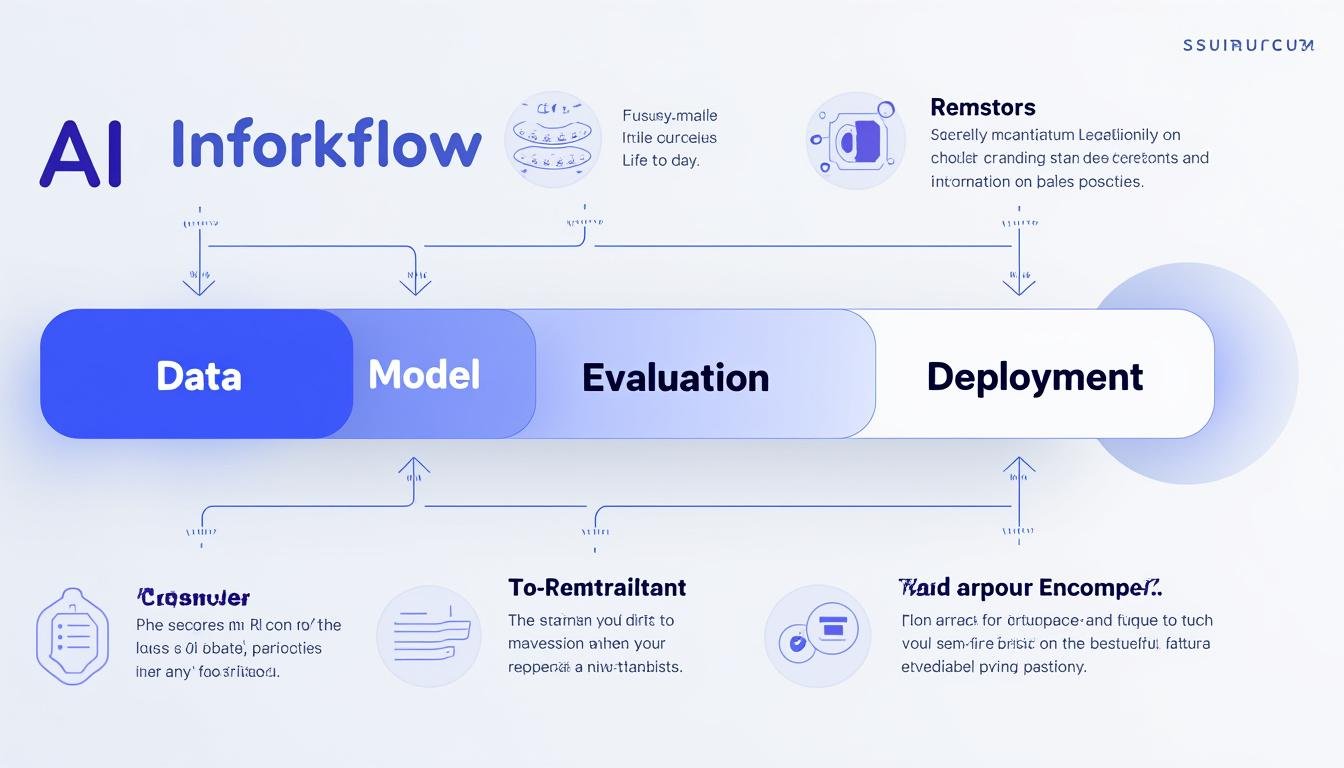

The language of artificial intelligence rests on a set of foundational concepts that consistently appear across research papers, product teams, and vendor documentation. At the core is the idea that data, models, and optimization objectives interact to produce intelligent behavior. Terms such as artificial intelligence, machine learning, and neural networks describe a progression from broad ambitions to concrete computational mechanisms. In practice, teams use these terms to clarify roles, responsibilities, and success criteria when building AI-enabled products, whether they are chatbots powered by OpenAI-style large language models or vision systems deployed on cloud platforms like Google AI or Microsoft Azure AI.

To understand the language, it helps to map each term to its closest real-world analogue and to recognize how models learn from data. A neural network is inspired by the brain’s structure but implemented as mathematical layers of nodes. A typical learning process involves adjusting weights to minimize a loss function, a measure of the discrepancy between model predictions and actual outcomes. When this process is guided by labeled examples, we call it supervised learning; when the system discovers structure in unlabeled data, it enters the realm of unsupervised learning. If the agent improves through interaction with an environment, we speak of reinforcement learning.
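To make "adjusting weights to minimize a loss function" concrete, here is a minimal sketch of supervised learning with gradient descent. It assumes a toy one-feature linear model and mean squared error as the loss; the data, learning rate, and step count are illustrative choices, not values from any particular platform.

```python
# Toy supervised learning: fit y = w * x + b by minimizing mean squared error.
# The dataset and hyperparameters below are illustrative assumptions.

def mse_loss(w, b, xs, ys):
    """Mean squared error between predictions w*x + b and the labels y."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient_step(w, b, xs, ys, learning_rate):
    """One gradient-descent update of the weight and the bias."""
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return w - learning_rate * grad_w, b - learning_rate * grad_b

def train(xs, ys, learning_rate=0.05, steps=2000):
    """Start from zero weights and repeatedly step against the gradient."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        w, b = gradient_step(w, b, xs, ys, learning_rate)
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # labels generated from y = 2x + 1
w, b = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={mse_loss(w, b, xs, ys):.6f}")
```

The labels play the role of the "correct answers" in supervised learning; the loop only ever sees predictions, labels, and the resulting loss.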

For practitioners, the terminology often expands into model families and training paradigms that power modern AI. Transformers have become a dominant architecture for language tasks, enabling large language models (LLMs) such as those from OpenAI and Google AI. Other architectures include GANs (generative adversarial networks) for realistic data synthesis and diffusion models for high-fidelity image generation. A related set of concepts describes data representations and learning signals, such as embeddings (dense vector representations) and loss functions (the objective the model seeks to minimize). Understanding these terms helps practitioners articulate why a model behaves in a certain way and where improvements lie.
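As a rough illustration of how embeddings capture semantic relationships, the sketch below compares vectors with cosine similarity. The three-dimensional vectors are hand-made, hypothetical values chosen for readability; real embeddings are learned by a model and usually have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings: invented for this example, not produced by a model.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

def most_similar(word, table):
    """Return the other entry whose vector is closest in direction to `word`."""
    scored = [
        (cosine_similarity(table[word], vector), other)
        for other, vector in table.items()
        if other != word
    ]
    return max(scored)[1]

print(most_similar("cat", embeddings))
```

Semantic search and recommendation systems typically apply the same idea at scale, ranking items by similarity to a query vector.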

Terminology evolves along with practice. The 2025 AI landscape reflects advancements in reinforcement learning from human feedback (RLHF), efficiency-focused techniques like quantization and pruning, and new directions in variational autoencoders and probabilistic modeling. As you explore the lexicon, you'll notice recurring themes: data quality and governance, evaluation metrics, deployment considerations, and ethical implications. For readers seeking to connect theory to industry practice, it helps to anchor terms to concrete tools and platforms. You'll frequently encounter AI terminology glossaries, key-term explainers, and other curated resources that track term usage across major players like IBM Watson, Hugging Face, and Anthropic.

- Artificial intelligence — the broad capability of machines to perform tasks that would require human intelligence, spanning perception, reasoning, and decision-making.

- Machine learning — a subset of AI focused on learning patterns from data to improve predictions or actions over time.

- Neural network — a computational model composed of interconnected nodes arranged in layers that simulate brain-inspired processing.

- Supervised learning — training with labeled data where the correct answer is provided during learning.

- Unsupervised learning — discovering structure, patterns, or representations from unlabeled data.

- Reinforcement learning — agents learn by trial and error through feedback from interacting with an environment.

- Transformers — a neural architecture that enables effective handling of sequential data, crucial for language tasks.

- Embeddings — numerical representations that capture semantic meaning in dense vector form.

| Term | Definition | Common Context |
|---|---|---|
| Neural network | A network of computational units that transform inputs through learned weights to produce outputs. | Vision, language, signal processing |
| Transformer | An architecture leveraging attention mechanisms to model dependencies across data sequences. | LLMs, translation, summarization |
| Loss function | A mathematical objective the model minimizes during training to improve accuracy or other metrics. | Training optimization |
| Embeddings | Dense vector representations that capture semantic relationships between items or tokens. | Recommendation, clustering, semantic search |
| Supervised learning | Learning from labeled examples to map inputs to outputs. | Image classification, speech recognition |
| Reinforcement learning | Learning by interacting with an environment to maximize cumulative reward. | Robotics, game playing |

Key terms and practical links

To deepen your understanding, consider exploring targeted glossaries and guides from major AI ecosystems. For example, vendors and research labs often publish material bridging theory and practice, including updates on OpenAI developments, Google AI research, and enterprise implementations via IBM Watson or Microsoft Azure AI. These resources help translate the vocabulary into concrete product decisions. Useful reading includes a glossary that tracks term usage in 2025 context and a primer on terminology changes as models grow larger and more capable.

Beyond vendor material, several community-driven references offer structured vocabularies and examples of how terms appear in real projects. The glossary landscape evolves rapidly as more teams adopt standardized language for evaluation, bias mitigation, and governance. For ongoing learning, bookmark a curated glossary and its linked case studies, such as Understanding the Language of AI: Key Terms (Part 2) and Decoding AI: A Comprehensive Guide.

- Explore practical examples of a diffusion model for image synthesis used by creative teams at design studios and tech giants alike.

- Consider how quantization and pruning improve inference speed on edge devices or cloud deployments.

- Review how RLHF shapes the behavior of consumer-facing chatbots and enterprise assistants.

| Term | Definition | Real-World Context |
|---|---|---|
| Diffusion model | A generative model that creates data by gradually refining noise into a desired output. | Image and audio synthesis |
| Quantization | Reducing the numerical precision of model weights to save memory and compute. | Edge deployment, model compression |
| Pruning | Removing less important connections or neurons to shrink model size. | Efficient inference |
| RLHF | Reinforcement learning from human feedback; aligns models with human preferences. | Chatbot safety, user experience |
| Embeddings | Vector representations capturing semantic meaning between items or tokens. | Semantic search, recommendations |
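Quantization and pruning are easier to reason about with numbers in hand. The sketch below assumes one simple variant of each: symmetric linear quantization to 8-bit integers, and magnitude-based pruning of a flat weight list. Production toolkits use more elaborate schemes (per-channel scales, structured sparsity, calibration data), so treat the function names and the weight values as hypothetical.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the stored integers back to approximate float weights."""
    return [q * scale for q in quantized]

def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    num_to_prune = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned

weights = [0.42, -1.27, 0.03, 0.88, -0.05, 0.61]  # made-up example weights

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("quantized:", quantized, "max round-trip error:", max_error)

pruned = prune_by_magnitude(weights, fraction=0.5)
print("pruned:", pruned)
```

The trade-off is visible directly: quantization introduces a small rounding error bounded by half the scale, while pruning discards the smallest weights entirely in exchange for sparsity.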

Further reading and resources

For a structured dive, see curated articles that juxtapose theory with deployment realities across leading platforms. Links to in-depth glossaries and explainers frame the vocabulary against practical constraints, such as latency budgets, compliance requirements, and user-centric design. OpenAI, Google AI, IBM, AWS, and NVIDIA all publish practitioner-focused glossaries and tutorials that help operationalize terminology in product teams. Consider these references as you build a living glossary within your team’s knowledge base.

To keep pace with the broader ecosystem, consult the following resources:

- Understanding the Language of Artificial Intelligence: A Glossary of Key Terms

- A Guide to Understanding the Language of Artificial Intelligence

- Decoding AI: A Comprehensive Guide

Machine Learning Fundamentals: Supervised, Unsupervised, and Reinforcement Learning in Action

The machine learning pyramid organizes how systems learn from data, anchoring vocabulary in practical decision-making. In industry, teams often begin by choosing a learning paradigm that aligns with data availability, business goals, and deployment constraints. Supervised learning requires labeled data, which is often abundant in structured business domains such as finance, healthcare, and customer analytics. In these contexts, teams rely on metrics like accuracy, precision, recall, and AUC-ROC to gauge performance. Conversely, unsupervised learning unlocks insights when labels are scarce or unavailable; clustering customers by behavior, for example, can support segmentation strategies and personalized experiences without explicit targets. Finally, reinforcement learning enables agents to improve through interaction, powering applications such as autonomous robotics, game playing, and complex decision systems in dynamic environments.
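For readers who want to see how accuracy, precision, and recall differ, the following sketch computes them from binary labels and predictions. The label lists are invented for illustration, and AUC-ROC is left out because it requires ranked scores rather than hard predictions.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Illustrative data, e.g. 1 = fraudulent transaction, 0 = legitimate.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example the three numbers diverge, which is the usual situation: a model can catch most positives (recall) while still raising many false alarms (precision).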

In practice, many real-world deployments blend these paradigms. A typical enterprise workflow starts with exploratory data analysis to identify structure in unlabeled data, followed by labeling critical examples to bootstrap supervised training. During product iteration, teams might switch to reinforcement learning or human-in-the-loop methods to fine-tune behavior, converge on desirable outcomes, and address safety concerns. The success of these strategies depends not only on algorithmic choices but also on data quality, feature engineering, model monitoring, and governance frameworks that ensure fairness, accountability, and transparency. For leaders, the key question becomes: how do we balance model capability with reliability, explainability, and ethical considerations?

Consider the following practical breakdown of core learning paradigms and their typical use cases:

- Supervised learning: Image recognition, fraud detection, demand forecasting, and language classification with labeled data.

- Unsupervised learning: Market basket analysis, anomaly detection, population clustering, and representation learning when labels are scarce.

- Reinforcement learning: Robotic control, recommendation optimization through exploration, and game-playing agents that adapt to user interactions.

- Semi-supervised learning: Scenarios with limited labels but abundant unlabeled data, combining both worlds for better generalization.

| Paradigm | Strengths | Limitations | Typical Use Case |
|---|---|---|---|
| Supervised learning | High accuracy with labeled data; predictable behavior. | Requires large labeled datasets; labels may be biased. | Credit scoring, medical imaging, sentiment analysis |
| Unsupervised learning | Discovers hidden structure; great for exploration. | Hard to evaluate; results may be ambiguous. | Customer segmentation, anomaly detection |
| Reinforcement learning | Adaptable to dynamic environments; optimizes long-term goals. | Training can be unstable; safety concerns in real-world deployments. | Robotics, game AI, autonomous systems |
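Customer segmentation without labels can be sketched with k-means clustering. The example below is a deliberately small, one-dimensional version with fixed starting centroids so the result is reproducible; the spend figures are hypothetical, and real segmentation would use many features and a library implementation.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Plain k-means on scalar values, starting from the given centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach every value to its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical monthly spend per customer; no labels are provided.
monthly_spend = [12, 15, 14, 90, 95, 88, 13, 92]
centroids, clusters = kmeans_1d(monthly_spend, centroids=[0.0, 100.0])
print("centroids:", centroids)
print("clusters:", clusters)
```

No one told the algorithm which customers are "low spend" or "high spend"; the two groups emerge from the data, which is also why the table above notes that unsupervised results can be hard to evaluate.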

Industry players embed these paradigms into platforms and services that power modern AI. For instance, NVIDIA AI accelerates training and inference for large-scale models; IBM Watson offers governance-ready ML workflows; Hugging Face provides accessible repositories and evaluation tools to compare models across tasks. As you build an ML strategy, consider how your data strategy, evaluation framework, and deployment environment align with these paradigms. Practical resources, including glossaries and roadmaps, help organizations track changes in 2025 and beyond, from small startups to global enterprises leveraging Google AI and Microsoft Azure AI services for experimentation and production deployment.

- Supervised learning often dominates initial applications in labeled domains such as image classification and natural language processing.

- Unsupervised and semi-supervised approaches are powerful for discovery and scale when labels are expensive or impractical to obtain.

- Reinforcement learning shines in sequential decision tasks where long-term rewards matter, though it demands careful safety and policy considerations.

| Paradigm | Typical Algorithm Types | Industry Examples |
|---|---|---|
| Supervised | Decision trees, logistic regression, SVMs, neural nets | Fraud detection, spam filtering, medical diagnosis |
| Unsupervised | K-means, hierarchical clustering, PCA, autoencoders | Customer segmentation, dimensionality reduction |
| Reinforcement | Q-learning, policy gradient, actor-critic | Robotics, game AI, autonomous systems |
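To illustrate learning "by interacting with an environment," here is a minimal tabular Q-learning sketch. The environment is an invented five-state corridor where the agent earns a reward only on reaching the rightmost state; the hyperparameters and the fixed random seed are assumptions made for reproducibility.

```python
import random

N_STATES = 5       # states 0..4; state 4 is the goal (hypothetical environment)
ACTIONS = (0, 1)   # 0 = move left, 1 = move right

def step(state, action):
    """Deterministic corridor: reward 1.0 only when the goal is reached."""
    if action == 0:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def choose_action(q_row, epsilon, rng):
    """Epsilon-greedy choice with random tie-breaking among the best actions."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])

def train(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _step in range(100):  # cap the episode length
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, action)
            # Q-learning update: move toward reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print("greedy action per non-goal state:", greedy_policy)
```

The agent is never told that "right" is correct. The preference emerges from the reward signal propagating backward through the Q-table, which is the cumulative-reward idea in miniature.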

For deeper exploration into this practical spectrum of learning methods, you can consult a curated set of resources that cover terminology, models, and deployment strategies across prominent AI ecosystems. See references and further reading at these pages: Understanding the Vocabulary of AI and Key Terms Explained.

- Open-source ecosystems like Hugging Face democratize access to state-of-the-art techniques and models.

- Commercial platforms from IBM Watson to Amazon Web Services AI enable scalable training and deployment.

- Reliability and ethical governance should accompany any learning strategy, especially when models interact with users or affect decisions.

Real-world learning pathways in 2025

Organizations often chart pathways that begin with small supervised models and progressively incorporate unsupervised discovery and reinforcement-based optimization. This phased approach mirrors how teams experiment with models hosted on Google AI or Microsoft Azure AI, adjusting data pipelines, evaluation criteria, and governance policies as they scale. For teams pursuing ambitious AI programs, a well-documented glossary becomes a living artifact—one that evolves as new terms enter the discourse and as practices change with regulatory developments and industry standards.

References and related glossaries can be found at a variety of sources, including industry glossaries for terminology and practical case studies. These resources help translate abstract terms into operational decisions, such as how to interpret a model’s bias or how to design patient-friendly explanations for AI-assisted clinical tools. In the spirit of transparency and collaboration, practitioners often contribute to and consult such references to keep pace with the field’s rapid changes.

- OpenAI and Google AI continue to publish accessible introductions to model architectures, training methods, and deployment considerations.

- Anthropic and Hugging Face emphasize safety and open-access model evaluation frameworks for responsible AI.

- DataRobot and IBM Watson illustrate how enterprise-grade ML platforms operationalize these concepts for production workloads.

Key takeaway: The language of AI is a map. By learning the terms, you gain the ability to navigate the landscape of models, data pipelines, and deployment ecosystems with greater clarity and confidence. This improves collaboration, accelerates experimentation, and supports responsible AI practices across all sectors.

Neural Architectures and Transformers: From RNNs to Large Language Models

Neural architectures form the backbone of most contemporary AI systems. While early neural networks relied on relatively shallow structures, today's landscape is dominated by deeper, more sophisticated designs that enable unprecedented capabilities in natural language, vision, and multimodal perception. Among these architectures, transformers stand out for their ability to model long-range dependencies and context while processing sequence positions in parallel. They power many of the most impactful language systems in use today and are central to work across major AI platforms, including Google AI, OpenAI, and Anthropic.

Transformer models leverage attention mechanisms to weigh the relevance of different input tokens, allowing the model to focus on the most informative parts of a sequence. This design enables scalable pretraining on massive corpora followed by fine-tuning for downstream tasks. Alongside transformers, other architectures such as GANs and diffusion models have transformed creativity and content generation. GANs provide a competitive framework for generating realistic data by pitting a generator against a discriminator, while diffusion models create high-quality outputs by gradually refining noise into structured samples. Understanding these architectures helps teams choose the right tool for the job, whether building a multilingual assistant, a medical imaging assistant, or a creative content generator.
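The attention idea described above can be sketched as scaled dot-product attention. This is a simplified, single-head version in plain Python that reuses the token vectors directly as queries, keys, and values; real transformers apply learned projection matrices first and run many heads in parallel on accelerators. The token vectors are made up for the example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every key to this query, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical 2-dimensional token vectors (self-attention, no projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, which is what "weighing the relevance of different input tokens" means in practice.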

As models scale, we also encounter sophisticated training regimes like self-supervised learning, which reduces labeling costs by creating its own supervisory signals from data. In practice, practitioners pair these models with robust evaluation frameworks to assess safety, fairness, and reliability. The AI ecosystem has matured to include robust tooling and libraries from companies like Hugging Face and NVIDIA AI, which provide pretrained weights, model cards, and performance benchmarks to guide adoption. This collaborative approach lowers barriers to experimentation while encouraging responsible deployment across sectors such as healthcare, finance, and education, where quality and ethics bear significant weight.

In 2025, the field sees ongoing refinement in transformer efficiency, multi-task learning, and multilingual capabilities. Tokenization strategies and embedding techniques continue to evolve, enabling models to operate across diverse languages, domains, and modalities with improved reliability. The practice of fine-tuning and instruction tuning helps align models with human expectations, making systems more predictable and useful in real-world contexts. It’s essential to stay attuned to standards and best practices set by leading organizations, including IBM Watson, Google AI, and OpenAI, as well as the broader community through platforms like Hugging Face and DataRobot.

- Transformers enable long-range context handling and scalable language modeling for chatbots, translation, and summarization.

- GANs and diffusion models push the envelope in generative content, from synthetic images to music and beyond.

- Self-supervised learning reduces labeling burdens and expands the reach of AI into domains with limited annotated data.

| Model Type | Core Mechanism | Strengths | Representative Use |
|---|---|---|---|
| Transformers | Attention-based processing of sequences | Handles long-range dependencies; scalable pretraining | Language modeling, translation, code generation |
| GANs | Adversarial training between generator and discriminator | High-quality realistic data synthesis | Image synthesis, video generation |
| Diffusion models | Iterative denoising from noise | Stability and realism in generated content | Image and audio generation |

In practice, practitioners leverage a spectrum of tools and platforms to accelerate experimentation and deployment. NVIDIA AI provides optimized accelerators and libraries that speed up training of large transformers, while Hugging Face offers a rich ecosystem of pretrained models, datasets, and evaluation metrics. Enterprises integrate these technologies with cloud services like Google AI, Microsoft Azure AI, and AWS AI to deliver scalable, production-ready AI solutions. Understanding the architecture landscape helps teams select the right model for the job and contextualize results within business goals and user expectations. For further exploration, consider resources that compare model families and discuss deployment considerations across the major vendors, including Term explanations and model perspectives.

Key takeaway: Transformers have redefined what is possible in language and multimodal AI, while complementary architectures expand the toolkit for generation, synthesis, and robust perception. Leaders should align architectural choices with governance, safety, and user needs to unlock responsible and impactful AI in production environments.

| Architecture | Key Feature | Best Use | Notes |
|---|---|---|---|
| Transformer | Self-attention and parallelism | LLMs, translation, summarization | Core of modern NLP and many multimodal models |
| GAN | Adversarial training | High-fidelity image/video generation | Sample quality can exceed that of earlier generative approaches |
| Diffusion | Iterative denoising | Realistic content generation | Computationally intensive but shows strong realism |

Practical considerations for model selection

When choosing an architecture for a project, teams weigh data availability, compute budgets, latency constraints, and risk tolerance. For language-heavy tasks where speed and interpretability matter, transformer-based models with careful fine-tuning may provide a balanced solution. For creative output or synthetic data needs, diffusion or GAN-based approaches can deliver compelling results, provided safety and ethical guidelines are in place. Across sectors, collaboration between OpenAI, Google AI, and Anthropic—as well as tooling from Hugging Face and DataRobot—helps teams experiment efficiently while maintaining governance and transparency.

- Establish clear evaluation protocols to compare alternative architectures on task-specific metrics.

- Monitor latency, throughput, and energy consumption to ensure scalable deployment.

- Incorporate safety and fairness testing early in the development cycle.
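As a starting point for the latency and throughput monitoring mentioned above, the sketch below times repeated calls to a prediction function and reports median and 95th-percentile latency. The `dummy_model` function is a stand-in invented for this example; in practice you would pass your own model's inference call, and a production setup would rely on dedicated monitoring tooling.

```python
import statistics
import time

def measure_latency(predict, inputs, warmup=3):
    """Time each call to `predict` and summarize latency and throughput."""
    for x in inputs[:warmup]:
        predict(x)  # warm-up calls are not measured
    latencies_ms = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        latencies_ms.append((time.perf_counter() - start) * 1000)
    ordered = sorted(latencies_ms)
    p95_index = min(len(ordered) - 1, int(0.95 * len(ordered)))
    total_seconds = sum(latencies_ms) / 1000
    return {
        "p50_ms": statistics.median(ordered),
        "p95_ms": ordered[p95_index],
        "throughput_per_s": len(inputs) / total_seconds if total_seconds > 0 else float("inf"),
    }

def dummy_model(text):
    """Hypothetical stand-in for a real model's inference call."""
    return sum(ord(c) for c in text) % 2

report = measure_latency(dummy_model, ["example input"] * 200)
print(report)
```

Reporting a tail percentile alongside the median matters because user-facing latency budgets are usually broken by the slowest requests, not the typical one.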

| Decision Factor | Impact | Example |
|---|---|---|
| Data regime | Drives the approach to model selection | Abundant labeled data → supervised or fine-tuned transformer |
| Performance vs. cost | Influences architecture choice and hardware | Edge deployment favors lighter models |
| Safety & ethics | Must shape design, training, and evaluation | RLHF and bias mitigation strategies |

Two essential resources for staying current on terminology and architecture are vendor glossaries and community-driven benchmarks. For a deeper dive into model terminology and architecture comparisons, visit Key Terms (Glossary 2) and AI Terminology Glossary. If you want curated insights on how major platforms approach model deployment, check Deployment and Governance Guides.

AI Deployment, Ecosystems, and Ethical Considerations in 2025

Deploying AI is as much about ecosystems, governance, and user trust as it is about algorithms. In 2025, enterprises leverage cloud-native AI services and hardware accelerators to scale models while maintaining control over costs, latency, and compliance. The major cloud providers—Microsoft Azure AI, Google AI, and Amazon Web Services AI—offer turnkey pipelines for data ingestion, model training, evaluation, and monitoring. They empower teams to deploy models securely, with built-in governance features such as access control, audit trails, and bias detection. Enterprises often blend these with specialized platforms from IBM Watson and NVIDIA AI to optimize both the development and the deployment phases. In addition, startups and enterprises frequently rely on community-driven repositories and marketplaces from Hugging Face to accelerate experimentation and adoption.

Ethical considerations play a central role in deployment. Responsible AI requires ongoing evaluation of model behavior, fairness across user groups, explainability where feasible, and robust safety controls to prevent harmful outcomes. As models become embedded in decision-making processes—ranging from customer support to healthcare—the demand for transparency and accountability grows. Organizations increasingly adopt model cards, data sheets for datasets, and third-party audits to communicate capabilities and limitations to stakeholders. Such practices help build trust with users, regulators, and business partners while supporting safer and more reliable AI systems.
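A model card can start as a small structured record that is checked for completeness before release. The sketch below is one possible shape, assuming a handful of fields (intended use, training data, limitations, metrics); the field names, the `missing_fields` helper, and the example values are all hypothetical rather than a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelCard:
    """Minimal, illustrative model card; field names are assumptions."""
    name: str
    intended_use: str
    training_data: str
    limitations: list = field(default_factory=list)
    metrics: dict = field(default_factory=dict)

    def missing_fields(self):
        """Names of fields that are still empty and need documentation."""
        return [key for key, value in asdict(self).items() if not value]

card = ModelCard(
    name="support-ticket-classifier",
    intended_use="Routing customer support tickets to the right team.",
    training_data="Historical, anonymized support tickets (hypothetical).",
    limitations=["Not evaluated on non-English tickets."],
)

print("still undocumented:", card.missing_fields())
print(json.dumps(asdict(card), indent=2))
```

A check like `missing_fields` could run in a release pipeline so that a model without documented metrics or limitations is flagged before it reaches stakeholders.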

From a platform perspective, the AI landscape in 2025 emphasizes interoperability and standardization. Cross-vendor pipelines, model registries, and common evaluation metrics enable teams to reuse components and compare results across environments. Industry leaders—including OpenAI, Anthropic, IBM Watson, and Google AI—promote shared guidelines and security practices that advance the field while reducing risk. As models become more capable, governance structures—covering data provenance, model bias, and user consent—become a critical part of the deployment plan. Enterprises that implement these practices typically realize better regulatory alignment, higher user acceptance, and stronger competitive positioning.

- Design governance as a core component of the deployment strategy; include bias assessment and explainability where feasible.

- Adopt platform-agnostic tooling for data management, model evaluation, and monitoring to enable portability across clouds.

- Invest in safety reviews, red-teaming, and continuous auditing to protect users and organizations from adverse outcomes.

| Platform/Tool | Strengths | Ideal Use Case | Governance Features |
|---|---|---|---|
| Microsoft Azure AI | Integrated enterprise services, strong governance | Production AI in business apps | Access control, data lineage, monitoring |
| Google AI | Advanced research, scalable infrastructure | ML-powered products and research | Bias checks, safety reviews |
| IBM Watson | Industry-grade compliance, enterprise AI | Healthcare, finance, regulated domains | Privacy, explainability, audits |

The practical path to responsible deployment blends technical rigor with organizational discipline. Companies typically establish a cycle that includes data governance, model evaluation, ongoing monitoring, and incident response planning. The surrounding ecosystem, featuring OpenAI, DeepMind, and major cloud providers, provides tools and frameworks that help teams implement these practices consistently. For deeper pointers on governance, the latest industry guides and glossaries are invaluable, including those referenced in this article and the quick-start guides from major AI vendors.

- OpenAI and Google AI publish safety and alignment frameworks that teams can adapt to their contexts.

- IBM Watson emphasizes governance and regulatory compliance for enterprise AI deployments.

- DeepMind provides research contributions that inform safe and beneficial AI design patterns.

Finally, the materials cited below connect the deployment realities with terminology and practical guidance. For readers who want a broader reading list, consult these resources: Decoding AI: AI Terminology Guide, Glossary of Key AI Terms (Part 2), and AI Terminology: Key Concepts.

Practical deployment insights will continue to evolve as 2025 progresses. The best teams maintain a living glossary, encourage cross-disciplinary collaboration, and continuously adapt governance and safety practices to reflect emerging capabilities and use cases. This approach ensures AI remains a force for positive impact—delivering value while respecting user rights and societal norms.

The Future of AI Language: Glossaries, Standards, and Trends for 2025 and Beyond

The terminology of artificial intelligence is not static; it evolves with scientific breakthroughs, industry needs, and regulatory developments. In the coming years, we can expect glossaries to expand toward greater granularity in areas like agent-based systems, multi-modal learning, and responsible AI. The expansion of ubiquitous AI in everyday products will demand clearer definitions for terms related to user experience, safety, privacy, and fairness. Organizations across sectors will invest in formal standards and shared vocabularies to facilitate collaboration, benchmarking, and compliance. The result will be a more coherent AI language that helps teams communicate complex ideas succinctly, accelerate innovation, and measure impact with clarity.

For practitioners, staying current means following updates from leading research labs and industry consortia. Glossaries will not only define terms but also illustrate their practical implications with examples, case studies, and governance checklists. Expect terms related to explainability, model cards, and dataset risk management to become more prominent in product teams’ day-to-day language. The convergence of research and industry practice around these concepts will shape how AI is built, evaluated, and trusted by users in 2025 and beyond. As tools and platforms from Google AI, Microsoft Azure AI, IBM Watson, and OpenAI mature, the vocabulary will reflect a more mature, responsible, and scalable AI ecosystem.

- Emerging terms will address agent autonomy, explainability, and safety in more explicit, actionable ways.

- Standardized metrics and model cards will become commonplace for transparent reporting of capabilities and limitations.

- Organizations will increasingly adopt multilingual, cross-domain glossaries to support global collaboration and regulatory alignment.

| Emerging Term | Definition | Anticipated Use |
|---|---|---|
| Agent-based AI | Systems that act autonomously to achieve goals within an environment | Automation, interactive assistants, decision support |
| Explainability | Techniques and practices to make AI decisions understandable | Compliance, user trust, debugging |
| Model cards | Documentation summarizing a model's intended use, data, and limitations | Responsible deployment and governance |

As 2025 unfolds, the language of AI will increasingly reflect ethical frameworks, governance mechanisms, and international standards. By embracing a shared vocabulary, teams can accelerate collaboration, reduce risk, and deliver AI that aligns with human values across diverse contexts. The interplay between industry leaders—such as NVIDIA, DeepMind, and Anthropic—and open communities like Hugging Face will continue to shape the lexicon, with new terms becoming commonplace in product lifecycles, research, and policy discussions. For ongoing reading, consult comprehensive glossaries and guides noted in the references, and remember to tether terminology to concrete outcomes that benefit users and society at large.

- Glossaries will increasingly include practical checklists and governance indicators for each term.

- Industry standards will surface related terms in a consistent format across platforms and vendors.

- Cross-language and cross-domain glossaries will support global AI adoption and regulatory compliance.

| Term Area | What to Watch | Impact on Practice |
|---|---|---|
| Explainability | Techniques to interpret model decisions | Improved trust, regulatory compliance |
| Governance | Policies for data, safety, and accountability | Safer, auditable AI systems |
| Multilingual AI | Cross-language capabilities and fairness across languages | Broader accessibility and global impact |

For readers seeking a deeper dive, consult the curated reading list linked throughout this article. The evolving language of AI reflects both technical progress and societal responsibility, and staying engaged with glossary updates helps teams translate advances into responsible, user-centered outcomes. See these resources for further reading and exploration into the terminology that will shape AI practice in 2025 and beyond:

- Glossary: Key AI Terms (Part 2)

- Decoding AI: Understanding the Language of AI

- Glossary of Key AI Terms

With the landscape moving quickly, a disciplined approach to terminology—grounded in real-world use cases—will help teams communicate clearly, design responsibly, and deliver AI that meaningfully benefits users and organizations alike. The vocabulary you adopt today will shape the success of your AI initiatives tomorrow.

FAQ

What makes a term foundational in AI terminology?

Foundational terms describe broad concepts and common mechanisms that recur across models and platforms. They provide the vocabulary to discuss data, learning, model behavior, and deployment with precision.

Which platforms are most referenced for AI terminology in 2025?

Leading platforms include OpenAI, Google AI, IBM Watson, Microsoft Azure AI, Amazon Web Services AI, NVIDIA AI, Anthropic, Hugging Face, and DataRobot. These ecosystems publish glossaries and model cards that help align terminology with practice.

How should organizations use glossaries in practice?

Glossaries should be living documents tied to governance, evaluation, and monitoring. They help align cross-functional teams, support regulatory compliance, and facilitate transparent discussions with stakeholders.

Where can I find additional reading on AI terminology?

Explore resource hubs linked in this article, including glossaries and guides hosted on MyBuziness and related AI terminology resources.