In brief

- This article unpacks how humans talk about machines that think, learn, and communicate, focusing on terminology, models, and real-world use.

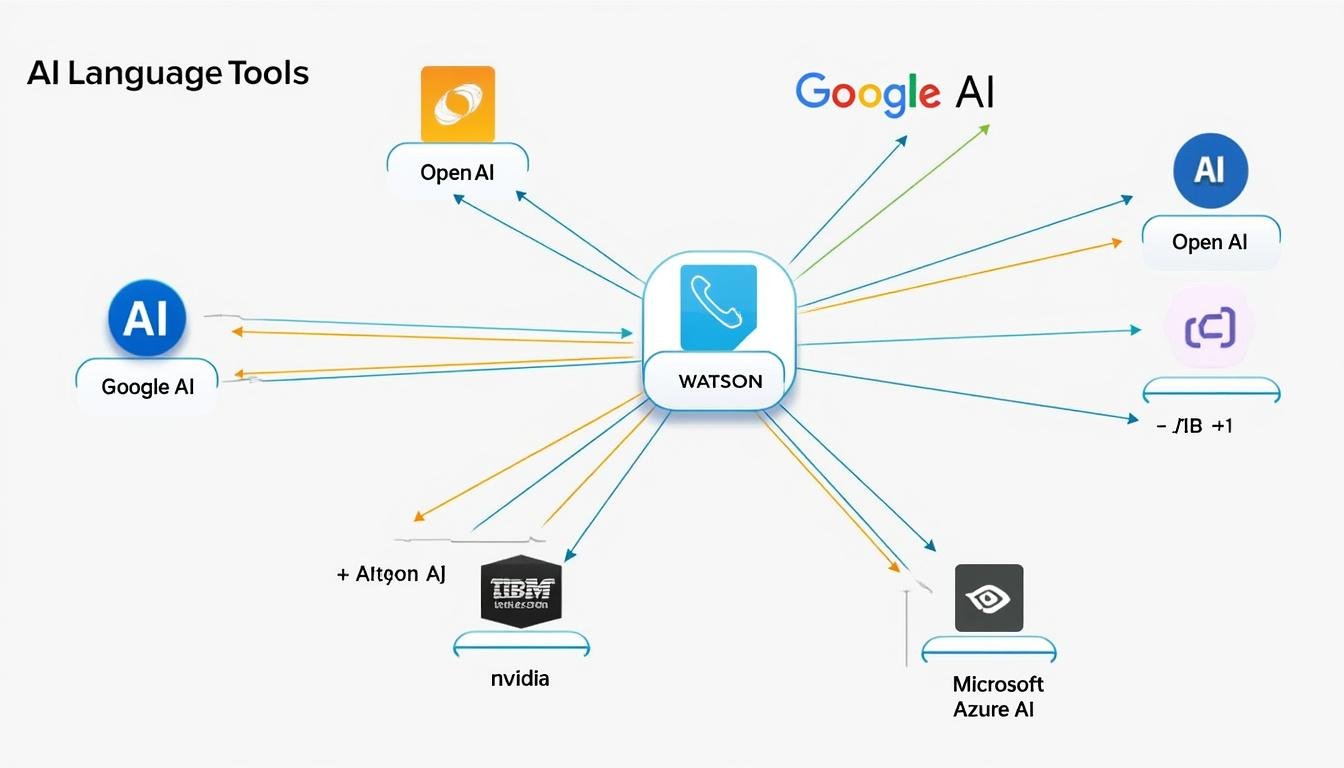

- Key actors and platforms shape the language of AI, including OpenAI, Google AI, IBM Watson, Microsoft Azure AI, DeepMind, Anthropic, Amazon Web Services AI, Cohere, Hugging Face, and NVIDIA AI.

- Understanding the vocabulary helps separate concepts like machine learning, natural language processing, and reinforcement learning from the behavior of deployed systems.

- The article blends theory with practical examples, case studies, and guidance on evaluating AI language capabilities in 2025.

- Readers will find a glossary-inspired structure, embedded media, and curated readings that link to widely cited resources.

Across the last decade, the language of artificial intelligence has shifted from academic jargon to everyday parlance. As AI systems become embedded in business, healthcare, and consumer tech, understanding the vocabulary is not a luxury but a necessity. This piece examines how language is used to describe capabilities, limits, and risks, and how practitioners translate abstract ideas into tangible outcomes. You’ll meet notable players and platforms—OpenAI, Google AI, IBM Watson, Microsoft Azure AI, DeepMind, Anthropic, Amazon Web Services AI, Cohere, Hugging Face, and NVIDIA AI—and explore how their terminology shapes product design, policy, and public understanding. The piece draws on glossary-style explanations, practical examples from real deployments, and a curated reading list that includes accessible introductions and deeper dives into terminology. By the end, readers will have a framework for navigating AI language without losing sight of ethical and societal considerations.

AI Language Foundations in Practice: Core Concepts for Understanding the Language of Artificial Intelligence

Artificial intelligence communicates through language, and the way we discuss that language reveals the underlying mechanisms and limitations of the technology. In this section we establish the core concepts that beginners and seasoned practitioners use to describe how machines interpret, generate, and reason with text and speech. A practical grasp of these ideas helps audiences discern what a system can do, what it cannot, and how it might fail in real-world environments.

We begin with the two broad families of learning that power most AI language systems: supervised learning and unsupervised learning. In supervised learning, models are trained on labeled data, meaning humans provide tags and corrections that guide the model toward desired outputs. This is the backbone of many classification and regression tasks, such as sentiment analysis, topic categorization, and predicting user intent. By contrast, unsupervised learning uses data without explicit labels, encouraging the model to discover structure, clusters, or representations that reflect latent patterns in language itself. This category is central to foundational methods like clustering and representation learning, and it underpins many modern language models that learn from vast text corpora without human-provided labels, typically by predicting missing or upcoming tokens (an approach often called self-supervised learning).

Among the most transformative developments in AI language are transformers, a neural architecture that enables models to capture long-range dependencies and contextual relationships within text. The transformer’s attention mechanism allows models to weigh different parts of a sentence or paragraph against one another, producing richer representations than earlier sequential models. This is crucial for tasks such as machine translation, summarization, and coherent long-form generation. When discussing transformers, terms like encoder and decoder frequently appear; in practice, many systems use encoder-decoder configurations or rely on decoder-only architectures that generate fluent text from learned representations.

In addition to these learning paradigms, another important category is reinforcement learning, particularly when language models are fine-tuned through feedback loops that reward helpful, truthful, or safe outputs. Reinforcement learning from human feedback (RLHF) has become a staple in aligning behavior with human preferences, though it raises questions about bias, value specification, and reproducibility.

Finally, the field relies on specific evaluation metrics (perplexity, BLEU, ROUGE, and more advanced human-in-the-loop assessments) to determine how well a model understands and generates language in diverse contexts. The interplay of these concepts (the data, the learning signal, and the evaluation framework) defines how we describe and measure AI’s linguistic capabilities. OpenAI and other major players contribute distinct research philosophies and product design choices, shaping a shared vocabulary that scales across industries and languages.
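
To make the attention mechanism described above more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The token vectors are made-up numbers, and the function names (`softmax`, `attention`) are illustrative choices rather than any library's API; production models use learned projections, multiple heads, and optimized tensor libraries.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy 2-dimensional token vectors (assumed values, for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how strongly one token attends to the others. In this toy input the first token gives more weight to itself and to the third token, which overlap with it, than to the second token, which does not.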

To make these ideas tangible, consider a practical example from a customer-support scenario. A company might deploy an LLM (large language model) to triage tickets, draft responses, and propose actions to human agents. The model’s performance depends on its training data, its ability to generalize to unseen queries, and the alignment of its behavior with safety and policy constraints. In operations, you will see explicit references to NLP pipelines, including preprocessing steps (tokenization, normalization), model inference (generate or predict steps), and post-processing (deterministic formatting, fact-checking). The vocabulary grows more nuanced when considering specialized domains (law, medicine, finance), where terminology may entail restricted vocabularies, regulatory considerations, and domain-specific jargon. As you explore the language of AI, keep in mind the practical reality: real-world systems blend multiple learning paradigms, curated safety procedures, and continuous feedback loops to maintain usefulness while mitigating risk. Anchoring your understanding in the combined concepts of ML, NLP, and communication theory will help you interpret reports, demonstrations, and policy statements with greater clarity. The following table summarizes essential terms and their core meaning in this context.

| Term | Definition |
|---|---|
| Machine Learning | Algorithms that learn from data to improve task performance without being explicitly programmed for every scenario. |
| Natural Language Processing | Techniques for teaching machines to understand, interpret, and generate human language. |
| Transformer | A neural architecture enabling efficient modeling of long-range language dependencies through attention mechanisms. |
| LLM | Large language model; a model trained on vast text data capable of generating coherent, context-aware text. |
| RLHF | Reinforcement learning from human feedback; a method to align model outputs with human preferences. |
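
The three pipeline stages mentioned in the customer-support example (preprocessing, inference, post-processing) can be sketched as follows. This is a toy illustration under stated assumptions: `infer` is a hypothetical keyword-based stand-in for a real model call, and the queue names and draft texts are invented for the example.

```python
import re
import unicodedata

def preprocess(text):
    """Normalize and tokenize a raw ticket."""
    normalized = unicodedata.normalize("NFKC", text).lower().strip()
    return re.findall(r"[a-z0-9']+", normalized)

def infer(tokens):
    """Hypothetical stand-in for model inference: keyword-based routing."""
    vocabulary = set(tokens)
    if {"refund", "charge", "invoice"} & vocabulary:
        return {"queue": "billing", "draft": "we are reviewing the charge on your account"}
    if {"password", "login"} & vocabulary:
        return {"queue": "account", "draft": "you can reset your password from the sign-in page"}
    return {"queue": "general", "draft": "an agent will follow up shortly"}

def postprocess(result):
    """Deterministic formatting, plus a flag so a human agent reviews the draft."""
    draft = result["draft"]
    formatted = draft[0].upper() + draft[1:] + "."
    return {"queue": result["queue"], "draft": formatted, "needs_human_review": True}

def triage(ticket):
    """Run the three stages in order."""
    return postprocess(infer(preprocess(ticket)))

result = triage("  I was charged twice, please REFUND me!  ")
```

Keeping the stages as separate functions mirrors how teams usually talk about them: each stage can be tested, logged, and replaced independently, which is where much of the operational vocabulary comes from.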

Among the major players, OpenAI, Google AI, and IBM Watson shape how organizations describe and deploy language technology. Industry ecosystems influence terminology: developers talk about transformer-based models, user-facing interfaces, and deployment platforms like Microsoft Azure AI and AWS AI. The vocabulary is not merely technical; it signals responsibility, governance, and scalability concerns that cross borders and sectors. For readers seeking a broader glossary, several curated resources provide structured explanations of terms and relationships across the field. See the recommended readings section for a curated set of references, including works exploring reinforcement learning and variational autoencoders, which help illuminate the boundaries between statistical modeling and emergent language behavior.

- Decoding AI: Understanding the Language of AI

- Understanding the Vocabulary of AI

- A Guide to Understanding the Language of AI

Key considerations for practitioners

For practitioners, terminology is a tool for governance and decision making. Clear vocabulary supports ethical deployment, responsible data use, and transparent communication with stakeholders. Consider a scenario where a company deploys a language model to draft customer responses. Understanding training-data bias, the risk of hallucination, and the need for auditability is essential to maintaining trust and compliance. The literature suggests a pragmatic approach: begin with an explicit scope, implement safety guardrails, and iterate with human oversight. As you build expertise, you’ll notice how language evolves alongside technology; new terms reflect breakthroughs in alignment, control, and multimodal capabilities. The field is moving toward more expressive and controllable systems, where language not only describes capability but also encodes policy, safety, and ethical constraints. This shift makes continual learning about terminology indispensable for teams working at the intersection of technology, business strategy, and societal impact, and it implies a growing need for cross-disciplinary literacy among engineers, product managers, and policy experts alike.

Useful links and additional resources

- Glossary of Key AI Terms (Part 2)

- Understanding the Language of Artificial Intelligence

- Decoding AI: Terminology Guide

Terminology in Depth: Mapping the Language of Artificial Intelligence to Real Systems

The second section delves deeper into the vocabulary that practitioners use to describe algorithms, data, and outcomes in real systems. A prominent theme is the distinction between statistical approaches and symbolic reasoning, a debate that is especially visible in discussions about LLMs versus traditional rule-based systems.

You will frequently encounter terms such as tokenization, embedding, and representation learning, all of which refer to how a model interprets and organizes information for subsequent processing. In practice, tokenization is the pre-processing step that converts raw text into discrete units the model can handle; different tokenization schemes influence efficiency, coverage, and the potential for ambiguity in language interpretation. Embeddings, meanwhile, are dense vector representations that encode semantic and syntactic information, enabling models to capture relationships between words, phrases, and concepts across large vocabularies. A practical takeaway is that the performance of AI language systems hinges on the quality of these representations, which in turn depend on data selection, preprocessing design, and training objectives.

The evolution of terminology also mirrors shifts in how teams approach alignment and safety, two areas that have risen to prominence as models become more capable and widespread. Terms like prompt engineering, fine-tuning, and policy controls describe the human-AI interaction patterns that determine how language generation is directed in practice. In the industry, platforms such as Microsoft Azure AI and Amazon Web Services AI offer turnkey pipelines that abstract many terminology decisions, yet the underlying concepts continue to matter for governance and accountability.

Reading widely, from academic papers to vendor documentation, helps professionals translate jargon into concrete requirements, such as data provenance, model cards, and risk assessments. This cross-pollination of ideas is essential as AI language models become embedded in critical functions like medical triage, legal research, or financial forecasting, where precise language matters for trust and compliance.

| Term | Definition |
|---|---|
| Tokenization | The process of breaking text into smaller units that a model can manipulate, such as words or subword units. |
| Embeddings | Numerical representations that encode semantic relationships between tokens in a high-dimensional space. |
| Prompt Engineering | Designing inputs to elicit desired model behavior and outputs. |
| Fine-Tuning | Adjusting a pre-trained model on a targeted dataset to improve performance for specific tasks. |
| Alignment | Techniques and processes to ensure model outputs reflect human intentions and safety constraints. |
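
As a rough illustration of the first two terms in the table, the sketch below tokenizes a string and compares embeddings with cosine similarity. It rests on simplifying assumptions: tokenization is plain whitespace splitting (real systems typically use subword schemes), and the three-dimensional vectors in `EMBEDDINGS` are invented numbers, not learned values.

```python
import math

def tokenize(text):
    """Whitespace tokenization; a simplification of real subword tokenizers."""
    return text.lower().split()

# Made-up 3-dimensional vectors, for illustration only.
EMBEDDINGS = {
    "doctor": [0.9, 0.1, 0.3],
    "nurse": [0.8, 0.2, 0.4],
    "invoice": [0.1, 0.9, 0.2],
}

def embed(tokens):
    """Look up each known token; unknown tokens are skipped."""
    return [EMBEDDINGS[t] for t in tokens if t in EMBEDDINGS]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

doctor, nurse, invoice = embed(tokenize("Doctor nurse invoice"))
close = cosine_similarity(doctor, nurse)    # related concepts
far = cosine_similarity(doctor, invoice)    # unrelated concepts
```

With these toy vectors, `close` comes out larger than `far`, which is the property the definition describes: tokens with related meanings sit nearer to each other in the vector space.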

In the broader ecosystem, the language used by AI vendors conveys commitments to interoperability and cloud-native deployment. Consider how Google AI, IBM Watson, and other players describe their tooling—often emphasizing APIs, pipelines, and model cards that document training data, capabilities, and limitations. This transparency supports risk assessment and enables researchers to compare approaches across teams. For readers seeking a deeper dive into terminology, the following readings provide structured explanations and case studies that anchor theory to practice. The linked resources span introductory glossaries and advanced analyses, offering a spectrum of perspectives on how language shapes AI deployment in 2025 and beyond.

Biased Language, Real Impacts: Cognition, Context, and Reality in AI Language

Language is a bridge between computation and human reality, yet bridges can be imperfect. The debate around “understanding” in AI—whether large pretrained language models truly comprehend language and the world they describe—frames a central tension in the field. Some argue that statistical pattern recognition can simulate understanding to a high degree of usefulness, while others warn that current architectures lack genuine common sense, grounding, and moral judgment. The practical implication is that we should be precise about what models can and cannot claim to know. To operationalize this nuance, many teams distinguish between surface fluency and grounded reasoning, recognizing that fluent text generation does not automatically imply factual correctness or real-time awareness. Case studies from healthcare chat assistants and financial advisory tools illustrate both the promise and the risk of deploying language systems in high-stakes contexts. When a model’s responses are used to guide critical decisions, organizations must implement layered verification, external data checks, and human oversight mechanisms. Philosophically, the tension between simulation of understanding and genuine cognition invites ongoing exploration of how AI systems model knowledge, representation, and inference. In contemporary practice, this translates into robust evaluation frameworks, including human-in-the-loop testing, scenario-based assessments, and post-deployment monitoring to detect drift, bias, or unsafe outputs. The combination of technical rigor and ethical reflection defines a mature approach to language understanding in AI.

From a product perspective, understanding the language of AI requires bridging theory and business needs. Consider how DeepMind and Anthropic frame safety and alignment as core design principles, influencing how APIs are structured and how risk is communicated to end users. The ecosystem also emphasizes explainability and transparency, with guidance on how to present model capabilities to non-technical stakeholders. As businesses scale, the vocabulary expands to cover governance artifacts such as risk dashboards, usage policies, and ethics reviews. Readers should keep a vigilant eye on emerging standards and regulatory debates that may affect terminology, labeling practices, and disclosure requirements. The following table offers a structured look at debates, benefits, and caveats surrounding language understanding in AI, helping readers compare perspectives across sectors and platforms.

| Aspect | Considerations |
|---|---|
| Understanding | Does the model truly comprehend the meaning of language or mirror statistical correlations? |
| Hallucination | Instances where the model outputs plausible but false information; mitigation requires checks and confirmations. |
| Alignment | Ensuring outputs reflect human values, safety policies, and domain-specific constraints. |
| Explainability | Ability to justify why a model produced a given answer, aiding trust and accountability. |
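
One way to picture the layered verification and human oversight discussed in this section is a small gate that checks a model's claims against a trusted source before anything reaches the user. The sketch below is an assumption-laden toy: `TRUSTED_FACTS`, the claim format, and the status labels are all hypothetical, and a real deployment would query a vetted data source and a proper review workflow.

```python
# Hypothetical trusted reference data; a real system would query a vetted source.
TRUSTED_FACTS = {"refund_window_days": 30}

def verify(claims):
    """Compare each model claim with the trusted source and label the outcome."""
    report = {}
    for key, value in claims.items():
        if key not in TRUSTED_FACTS:
            report[key] = "unverifiable"
        elif TRUSTED_FACTS[key] == value:
            report[key] = "confirmed"
        else:
            report[key] = "contradicted"
    return report

def route(claims):
    """Release only fully confirmed answers; everything else goes to a person."""
    report = verify(claims)
    if report and all(status == "confirmed" for status in report.values()):
        return "release", report
    return "human_review", report

# A plausible-sounding but wrong claim, standing in for a hallucination.
decision, report = route({"refund_window_days": 60})
```

The design choice worth noting is the default: anything that cannot be confirmed is escalated rather than released, so fluent but unverified text never bypasses the human check.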

In this section, the practical implication is clear: organizations should couple technical sophistication with governance and communication practices. This means not only building robust models but also designing user interfaces, documentation, and policies that convey how the system works, what it can do, and where it may fall short. The AI ecosystem—spanning OpenAI, Google AI, IBM Watson, and beyond—continues to refine how language capabilities are described, tested, and regulated. For readers who want to explore further, the following resources include both introductory glossaries and more advanced discussions of terminology, ethics, and evaluation frameworks. The aim is to provide a coherent map of the terrain where language, cognition, and responsibility intersect.

Applications and Ecosystems: OpenAI, Google AI, NVIDIA, and Industry Tools

The real-world language of AI is inseparable from the ecosystems that build, deploy, and govern these systems. This section places terminology in the context of products, partnerships, and platforms that shape how organizations approach language understanding in practice. The landscape features cloud-scale AI services, developer tooling, and community-driven projects that accelerate experimentation while raising questions about interoperability and governance. Key players—OpenAI, Google AI, IBM Watson, Microsoft Azure AI, DeepMind, Anthropic, Amazon Web Services AI, Cohere, Hugging Face, and NVIDIA AI—offer a spectrum of interfaces, from hosted APIs to open-source libraries. Understanding the terminology used in these contexts helps teams select platforms, design data pipelines, and implement safety controls while balancing performance and cost. A recurring theme is the separation between model-centric language generation and system-level architecture that enables deployment at scale. Concepts like inference, latency, throughput, and scalability describe the engineering realities that accompany the glossaries used by data scientists and product teams. On the policy side, terms such as model cards, data provenance, and usage governance are increasingly standard in vendor documentation and enterprise best practices. The practical implication is that teams must align technical capabilities with business objectives, regulatory requirements, and user expectations. Providers continually evolve their lexicons to reflect new features—multimodal capabilities, safety tooling, and edge deployments—so staying current is itself a core skill for AI professionals.

In corporate contexts, the need to balance speed and reliability fosters a culture of collaboration across vendors, research labs, and product teams. You’ll see narratives that emphasize responsible AI and risk management as competitive differentiators, not mere compliance checklists. To illustrate the breadth of the ecosystem, consider how enterprises combine services from Microsoft Azure AI and AWS AI with Hugging Face models for customization, or how NVIDIA AI accelerates inference on large-scale datasets. Industry practitioners often adopt a modular approach: a core language model handles generation, while domain-specific tools provide fact-checking, retrieval-augmented generation, or content moderation. This modularity supports innovation without sacrificing safety and governance. The following resources provide practical context and concrete examples of how organizations describe and implement AI language capabilities in 2025 and beyond.

- Understanding the Language of AI (Overview)

- A Guide to Understanding AI Language

- Decoding AI: Terminology Guide

- Key AI Terms Explained
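
The modular approach described above, in which a core model handles generation while separate tools supply retrieval, can be sketched with a toy retrieval-augmented prompt builder. Everything here is illustrative: the documents are invented, retrieval is naive word overlap rather than embedding search, and the actual generation step is left out.

```python
# Invented knowledge-base snippets, for illustration only.
DOCUMENTS = [
    "Refunds are processed within five business days.",
    "Passwords can be reset from the account settings page.",
    "Support is available on weekdays.",
]

def words(text):
    """Lowercased word set with basic punctuation removed."""
    return set(text.lower().replace(".", "").replace("?", "").split())

def retrieve(question, documents):
    """Return the document sharing the most words with the question."""
    return max(documents, key=lambda doc: len(words(question) & words(doc)))

def build_prompt(question, documents):
    """Prepend the retrieved passage so a generator can ground its answer."""
    context = retrieve(question, documents)
    return f"Context: {context}\nQuestion: {question}\nAnswer using only the context."

prompt = build_prompt("How long are refunds processed?", DOCUMENTS)
```

The resulting `prompt` would then be passed to whichever language model the team has chosen. Because retrieval sits outside the model, the knowledge base can be updated or audited without retraining.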

Takeaways for practitioners include the importance of documenting expectations, choosing appropriate evaluation metrics, and ensuring that language choices in user interfaces communicate capabilities and limits clearly. Language models can enable faster decision-making and more personalized experiences, but they also require governance constructs—auditable data practices, usage controls, and ongoing safety reviews—to remain trustworthy as deployment scales. The ecosystem’s breadth means teams should cultivate fluency with both the technical vocabulary and the practical implications of each platform’s design decisions. The objective is not simply to deploy the most capable model, but to deploy responsibly, with language that communicates honestly about what the model can and cannot do, and with safeguards that protect users and stakeholders.

Standards, Evaluation, and Responsible Communication in the Language of AI

As AI language systems become integral to governance, customer interactions, and critical decision-making, robust evaluation standards and transparent communication become essential. Evaluation in AI language involves multiple layers: intrinsic metrics that measure linguistic quality, extrinsic metrics that assess task success, and human-centered assessments that judge usefulness, safety, and alignment with user expectations. Intrinsic metrics such as perplexity and BLEU provide a rough sense of language modeling ability, but they fail to capture real-world usefulness or safety. Extrinsic evaluations—like task success rates in customer support, information retrieval accuracy in knowledge bases, or decision-support quality in clinical tools—offer practical insight into how a model performs in context. Human-centered assessments bring in qualitative judgments about fluency, helpfulness, bias, and safety, often via controlled studies or field demonstrations. The convergence of these evaluation modalities helps teams calibrate models for real operations, balancing novelty and reliability. Moreover, responsible communication about AI language hinges on transparency: describing training data characteristics, model limitations, and governance practices so that users can understand and critique the system. This is particularly important for high-stakes domains where incorrect or biased outputs could cause harm. In corporate settings, governance frameworks increasingly demand clear documentation, model cards, and risk disclosures that accompany language tools, reflecting a broader societal push toward accountability in AI. As standards evolve, teams must stay abreast of regulatory developments and industry best practices, learning how to translate complex technical concepts into plain-language explanations that meaningfully inform stakeholders. The objective is to foster trust while continuing to push the boundaries of what AI language can achieve.
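
To ground the distinction between intrinsic and extrinsic metrics, the following sketch computes perplexity from per-token probabilities and a simple task success rate. The probabilities and outcomes are made-up inputs; in practice the former come from a model's predictions on held-out text and the latter from logged task results.

```python
import math

def perplexity(token_probabilities):
    """Exponential of the average negative log-probability per token."""
    count = len(token_probabilities)
    average_negative_log = -sum(math.log(p) for p in token_probabilities) / count
    return math.exp(average_negative_log)

def task_success_rate(outcomes):
    """Fraction of tasks completed successfully (an extrinsic metric)."""
    return sum(outcomes) / len(outcomes)

# Assumed inputs, for illustration only.
uniform = perplexity([0.25, 0.25, 0.25, 0.25])  # model guessing among 4 options
confident = perplexity([0.9, 0.8, 0.95])        # model assigning high probability
rate = task_success_rate([True, True, False, True])
```

A model that assigns probability 0.25 to every token has a perplexity of about 4, as if it were choosing uniformly among four options, while higher assigned probabilities push perplexity toward 1. Neither number says whether users were actually helped, which is why the success rate is tracked separately.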

For ongoing learning and practical application, consult a curated set of resources that cover terminology, pedagogy, and governance strategies. The community continues to grow through open-source collaborations and industry partnerships, with forums, webinars, and tutorials that demystify terms and demonstrate real-world use cases. Readers should be mindful of the difference between capability claims and demonstrated behavior; a language model that performs well on one dataset may require additional safeguards elsewhere. The following readings provide a spectrum of viewpoints—from early glossaries to modern analyses—that help readers build a nuanced, well-structured understanding of AI language in 2025.

To sum up, the language of AI is not static. It evolves with research breakthroughs, policy developments, and the emergence of new platforms. As 2025 progresses, expect further refinements in terms related to multimodal capabilities, retrieval-augmented generation, and advanced safety tooling. Stakeholders—from developers to executives to end users—benefit from a shared vocabulary that is precise, practical, and honest about what AI can achieve today and what remains a frontier for tomorrow. For practitioners, the goal is to translate terminology into trustworthy practice, ensuring that language models are deployed with care, clarity, and accountability.

FAQ

What is the difference between supervised and unsupervised learning in AI language models?

Supervised learning uses labeled data to guide the model toward desired outputs, while unsupervised learning discovers structure from unlabeled data. Both contribute to language models, but they influence how models generalize and what kinds of tasks they excel at.

Why is alignment important in AI language systems?

Alignment ensures outputs reflect human values, safety policies, and domain constraints, reducing risks like bias, unsafe content, or misinformation. It is central to responsible AI deployment.

Where can I find reliable AI terminology resources?

Curated glossaries and guides from established sources, including those linked in this article, provide structured definitions and context for terms like NLP, LLM, and RLHF.

How do platforms influence AI terminology?

Vendor platforms shape vocabulary through APIs, tooling, and governance features. While core concepts remain consistent, product-level terms and UI language can vary by provider.
