In brief

- Neural networks have evolved from simple perceptrons into multi-architecture systems that power today’s AI ecosystems, with hardware, software, and data stacks tightly intertwined.

- Foundational concepts—neurons, activations, backpropagation, and optimization—remain essential, but scaling, reliability, and efficiency define practical success in 2025.

- Leading platforms and players—from NVIDIA GPUs to Google DeepMind, OpenAI, IBM Watson, Microsoft Azure AI, Amazon SageMaker, Meta AI, Cerebras Systems, Hugging Face, and Anthropic—shape how researchers and enterprises deploy, customize, and govern AI systems.

- Ethics, safety, interpretability, and governance are integral to design and deployment, with real-world impact across industries such as healthcare, finance, manufacturing, and creative fields.

- This deep dive highlights architectures, training dynamics, applications, and future challenges, offering a navigable map for researchers, engineers, and decision-makers alike.

The AI landscape in 2025 is defined by a rapid convergence of theory, hardware acceleration, and real-world deployment. Researchers continue to refine the core mechanisms that let models learn from data, while practitioners increasingly focus on building robust, scalable, and ethical AI systems. Companies are investing in interoperable toolchains, open ecosystems, and collaborative frameworks that speed up experimentation without sacrificing safety. Platform ecosystems, ranging from cloud-native services to specialized accelerators, have turned neural networks into a core business capability rather than a research curiosity. As systems grow more capable, they also demand closer attention to data governance, bias mitigation, and human-in-the-loop oversight, so teams that want to stay ahead need to understand both the technical underpinnings and the organizational implications. This article offers a structured examination of neural networks, their architectures, their training dynamics, and the ecosystems that sustain modern AI, anchored by concrete examples, case studies, and actionable guidance. Along the way, it shows how NVIDIA hardware, Google DeepMind breakthroughs, OpenAI innovations, IBM Watson’s enterprise reliability, Microsoft Azure AI, Amazon SageMaker, Meta AI, Cerebras Systems, Hugging Face, and Anthropic intersect in practice, shaping the frontier of AI in 2025 and beyond.

Foundations of Neural Networks in the Modern AI Era: Concepts, Data, and Computation

The bedrock of neural networks rests on a blend of biology-inspired design, mathematical rigor, and engineering pragmatism. At the lowest level, artificial neurons compute a weighted sum of inputs, apply a nonlinear activation, and pass signals forward through layers. As networks deepen, the representation of data becomes increasingly abstract, enabling tasks that once seemed out of reach—image recognition, language understanding, and decision-making under uncertainty. The progression from shallow models to deep architectures has been accelerated by major advances in optimization, data availability, and computing power. A core aspect of this evolution is the training loop: feedforward computation, loss evaluation, and backpropagation that propagates error signals to adjust weights. This process is underpinned by gradient-based methods, yet practical success hinges on robust regularization, careful initialization, and attention to numerical stability. Strong foundations thus blend theory with pragmatic design choices that determine whether a model learns generalizable patterns or merely memorizes training data.
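The training loop described above (forward pass, loss evaluation, backpropagation, weight update) can be sketched for a single sigmoid neuron learning the logical AND function. This is a minimal pure-Python illustration; the function names, learning rate, and epoch count are illustrative assumptions rather than a prescribed recipe.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Forward pass: weighted sum of inputs plus bias, then a sigmoid activation."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_neuron(data, lr=1.0, epochs=2000):
    """Full-batch gradient descent on binary cross-entropy for one neuron."""
    n_features = len(data[0][0])
    m = len(data)
    w, b = [0.0] * n_features, 0.0
    eps = 1e-12  # guards against log(0)
    for _ in range(epochs):
        grad_w, grad_b, loss = [0.0] * n_features, 0.0, 0.0
        for x, y in data:
            p = predict(w, b, x)
            loss += -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
            # Backpropagation: for sigmoid + cross-entropy, dLoss/dz simplifies to p - y.
            dz = p - y
            for i, xi in enumerate(x):
                grad_w[i] += dz * xi
            grad_b += dz
        # Update step: move each parameter against its averaged gradient.
        w = [wi - lr * g / m for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b, loss / m  # loss is from the final epoch's forward pass

AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b, final_loss = train_neuron(AND_DATA)
```

Because AND is linearly separable, a single neuron suffices; a non-separable task such as XOR would need at least one hidden layer.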

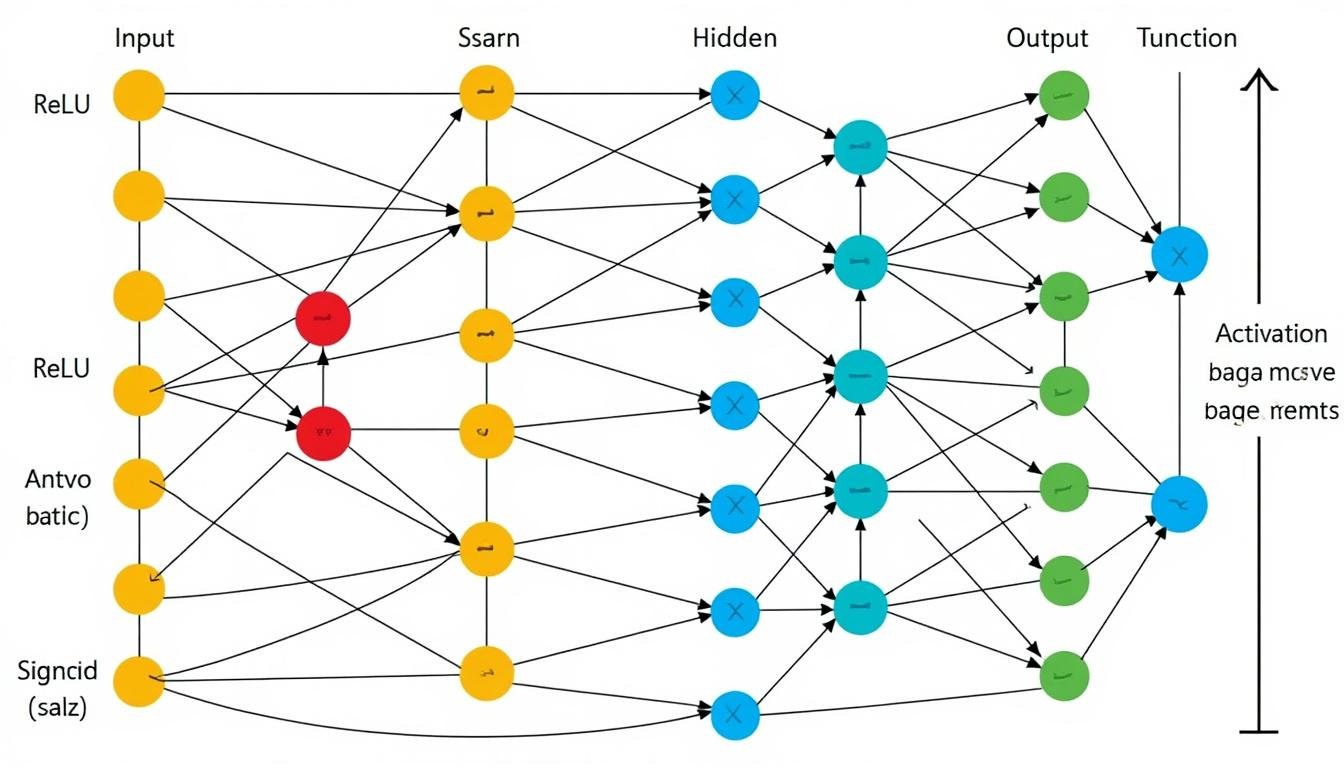

Each component plays a distinct role, and its behavior depends on the data and hardware available. The activation function introduces nonlinearity, enabling a network to approximate complex mappings. Common choices such as ReLU, Leaky ReLU, and smoother variants like GELU balance computational efficiency with learning dynamics. Loss functions encode the objective: cross-entropy for classification, mean squared error for regression, and more specialized terms for structured outputs. Optimizers such as SGD with momentum and Adam, often combined with weight-averaging techniques, guide the search through high-dimensional parameter spaces, while learning rate schedules, warm restarts, and gradient clipping stabilize training. Regularization strategies (dropout, weight decay, data augmentation, and label smoothing) curb overfitting and promote resilience to unseen data. In practice, model development hinges on a sequence of decisions: dataset curation, preprocessing, architecture selection, hyperparameter tuning, and the choice of evaluation metrics. Each choice reverberates through training time, resource consumption, and ultimately performance on real-world tasks.
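To make the optimizer discussion concrete, here is a minimal pure-Python sketch of Adam with its commonly used default hyperparameters, with gradient clipping by global L2 norm applied before each step. The quadratic objective, learning rate, and step count are illustrative assumptions; production code would use a framework's built-in optimizer.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale the whole gradient vector so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm > max_norm:
        return [g * (max_norm / norm) for g in grads]
    return list(grads)

class Adam:
    """Adam: per-parameter step sizes derived from running gradient moments."""

    def __init__(self, n_params, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # running mean of gradients (first moment)
        self.v = [0.0] * n_params  # running mean of squared gradients (second moment)
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction offsets the zero initialization of m and v.
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated

def loss_fn(p):
    """Illustrative ill-conditioned quadratic with its minimum at (3, -1)."""
    x, y = p
    return (x - 3) ** 2 + 10 * (y + 1) ** 2

def grad_fn(p):
    x, y = p
    return [2 * (x - 3), 20 * (y + 1)]

params = [0.0, 0.0]
opt = Adam(n_params=2, lr=0.05)
for _ in range(2000):
    params = opt.step(params, clip_by_global_norm(grad_fn(params), max_norm=5.0))
```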

Core ideas at a glance:

- Neurons and layers create a scalable abstraction for learning representations across tasks.

- Activation functions inject nonlinearity to capture complex patterns.

- Loss landscapes and optimization strategies influence convergence speed and stability.

- Regularization and data quality determine generalization and robustness.

- Evaluation and deployment bridge theory and real-world impact, including safety, fairness, and accountability.

Several industry ecosystems illustrate how this foundational knowledge translates into practice, from design principles informed by research on cognition and creativity to deep learning systems deployed in production. Practitioners also rely on resources that turn theory into workflow decisions, balancing model complexity against latency, memory usage, and cost. The following sections expand on architectures, training, applications, and future directions, anchored by concrete examples from leaders in the space such as NVIDIA, Google DeepMind, OpenAI, IBM Watson, Microsoft Azure AI, Amazon SageMaker, Meta AI, Cerebras Systems, Hugging Face, and Anthropic. These names underscore the fusion of hardware, software, and ethical governance that characterizes modern neural networks.

| Aspect | Impact and Examples |
|---|---|
| Data quality | High-quality, diverse data improves generalization; data governance reduces bias and privacy risks. |
| Optimization | Effective optimizers and schedules accelerate convergence and stabilize training dynamics. |
| Regularization | Techniques mitigate overfitting and improve robustness to distribution shifts. |
| Evaluation | Real-world metrics and human-in-the-loop feedback ensure alignment with user needs. |
| Hardware | Specialized accelerators from NVIDIA and collaborators enable scalable training and inference. |

Core Components and Learning Dynamics

Understanding the flow from input to prediction requires unpacking several layers of abstraction. In a typical feedforward network, information traverses a stack of layers, each applying a transformation that refines the representation. The depth of this stack correlates with the model’s capacity to capture hierarchical patterns, yet it also raises challenges such as vanishing gradients and computational demands. Modern practice mitigates these issues with residual connections, normalization techniques, and optimizers designed to navigate intricate loss landscapes. The learning process is not merely a matter of adjusting numbers; it is about shaping representations that generalize across contexts, tasks, and data regimes. Through careful experimentation, researchers determine how much capacity is needed, when to introduce regularization, and how to structure data pipelines to sustain throughput during long training runs.
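The residual connections and normalization mentioned above can be sketched in a few lines. This is an illustrative pre-norm residual block in pure Python (layer normalization without its learnable gain and bias, then a linear layer and ReLU); the shapes and weights are assumptions. The skip path `x + f(x)` lets gradients flow through the identity term even when `f` contributes little, which is one reason very deep stacks remain trainable.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def linear(x, W, b):
    """Dense layer: W @ x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def residual_block(x, W, b):
    """Pre-norm residual block: x + ReLU(W @ LayerNorm(x) + b)."""
    h = [max(0.0, v) for v in linear(layer_norm(x), W, b)]
    return [xi + hi for xi, hi in zip(x, h)]

x = [1.0, 2.0, 3.0]
zero_W = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3
```

With all-zero weights the block reduces to the identity, so a deep stack of such blocks starts out as a well-behaved identity map rather than a chain of shrinking signals.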

To illustrate how this translates into robust systems, consider a hypothetical enterprise deployment: a financial services firm trains a model to forecast risk under stress scenarios. The pipeline must accommodate streaming data, regulatory constraints, and explainability requirements. A well-tuned model would pair a loss function that matches the business objective with well-calibrated probability outputs and safeguards for fairness across demographic groups. The practical upshot is that foundational concepts, while timeless, must be adapted to the scale, domain, and governance needs of real-world applications.
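Probability calibration is often checked with expected calibration error (ECE): predictions are grouped into probability bins, and the gap between the average predicted probability and the observed frequency is averaged across bins, weighted by bin size. The sketch below is a minimal binary variant in pure Python (it compares the predicted probability of the positive class with the observed positive rate); the bin count and sample data are illustrative assumptions.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """Binary ECE: size-weighted mean |avg predicted prob - observed positive rate| per bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls into the last bin
        bins[idx].append((p, y))
    total = len(probs)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_prob = sum(p for p, _ in bucket) / len(bucket)
        pos_rate = sum(y for _, y in bucket) / len(bucket)
        ece += len(bucket) / total * abs(avg_prob - pos_rate)
    return ece

# A model that predicts "90% risk" ten times is calibrated if about 9 of those cases are positive.
calibrated = expected_calibration_error([0.9] * 10, [1] * 9 + [0])
overconfident = expected_calibration_error([0.9] * 10, [1] * 5 + [0] * 5)
```

Here `calibrated` is essentially zero, while `overconfident` is 0.4: the model claims 90% but is right only half the time.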

- Neural units, layers, and architectures

- Activation functions and their computational trade-offs

- Backpropagation, gradients, and stability concerns
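The activation functions listed above differ mainly in how they treat negative inputs and how costly they are to evaluate. A minimal pure-Python sketch follows; the Leaky ReLU slope of 0.01 is a common but arbitrary choice, and `gelu_tanh` is the widely used tanh-based approximation of the exact, erf-based GELU.

```python
import math

def relu(x: float) -> float:
    """Zero for negative inputs, identity otherwise; very cheap to compute."""
    return max(0.0, x)

def leaky_relu(x: float, slope: float = 0.01) -> float:
    """Keeps a small gradient for negative inputs to avoid 'dead' units."""
    return x if x >= 0 else slope * x

def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_tanh(x: float) -> float:
    """Tanh-based approximation of GELU."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))

# Compare the exact and approximate GELU on a grid over [-5, 5].
grid = [i / 100 for i in range(-500, 501)]
max_gap = max(abs(gelu(v) - gelu_tanh(v)) for v in grid)
```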

| Concept | Role in Training | Typical Challenges |
|---|---|---|
| Neurons | Basic processing units that accumulate weighted inputs | Choosing appropriate activation and initialization |
| Depth | Enables hierarchical feature extraction | Gradient flow and resource demands |
| Regularization | Prevents overfitting | Balancing bias and variance |
| Loss functions | Directly encode objective | Misalignment with business goals |

Architectures That Power Today’s Intelligence: From CNNs to Transformers

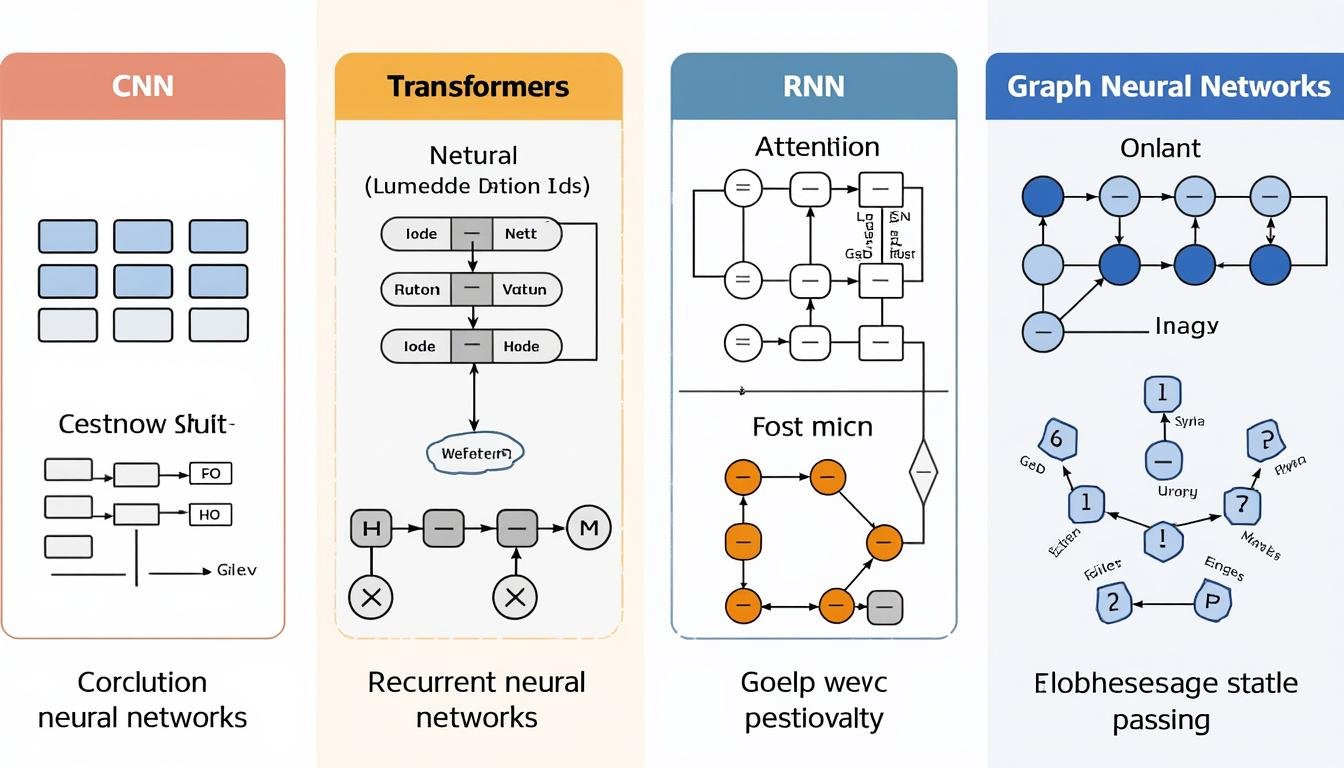

Architectures form the backbone of what neural networks can accomplish. The journey begins with classic Convolutional Neural Networks (CNNs), whose locality and weight-sharing make them exceptionally effective for spatial data like images and videos. CNNs paved the way for breakthroughs in computer vision, enabling systems that can detect objects, segment scenes, and understand visual context with remarkable accuracy. However, as tasks grew more complex—encompassing language, multi-modal data, and long-range dependencies—new architectural paradigms emerged. Recurrent networks and, more recently, Transformer-based models introduced mechanisms for sequence modeling and global context aggregation that were hard to achieve with early feedforward designs. The Transformer, with its self-attention mechanism, enables models to weigh the relevance of every input token relative to every other, unlocking capabilities in natural language understanding, reasoning, and beyond. As the field evolved, researchers also explored architectures like Graph Neural Networks (GNNs) for relational data and diffusion-based models for generative tasks. Each architecture offers strengths and trade-offs in terms of computation, memory, data requirements, and interpretability. The practical upshot is that modern AI systems typically combine multiple modalities and architectural elements to address diverse tasks while maintaining efficiency and scalability.
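Locality and weight sharing can be seen directly in a 2D convolution: one small kernel slides over every position of the input, so the number of weights depends on the kernel size, not the image size. The sketch below is a minimal pure-Python "valid" convolution (stride 1, no padding); like most deep learning frameworks it computes cross-correlation, meaning the kernel is not flipped. The edge-detecting kernel and toy image are illustrative assumptions.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation, stride 1: the same kernel weights are reused at every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][c] * image[i + a][j + c] for a in range(kh) for c in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy 4x4 image: dark left half, bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]
# A 1x2 horizontal-difference kernel responds where brightness changes from left to right.
edge_kernel = [[-1, 1]]
feature_map = conv2d(image, edge_kernel)
```

Each row of `feature_map` is `[0, 1, 0]`: the kernel fires only at the boundary, and it does so with just two weights regardless of the image size.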

In practice, architecture selection is guided by task structure, data characteristics, latency constraints, and deployment environments. For instance, vision applications often rely on CNNs and vision transformers for robust performance and transfer learning capabilities. Language-centric tasks increasingly lean on transformer architectures, which can be pre-trained on massive corpora and fine-tuned for specific domains. Hybrid approaches blend CNNs with attention-based components or graph-based modules to handle multi-modal inputs like text and image, or to model relationships in data-rich contexts such as social networks or molecular graphs. The ecosystem around these architectures is vibrant: frameworks and libraries from Hugging Face and other open ecosystems accelerate experimentation, while model hubs and pretrained checkpoints reduce the barrier to entry for researchers and engineers. Consider how enterprise-grade platforms like Microsoft Azure AI and Amazon SageMaker provide scalable infrastructure and deployment pipelines that align with governance and compliance requirements.
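For the graph-based modules mentioned above, the core operation is message passing: each node updates its representation by aggregating its neighbors' features. This pure-Python sketch performs one round with mean aggregation on an undirected graph; it replaces the learned weight matrices of a real GNN layer with two fixed scalar weights, a simplifying assumption made for readability.

```python
def message_passing_step(features, edges, self_weight=0.5, neighbor_weight=0.5):
    """One round of mean-aggregation message passing on an undirected graph."""
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated = {}
    for node, h in features.items():
        nbrs = neighbors[node]
        if nbrs:
            agg = [sum(features[n][k] for n in nbrs) / len(nbrs) for k in range(len(h))]
        else:
            agg = [0.0] * len(h)  # isolated node: no incoming messages
        updated[node] = [self_weight * hk + neighbor_weight * ak for hk, ak in zip(h, agg)]
    return updated

# Toy path graph a - b - c with one feature per node.
features = {"a": [1.0], "b": [0.0], "c": [1.0]}
edges = [("a", "b"), ("b", "c")]
updated = message_passing_step(features, edges)
```

After one round every node holds 0.5: information from the endpoints has reached the middle node, and repeating the step propagates it further across the graph.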

Industry perspectives emphasize the practicalities of moving from theory to production. The choice of architecture influences not only accuracy but also latency, energy usage, and maintainability. For example, deploying transformers at scale requires efficient attention mechanisms, model compression, and hardware-aware optimization to balance throughput with response time. CNNs applied to streaming video analytics, by contrast, emphasize low-latency inference and robustness to streaming input. A structured comparison helps teams decide which route aligns with business goals, whether that means rapid prototyping with Hugging Face models or large-scale production deployments on cloud platforms that integrate with existing data pipelines and security controls.

- CNNs for spatially structured data

- RNNs and LSTMs for sequence modeling

- Transformers for long-range dependencies and multi-task learning

- Graph Neural Networks for relational data

- Hybrid and multi-modal architectures for integrated tasks

| Architecture | Strengths | Typical Use Cases |
|---|---|---|
| CNN | Local pattern recognition, translation invariance | Image classification, object detection |
| Transformer | Global context, parallelizable training | Text understanding, multi-modal tasks |
| RNN/LSTM | Sequential data handling, memory of past inputs | Time-series analysis, language modeling (older models) |
| GNN | Relational reasoning, structured data | Social networks, chemistry, recommendation graphs |

Transformer Paradigm and Beyond

The Transformer has become a cornerstone for many AI problems, enabling scalable pre-training on diverse data and flexible fine-tuning for domain adaptation. Its self-attention mechanism allows models to focus on relevant parts of the input, which translates into superior performance on language tasks, multi-modal understanding, and reasoning benchmarks. Yet the Transformer is not a silver bullet; it poses challenges such as high memory usage and substantial data requirements. This has driven research into efficient attention, sparse representations, and alternative architectures that retain performance while reducing compute demand. Diffusion models offer another promising direction for generative tasks, producing high-quality samples with iterative refinement. These innovations illustrate a broader trend toward modular, scalable architectures that can be tailored to constraints like latency, budget, and energy consumption. As the ecosystem evolves, practitioners assess trade-offs between accuracy, efficiency, and interpretability, guided by practical deployment scenarios and governance considerations.
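The self-attention mechanism can be written compactly as softmax(Q K^T / sqrt(d_k)) V. The pure-Python sketch below implements this scaled dot-product attention for a single head, omitting masking and learned projections as simplifying assumptions. It computes one score per query-key pair, which is where the high memory usage for long inputs comes from.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Single-head attention: each query takes a softmax-weighted average of the values."""
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # One score per key: dot product scaled by sqrt(d_k) to keep softmax inputs moderate.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, weights

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [1.0, 0.0]]  # identical keys, so both positions get equal attention
V = [[2.0, 0.0], [4.0, 2.0]]
out, attn = scaled_dot_product_attention(Q, K, V)
```

With identical keys the weights are `[0.5, 0.5]` and the output is the average of the value vectors, `[3.0, 1.0]`.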


| Dimension | Considerations |
|---|---|
| Latency | Real-time inference vs batch processing; transformer optimizations help reduce latency. |
| Memory | Attention-score matrices grow quadratically with sequence length, and activations also require careful memory management. |
| Data | Pretraining datasets, domain adaptation, continual learning concerns. |
| Hardware | GPUs from NVIDIA, custom accelerators, and cloud inference services shape efficiency. |
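The memory consideration above can be quantified with simple arithmetic. A naive implementation that materializes every attention-score matrix needs batch * layers * heads * n^2 elements for sequence length n, so memory grows quadratically with context length. The helper below is a back-of-the-envelope estimate under stated assumptions (2 bytes per element, as in FP16/BF16, and no fused kernels); memory-efficient attention kernels such as FlashAttention avoid storing the full matrix, so real usage can be far lower.

```python
def attention_score_bytes(seq_len, n_heads=1, n_layers=1, batch_size=1, bytes_per_elem=2):
    """Naive memory for storing all (seq_len x seq_len) attention-score matrices at once."""
    return batch_size * n_layers * n_heads * seq_len * seq_len * bytes_per_elem

MIB = 1024 ** 2
one_head = attention_score_bytes(4096)                     # 4096^2 * 2 bytes = 32 MiB
doubled = attention_score_bytes(8192)                      # 2x the tokens -> 4x the memory
layer_32_heads = attention_score_bytes(4096, n_heads=32)   # 1 GiB for a single layer
```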

Training, Optimization, and Scaling in Industry-Scale Neural Networks

Training neural networks at scale is as much about systems engineering as it is about models. Industry teams orchestrate data pipelines that ingest, clean, and label vast data streams while ensuring privacy, compliance, and auditability. Scale demands distributed optimization across hundreds or thousands of devices, with strategies to coordinate updates, synchronize gradients, and mitigate stragglers. Modern systems use mixed precision to raise throughput and cut memory use (with loss scaling to keep small gradients from underflowing), gradient accumulation to reach large effective batch sizes, and periodic checkpointing to recover from failures during long runs. Data pipelines must also handle quality control, drift detection, and synthetic data augmentation to maintain robustness over time. As models grow, their energy footprint becomes a concern; hardware-aware optimization, model compression, and efficient serving pipelines help deliver performance without unsustainable costs. In 2025, the convergence of cloud platforms, hardware accelerators, and optimized software stacks makes scalable AI an operational reality for many enterprises.
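Gradient accumulation sums gradients over several small micro-batches before a single optimizer step, reproducing the gradient of one large batch on memory-limited hardware. The pure-Python sketch below checks this for a hypothetical one-parameter linear model with mean squared error; weighting each micro-batch by its size keeps the result exact even when the batch does not split evenly. In a framework, the same idea is usually implemented by scaling each micro-batch loss and deferring the optimizer step.

```python
def mse_grad(w, batch):
    """d/dw of mean squared error for the toy model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def accumulated_grad(w, batch, micro_batch_size):
    """Accumulate size-weighted micro-batch gradients, then normalize once at the end."""
    total = 0.0
    for start in range(0, len(batch), micro_batch_size):
        micro = batch[start:start + micro_batch_size]
        # Only one micro-batch needs to be processed at a time.
        total += mse_grad(w, micro) * len(micro)
    return total / len(batch)

data = [(float(x), 2.0 * x + 1.0) for x in range(8)]
full = mse_grad(0.5, data)
accum = accumulated_grad(0.5, data, micro_batch_size=3)  # uneven split: 3 + 3 + 2
```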

The training lifecycle is tightly coupled to platform choices and governance frameworks. Cloud providers offer end-to-end workflows, from data storage through experiment tracking to deployment, while enabling collaboration across distributed teams. This is where toolchains from ecosystems such as Hugging Face and enterprise-grade services such as Microsoft Azure AI or Amazon SageMaker come into play. Teams must decide on data handling practices, model versioning, and safety controls, including evaluations of bias, robustness, and safety margins. An effective scaling strategy also considers inference efficiency, model serialization formats, and edge deployment for latency-sensitive applications. The practical takeaway is that successful AI programs align model architecture, data governance, and deployment pipelines with business objectives and regulatory requirements.

- Data governance and privacy controls

- Distributed optimization and gradient synchronization

- Mixed precision and memory management

- Model compression and efficient serving

- Experiment tracking and reproducibility

| Technique | Benefit | Typical Scenario |
|---|---|---|
| Mixed precision | Speeds up training and reduces memory use while typically preserving accuracy | Large-scale NLP and vision models |
| Gradient accumulation | Enables large effective batch sizes on limited hardware | Training with memory constraints |
| Checkpointing | Fault tolerance: training resumes from saved state after a failure | Multi-day training jobs |
| Model compression | Lower latency and smaller footprints for deployment | Edge and mobile inference |
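Model compression often starts with post-training quantization. Below is a minimal sketch of symmetric, per-tensor int8 quantization in pure Python: weights are mapped to integers in [-127, 127] with a single scale factor, cutting storage from 4 bytes (float32) to 1 byte per weight at the cost of a rounding error of at most half the scale. Per-channel scales, calibration data, and the integer kernels used by real toolchains are omitted; the weights are illustrative.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ~= q * scale with q an integer in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map integers back to approximate float values."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.0, 1.0, 0.013]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```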

Applications, Ethics, and AI Ecosystems in 2025: From Vision to Language and Beyond

Applications of neural networks span a wide range of sectors, including healthcare, finance, manufacturing, and creative industries. In medicine, AI assists radiology, genomics, and decision support, while in finance it informs risk models, fraud detection, and automated advisory services. Manufacturing benefits from predictive maintenance, supply chain optimization, and robotic process automation. Language and perception systems power chatbots, virtual assistants, document understanding, and content generation, with impacts on education, media, and customer experience. The 2025 AI ecosystem is increasingly composable: organizations assemble capabilities from a mosaic of providers, open-source models, and commercial services, all governed by policies that address safety, privacy, and fairness. This is reinforced by cloud-native platforms and MLOps practices designed to streamline development-to-deployment loops while meeting regulatory requirements. As capabilities expand, so do concerns about misuse, bias, and alignment with human values. The industry responds with risk assessment frameworks, transparent evaluation, and governance models that empower responsible innovation across sectors.

Among the leading platforms and players, NVIDIA accelerates training and inference; Google DeepMind advances core research and governance strategies; OpenAI pushes capabilities with safety-by-design; IBM Watson emphasizes enterprise-grade reliability; Microsoft Azure AI and Amazon SageMaker provide scalable, governable cloud solutions; Meta AI expands research-to-product pipelines; Cerebras Systems builds wafer-scale accelerators for large neural networks; Hugging Face nurtures an open model-sharing ecosystem; and Anthropic contributes to safety and alignment research. Together, these ecosystems enable practical deployments, from customer support copilots to clinical decision aids, while catalyzing collaborations that accelerate responsible AI development.

- Healthcare: diagnostic support and personalized treatment planning

- Finance: risk assessment, anomaly detection, and algorithmic trading

- Industry: predictive maintenance and quality control

- Creativity: content generation, design assistance, and media production

| Platform or Player | Core Value | Representative Use |
|---|---|---|
| NVIDIA | Hardware and software ecosystem for scalable AI | Massive-scale training and inferencing |
| OpenAI | Accessible, capable AI services with safety guidelines | APIs for NLP, coding, and multimodal tasks |
| Microsoft Azure AI | Enterprise-grade AI platform with governance | Cloud-native ML workflows, security controls |
| Hugging Face | Open-source model hub and collaboration platform | Quick experimentation, model sharing, fine-tuning |

Ethics, Safety, and Responsible AI in Practice

Ethical considerations are not afterthoughts; they shape design decisions, deployment protocols, and ongoing monitoring. Responsible AI requires transparency about data provenance, model capabilities, and potential biases. It also calls for robust safety measures, including fail-safes, auditing mechanisms, and human oversight in high-stakes contexts. Industry collaborators experiment with alignment techniques, red-teaming exercises, and interpretability tools to illuminate how decisions are made inside complex models. Public policy, corporate governance, and stakeholder engagement play crucial roles in ensuring AI benefits are broadly shared while risks are mitigated. By 2025, the ecosystem has matured toward pairing powerful capabilities with formal governance and ethical guardrails that help organizations earn trust and maintain accountability across every stage of the lifecycle.

- Bias detection and mitigation strategies

- Explainability and model auditability

- Privacy-preserving training and data governance

- Human-in-the-loop and safety evaluation

- Regulatory compliance and risk management

| Concern | Mitigation | Impact |
|---|---|---|
| Bias | Representative data, fairness metrics, post-hoc audits | Improved fairness and user trust |
| Privacy | Federated learning, differential privacy, anonymization | Reduced exposure of sensitive information |
| Safety | Guardrails, monitoring dashboards, escalation paths | Controlled risk in production |
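As one example of the fairness metrics mentioned in the table, demographic parity difference compares how often a model predicts the positive outcome for each group. The pure-Python sketch below reports the largest gap between group-level positive rates; the predictions and group labels are made-up toy values, and demographic parity is only one of several fairness criteria, which can conflict with one another.

```python
def demographic_parity_difference(predictions, groups):
    """Return (max gap in positive-prediction rate across groups, per-group rates)."""
    rates = {}
    for g in sorted(set(groups)):
        group_preds = [p for p, gg in zip(predictions, groups) if gg == g]
        rates[g] = sum(group_preds) / len(group_preds)
    return max(rates.values()) - min(rates.values()), rates

# Toy binary approvals (1 = approved) for two hypothetical applicant groups.
preds = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap, rates = demographic_parity_difference(preds, groups)
```

Here group A is approved 75% of the time and group B 25%, a gap of 0.5 that a post-hoc audit would flag for investigation.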

- Adopt governance models that align AI with business ethics.

- Invest in interpretability tools that reveal model reasoning to non-experts.

- Establish clear incident response plans for AI-driven systems.

Future Trends, Challenges, and Opportunities on the Horizon

The trajectory of neural networks points toward greater capability, greater efficiency, and greater responsibility. Researchers anticipate continued advances in scaling laws, multimodal reasoning, and transfer learning, enabling models to generalize across tasks with fewer labeled examples. Energy efficiency remains a top priority as models grow; this motivates research into quantization, pruning, sparsity, and alternative hardware architectures. Alignment and safety will remain central concerns as models participate more directly in decisions that affect people and communities. The integration of AI with robotics, automation, and edge devices will blur the line between centralized cloud intelligence and local, real-time processing, demanding robust distributed architectures and seamless orchestration. At the same time, open ecosystems, standards, and collaborative platforms will continue to democratize access to AI, accelerating innovation while supporting rigorous governance and safety practices. The ongoing dialogue among academia, industry, policymakers, and the public will shape the ethical and practical contours of AI’s next phase. Readers seeking forward-looking directions may want to explore capsule networks, generative models such as GANs, and optimization techniques that push AI efficiency and capability further.
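Pruning can be illustrated with unstructured magnitude pruning: the smallest-magnitude weights are set to zero on the assumption that they contribute least to the output. This is a minimal pure-Python sketch; in practice pruning is usually followed by fine-tuning, and actual speedups depend on hardware or kernels that exploit the resulting sparsity.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute values."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by magnitude; ties keep their original order.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)  # leave the caller's list untouched
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

weights = [0.1, -0.5, 0.03, 0.8, -0.02, 0.4]
pruned = magnitude_prune(weights, sparsity=0.5)
```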

- Scaling laws and model efficiency improvements

- Safety, alignment, and governance as core design principles

- Edge AI and hybrid cloud-edge deployments

- Multi-modal and creative intelligence innovations

- Interdisciplinary collaboration and societal impact

| Forecast | Opportunity | Risk |
|---|---|---|
| Edge intelligence | Low-latency, privacy-preserving inference | Hardware constraints and model updates |
| Multi-modal reasoning | Cross-domain capabilities (text, image, audio) | Data alignment and cross-modal bias |
| Generative AI governance | Safer content generation and licensing models | Regulatory complexity and misuse potential |

- Invest in hardware-aware model design and efficiency research.

- Prioritize safety-by-design practices in research and product teams.

- Foster transparent collaboration between industry and policymakers.

FAQ

What are the main differentiators between CNNs and Transformers in 2025?

CNNs excel at capturing local spatial patterns with lower computational cost, especially in image-centric tasks, while Transformers excel at modeling long-range dependencies and multi-modal data through self-attention. In practice, many pipelines blend both, or use transformers for high-level reasoning and CNNs for efficient feature extraction.

How do industry platforms ensure safety and governance in AI deployments?

Platforms provide governance features such as access control, audit logs, model versioning, bias and drift detection, and safety review processes. Teams combine automated checks with human oversight to maintain accountability and compliance across the lifecycle.
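Drift detection is often implemented by comparing the distribution of a feature or model score in production against a reference sample. A common statistic is the population stability index (PSI), the sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference and production proportions. The sketch below is a pure-Python version; the bin edges, the small epsilon for empty bins, and the toy data are assumptions, and the thresholds often quoted in practice (around 0.1 for minor and 0.25 for major shifts) are rules of thumb rather than standards.

```python
import math

def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI between a reference sample and a production sample over fixed bins."""
    n_bins = len(bin_edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            for i in range(n_bins):
                in_bin = bin_edges[i] <= v < bin_edges[i + 1]
                on_last_edge = i == n_bins - 1 and v == bin_edges[-1]
                if in_bin or on_last_edge:
                    counts[i] += 1
                    break  # values outside all bins are ignored
        total = sum(counts) or 1
        # Floor at eps so empty bins do not produce log(0) or division by zero.
        return [max(c / total, eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
reference = [i / 100 for i in range(100)]         # spread evenly over [0, 1)
production = [0.5 + i / 200 for i in range(100)]  # shifted into [0.5, 1)
stable_psi = population_stability_index(reference, reference, edges)
shifted_psi = population_stability_index(reference, production, edges)
```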

Where can I learn about practical AI implementations and case studies?

Explore industry blogs, tutorials, and model hubs (e.g., Hugging Face), as well as published case studies from organizations implementing AI in healthcare, finance, and manufacturing.

What is the role of hardware accelerators in training large neural networks?

Hardware accelerators (GPUs, TPUs, and specialized chips) reduce training time, enable larger batch processing, and improve energy efficiency. They are essential for pushing the boundaries of model size and performance in production environments.