In brief

- OpenAI unveils GPT-4, a landmark multimodal AI model that processes text and images to produce refined text outputs, signaling a step-change beyond GPT-3.5.

- GPT-4 reaches human-level performance on a range of professional and academic benchmarks, including standardized tests, while improving reliability, steerability, and factual accuracy.

- The surrounding ecosystem includes Microsoft, Google, Amazon Web Services, IBM, NVIDIA, Anthropic, DeepMind, Meta, and Cohere.

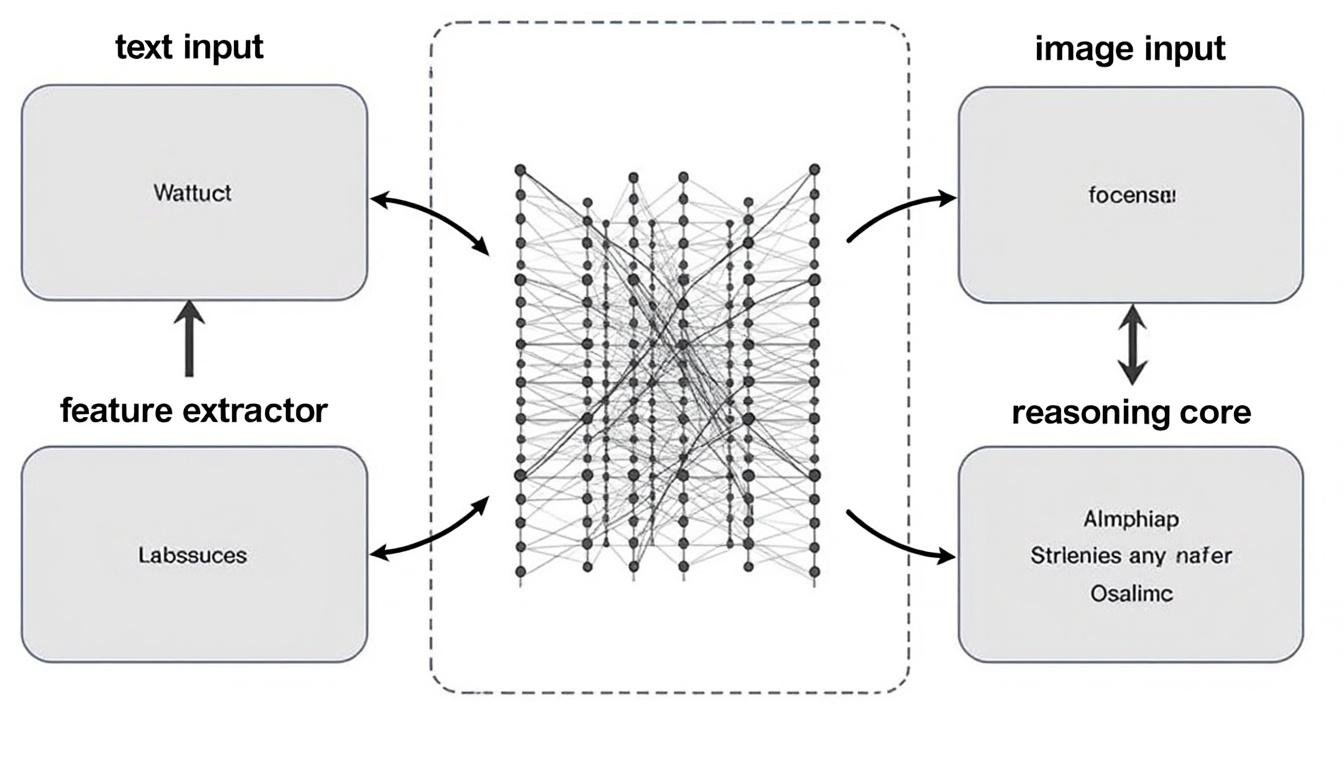

OpenAI has released GPT-4 as a multimodal AI system capable of taking both text and image inputs and generating text outputs, a design that redefines how machines understand context and align with human intent. The shift from purely text-based processing to a fused modality approach ripples through virtually every industry. By combining robust reasoning with flexible instruction-following, GPT-4 shows improved handling of complex prompts, better resistance to prompt degradation, and a higher ceiling for applying AI to real-world tasks that demand contextual comprehension across diverse data types. The development underscores a broader trend in AI toward models that operate reliably in open-ended environments and that can be steered toward specific outcomes without sacrificing accuracy or safety. The result is not merely a larger model but a more capable, more accountable system that can be tuned for the needs of enterprises, researchers, and developers alike. This article explores the architecture, capabilities, applications, and ecosystem surrounding GPT-4, anchored in concrete examples and practical guidance for organizations navigating the 2025 AI landscape.

1) GPT-4’s Multimodal Leap: Architecture, Capabilities, and Early Evidence

The core entry point for GPT-4 is its multimodal design, a deliberate expansion beyond the text-only paradigm that characterized previous generations. In practice, the model can ingest an image alongside a textual prompt, extract salient visual features, associate them with linguistic constructs, and then produce coherent, contextually relevant narratives, explanations, or recommended actions in text form. This capability enables use cases that hinge on the joint interpretation of language and visuals, such as analyzing medical imaging alongside patient notes, interpreting diagrams in technical manuals, or generating descriptive captions that are tightly aligned with visual content. The architectural emphasis is on robust cross-modal fusion, where the model learns to map multimodal inputs into a shared representation space that preserves semantic fidelity while enabling sophisticated reasoning. In effect, GPT-4 becomes a bridge between visual perception and language-based cognition, broadening the domain of problems it can address without demanding bespoke pipelines for each modality.
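To make the "image alongside a textual prompt" idea concrete, here is a minimal sketch of how such a request body might be assembled. It assumes the content-parts message shape used by OpenAI's Chat Completions API (a `text` part plus an `image_url` part). The function name and the model string are placeholders, not values taken from this article, and nothing is sent over the network.

```python
import base64
import json


def build_multimodal_request(prompt: str, image_bytes: bytes,
                             model: str = "MODEL_NAME_PLACEHOLDER") -> dict:
    """Return a request body pairing one text prompt with one PNG image."""
    # Encode the image as a data URL so the payload is self-contained.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:image/png;base64,{encoded}"
    return {
        "model": model,  # assumption: replace with a vision-capable model you can access
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # The PNG signature alone stands in for a real image file here.
    fake_png = b"\x89PNG\r\n\x1a\n"
    request = build_multimodal_request("Describe the diagram.", fake_png)
    print(json.dumps(request, indent=2))
```

Keeping payload construction separate from the network call makes the multimodal input easy to log, inspect, and test before any model is involved.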

Key features and improvements can be summarized as follows. First, multimodal capabilities remove the bottleneck that confined earlier models to text alone, unlocking integrated workflows that fuse perception with language. Second, the model demonstrates enhanced performance on professional and academic benchmarks, in some cases approaching or reaching human-level proficiency on complex problem sets, including standardized tests that historically relied on human reasoning across multiple domains. The improvement lies not merely in accuracy but in the distribution of errors: GPT-4 tends to produce fewer hallucinations, and when it does err, the errors are more traceable and easier to correct through fine-tuning and prompt design. Third, the reliability and steerability of outputs have benefited from targeted calibration, making the model more predictable in structure and tone, with clearer alignment to user intent and contextual constraints. This operational stability is essential for deployment in sensitive environments such as healthcare or finance, where misinterpretations carry tangible risk. Finally, there is an emphasis on factual accuracy, pursued through a combination of training data curation, improved verification techniques, and post-output evaluation pipelines that reduce the likelihood of disseminating misinformation.

From an architectural perspective, GPT-4 incorporates a refined framework for evaluating and mitigating risks, including automated safety checks and a governance layer that supports safer experimentation. OpenAI’s strategy includes public engagement and feedback loops, ensuring that safety controls evolve in response to real-world use and societal concerns. The combination of a multimodal core, improved reliability, and a principled safety scaffold positions GPT-4 not only as a more capable tool but as a platform capable of supporting diverse product strategies—ranging from conversational assistants to content generation, to assistive analytics in complex professional settings.

In practice, the model demonstrates substantial real-world utility. For example, in education, it can produce interactive materials that weave textual explanations with illustrative visuals, enabling more engaging, multimodal learning experiences. In healthcare, GPT-4 has potential to assist clinicians by interpreting imaging data in tandem with descriptive notes, aiding diagnostic workflows and treatment planning when used with transparent provenance and clinician oversight. Industry benchmarks reveal consistent gains in tasks such as information extraction, summarization, and reasoning across datasets that combine text and images. Beyond raw performance, feedback from early pilots points to improved user satisfaction thanks to clearer instructions, better alignment to goals, and a reduced need for manual prompt engineering. As organizations explore adoption, they increasingly demand architectures that generalize well across tasks, scale with organizational needs, and integrate smoothly with their existing cloud and data ecosystems. The evidence suggests GPT-4 is not a single-use tool but a scalable platform for building intelligent solutions that leverage both language and visual reasoning to augment human capabilities.

Table 1. GPT-4 versus GPT-3.5: key capabilities and metrics

| Aspect | GPT-4 | GPT-3.5 | Notes |
|---|---|---|---|
| Multimodal input support | Text and image inputs | Text only | Enables joint interpretation of visuals and language |
| Benchmark performance | Near human-level on several assessments | Strong but below human parity on many tests | Improvements vary by domain |
| Reliability | Higher consistency, reduced error rate | Lower consistency, more ambiguous outputs | Supports enterprise-grade use cases |
| Factual accuracy | Improved with verification channels | Higher risk of misinformation without checks | Dependent on data and prompts |

Ecosystem signals reinforce the significance of GPT-4’s emergence. The model’s launch aligns with OpenAI’s ongoing strategy of weaving AI capability into cloud and enterprise environments. Integration with Microsoft’s cloud platforms is a cornerstone of deployment, reflecting mutual interests in scaling AI services and embedding advanced capabilities into productivity tools. Industry observers expect continued collaboration with major providers, including Amazon Web Services and Google, while security, governance, and auditability become primary design constraints for any organization leaning into multimodal AI at scale. As the field evolves, other players such as IBM, NVIDIA, Anthropic, DeepMind, Meta, and Cohere will contribute through complementary models, tooling, or platform-specific integrations, shaping a heterogeneous yet interconnected AI landscape. For readers seeking broader context on AI concepts and applications, the linked analyses explore the technology’s foundations and practical implications in more depth: Understanding Artificial Intelligence: A Deep Dive into Its Concepts and Applications, The Emergence of GPT-4o: A New Chapter in AI Innovation, GPT-3.5 vs GPT-4o: Crafting the Ultimate Money-Making Machine.

Gauging impact: industry readiness and measurement

Organizations often ask how to translate GPT-4’s capabilities into measurable outcomes. The immediate lens centers on four axes: productivity gains, accuracy improvements, risk management, and customer experience. On productivity, teams report faster content generation, more precise coding assistance, and streamlined data interpretation. In terms of accuracy, the enhanced ability to verify and cross-reference outputs reduces the burden of manual fact-checking, though it does not eliminate the need for domain-specific validation. For risk management, the new safety and steering features enable tighter control over output style, tone, and content boundaries, which is particularly valuable in regulated industries. Customer experience gains come from more natural, context-aware interactions, including the ability to understand and respond to visual cues in addition to textual prompts. These shifts require careful orchestration with existing workflows, data governance rules, and explainability requirements. The combination of robust capability, improved reliability, and cost-effectiveness is attracting attention from senior leaders who are balancing innovation with risk controls.

Industry partners are already piloting integrated workflows that leverage GPT-4 as a core decision-support component. In healthcare, clinical decision support can be enhanced when imaging data and textual records are interpreted in parallel under clinician supervision. In education, dynamic tutoring systems can present multimedia lessons tailored to a student’s visual and textual preferences. In manufacturing and technical domains, GPT-4 can analyze schematics and manuals to generate troubleshooting narratives or procedural updates. The economic takeaway is that GPT-4 expands the boundary of what is feasible with AI at scale, encouraging a rethinking of process design, service delivery models, and talent strategies. As with any disruptive technology, successful adoption hinges on a combination of clear use-case definitions, robust data governance, and a culture that prizes iterative testing and transparent accountability.

For those seeking more context on the broader AI ecosystem and market dynamics in 2025, consider the following resources and perspectives: Blogging Revolutionized: Harnessing the Power of AI, The Rise of AI: Understanding Its Impact and Future, and a broader overview of AI concepts at Understanding AI Concepts and Applications.

The following section turns to concrete use cases and architectures that organizations can study when mapping GPT-4 into product roadmaps and enterprise pipelines. The emphasis is on practical steps, not just theoretical potential.

2) Practical Applications Across Industries: How GPT-4 Reshapes Workflows

The practical implications of GPT-4’s multimodal capabilities extend across sectors, from healthcare to media to education. The ability to ingest both images and text enables workflows that were previously impractical or error-prone, aligning with enterprise needs for faster insight, better decision support, and more engaging user experiences. In healthcare, for example, clinicians increasingly rely on AI to interpret radiology images in conjunction with patient histories and lab results. The model can help generate structured narratives that accompany visual findings, highlight discrepancies, and propose next-step actions in a way that supports, rather than replaces, clinical judgment. In education, GPT-4 can craft interactive modules that blend diagrams, charts, and textual explanations, offering a more holistic approach to learning that adapts to individual student contexts. In finance and operations, the model can parse contracts with visual redlines or annotated diagrams, summarize key terms, and produce risk assessments that are accessible to non-specialists. The versatility of multimodal processing makes it possible to design end-to-end experiences that align with real-world tasks while upholding governance and safety standards.

Adopting GPT-4 at scale requires disciplined product design and platform engineering. Teams should start with a few high-value use cases, establish feedback loops, and implement robust evaluation metrics that capture qualitative and quantitative outcomes. A common pattern is to pair GPT-4 with domain experts who can validate outputs, verify sources, and guide the iterative tuning process. The architecture must support data provenance, explainability, and auditable decision trails so that outputs can be traced back to inputs and constraints. This approach helps build trust, especially in regulated sectors where stakeholders demand accountability and transparency. The organizational impact often includes a shift in roles from static content creation to dynamic content curation, where AI augments human expertise rather than replacing it. For managers, the focus becomes designing interfaces that enable operators to steer behavior and to customize the system for specific job functions, while for engineers, the emphasis is on building reliable pipelines that manage prompt design, model updates, and monitoring at scale.
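The requirement that outputs be traceable back to inputs can be illustrated with a small audit record. This is a sketch under stated assumptions, not a prescribed design: the field names, the `model_version` label, and the choice of SHA-256 fingerprints (so the log can prove what was sent without storing sensitive content) are all illustrative.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


def sha256_hex(data: bytes) -> str:
    """Fingerprint content so the log can identify it without storing it."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class AuditRecord:
    timestamp: str               # UTC, ISO 8601
    model_version: str           # hypothetical label for the deployed model
    prompt_sha256: str
    image_sha256: Optional[str]  # None for text-only requests
    output_sha256: str
    reviewer: Optional[str]      # domain expert who validated the output, if any


def make_audit_record(prompt: str, output: str, model_version: str,
                      image: Optional[bytes] = None,
                      reviewer: Optional[str] = None) -> AuditRecord:
    """Build one immutable record linking an output to the inputs behind it."""
    return AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        prompt_sha256=sha256_hex(prompt.encode("utf-8")),
        image_sha256=sha256_hex(image) if image is not None else None,
        output_sha256=sha256_hex(output.encode("utf-8")),
        reviewer=reviewer,
    )


def to_json_line(record: AuditRecord) -> str:
    """Serialize one record as a line suitable for an append-only log file."""
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    rec = make_audit_record("Summarize the contract.", "Summary text",
                            "model-v1", reviewer="analyst-01")
    print(to_json_line(rec))
```

An append-only file of such lines is one simple way to give auditors a decision trail. Regulated deployments would likely add access controls and retention rules on top.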

Industry leaders are experimenting with platform-level integrations that connect GPT-4 to major AI ecosystems. This includes cloud platforms and enterprise software suites from Microsoft, Google, and Amazon Web Services, enabling seamless deployment of multimodal capabilities into existing workflows. The broader AI supply chain features collaborations with other leading organizations such as IBM, NVIDIA, Anthropic, DeepMind, Meta, and Cohere, each contributing complementary tooling, safety frameworks, or specialized models. Organizations should also consult external perspectives to understand market dynamics and governance considerations: GPT-4o: The AI-Comedian and Narrative Applications, Perspectives on Politicians Through GPT-4 Lenses, and Exploring the Joyful World of AI.

In healthcare, the capacity to analyze imaging with textual context can enhance diagnostic workflows when combined with decision-support systems. In education, instructors may deploy multimodal tutors that adapt to student responses in real time, weaving visual cues with textual explanations to reinforce concepts. In customer service, AI agents can interpret screenshots or product images provided by customers to diagnose issues more precisely and to propose targeted remedies. Beyond individual workflows, GPT-4 shapes new business models such as AI-assisted content studios, personalized learning platforms, and on-demand expert systems that relay sophisticated reasoning in accessible language. The economic implications are substantial, with potential productivity gains and new revenue streams that reflect the model’s adaptability across tasks. For readers seeking deeper dives into related topics, consult the linked resources: The Rise of AI: Understanding Its Impact and Future, GPT-3.5 vs GPT-4o: Money-Making Machine, and Engaging in Conversation with GPT-4o in an Invented Language.

- Healthcare: diagnostic support, imaging interpretation, patient education

- Education: multimodal tutoring, interactive materials, accessibility improvements

- Enterprise: knowledge extraction, contract analysis, procedural guides

- Media and entertainment: content generation, narrative design, humor-aware assistants

- Research and development: hypothesis generation, literature summarization, data interpretation

Industries adopt multimodal AI with attention to data governance and ethical considerations. The ability to harness both text and images creates opportunities for more personalized, context-aware solutions, but it also raises questions about data provenance, consent, bias, and the need for human oversight in critical decisions. As enterprises experiment, they should pair GPT-4 implementations with robust monitoring, bias mitigation, and feedback mechanisms that enable continuous improvement. The goal is to reduce the cognitive load on human operators while maintaining control over outputs, sources, and decision rationales. For a broader panorama of AI concepts and applications that inform these decisions, the following links provide in-depth reads: Understanding AI Concepts and Applications, Blogging Revolutionized by AI, and The Emergence of GPT-4o: A New Chapter in AI Innovation.

To bridge theory and practice, consider a practical blueprint for a multimodal AI deployment: start with a diagnostic use case, gather representative text and image data under governance guidelines, and design prompts that preserve determinism while allowing for controlled creativity. Then, establish success metrics that capture both output quality and user satisfaction, while monitoring safety controls and user feedback to adjust steering controls as needs evolve. This blueprint emphasizes iteration, cross-functional collaboration, and a culture of responsible experimentation—principles that have proven essential as AI enters production contexts across sectors.
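The success metrics in this blueprint can be sketched as a small summary over pilot results. The record fields, the 1-to-5 satisfaction scale, and the target thresholds below are illustrative assumptions; a real pilot would choose its own.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class PilotResult:
    case_id: str
    expert_approved: bool  # did a domain expert accept the output?
    satisfaction: int      # user rating, 1 (poor) to 5 (excellent)
    safety_flagged: bool   # did a safety control fire on this output?


def summarize(results: List[PilotResult]) -> Dict[str, float]:
    """Aggregate output quality, user satisfaction, and safety signals."""
    if not results:
        raise ValueError("at least one pilot result is required")
    n = len(results)
    return {
        "approval_rate": sum(r.expert_approved for r in results) / n,
        "mean_satisfaction": mean(r.satisfaction for r in results),
        "safety_flag_rate": sum(r.safety_flagged for r in results) / n,
    }


def meets_targets(summary: Dict[str, float],
                  min_approval: float = 0.9,
                  min_satisfaction: float = 4.0,
                  max_flag_rate: float = 0.02) -> bool:
    """Check a summary against (hypothetical) go/no-go thresholds."""
    return (summary["approval_rate"] >= min_approval
            and summary["mean_satisfaction"] >= min_satisfaction
            and summary["safety_flag_rate"] <= max_flag_rate)


if __name__ == "__main__":
    pilot = [
        PilotResult("case-1", True, 5, False),
        PilotResult("case-2", True, 4, False),
        PilotResult("case-3", False, 3, True),
    ]
    summary = summarize(pilot)
    print(summary, "targets met:", meets_targets(summary))
```

Reporting the three numbers side by side keeps a gain in satisfaction from masking a rise in safety flags, which is the kind of trade-off the blueprint asks teams to watch as steering controls are adjusted.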

3) Reliability, Steerability, and Factual Accuracy: Building Trust in AI Outputs

Trust in AI outputs rests on several pillars: reliable reasoning, transparent steering controls, and verifiable factuality. GPT-4 has been engineered to minimize the generation of incorrect or misleading information through iterative refinement, more rigorous evaluation pipelines, and explicit alignment with user intent. The steerability dimension refers to the ability of users to influence the style, scope, and constraints of responses without sacrificing usefulness or accuracy. This is particularly important in enterprise contexts where outputs must adhere to brand guidelines, regulatory constraints, and domain-specific knowledge. The combination of reliable reasoning and user-guided steering makes the model more predictable, enabling teams to integrate it into critical decision-making processes with greater confidence.

The improvements in factual accuracy stem from several design choices. Data curation choices, improved alignment with source materials, and ongoing refinements in verification processes contribute to higher-quality outputs. However, no AI system is error-free, and the best practice remains to deploy multi-layer validation, human-in-the-loop oversight for high-stakes tasks, and transparent logging of inputs and outputs. OpenAI emphasizes safety and ethics as a core component of GPT-4’s lifecycle, including automated evaluation frameworks and opportunities for public input to refine safety guardrails over time. Organizations can harness these features by building layered governance around model usage, establishing guardrails that enforce content boundaries, source citation when possible, and explicit disclaimers when outputs are probabilistic or uncertain. The objective is to combine high capability with robust safeguards, ensuring that users receive informative, accurate results while maintaining trust and accountability.
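Multi-layer validation with human-in-the-loop escalation might look like the following sketch. The individual checks, the `[source:` citation marker, and the decision labels are hypothetical conventions chosen for illustration. They do not describe how OpenAI or any particular product validates outputs.

```python
from typing import Callable, Dict, List, Optional

# A validator returns a description of the problem, or None if the check passes.
Validator = Callable[[str], Optional[str]]


def non_empty(output: str) -> Optional[str]:
    return "empty output" if not output.strip() else None


def cites_source(output: str) -> Optional[str]:
    # Assumed house convention: citations appear as "[source: ...]".
    return None if "[source:" in output else "no source citation"


def within_length(limit: int) -> Validator:
    def check(output: str) -> Optional[str]:
        return f"longer than {limit} characters" if len(output) > limit else None
    return check


def review(output: str, validators: List[Validator],
           high_stakes: bool) -> Dict[str, object]:
    """Run every automated layer, then decide whether a human must look."""
    issues = [issue for v in validators if (issue := v(output)) is not None]
    if issues:
        decision = "reject"
    elif high_stakes:
        decision = "needs_human_review"  # automated checks alone are not enough
    else:
        decision = "accept"
    return {"decision": decision, "issues": issues}


if __name__ == "__main__":
    checks = [non_empty, cites_source, within_length(500)]
    draft = "Dosage guidance summary. [source: internal formulary]"
    print(review(draft, checks, high_stakes=True))
```

The design choice worth noting is that passing every automated check never auto-approves a high-stakes output. It only qualifies the output for expert review.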

Practical implications for teams include setting explicit success criteria, designing prompt templates that encourage precise reasoning, and implementing continuous monitoring to detect drift in model behavior or knowledge updates. The aim is to create an environment where AI augments human expertise rather than replacing it, with clear channels for feedback and remediation. For readers seeking additional perspectives on AI ethics and governance in 2025, explore analyses such as Perspectives on Politicians from the GPT-4 Lens and Exploring the Joyful World of AI.
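Continuous monitoring for drift can be approximated with a rolling average of whatever quality score a team already collects (for example, the share of outputs approved by reviewers). The class below is a minimal sketch. The baseline, window size, and tolerance are assumptions to be tuned per use case.

```python
from collections import deque


class DriftMonitor:
    """Flag when the rolling mean of a quality score falls below a baseline."""

    def __init__(self, baseline: float, window: int = 50,
                 tolerance: float = 0.05) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score: float) -> bool:
        """Add a score; return True if drift is detected on a full window."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet to judge
        rolling_mean = sum(self.scores) / len(self.scores)
        return (self.baseline - rolling_mean) > self.tolerance


if __name__ == "__main__":
    monitor = DriftMonitor(baseline=0.9, window=3, tolerance=0.05)
    for score in [0.9, 0.9, 0.9, 0.6]:
        print(score, "drift detected:", monitor.record(score))
```

A triggered flag is a prompt for investigation (a model update, a change in inputs, a stale prompt template) and not proof of regression on its own.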

Table 2. Reliability, steerability, and factual accuracy: GPT-4 versus GPT-3.5

| Dimension | GPT-4 Profile | GPT-3.5 Profile | Operational Considerations |
|---|---|---|---|
| Reliability | High consistency with tighter response boundaries | Moderate consistency; more variability | Favor deterministic prompting and validation |
| Steerability | Explicit controls, adaptable to task constraints | Limited steering precision | Implement task-specific prompts and safety checks |
| Factual accuracy | Improved accuracy with verification channels | Higher rate of factual drift without checks | Mandatory source validation and clinician or expert oversight when appropriate |

In the ecosystem, OpenAI continues to shape best practices for responsible AI deployment, while collaborating with major cloud and technology partners to ensure alignment with safety, privacy, and governance standards. For organizations evaluating GPT-4’s usefulness, it is critical to understand not only what the model can do, but how to monitor, correct, and iterate on outputs in production. Detailed case studies and technical analyses offer deeper insight into the practical steps required to implement robust risk controls and measurable performance improvements. For readers seeking broader context on AI ethics and governance frameworks, the following resources provide further guidance: The Rise of AI: Understanding Its Impact and Future, Blogging Revolutionized by AI, and Understanding AI Concepts and Applications.

Table 3. Stakeholder concerns and mitigations for GPT-4 deployments

| Concern | Mitigation | Responsible Stakeholders | Success Metric |
|---|---|---|---|
| Hallucinations | Verification pipelines, citations, and rule-based constraints | AI engineers, data stewards | Reduction in unverified outputs by X% |
| Bias | Bias detection, diverse datasets, inclusive prompting | Ethics leads, researchers | Bias incident rate and remediation cycle time |
| Privacy | Data minimization, access controls, audit trails | Security, compliance | Auditable usage patterns |
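The "reduction in unverified outputs by X%" metric in Table 3 is a relative reduction between two measurement periods. A minimal sketch of the arithmetic follows. The counts in the usage example are invented purely to show the calculation and are not results from any deployment.

```python
def unverified_rate(unverified: int, total: int) -> float:
    """Share of outputs that could not be verified in a measurement period."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= unverified <= total:
        raise ValueError("unverified must be between 0 and total")
    return unverified / total


def percent_reduction(baseline_rate: float, current_rate: float) -> float:
    """Relative reduction from baseline to current, in percent."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - current_rate) / baseline_rate * 100.0


if __name__ == "__main__":
    # Hypothetical counts: 40 of 200 outputs unverified before, 10 of 200 after.
    before = unverified_rate(40, 200)
    after = unverified_rate(10, 200)
    print(f"Reduction in unverified outputs: {percent_reduction(before, after):.1f}%")
```

Using rates instead of raw counts keeps the metric comparable when output volume differs between periods.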

Additional readings on governance and enterprise deployment include Gemini’s Wit: Humorous Takes on AI and The Emergence of GPT-4o: A New Chapter in AI Innovation. These pieces illustrate how organizations balance capability growth with responsibility, a balance that is central to 2025 AI strategy planning.

4) Ecosystem and Enterprise Readiness: The Big Players and the Cloud Horizon

GPT-4’s advent intensifies the collaboration between OpenAI and a spectrum of technology and platform leaders. The relationship with Microsoft remains foundational for enterprise deployment, given their integrated AI offerings across Azure services and productivity tools. Beyond Microsoft, the field is populated by other tech behemoths and specialist firms that contribute through cloud deployments, hardware acceleration, and research collaboration. Google continues to push advances in large-scale model training and multimodal reasoning, while Amazon Web Services expands AI services in the cloud that cater to developers seeking scalable, secure environments for experimentation and production. On the hardware and accelerator side, NVIDIA remains critical for optimizing inference performance, while IBM and Anthropic contribute safety-focused capabilities and alignment research that informs production-grade deployments. The research ecosystem is enriched by DeepMind, Meta, and Cohere, each adding specialized models, tooling, and API ecosystems that help enterprises tailor GPT-4 to niche domains.

Operational readiness is as important as technical capability. Enterprises must design for data governance, access control, and regulatory compliance when integrating multimodal AI into mission-critical workflows. A pragmatic approach combines pre-built templates and modular components with custom prompts and control guards. The deployment model should align with cloud strategy, including the option of a Microsoft-first or AWS-first pathway, depending on compliance and latency requirements. Partnerships and ecosystem compatibility matter for procurement, support, and upgrade paths, ensuring that organizations can scale responsibly while balancing performance, cost, and risk. The industry trend indicates growing adoption of AI-as-a-Service patterns, standardized governance frameworks, and shared best practices across sectors. For readers seeking broader perspectives on AI ecosystems and industry dynamics, consider the following resources: The Rise of AI: Understanding Its Impact and Future, Exploring the Joyful World of AI, and GPT-4o: The AI Comedian and Narrative Applications.

In practice, enterprise leaders should map the AI strategy to business outcomes, choosing partner ecosystems that align with their data strategy and governance posture. They should also invest in talent capable of bridging AI capabilities with domain expertise, ensuring that the model’s outputs are both actionable and explainable to stakeholders. The overarching goal is to create a sustainable, adaptable AI capability that can evolve with advances in multimodal modeling, alignment research, and cloud-native deployment patterns. The journey is ongoing, and the market’s direction will be shaped by continued collaboration among AI labs, cloud providers, and industry incumbents. For a deeper dive into the broader AI landscape and its corporate implications, consult the linked resources and analyses: GPT-4o: The AI Comedian and Narrative Applications, Perspectives on Politicians Through GPT-4 Lenses, and The Emergence of GPT-4o: A New Chapter in AI Innovation.

5) Safety, Ethics, and the Future Landscape: OpenAI and the Ecosystem of Responsible AI

As AI capabilities accelerate, governance, safety, and ethical considerations come to the forefront. OpenAI frames GPT-4 within a broader safety architecture that combines automated evaluations, human oversight, and public input to refine risk controls and alignment. The goal is to minimize misuse, curb the spread of misinformation, and ensure that AI systems operate within clearly defined boundaries. This emphasis on safety coexists with a commitment to experimentation and innovation, recognizing that responsible progress requires both robust technical safeguards and community engagement. The safety framework is designed to be iterative, with updates informed by real-world use cases, safety audits, and external feedback. For organizations adopting GPT-4, this means embedding safety reviews into the development lifecycle, establishing clear accountability for outputs, and maintaining transparency about model limitations and uncertainties.

Ethical considerations extend to bias, privacy, consent, and the equitable distribution of benefits. The 2025 AI policy landscape increasingly favors transparent data provenance, auditable decision processes, and user empowerment in steering AI behavior. Enterprises must design user interfaces and governance processes that make the model’s behavior legible to non-experts, including explicit explanations of how outputs are generated and which sources informed them. The social implications of multimodal AI—how it interprets visuals, how it communicates, and how it influences decision-making—call for careful reflection on inclusivity and potential harm. In practice, this translates to robust red-teaming exercises, impact assessments, and continuous alignment work with diverse user groups and stakeholders. OpenAI’s approach to safety is not a static checklist; it is a living program that evolves with ongoing public discourse and policy developments across jurisdictions.

Looking ahead, the AI ecosystem will likely see a proliferation of specialized accelerators, model variants, and governance tools that complement GPT-4’s capabilities. Cloud providers will compete on performance, privacy features, and ease of integration, while industry consortia work toward common interoperability standards. The result should be a more mature market where multimodal AI is embedded in enterprise processes with explicit risk controls and measurable outcomes. For readers seeking broader context on AI governance and the evolving landscape in 2025, the following materials offer additional perspectives: The Rise of AI: Understanding Its Impact and Future, Gemini’s Wit: Humorous Takes on AI, and Engaging in Conversation with GPT-4o Using an Invented Language.

From a practical standpoint, organizations should plan for a multi-year roadmap that includes governance maturation, continuous safety evaluation, and scalable deployment. This involves not only technical readiness but also organizational readiness—training teams to interpret AI outputs correctly, to challenge results when necessary, and to communicate findings clearly to executives and stakeholders. In parallel, industry observers will watch for the emergence of new AI platforms and collaborations that expand multimodal capabilities, enabling more sophisticated applications across education, healthcare, and industry. The narrative around GPT-4 is not simply about a leap in capability; it is about shaping an ethical, resilient, and productive AI ecosystem that improves human decision-making while preserving trust and accountability.

Table 4. Safety and governance levers for GPT-4 deployment

| Lever | Implementation | Impact | Owner |
|---|---|---|---|
| Automated evaluations | Regular automated safety checks and impact assessments | Early detection of risky outputs | Safety team |
| Public input | Solicit feedback from users and experts | Refined alignment and transparency | Policy and governance |
| Explainability | Output provenance and justification prompts | Enhanced trust and auditability | Engineering and compliance |

Links for further insights into ethics, governance, and public discourse around AI in 2025 include The Rise of AI: Understanding Its Impact and Future and Understanding AI Concepts and Applications. These resources help frame GPT-4 within a broader conversation about responsible innovation. The ecosystem perspective remains essential: OpenAI collaborates with platform partners and research allies to ensure that the benefits of AI scale responsibly, with safety and ethics as foundational commitments rather than afterthoughts. The 2025 landscape rewards those who couple technical prowess with disciplined governance, stakeholder engagement, and a clear vision for how AI augments human capability without compromising trust or safety.

FAQ-style guidance and practical considerations will be provided in the closing section of this article to address common questions organizations have as they plan their GPT-4 adoption strategies. For more perspectives on AI’s trajectory and practical deployment patterns, the following readings offer useful context: Blogging Revolutionized by AI, The Emergence of GPT-4o: A New Chapter in AI Innovation, and GPT-4o: The AI Comedian and Narrative Applications.

What makes GPT-4 a multimodal model, and why does it matter?

GPT-4 accepts text and image inputs and generates text outputs. This multimodal capability enables integrated reasoning across linguistic and visual information, expanding use cases in healthcare, education, manufacturing, and beyond while improving alignment with user intent and safety requirements.

How does GPT-4 improve reliability and steerability compared to earlier models?

GPT-4 benefits from refined prompt design, better output controls, and verification mechanisms. Steering controls allow users to constrain style, tone, and scope, while reliability improvements reduce hallucinations and inconsistent responses, especially on complex prompts.

Which industries are most likely to benefit early from GPT-4?

The likeliest early beneficiaries are healthcare (imaging-assisted interpretation), education (multimodal tutoring), enterprise knowledge management, content creation, and customer support. Early pilots emphasize explainability, governance, and domain-specific validation.

What governance practices should organizations adopt when deploying GPT-4?

Implement automated safety checks, human-in-the-loop validation for high-stakes tasks, provenance and explainability of outputs, bias mitigation, privacy safeguards, and clear accountability trails across inputs, outputs, and prompts.

Where can I find further reading on GPT-4 and AI governance?

See linked analyses and case studies in the article, including materials on AI concepts, ethics, and applications at the referenced URLs.