In brief

- Neural networks are powerful, brain-inspired models that learn from data by adjusting internal parameters called weights and biases.

- From feedforward nets to Transformers, a wide spectrum of architectures underpins today’s AI applications across vision, language, and multimodal tasks.

- Training hinges on backpropagation and optimization techniques that minimize error and improve generalization on unseen data.

- By 2025, major players like OpenAI, Google AI, NVIDIA, IBM Watson, and Microsoft Azure AI have integrated neural nets into products, research, and industry workflows.

- Ethical and practical challenges—interpretability, energy use, data privacy—shape the path forward for reliable AI systems.

Neural networks are a central pillar of modern artificial intelligence: mathematical abstractions of how information flows through interconnected processing units. By 2025, they have evolved from simple multilayer perceptrons into large, highly capable systems that can understand text, analyze images, transcribe audio, and learn from multimodal data that blends language, vision, and sound. The core idea remains simple: data enters an arrangement of artificial neurons organized in layers; each neuron computes a weighted sum of its inputs, adds a bias, passes the result through a nonlinear activation function, and forwards the outcome to the next layer. Through exposure to large datasets, the network tunes its weights and biases to minimize error, progressively improving its ability to recognize patterns, forecast outcomes, and generate content.

This article covers the major architectures, training strategies, and real-world use cases that define the field, along with the OpenAI, DeepMind, NVIDIA, IBM Watson, Google AI, and Microsoft Azure AI ecosystems that anchor both research and industry practice. Two trends recur throughout: the growth of Transformer models in recent years, and the emergence of efficient, scalable networks designed for edge devices, cloud platforms, and enterprise systems. The discussion also touches on TensorFlow and PyTorch, the frameworks researchers and developers rely on to prototype, train, and deploy models at scale.

Links to related explorations and case studies appear throughout to show how these architectures translate into tangible capabilities across sectors, from healthcare diagnostics to autonomous systems and from fraud detection to creative content generation. They also show how these trends intersect with work from OpenAI, DeepMind, and NVIDIA, and how they shape the AI strategies of Google AI, IBM Watson, and AWS Machine Learning pipelines. This is not merely a technical tour; it is a map of the practical, ethical, and strategic dimensions of AI in 2025 through the lens of neural networks.

Foundations of Neural Networks: Structure, Learning, and Core Operations

At the heart of neural networks lies a design that mirrors the modular, hierarchical nature of information processing. A typical network comprises layers of interconnected units called artificial neurons, each performing a compact computation that contributes to a larger prediction. The basic processing unit takes a set of inputs, applies a set of weights to those inputs, adds a bias, and then passes the result through a non-linear activation function. This nonlinearity is essential: without it, stacked layers would collapse into a single linear transformation, no matter how many layers exist. The training objective is to adjust these weights and biases so that the network’s outputs align with the desired targets across a broad array of examples. In this sense, learning is an optimization problem over high-dimensional parameter spaces, tackled with iterative algorithms and vast computational resources. For practitioners, understanding these mechanisms is a prerequisite to designing robust models that generalize beyond training data. The following sections unpack how this structure translates into practical capabilities and how different components interact in real systems. The material below also connects to widely used frameworks and tools such as TensorFlow and PyTorch, which empower researchers to experiment with loss functions, optimizers, and activation choices. You can explore more about the practical implications of these decisions in references linked throughout this article, including industry leaders like OpenAI, NVIDIA, and Google AI, whose work informs both theory and application.
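The per-neuron computation described above (weighted sum, bias, activation) can be sketched in a few lines of plain Python. This is a minimal illustration, not how TensorFlow or PyTorch implement layers; the function names `neuron` and `dense_layer` and all weight values are made up for the example.

```python
def relu(z):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation=relu):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

def dense_layer(inputs, weight_rows, biases, activation=relu):
    """A layer is a list of neurons; each row of weights defines one neuron."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]

# Illustrative values only: two inputs, a hidden layer of two neurons,
# and a single linear output neuron.
x = [1.0, 2.0]
hidden = dense_layer(x, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0])
output = dense_layer(hidden, [[1.0, 2.0]], [0.5], activation=lambda z: z)
print(hidden, output)
```

Real frameworks express the same computation as matrix multiplications over batches of inputs, which is what makes it efficient on GPUs.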

- Neurons aggregate inputs via weighted sums and nonlinear activations to produce outputs.

- Layers create a hierarchical representation, from low-level features to abstract concepts.

- Activation choices (ReLU, sigmoid, tanh, etc.) shape the network’s expressiveness and training stability.

- Backpropagation computes gradients that guide the adjustment of weights and biases to reduce a loss function.

- Effective learning requires a balance of data volume, regularization, and computational resources to prevent overfitting.

| Component | Role | Typical Example |
|---|---|---|
| Input Layer | Receives raw data; passes it to hidden layers for processing. | Pixel values for an image, word embeddings for text. |
| Hidden Layers | Transform representations through learned weights. | Intermediate features in image recognition, syntax features in NLP. |
| Output Layer | Produces predictions, probabilities, or decisions. | Class probabilities in image classification, regression value in forecasting. |
| Weights and Biases | Parameters adjusted during training to fit data. | Matrix entries that scale and shift inputs at each layer. |
| Activation Function | Injects nonlinearity to enable complex mappings. | ReLU, sigmoid, tanh, GELU. |
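For reference, the four activation functions named in the table can be written directly from their standard definitions. The sketch below uses only Python's `math` module; the GELU shown is the exact form based on the Gaussian error function, whereas libraries sometimes use a tanh-based approximation instead.

```python
import math

def relu(z):
    """max(0, z): cheap to compute, zero gradient for negative inputs."""
    return max(0.0, z)

def sigmoid(z):
    """1 / (1 + e^-z), written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Hyperbolic tangent: like sigmoid but centered on zero, range (-1, 1)."""
    return math.tanh(z)

def gelu(z):
    """Exact GELU: z times the standard normal CDF evaluated at z."""
    return 0.5 * z * (1.0 + math.erf(z / math.sqrt(2.0)))

for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), round(sigmoid(z), 4), round(tanh(z), 4), round(gelu(z), 4))
```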

To see the full picture, consider a neural network that classifies medical images. The input layer ingests pixel intensity values. Hidden layers progressively extract patterns: edges, then textures, then high-level structures. The final layer outputs a probability for each disease category. Training on labeled images adjusts the weights to minimize a loss function such as cross-entropy. The whole process depends on robust data pipelines, careful validation, and monitoring of metrics such as accuracy and calibration. In this context, the work of leading research groups and companies (OpenAI, Google AI, NVIDIA) illustrates how design choices in architecture and optimization translate into clinical and industrial impact. The broader ecosystem continues to enrich practice: TensorFlow and PyTorch provide the building blocks, and enterprise platforms from AWS Machine Learning and Microsoft Azure AI offer scalable training and deployment. Papers and tutorials from IBM Watson, DeepMind, and Meta AI add to the collective knowledge that practitioners rely on. As you read, consider how activation functions influence gradient flow, how regularization strategies mitigate overfitting, and how data quality shapes model outcomes. The journey from fundamentals to deployment is iterative, collaborative, and dependent on the right combination of theory, tooling, and real-world constraints. For historical context and future directions, see the linked resources, including perspectives on how neural networks have evolved from their early mechanistic roots to the modern, transformer-driven landscape.
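The last two steps of the image-classification scenario, turning the final layer's raw scores into class probabilities and scoring them with cross-entropy, can be sketched as follows. The three logits are hypothetical values standing in for a network's output over three disease categories; no real model or dataset is involved.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_index):
    """Negative log-probability assigned to the correct class."""
    return -math.log(probs[target_index])

logits = [2.0, 0.5, -1.0]  # hypothetical scores for three classes
probs = softmax(logits)
loss_if_class_0 = cross_entropy(probs, 0)  # label matches the top score
loss_if_class_2 = cross_entropy(probs, 2)  # label matches the lowest score
print(probs, loss_if_class_0, loss_if_class_2)
```

The loss is small when the network puts high probability on the labeled class and grows quickly when it does not, which is the signal training uses to adjust the weights.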

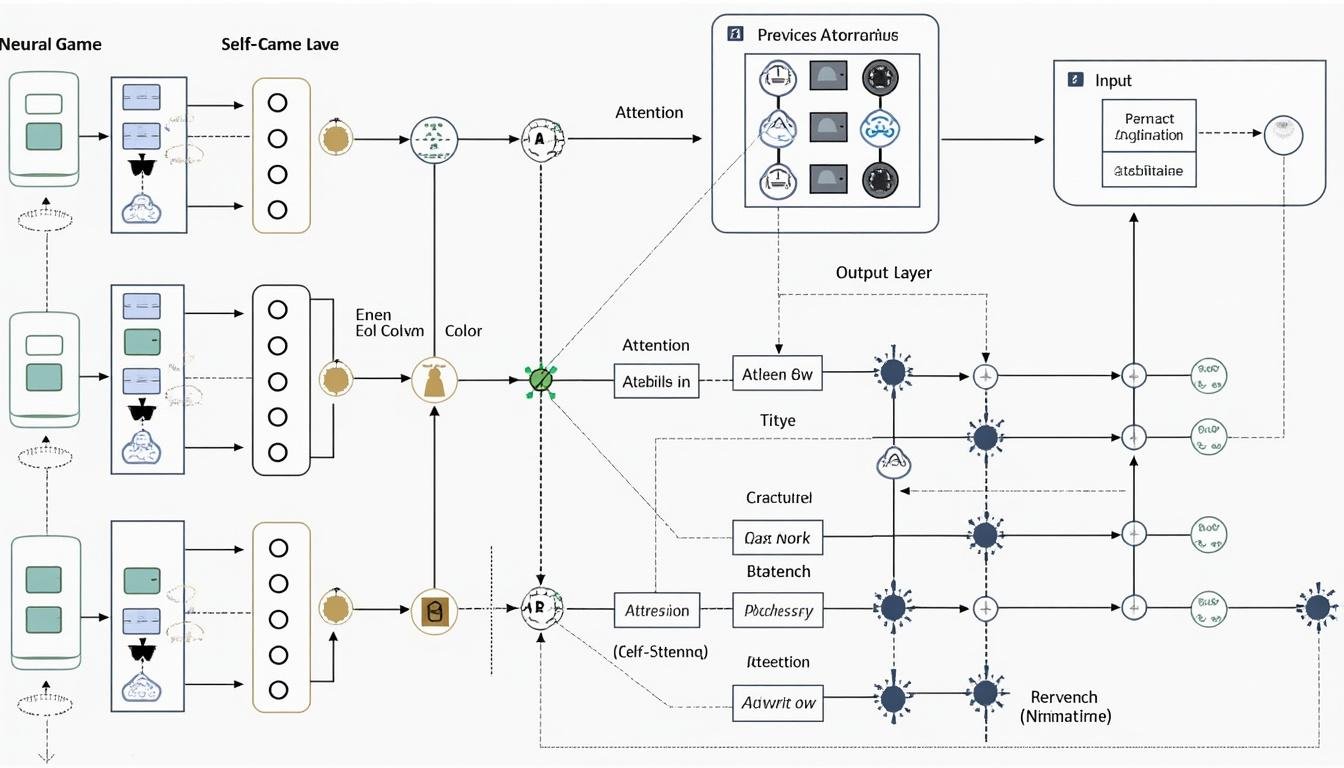

Architectures and Variants: From Feedforward to Transformers and Hybrids

Architectures define what a neural network can learn and how efficiently it can learn it. The simplest lineage begins with feedforward neural networks, where information moves in one direction from input to output. These networks suit tasks with fixed-size inputs whose examples can be treated as independent and identically distributed, such as tabular classification. However, real-world data often exhibit dependencies across time or space, which calls for more sophisticated designs. Recurrent structures maintain a memory of previous inputs, which lets them model sequences, context, and temporal patterns. In parallel, convolutional architectures brought a powerful lens for analyzing visual information, capturing local patterns with weights shared across spatial positions. Today, transformers dominate many AI systems by combining self-attention mechanisms with scalable training regimes, enabling large language models and multimodal capabilities that fuse text, images, and audio. Hybrids blend these ideas to tackle specialized tasks, for example combining CNNs for vision with transformers for language, or integrating recurrent components for time-series data. The rest of this section maps these variants, outlines their typical applications, and discusses trade-offs in efficiency, interpretability, and data requirements. Throughout, the balance between bias, variance, and computation shapes architectural choices. The discussion also touches on open-source ecosystems and commercial platforms that accelerate experimentation, including open benchmarks and industry collaborations with OpenAI, Google AI, NVIDIA, and AWS Machine Learning. The practical takeaway is that no architecture is right for every problem; the choice depends on data structure, task objectives, latency constraints, and deployment context.

For those planning real-world systems, understanding these distinctions helps align research ideas with measurable impact and governance considerations. The field is actively informed by work at DeepMind, IBM Watson, Meta AI, and related research communities, where questions of efficiency, robustness, and safety are shaping future designs. For more, explore the linked resources on convolutional networks, recurrent architectures, and the latest transformer innovations in 2025.
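To make the idea of a recurrent "memory" concrete, here is a minimal sketch of a scalar recurrent update in the style of a simple (Elman) RNN. The weights `w_x`, `w_h`, and `b` are arbitrary illustrative values rather than trained parameters, and practical LSTM or GRU cells add gating on top of this basic recurrence.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# A single impulse followed by zeros: the hidden state keeps a fading
# trace of the first input even though later inputs are zero.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```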

- Feedforward networks for straightforward classification tasks with clear input-output mappings.

- Recurrent networks (RNNs, LSTM, GRU) for sequences such as text, speech, and time-series data.

- Convolutional networks (CNNs) for image and video analysis, with spatial hierarchies and pooling operations.

- Transformers and attention-based models for language understanding, text generation, and multimodal tasks.

- Hybrid architectures that combine CNNs, RNNs, and transformers to handle complex data structures.
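Weight sharing in convolutional networks means one small kernel is reused at every position of the input. The sketch below shows this in one dimension with a hand-picked two-tap kernel; in a real CNN the kernel values are learned, and the operation runs over two-dimensional images with many kernels in parallel. The name `conv1d` is just a label for this example.

```python
def conv1d(signal, kernel):
    """Slide one shared kernel across the signal (no padding, stride 1)."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

# Hand-picked kernel that responds to changes between neighboring values.
edge_detector = [-1.0, 1.0]
signal = [0.0, 0.0, 1.0, 1.0, 0.0]
features = conv1d(signal, edge_detector)
print(features)  # [0.0, 1.0, 0.0, -1.0]: rising edge, then falling edge
```

The same two weights detect the edge wherever it occurs, which is why convolutional layers need far fewer parameters than fully connected layers on the same input.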

| Architecture | Key Feature | Typical Applications |
|---|---|---|
| Feedforward | Unidirectional data flow; simple training dynamics. | Tabular data classification, basic pattern recognition. |
| RNN / LSTM / GRU | Sequential processing; memory of past inputs. | Language modeling, speech recognition, forecasting. |
| CNN | Local receptive fields; weight sharing across spatial dimensions. | Image classification, object detection, medical imaging. |
| Transformer | Self-attention; parallelizable; handles long-range dependencies. | Language translation, summarization, multimodal tasks. |
| Hybrid / Multimodal | Cross-domain integration; flexible architectural patterns. | Video captioning, visual question answering, robotics. |
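The self-attention entry in the table can be illustrated with scaled dot-product attention, the core operation of the Transformer. This is a deliberately stripped-down sketch: it is single-headed, and it passes queries, keys, and values in directly rather than computing them from learned projection matrices as a real Transformer layer would. The token vectors are made up.

```python
import math

def softmax(scores):
    """Normalize scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Each output is a weighted average of all values, where the weights
    come from the scaled dot product of a query with every key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Three toy token vectors; using them as queries, keys, and values at once
# is a simplification (no learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
print(attended)
```

Because every position attends to every other position in one step, the computation parallelizes well and distant tokens can influence each other directly.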

In practice, practitioners select architectures based on data modality, required latency, and available resources. For image-heavy tasks, CNNs remain a practical choice, though they increasingly appear alongside transformers in end-to-end vision-language models. In natural language processing, transformers define the current frontier, enabling models with billions of parameters to learn rich linguistic and world knowledge. Beyond text, multimodal models fuse signals from speech, text, and images into coherent representations that support tasks such as captioning, question answering, and interactive agents. The surrounding ecosystem is vibrant: open benchmarks, TensorFlow and PyTorch as the primary tooling, and cloud providers such as AWS Machine Learning and Microsoft Azure AI offering scalable training environments that shorten time-to-value for enterprises. Leading players, including OpenAI, Google AI, NVIDIA, IBM Watson, and Meta AI, are pushing both efficiency and capability, driving innovations in transfer learning, fine-tuning, and on-device inference. Every architectural decision propagates downstream: it affects data requirements, training dynamics, and the interpretability of the resulting model. The path from architecture to deployment is iterative and collaborative, anchored in community standards, reproducible experiments, and responsible AI practices. For further reading on architecture-specific innovations, consult the linked resources covering convolutional networks, capsule networks, and transformer refinements across the community and industry literature.

Training Deep Networks: Backpropagation, Optimization, and Generalization

Training a neural network is a careful orchestration of mathematics, data, and computation. The core procedure—backpropagation—propagates error signals from the output layer back through the network to adjust weights and biases. This gradient-based learning process relies on differentiable components, loss functions that quantify prediction error, and optimization algorithms—most famously stochastic gradient descent (SGD) and its modern variants like Adam, RMSprop, and AdamW. The selection of a loss function depends on the task: cross-entropy for classification, mean squared error for regression, or more specialized objectives for structured prediction or generative modeling. Beyond the basic scheme, practitioners implement regularization techniques—dropout, weight decay, data augmentation, label smoothing—to prevent overfitting and encourage the model to generalize to unseen data. The scale of modern models, often trained on massive datasets, introduces practical concerns around compute efficiency, energy use, and distributed training strategies. As models grow, parallelism schemes—from data parallelism to model parallelism and pipeline parallelism—become essential to harness hardware like GPUs and specialized accelerators from NVIDIA. The 2025 landscape features large language models and multimodal systems that require careful engineering to maintain stability, calibration, and safety. The narrative from theory to deployment weaves through loss landscapes, learning rate schedules, and convergence diagnostics, emphasizing that successful training is as much about data as it is about algorithms. In this section, we unpack the training loop’s essential components, then ground them with concrete examples and practical considerations that practitioners encounter in industry settings, including AI platforms from AWS, Google Cloud, and Microsoft Azure AI. 
At every step, the aim is to build models that perform robustly outside the lab, across diverse domains and real-world conditions. For deeper dives into backpropagation techniques and optimization strategies, the linked articles and resources provide extended analyses and case studies, including discussions of how to balance efficiency with accuracy in contemporary AI workflows.
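As a concrete illustration of backpropagation and gradient descent, the sketch below trains the smallest possible two-layer network (one tanh hidden unit, one linear output) on a single example, computing gradients by hand with the chain rule. The starting parameters, input, target, and learning rate are arbitrary choices for the example. A finite-difference check is included because comparing analytic and numerical gradients is a common way to validate a backward pass.

```python
import math

def forward(params, x):
    """Two-layer scalar network: y = w2 * tanh(w1 * x + b1) + b2."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return h, w2 * h + b2

def loss_and_grads(params, x, target):
    """Squared-error loss and its gradients via the chain rule."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    dy = y - target            # dL/dy
    dw2, db2 = dy * h, dy      # output-layer gradients
    dh = dy * w2               # error signal sent back to the hidden unit
    dz = dh * (1.0 - h * h)    # tanh'(z) = 1 - tanh(z)^2
    dw1, db1 = dz * x, dz      # hidden-layer gradients
    return loss, [dw1, db1, dw2, db2]

def numerical_grads(params, x, target, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss_and_grads(up, x, target)[0]
                      - loss_and_grads(down, x, target)[0]) / (2 * eps))
    return grads

params = [0.5, -0.2, 0.8, 0.1]  # arbitrary starting point
x, target = 1.5, 1.0            # a single illustrative training example
losses = []
for _ in range(200):
    loss, grads = loss_and_grads(params, x, target)
    losses.append(loss)
    params = [p - 0.1 * g for p, g in zip(params, grads)]  # gradient descent step

print(losses[0], losses[-1])
```

Frameworks such as TensorFlow and PyTorch automate the gradient computation through automatic differentiation, and optimizers like Adam replace the plain update rule in the last line of the loop.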

- Data preparation and labeling to ensure high-quality supervision.

- Initialization strategies to set a good starting point for learning.

- Learning rate schedules to adapt step sizes during training.

- Regularization methods to prevent overfitting and improve resilience.

- Evaluation protocols to measure generalization and calibration on unseen data.
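Two widely used learning rate schedules, step decay and cosine annealing, reduce to short formulas. The sketch below writes them as plain functions of the training step; the parameter names and default values are illustrative and do not correspond to any particular framework's API.

```python
import math

def step_decay(step, lr0=0.1, gamma=0.5, step_size=10):
    """Multiply the learning rate by gamma every step_size steps."""
    return lr0 * gamma ** (step // step_size)

def cosine_annealing(step, total_steps, lr_max=0.1, lr_min=0.0):
    """Decay smoothly from lr_max to lr_min along half a cosine wave."""
    cosine = math.cos(math.pi * step / total_steps)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cosine)

for step in (0, 10, 25, 50, 100):
    print(step, step_decay(step), round(cosine_annealing(step, 100), 5))
```

Step decay drops the rate abruptly at fixed intervals, while cosine annealing lowers it gradually, which tends to make the final phase of training less sensitive to the exact step count.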

| Training Element | Purpose | Common Techniques |
|---|---|---|
| Loss Function | Quantifies error to minimize during learning. | Cross-entropy, MSE, MAE, focal loss. |
| Optimizer | Guides parameter updates to minimize loss. | SGD, Adam, AdamW, RMSprop. |
| Regularization | Promotes generalization and reduces overfitting. | Dropout, weight decay, data augmentation, early stopping. |
| Learning Rate Schedule | Controls step size over time to promote convergence. | Step decay, cosine annealing, warm restarts, cyclical learning rates. |
| Evaluation | Assesses performance on held-out data and distribution shifts. | Validation metrics, calibration curves, robustness tests. |
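Early stopping, listed above under regularization, ties training length to validation performance: training halts once the validation loss has failed to improve for a set number of epochs. The sketch below applies that rule to a pre-recorded list of validation losses. The function name, the `patience` parameter, and the loss values are illustrative; a real training loop would evaluate the model after each epoch instead.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch seen before training is stopped.

    Training stops once `patience` consecutive epochs fail to improve
    on the best validation loss so far.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # stop: no improvement for `patience` epochs
    return best_epoch

# Hypothetical validation losses: improvement stalls after epoch 2.
val_losses = [0.9, 0.6, 0.5, 0.55, 0.58, 0.4]
print(early_stopping_epoch(val_losses, patience=2))  # 2
print(early_stopping_epoch(val_losses, patience=5))  # 5
```

The two calls show the trade-off: a short patience saves compute but can stop before a later improvement, while a long patience waits it out.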

Training also intersects with practical deployment concerns. Large models demand scalable cloud infrastructure and efficient data pipelines. The ecosystem around training includes AI research blogs and technical notes that explain nuances in optimization and architecture search. Tools like TensorFlow and PyTorch provide extensive APIs for building custom loss functions, implementing complex schedules, and distributing workloads across hardware. In industry contexts, major platforms (AWS Machine Learning, Google AI, Microsoft Azure AI) and NVIDIA accelerators enable teams to iterate rapidly while maintaining governance and reproducibility. Training is also a human practice: it requires careful data curation, annotation quality control, and ongoing monitoring of model behavior as it encounters new scenarios in production. Cases from healthcare to finance demonstrate the importance of interpretability and reliability, which is why teams increasingly invest in accountability frameworks and external audits as part of responsible AI initiatives. For more technical depth on optimization and generalization challenges, see the linked resources and industry reports, which connect theory to practice in 2025 and beyond.

Applications and Real-World Impact in 2025

The practical reach of neural networks extends across sectors, touching everyday tools and groundbreaking research alike. In 2025, enterprises deploy AI systems to automate routine decision-making, augment creative workflows, and enable data-driven insights that were previously out of reach. The integration of Transformers and multimodal models into product pipelines has amplified the ability to understand and generate human-like language, analyze complex visual scenes, and fuse these modalities into coherent outputs. Across industries, organizations rely on leading platforms—Google AI, AWS Machine Learning, Microsoft Azure AI, and NVIDIA-backed pipelines—to train and serve models with the scale and reliability required by production environments. The interplay of OpenAI research with industry deployments continues to accelerate capabilities, while DeepMind and Meta AI push advances in areas like reasoning, planning, and robust learning under uncertainty. These developments are not merely technical; they reshape workflows, business models, and user experiences. This section surveys the major application domains, highlighting use cases, outcomes, and implementation considerations, with concrete examples and practical lessons for practitioners and decision-makers. It also includes a curated set of references and case studies that illustrate real-world impact and the strategic choices organizations make when adopting neural networks at scale. The discussion is anchored by ongoing advances in hardware acceleration, efficient inference techniques, and model governance that together define a practical blueprint for AI in 2025 and beyond. For readers seeking deeper dives, the embedded links to external analyses offer additional context on generative models, vision-language systems, and real-world deployment patterns across sectors such as healthcare, finance, education, and entertainment.

- Automated content generation and editing in media and marketing.

- Medical imaging analytics and decision-support tools for radiology and pathology.

- Autonomous systems and robotics for logistics and manufacturing.

- Voice assistants and multilingual translation improving global communication.

- Fraud detection, anomaly detection, and risk scoring in finance.

| Domain | Neural Network Use | Representative Technologies |
|---|---|---|
| Healthcare | Image analysis, diagnostic aids, and personalized treatment planning. | CNNs for imaging; diffusion models for synthesis; transformer-based NLP for notes. |
| Finance | Time-series forecasting, risk assessment, fraud detection. | RNNs/LSTMs for sequences; transformers for pattern discovery; anomaly detectors. |
| Media & Entertainment | Content generation, dubbing, and interactive storytelling. | Generative models; diffusion-based synthesis; language models for scripts. |
| Industrial & Robotics | Supply chain optimization, control systems, autonomous navigation. | Hybrid CNNs/transformers for perception; reinforcement learning for control. |
| Education | Personalized tutoring, assessment, and accessibility tools. | Adaptive models; multimodal interfaces; language understanding for feedback. |

Real-world examples illustrate how OpenAI and Google AI models, together with NVIDIA hardware, meet the demands of production workloads. Consider how enterprises use AI insights to shape their product timelines, or how CNNs power medical imaging workflows. For multilingual, multimodal scenarios, transformer-based models underpin chatbots, search, and content moderation, while cloud platforms provide the scale required to train and deploy these systems responsibly. The synergy between industry leadership and academic breakthroughs is visible in ongoing partnerships and open-source projects, each contributing to more capable, efficient, and fair AI. For practical examples and case studies, review the linked materials that trace how neural networks translate research into value across sectors.

Challenges, Ethics, and The Road Ahead for AI

Despite remarkable progress, neural networks encounter persistent challenges that shape the trajectory of AI development. Interpretability remains a central concern: many models operate as “black boxes,” making it difficult to explain decisions in high-stakes contexts such as healthcare or law. Researchers pursue methods to illuminate internal reasoning paths, quantify uncertainty, and provide reliable explanations for predictions. Energy efficiency has grown in importance as models scale; efforts to reduce training and inference costs—through efficient architectures, quantization, pruning, and hardware-level optimizations—are crucial for broad, responsible deployment. Data quality and bias also demand attention. Diverse, well-labeled data reduce systematic errors, while governance frameworks and audit processes help ensure that models behave ethically and meet regulatory expectations. Security considerations—such as robust defenses against adversarial inputs and safeguarding user privacy—are essential for maintaining trust in AI systems. This section surveys these concerns, highlighting practical strategies and policy-oriented perspectives that organizations are adopting in 2025 as they implement neural networks at scale. It also discusses the broader societal implications, including the potential for AI to reshape job markets, education, and creative industries, and how stakeholders—developers, researchers, business leaders, and policymakers—must collaborate to harness benefits while mitigating risks. The journey forward involves a balance of technical innovation, responsible stewardship, and transparent communication with users and communities affected by AI-enabled systems. In parallel, ongoing research in reinforcement learning, adaptive control, and multimodal AI continues to push the boundaries of what neural networks can accomplish, while forums, conferences, and journals disseminate findings that inform practice and policy. 
For readers seeking deeper context on the ethical and practical dimensions of 2025 AI, the included references point to discussions about safety, interpretability, and governance in real-world deployments.

- Explainability and interpretability strategies for complex models.

- Energy efficiency and sustainable AI practices in training and inference.

- Data governance, bias mitigation, and privacy-preserving techniques.

- Security concerns, including robustness to adversarial manipulation.

- Regulatory and societal implications of widespread AI adoption.

| Challenge | Impact | Mitigation Strategies |
|---|---|---|
| Interpretability | Limited insight into decision processes; trust concerns. | Model auditing, explainable AI, feature attribution methods. |
| Energy and Compute | High resource use; environmental and cost considerations. | Efficient architectures, quantization, pruning, hardware optimization. |
| Data Bias | Biased outcomes; fairness concerns across demographics. | Balanced datasets, bias testing, fairness-aware training. |
| Privacy | Exposure of sensitive information in training data or inferences. | Privacy-preserving training, differential privacy, access controls. |
| Security | Vulnerability to adversarial inputs and data manipulation. | Robust training, adversarial testing, secure deployment. |
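Quantization and pruning, two of the efficiency techniques listed above, can each be sketched on a small list of weights. The example assumes symmetric per-tensor int8 quantization and simple magnitude-based pruning, which are common baseline variants; production toolchains offer more refined schemes (per-channel scales, structured sparsity, quantization-aware training). The weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero weights: any scale works
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]

def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]

weights = [0.02, -0.5, 1.27, -0.9, 0.003]  # illustrative values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
pruned = prune_by_magnitude(weights, 0.4)
print(q, scale)
print(restored)
print(pruned)
```

Quantization trades a bounded rounding error (at most half of `scale` per weight here) for a fourfold reduction in storage relative to 32-bit floats, while pruning removes the weights that contribute least to the output.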

Looking ahead, the AI community is exploring directions that promise safer, more capable systems. Capsule networks aim for robustness to spatial transformations, while continued advances in reinforcement learning bring progress in autonomous control and decision-making under uncertainty. Research at DeepMind, OpenAI, Meta AI, and other leading groups informs not only technical trajectories but also the governance and ethical guidelines that help steer deployment toward the public good. For a broader perspective on the future of neural networks, the linked resources include analyses of recurrent architectures, convolutional network refinements, and the role of intelligent agents in complex environments. The practical takeaway is that responsible AI requires balancing ambition with accountability, pairing technical excellence with transparent ethics and governance. The path forward will likely emphasize efficiency, interpretability, and reliable operation across diverse settings, as researchers and industry partners continue to push the boundaries of what neural networks can achieve in 2025 and beyond.

- What are the core limitations of current neural networks, and how can we address them?

- How do transformers reshape the capabilities of language and multimodal AI?

- What governance practices are most effective for responsible AI deployment?

What fundamental components define a neural network?

A neural network consists of input data passed through layers of artificial neurons. Each neuron applies weights and biases, uses an activation function to introduce nonlinearity, and propagates its output to subsequent layers. Training adjusts these weights to minimize a loss function via backpropagation and optimization algorithms like Adam or SGD.

Why are activation functions essential in neural networks?

Activation functions introduce nonlinearity, enabling the network to model complex relationships. Without them, multi-layer networks collapse into a single linear transformation, limiting expressiveness. Common choices include ReLU, sigmoid, tanh, and GELU.
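The collapse of stacked linear layers can be checked numerically. In the sketch below, two weight matrices applied in sequence give exactly the same result as their product applied once, while inserting a ReLU between them changes the output. The matrices and input are arbitrary small values, and biases are omitted for brevity (with biases, the stack collapses to a single affine map instead).

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

W1 = [[1.0, 2.0], [0.0, -1.0]]  # first "layer", no activation
W2 = [[3.0, 1.0]]               # second "layer"
x = [1.0, 1.0]

stacked = matvec(W2, matvec(W1, x))        # two linear layers in sequence
collapsed = matvec(matmul(W2, W1), x)      # one equivalent linear layer
with_relu = matvec(W2, [max(0.0, z) for z in matvec(W1, x)])

print(stacked, collapsed, with_relu)  # [8.0] [8.0] [9.0]
```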

How do transformers differ from traditional CNNs or RNNs?

Transformers use self-attention to weigh the relevance of all input positions, enabling parallel processing and capturing long-range dependencies. This makes them highly effective for language tasks and multimodal data, often outperforming older architectures on large-scale datasets.

What role do cloud platforms play in training large neural networks?

Cloud platforms provide scalable compute, storage, and orchestration for distributed training. They enable researchers to experiment with bigger models, manage data pipelines, and deploy models with robust monitoring and governance.

How does 2025 AI balance performance with ethics and safety?

The field emphasizes explainability, fairness, privacy, and robust security. Researchers and organizations pursue transparent evaluation, bias mitigation, and governance frameworks to align AI systems with societal values while maintaining high performance.