In brief

- The power of Convolutional Neural Networks (CNNs) lies in learning hierarchical representations that transform raw image data into meaningful concepts, from edges to objects, with remarkable efficiency and scalability.

- Core ideas such as local receptive fields, parameter sharing, and spatial invariance underpin CNN success across vision tasks, enabling robust object recognition, segmentation, and scene understanding.

- In practice, CNNs are deployed with modern frameworks and hardware accelerators, including TensorFlow, PyTorch, and NVIDIA GPUs, often leveraging OpenCV for pre/post-processing.

- The field is evolving with directions like capsule networks, efficient CNN architectures, and edge AI, supported by major players from Google AI to AWS Machine Learning and Microsoft Azure AI.

- Real-world applications span healthcare imaging, autonomous systems, and large-scale visual search, frequently requiring careful data handling, augmentation, and transfer learning strategies.

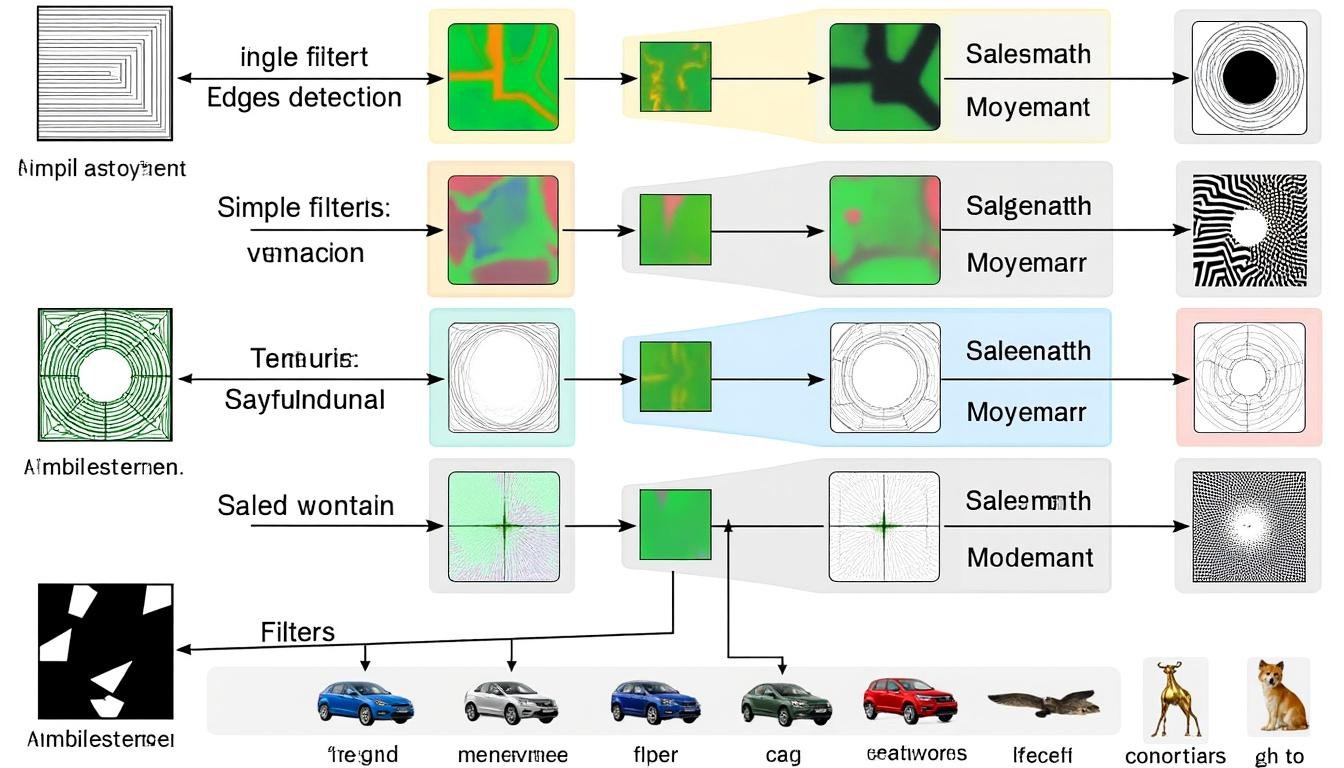

Convolutional Neural Networks (CNNs) have transformed how machines perceive the visual world. A CNN begins with simple filters that detect basic patterns such as lines, textures, and edges, and progressively builds complex representations that can identify faces, animals, or scenes. This deepening hierarchy is a deliberate design choice: it loosely echoes how humans are thought to recognize objects by composing simple features into more abstract concepts. The practical impact is profound, enabling systems to classify images, locate objects, and even generate new visuals with remarkable fidelity. In 2025, CNNs sit at the crossroads of research and industry, integrated into production pipelines through frameworks like TensorFlow and PyTorch, while hardware accelerators from NVIDIA power large-scale training and inference. Open-source ecosystems, including OpenCV, provide tooling for data handling, augmentation, and deployment. The story advances with novel architectures, efficient training regimes, and applications that push CNNs beyond traditional image classification into robust scene understanding and interactive AI systems. For interested readers, capsule networks, a frontier in neural architectures, offer a complementary perspective on representing spatial hierarchies and pose information, and illustrate how alternative ideas are reshaping the field. The broader AI ecosystem, including Google AI, AWS Machine Learning, and Microsoft Azure AI, continues to standardize, scale, and democratize CNN-powered vision capabilities.

Exploring the Power of Convolutional Neural Networks: Foundations, Filters, and Visual Perception

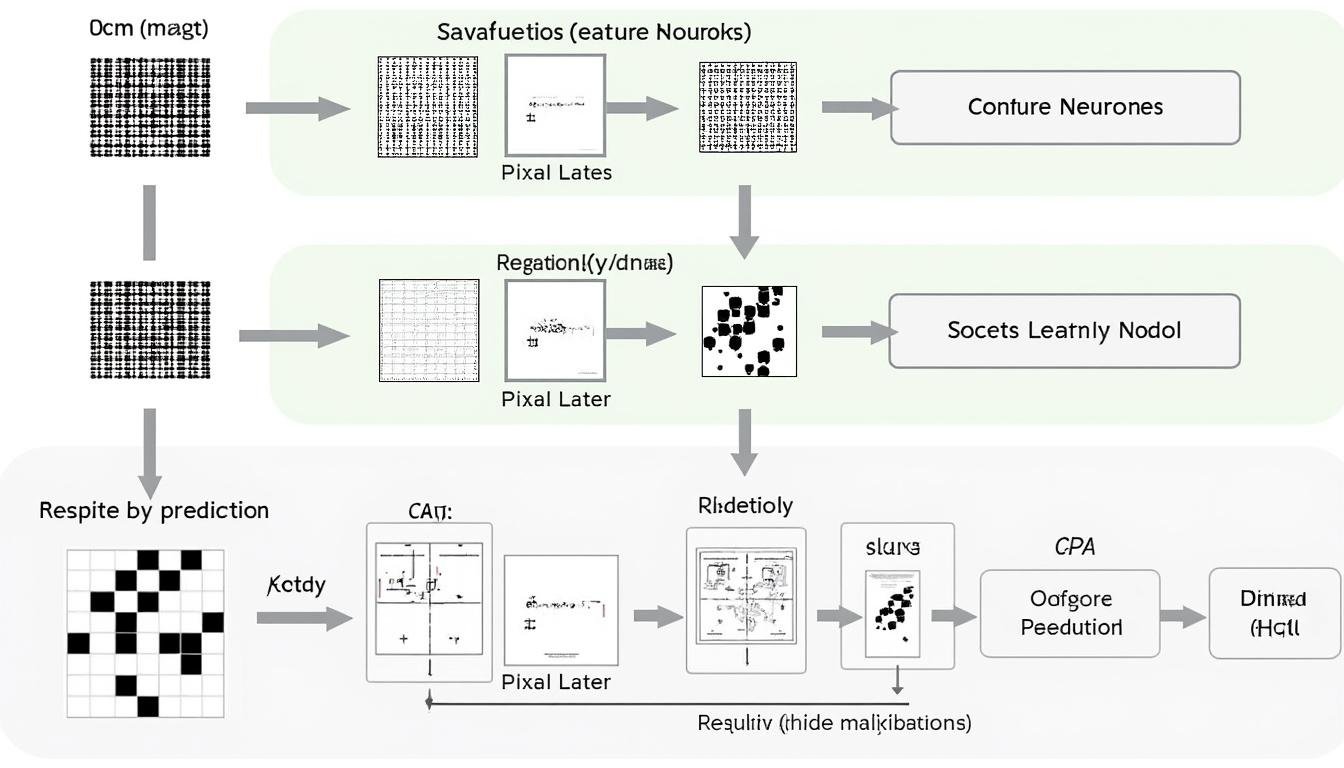

Convolutional Neural Networks (CNNs) represent a paradigm shift in how machines learn to see. At their core, CNNs exploit the idea that an image can be interpreted through localized patterns, discovered by filters trained to respond to particular visual cues. Over successive layers, these cues evolve from rudimentary edges to intricate textures and, eventually, to high-level semantic concepts such as objects or scenes. This multi-layered learning process is supported by a sequence of architectural primitives: convolutional layers that apply learnable filters, nonlinear activations, pooling operations that reduce spatial resolution, and, in many designs, normalization and skip connections that facilitate training. The combined effect is a model capable of recognizing complex visual patterns with impressive generalization across diverse datasets. In practice, CNNs are trained with large labeled datasets using stochastic optimization techniques that progressively tune the network’s parameters to minimize a loss function. The result is a model that can perform tasks ranging from image classification to precise object localization and even image synthesis, as seen in recent generative and perceptual tasks. The power of CNNs is amplified when integrated into end-to-end pipelines leveraging platforms like Keras, PyTorch, and TensorFlow, while hardware accelerators from NVIDIA enable scalable training on large datasets. In the real world, this synergy translates into faster iteration cycles, more robust models, and the ability to deploy CNN-based vision systems across cloud and edge environments. For practitioners, the journey begins with understanding local receptive fields and the principle of parameter sharing, which together explain why a relatively small set of filters can capture a wide array of visual features. 
An accessible way to appreciate this is to imagine filters that first detect edges along different orientations, then combine these edges to form simple shapes, and finally assemble these shapes into complex objects like faces or vehicles. This hierarchical feature learning is a hallmark of CNNs and a primary reason for their enduring relevance in computer vision. To ground the discussion, consider a practical example: a CNN that learns to identify cats in images. Early layers respond to edges and fur-like textures; middle layers combine these cues into parts such as ears, eyes, and body outlines; deeper layers integrate these parts into a high-level concept of a “cat.” This progression illustrates how spatial hierarchies enable robust recognition even under variations in pose, lighting, and background. As the field matures, researchers are exploring improvements that preserve spatial information more efficiently, reduce data and compute requirements, and facilitate transfer learning across domains. The literature and industry benchmarks consistently show CNNs delivering strong performance on tasks such as object classification, detection, and segmentation, often with well-harmonized pipelines that begin with data preprocessing, continue through feature extraction, and end with decision-making layers. For readers seeking deeper technical journeys, capsule networks and other architectural innovations offer one path toward more expressive representations without sacrificing practicality. For further context on contemporary practice, consult ongoing discussions in major AI labs and industry blogs, including open resources from Google AI, DeepMind, and OpenCV. The goal is to understand how CNNs generalize across tasks and how researchers and engineers translate theory into scalable, reliable systems. Edge deployments, cloud-based training, and hardware-aware designs are all part of a broader ecosystem that continues to evolve in 2025 and beyond.
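To make parameter sharing concrete, the sketch below counts learnable parameters for a 3×3 convolution and for a fully connected layer applied to the same input. The 224×224 RGB input and the 64 output channels/units are illustrative assumptions, not values from a specific model.

```python
def conv2d_params(k_h, k_w, c_in, c_out, bias=True):
    """Learnable parameters in a standard 2D convolution layer.

    Each of the c_out filters has k_h * k_w * c_in weights, and the same
    filter is reused (shared) at every spatial position of the input.
    """
    weights = k_h * k_w * c_in * c_out
    return weights + (c_out if bias else 0)


def dense_params(n_in, n_out, bias=True):
    """Learnable parameters in a fully connected layer (no sharing)."""
    return n_in * n_out + (n_out if bias else 0)


# Hypothetical setting: a 224x224 RGB image mapped to 64 channels/units.
H, W, C = 224, 224, 3
conv = conv2d_params(3, 3, C, 64)      # 3*3*3*64 weights + 64 biases
dense = dense_params(H * W * C, 64)    # every pixel wired to every unit
print(f"3x3 conv, 64 filters: {conv:,} parameters")
print(f"dense layer, 64 units: {dense:,} parameters")
```

The convolution needs 1,792 parameters against 9,633,856 for the dense layer, and with padding it still produces a full 224×224×64 feature map, whereas the dense layer yields only 64 numbers.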

| Aspect | What it does | Impact |
|---|---|---|
| Convolution | Applies learnable filters to local regions of the input | Extracts local features (edges and textures in early layers) |
| Activation | Introduces nonlinearity (e.g., ReLU) | Enables complex representations |
| Pooling | Downsamples feature maps | Reduces computation and adds some invariance to small shifts |
| Hierarchical learning | Stacks layers to build high-level concepts | From edges to objects to scenes |
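The activation and pooling rows of the table can be illustrated on a toy feature map. This is a minimal pure-Python sketch (no framework assumed) of element-wise ReLU followed by 2×2 max pooling with stride 2; the 4×4 input values are made up for illustration.

```python
def relu(feature_map):
    """Element-wise ReLU: negative responses are clipped to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2, stride=2):
    """Max pooling over size x size windows (no padding).

    Keeps the strongest response in each window; with size == stride == 2
    the height and width are halved.
    """
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out


fmap = [
    [ 1.0, -2.0,  3.0,  0.5],
    [-1.0,  4.0, -0.5,  2.0],
    [ 0.0, -3.0,  1.5, -1.0],
    [ 2.5,  1.0, -2.0,  0.0],
]
print(max_pool2d(relu(fmap)))  # → [[4.0, 3.0], [2.5, 1.5]]
```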

- Key concepts: local receptive fields, parameter sharing, translation equivariance.

- Frameworks and tools: TensorFlow, PyTorch, Keras, and Caffe.

- Hardware and libraries: NVIDIA GPUs and OpenCV for data handling.

Beyond theory, practitioners balance several practical concerns when deploying CNNs: data quality, class imbalance, and label noise can dramatically influence learning outcomes. Transfer learning—pretraining on large datasets and fine-tuning on target tasks—often yields strong performance with limited labeled data. Regularization strategies such as dropout, weight decay, and batch normalization help models generalize to unseen data. In production, model choices are guided by latency, memory, and energy constraints, especially in edge or mobile contexts. The narrative of CNNs in 2025 thus blends foundational math with pragmatic engineering, ensuring models perform reliably in diverse environments. For those exploring the frontier of spatial representations, capsule networks offer a provocative direction: they aim to preserve pose and part-whole relationships, potentially enhancing robustness to viewpoint changes. See the capsule networks discussion linked above for a broader view on architecture alternatives. The broader ecosystem—spanning Google AI, AWS, Microsoft Azure AI, and academic collaborations—continues to mature, shaping best practices for data pipelines, model governance, and scalable inference.

Deep Dive: How CNNs Learn from Data

At training time, a CNN processes large numbers of images, typically in mini-batches, adjusting its filters to minimize a loss function that measures prediction error. The convolution operation slides a filter across the image, computing a dot product between the filter weights and the underlying image patch at each spatial location. The result is a feature map that highlights where the pattern associated with the filter is detected. What makes CNNs powerful is the compositional nature of these maps: early layers capture simple cues like edges, corners, and textures, while deeper layers capture more abstract concepts such as shapes, object parts, and whole objects. This hierarchical growth enables a flexible representation that generalizes across variations in lighting, scale, and background. The design choices (kernel size, stride, padding, depth, and nonlinearity) control how much information is preserved at each stage and how the spatial structure is transformed. As a practical matter, engineers often begin with standard configurations (e.g., 3×3 kernels, stride 1, padding 1), which preserve spatial resolution, and stack more layers to deepen the network. They then experiment with more aggressive pooling or strided convolutions to trade resolution for speed when needed. The aim is a balance between expressive power and computational efficiency, a balancing act that becomes increasingly nuanced as models grow larger or must run in real time on devices. This exploration is ongoing in both academia and industry, where researchers test novel activations, normalization schemes, and architectural motifs to improve gradient flow and convergence. In parallel, researchers benchmark progress on public datasets with standardized evaluation protocols, enabling fair comparisons across methods and rapid iteration. To illustrate the real-world significance, consider how CNN-based systems power medical imaging assistance, autonomous driving perception stacks, and large-scale visual search engines.
Such systems are increasingly integrated with cloud-based services and hardware-accelerated pipelines, making CNN-based vision accessible to a broad range of users and applications. Teams also emphasize interpretability and traceability, particularly in high-stakes domains, which motivates ongoing research into explainable AI and visualization techniques that illuminate what the filters are detecting and why certain decisions are made. For further reading and practical implementations, explore references from major AI labs and industrial partners who publish tutorials and reference architectures that bridge theory and practice.
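The sliding dot product described above can be written out directly. This is a minimal, deliberately naive single-channel sketch in pure Python; like most deep learning frameworks it computes cross-correlation (no kernel flip). The 5×5 image and the vertical-edge kernel are hypothetical examples, and real frameworks use heavily optimized multi-channel implementations instead.

```python
def conv2d(image, kernel, stride=1, padding=0):
    """Naive single-channel 2D convolution (cross-correlation).

    Slides `kernel` over `image` (both lists of rows) and records the dot
    product between the kernel and the patch under it at each position.
    """
    kh, kw = len(kernel), len(kernel[0])
    if padding > 0:
        # Zero-pad every border so the kernel can be centred on edge pixels.
        width = len(image[0]) + 2 * padding
        image = (
            [[0.0] * width for _ in range(padding)]
            + [[0.0] * padding + list(row) + [0.0] * padding for row in image]
            + [[0.0] * width for _ in range(padding)]
        )
    h, w = len(image), len(image[0])
    out = []
    for i in range(0, h - kh + 1, stride):
        out_row = []
        for j in range(0, w - kw + 1, stride):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            out_row.append(acc)
        out.append(out_row)
    return out


# Dark left half, bright right half: a vertical edge.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
kernel = [[-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0]]

valid = conv2d(image, kernel)               # no padding: 3x3 output
print(valid[0])                             # → [3.0, 3.0, 0.0]
same = conv2d(image, kernel, padding=1)     # padding 1 keeps 5x5
print(len(same), len(same[0]))              # → 5 5
```

The feature map is large where the window straddles the dark-to-bright boundary and zero over the uniform bright region, and `padding=1` with a 3×3 kernel keeps the 5×5 resolution, matching the "stride 1, padding 1" configuration mentioned above.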

Exploring the Power of Convolutional Neural Networks: Architecture, Kernels, and Feature Learning in Practice

Architecture in CNNs defines how layers are arranged to extract and transform features from images. The kernel, or filter, is a small matrix that slides across the input to produce a feature map that highlights a specific pattern. Stride determines how far the filter moves at each step, while padding controls whether the borders are preserved or reduced. Depth (the number of stacked convolutional layers) governs the level of abstraction the network can achieve. Together, these elements enable CNNs to learn a rich hierarchy of features. In practice, practitioners optimize architectural choices for datasets, hardware, and latency requirements. A typical objective is to maximize accuracy while keeping inference time within application constraints. The design space is large: networks can be shallow with wide layers or deep with many small kernels. The trend in modern CNNs is toward depth and architectural innovations that improve information flow, such as residual connections that help gradients travel through many layers without vanishing. In addition to architecture, preprocessing and augmentation play a critical role in successful CNN training. Techniques like random cropping, flipping, color jittering, and geometric distortions simulate diverse viewing conditions, helping the model generalize. When combined with transfer learning, a CNN can adapt pretrained features to new but related tasks with relatively modest data requirements. In this section, we examine core architectural choices through a practical lens, highlighting how engineers select kernels, strides, and pooling schemes to balance bias and variance. They also consider hardware realities: the availability of NVIDIA GPUs, optimization through TensorFlow or PyTorch, and efficient deployment using OpenCV pipelines, all of which shape what is feasible in production. The synergy between algorithm design and software tooling is essential for turning research into reliable products.
For instance, a CNN trained for industrial defect detection must maintain performance under varying lighting and material textures, a challenge addressed by carefully curated augmentation strategies and robust feature extraction. The field also benefits from community-driven resources and benchmarks that compare architectures on standard datasets, offering a shared vocabulary for progress. Readers interested in the latest architectural trends should examine how innovations like depthwise separable convolutions or attention mechanisms influence model efficiency and accuracy. Research groups such as Google AI and DeepMind, along with frameworks such as Caffe, provide context and inspiration for practical implementations. For developers seeking hands-on guidance, tutorials and code repositories frequently illustrate how to configure kernel sizes, strides, and padding to achieve desired receptive fields and feature maps. The bottom line is that architecture and learning dynamics are inseparable: the way a network is built directly shapes how it learns and the kinds of patterns it can recognize. In this spirit, the table below summarizes the core architectural parameters, followed by practical guidelines that focus on real-world trade-offs and measurable outcomes.
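Depthwise separable convolutions, mentioned above and used in MobileNet-style architectures, factor a standard convolution into a per-channel k×k depthwise step followed by a 1×1 pointwise step that mixes channels. The parameter arithmetic below is a sketch that ignores biases; the layer sizes (3×3 kernel, 128 → 256 channels) are illustrative assumptions.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k conv followed by a 1x1 pointwise conv."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1x1 convolution combines the channels
    return depthwise + pointwise


std = standard_conv_params(3, 128, 256)
sep = depthwise_separable_params(3, 128, 256)
print(std, sep, f"{std / sep:.1f}x fewer")  # → 294912 33920 8.7x fewer
```

The saving grows with the number of output channels, which is why this factorization is popular for edge deployment; whether accuracy holds up has to be checked per task.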

| Concept | Definition | Common Choices |
|---|---|---|
| Kernel | Small matrix that slides over the input to produce a feature map | 3×3, 5×5; depthwise variants |
| Stride | Step size of the filter movement | 1 or 2 in typical vision nets |
| Padding | Border handling that preserves or reduces spatial size | "Same" (zero-pad so the output matches the input size at stride 1), "valid" (no padding) |
| Depth | Number of stacked convolutional layers | Many layers for feature hierarchy, with skip connections |
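The stride and padding choices in the table determine the output size through the standard formula out = floor((n + 2p - k) / s) + 1, for input size n, kernel size k, padding p, and stride s (dilation 1 assumed). A small helper, with a 224-pixel input chosen purely as an example:

```python
def conv_output_size(n, k, stride=1, padding=0):
    """Output size along one spatial axis for a convolution or pooling layer.

    out = floor((n + 2 * padding - k) / stride) + 1   (dilation 1)
    """
    return (n + 2 * padding - k) // stride + 1


def same_padding(k):
    """Symmetric padding that keeps the size unchanged at stride 1."""
    if k % 2 == 0:
        raise ValueError("symmetric 'same' padding needs an odd kernel size")
    return (k - 1) // 2


n = 224
print(conv_output_size(n, 3, stride=1, padding=same_padding(3)))  # 'same' → 224
print(conv_output_size(n, 3, stride=1, padding=0))                # 'valid' → 222
print(conv_output_size(n, 3, stride=2, padding=1))                # strided → 112
print(conv_output_size(n, 2, stride=2))                           # 2x2 pool → 112
```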

- Understand receptive fields to predict how much context a layer captures.

- Leverage transfer learning from large datasets to adapt to new tasks quickly.

- Balance latency and accuracy when deploying CNNs in production.
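Receptive fields, mentioned in the first guideline above, can be computed with a simple recurrence over (kernel size, stride) pairs. The sketch below assumes plain convolution and pooling layers without dilation; padding shifts where a receptive field sits but not its size. The layer stacks are hypothetical.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of one unit after a stack of layers.

    `layers` is a list of (kernel_size, stride) pairs applied in order.
    Recurrence: r <- r + (k - 1) * j, then j <- j * s, where j is the
    distance in input pixels between adjacent units of the current layer.
    """
    r, j = 1, 1
    for k, s in layers:
        r += (k - 1) * j
        j *= s
    return r


print(receptive_field([(3, 1)] * 2))   # two 3x3 convs → 5
print(receptive_field([(3, 1)] * 3))   # three 3x3 convs → 7
# conv3x3, pool2x2/2, conv3x3, pool2x2/2, conv3x3
print(receptive_field([(3, 1), (2, 2), (3, 1), (2, 2), (3, 1)]))  # → 18
```

Three stacked 3×3 layers cover the same 7×7 region as a single 7×7 layer while using 27C² instead of 49C² weights (for C input and output channels), one reason small kernels are a common default.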

The practical takeaway is that architecture is not just a blueprint but a tool for shaping what the model learns. By choosing kernel sizes, strides, and padding thoughtfully, engineers control the spatial resolution of feature maps and the network’s capacity to represent complex patterns. Capsule networks, an active research direction, offer an alternative approach to encoding pose and spatial relations, which could enhance robustness to viewpoint changes and occlusions. These ideas are widely discussed in the community and are shaping research directions in parallel with traditional CNNs. For ongoing learning resources, explore advanced tutorials and example projects from leading AI labs and community sites, including references from OpenCV and cloud AI platforms. For a broader context on architectural experimentation, the capsule networks article linked earlier remains a useful companion as you compare different strategies for spatial reasoning. The field’s momentum in 2025 continues to be driven by the need for models that are both accurate and deployable at scale across diverse environments.

Exploring the Power of Convolutional Neural Networks: Training, Regularization, and Robustness at Scale

Training CNNs combines data, computation, and careful optimization. It is not enough to have a powerful architecture; the learning process must be stable, repeatable, and scalable. This section examines practical strategies for training CNNs effectively, including data augmentation, optimization algorithms, regularization, and monitoring. A robust training regime starts with curated datasets that reflect the target domain and augmentations that simulate real-world variation. Techniques such as random cropping, color jittering, flipping, rotation, and noise perturbation expand the effective data distribution, helping the network generalize beyond the training samples. In 2025, data pipelines increasingly leverage cloud resources with distributed training, enabling researchers to scale up to millions of images and complex tasks. Frameworks like TensorFlow, PyTorch, and Keras offer modular, efficient APIs that support gradient accumulation, mixed-precision training, and multi-GPU or multi-node setups. The result is faster training and the ability to explore larger models or more extensive hyperparameter sweeps. However, bigger is not always better: overparameterization can lead to longer training times and diminishing returns. Regularization techniques, including dropout, weight decay, and batch normalization, help mitigate overfitting and stabilize learning dynamics. In time-sensitive applications, practitioners often adopt early stopping rules and learning rate schedules that adapt to the complexity of the task and the size of the dataset. A well-tuned optimization strategy is critical: algorithms such as Adam and its variants, along with SGD with momentum, offer complementary benefits depending on the data regime, noise levels, and task difficulty. The interplay between optimization and architecture shapes how well a CNN learns to extract useful representations from data and how quickly it reaches high performance.
The following practical guide outlines a typical training workflow and the decision points where choices influence final accuracy and efficiency. Results are often summarized in tables and plots that show how hyperparameters affect validation metrics and computing costs. The broader AI ecosystem, including AWS Machine Learning and Microsoft Azure AI, provides scalable environments to experiment with these workflows. For hands-on practice, consider trying a transfer learning recipe with popular CNN backbones on your domain-specific data, and monitor progress using standard benchmarks and visualization tools.
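Random cropping and horizontal flipping, two of the augmentations listed above, can be sketched on an image stored as a list of rows. This pure-Python version is illustrative only: the 6×6 image, 4×4 crop size, and flip probability of 0.5 are assumptions, and production pipelines would typically use library transforms (for example torchvision transforms, Keras preprocessing layers, or OpenCV) instead.

```python
import random


def random_crop(img, size, rng):
    """Cut a random size[0] x size[1] window out of a list-of-rows image."""
    h, w = len(img), len(img[0])
    ch, cw = size
    top = rng.randint(0, h - ch)     # randint includes both end points
    left = rng.randint(0, w - cw)
    return [row[left:left + cw] for row in img[top:top + ch]]


def random_hflip(img, rng, p=0.5):
    """Mirror the image left-to-right with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in img]
    return [list(row) for row in img]


def augment(img, crop_size, rng):
    """Crop, then maybe flip: each call yields a different training view."""
    return random_hflip(random_crop(img, crop_size, rng), rng)


rng = random.Random(0)                            # seeded for reproducibility
image = [[10 * r + c for c in range(6)] for r in range(6)]
for _ in range(3):
    view = augment(image, (4, 4), rng)
    print(view[0])                                # first row of each view
```

Seeding a dedicated `random.Random` instance keeps augmentation reproducible across runs without touching global random state.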

| Training Challenge | Mitigation | Outcome |
|---|---|---|
| Data scarcity | Augmentation and transfer learning | Better generalization with limited labels |
| Overfitting | Dropout, weight decay, data diversity | Improved validation performance |
| Slow convergence | Learning rate schedules, mixed precision | Faster training and stable gradients |
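The optimizer and weight-decay choices discussed above can be seen in a few lines. This sketch implements SGD with momentum and L2 weight decay folded into the gradient, the same update form used by PyTorch's `torch.optim.SGD` with its defaults (`dampening=0`, `nesterov=False`). The quadratic toy objective, learning rate, and step count are illustrative assumptions rather than recommended settings.

```python
def sgd_momentum_step(params, grads, velocity, lr, momentum=0.9, weight_decay=0.0):
    """One SGD-with-momentum update over lists of scalar parameters."""
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        d = g + weight_decay * p      # L2 penalty added to the gradient
        v = momentum * v + d          # running (momentum) direction
        new_params.append(p - lr * v)
        new_velocity.append(v)
    return new_params, new_velocity


def fit(target, steps=300, lr=0.05, weight_decay=0.0):
    """Minimize sum((p - t)^2) from p = 0 to show the optimizer at work."""
    params = [0.0 for _ in target]
    velocity = [0.0 for _ in target]
    for _ in range(steps):
        grads = [2.0 * (p - t) for p, t in zip(params, target)]  # d/dp (p - t)^2
        params, velocity = sgd_momentum_step(params, grads, velocity, lr,
                                             weight_decay=weight_decay)
    return params


print(fit([3.0, -1.0]))                     # converges close to [3.0, -1.0]
print(fit([3.0, -1.0], weight_decay=0.1))   # pulled slightly toward zero
```

With weight decay 0.1, the minimum of (w - t)² + 0.05·w² moves from w = t to w = 2t / 2.1, so the second run settles slightly closer to zero; this shrinkage is the effect that helps control overfitting.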

- Practice recipe: define dataset, apply augmentation, choose backbone, set optimizer (Adam or SGD with momentum), and schedule learning rate.

- Evaluation metric choices matter: precision, recall, mAP for detection tasks, or accuracy for classification.

- Monitoring is essential: track loss curves, validation performance, and resource usage to avoid hidden failures.
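Precision and recall, listed above, follow directly from counts of true positives, false positives, and false negatives. A minimal sketch for binary labels with made-up predictions; detection mAP additionally requires matching boxes by IoU and averaging precision over confidence thresholds and classes, which this sketch does not attempt.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall, and F1 for the given positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0   # of flagged, how many right
    recall = tp / (tp + fn) if tp + fn else 0.0      # of actual positives, how many found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
print(precision_recall_f1(y_true, y_pred))  # → (0.75, 0.75, 0.75)
```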

In production, training CNNs also involves considerations for reproducibility and governance. Data provenance, versioning of datasets and targets, and clear model cards help teams maintain transparency about model capabilities and limitations. The deployment path may include quantization, pruning, or distillation to reduce compute and memory footprints while preserving accuracy. Across sectors, teams leverage NVIDIA accelerators and cloud services to operationalize CNNs at scale. The convergence of hardware, software ecosystems, and best practices makes CNNs a practical choice for large-scale vision tasks—from medical image analysis to autonomous navigation. To deepen your understanding, you can explore tutorials that compare training regimes across frameworks and hardware, as well as case studies that illustrate how teams balance performance, cost, and reliability in real-world deployments.
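Quantization, one of the deployment techniques above, maps float weights to low-precision integers. This is a sketch of per-tensor asymmetric (affine) int8 post-training quantization under simplifying assumptions (one scale and zero point per tensor, round-to-nearest); toolchains such as TensorRT or framework quantization APIs use more elaborate calibration. The weight values are hypothetical.

```python
def quantize_int8(weights):
    """Per-tensor affine (asymmetric) quantization of floats to int8 codes."""
    qmin, qmax = -128, 127
    # Include 0.0 in the range so that real zero maps to an exact integer.
    w_min, w_max = min(min(weights), 0.0), max(max(weights), 0.0)
    scale = (w_max - w_min) / (qmax - qmin) or 1.0    # guard all-zero tensors
    zero_point = int(round(qmin - w_min / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    q = [max(qmin, min(qmax, int(round(w / scale)) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [scale * (v - zero_point) for v in q]


weights = [-0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
print(q, zp)
print(max(abs(a - b) for a, b in zip(weights, restored)), "<=", scale / 2)
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half a quantization step for values inside the range.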

Key operational tips:

- Use transfer learning when data are scarce.

- Favor data augmentation that simulates real-world variation.

- Choose optimization strategies aligned with data characteristics and compute constraints.

| Common Hyperparameters | Typical Values | Notes |
|---|---|---|
| Learning rate | 1e-3 to 1e-4 (common with Adam) | Often warmed up over the first steps, then decayed on a schedule |
| Batch size | 16–256 | Trade-off between memory and stability |
| Optimizer | Adam, SGD with momentum | Chosen based on dataset and task |
| Regularization | Dropout, weight decay | Controls overfitting |
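The learning-rate row mentions warm-up. A common pattern, shown here as a sketch, is linear warm-up followed by cosine decay. The base rate of 1e-3 fits the range in the table, while the 500 warm-up steps, 10,000 total steps, and minimum rate of 1e-5 are assumptions to adjust per task.

```python
import math


def learning_rate(step, total_steps, base_lr=1e-3, warmup_steps=500, min_lr=1e-5):
    """Linear warm-up to base_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        # Ramp up linearly so early, noisy gradients take small steps.
        return base_lr * (step + 1) / warmup_steps
    # Fraction of the post-warm-up budget already used, clipped to [0, 1].
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


total = 10_000
for step in (0, 250, 499, 500, 5_000, 10_000):
    print(step, f"{learning_rate(step, total):.2e}")
```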

Practical case studies and benchmarks

A practical case study might compare a baseline CNN trained from scratch against a pre-trained backbone fine-tuned for a medical imaging task. This scenario often demonstrates how transfer learning reduces the amount of labeled data required while maintaining high accuracy. In another study, researchers examine how different data augmentation techniques affect generalization on a satellite imagery dataset, highlighting the importance of domain-specific augmentations. Across industries, teams increasingly rely on cloud-based pipelines and hardware accelerators to train and deploy models at scale, coordinating with cloud services like AWS Machine Learning and Azure AI to provision resources, monitor training jobs, and manage model versions. The practical conclusions tend to favor robust data workflows, disciplined experimentation, and careful performance tracking to achieve reliable results. For readers seeking actionable resources, the capsule networks discussion (linked earlier) offers a complementary perspective on how alternative architectures handle spatial relationships, which can influence decisions about model design if pose and arrangement of parts are critical.

Exploring the Power of Convolutional Neural Networks: Real-World Applications and Case Studies

Deploying CNNs in real-world settings requires translating theoretical learning into tangible improvements. This section surveys the domains where CNNs have made measurable impact, including medical imaging, autonomous systems, and industrial inspection. The strength of CNNs lies not only in recognition accuracy but also in their ability to operate in diverse environments, tolerate variations in lighting and perspective, and function in near real-time when supported by hardware accelerators. A central theme is the tight integration of CNNs with practical data pipelines, cloud platforms, and edge devices. In medical imaging, CNNs assist radiologists by prioritizing suspicious regions in scans, enabling faster triage and improved diagnostic workflows. In autonomous systems, CNNs contribute to real-time scene understanding, object detection, and tracking, forming a critical layer of perception that interacts with planning and control modules. In manufacturing and agriculture, CNNs enable defect detection and crop monitoring, translating visual signals into actionable insights for quality assurance and precision farming. Across these and other sectors, practitioners rely on a suite of tools and services that enable efficient model development, training, and inference across cloud and edge environments. This includes deep integration with cloud AI services for scalable training, as well as on-device inference for low-latency decisions. A growing trend is the combination of CNNs with other modalities and architectures to build more capable systems, such as fusing visual signals with textual or sensor data to support multimodal AI tasks. The ecosystem driving these capabilities spans both mature industrial providers and cutting-edge research groups. For instance, developers frequently pair CNN-based vision with libraries and platforms such as Google AI, OpenCV, Microsoft Azure AI, AWS Machine Learning, and NVIDIA hardware to realize end-to-end solutions. 
The following table summarizes representative use cases, typical performance considerations, and example metrics.

| Use Case | Typical CNN Role | Key Metrics |
|---|---|---|
| Medical imaging | Lesion detection, segmentation, triage | Sensitivity, specificity, Dice coefficient |
| Autonomous driving | Perception, object detection, lane tracking | mAP, latency, FPS |
| Industrial inspection | Defect detection, quality control | Precision, recall, false positive rate |
| Augmented reality | Real-time scene understanding | Throughput, latency, user experience metrics |
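The Dice coefficient, listed as a medical imaging metric, and the closely related IoU (intersection over union) compare a predicted binary mask with a reference mask. A minimal sketch on made-up 3×3 masks; returning 1.0 when both masks are empty is a convention assumed here, and libraries differ on it.

```python
def dice_and_iou(pred, target):
    """Dice coefficient and IoU for two same-shaped binary masks (lists of rows)."""
    p = [bool(v) for row in pred for v in row]
    t = [bool(v) for row in target for v in row]
    inter = sum(a and b for a, b in zip(p, t))   # pixels marked in both masks
    p_sum, t_sum = sum(p), sum(t)
    union = p_sum + t_sum - inter
    # Convention assumed here: two empty masks count as a perfect match.
    dice = 2 * inter / (p_sum + t_sum) if p_sum + t_sum else 1.0
    iou = inter / union if union else 1.0
    return dice, iou


pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
target = [[0, 1, 1],
          [0, 1, 0],
          [0, 1, 0]]
print(dice_and_iou(pred, target))  # → (0.75, 0.6)
```

The two scores are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank models identically; Dice is simply more lenient for partial overlaps.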

Industry-scale deployments require robust data governance, reproducible training pipelines, and scalable inference. Companies often implement streaming data pipelines, automated model retraining, and continuous evaluation to keep models aligned with changing data distributions. In practice, engineers leverage platform-specific optimizations—such as NVIDIA CUDA kernels, TensorRT optimizations, and specialized inference graphs in frameworks like Keras—to meet stringent latency constraints. Edge AI initiatives further demand compact CNNs and quantization techniques to fit memory and compute budgets without sacrificing accuracy. For readers seeking practical inspiration, consider exploring benchmarked case studies that compare architectures under real-world constraints, and examine how teams adapt CNNs to domain-specific textures and structures. The capsule networks discussion remains a thought-provoking lens for evaluating whether pose-aware representations can provide robustness in dynamic environments where perspective changes are common.

Future Directions in CNNs: Capsule Networks, Efficiency, and Edge Intelligence

The trajectory of CNN research in 2025 points toward more expressive yet efficient architectures, with capsule networks offering one potential path to more robust spatial reasoning. Capsule theories argue that representing the pose and hierarchical relationships of object parts can improve generalization to novel viewpoints, occlusions, and compositional variations. While still an area of active exploration, capsule-based ideas have sparked renewed interest in how networks reason about spatial structure beyond traditional convolution. Alongside this, efficiency-driven innovations—such as depthwise separable convolutions, pruning, and model compression—aim to reduce the computational footprint of CNNs without sacrificing accuracy. These approaches are particularly relevant for edge intelligence, where devices with limited compute and energy budgets must perform reliable perception. The practical implication is a shift in how models are designed, trained, and deployed: architecture, training, and deployment must be conceived as an integrated pipeline that balances accuracy with latency and energy efficiency. In 2025, hardware platforms—ranging from desktop GPUs to embedded accelerators—enable increasingly ambitious CNN-driven products, while cloud providers offer scalable training and inference services to support rapid experimentation and deployment. The ecosystem of tools, libraries, and services continues to mature, with ongoing optimization across software stacks and hardware accelerators. For developers and researchers, a proactive approach combines architectural experimentation with a disciplined workflow for data, reproducibility, and governance. You can explore credible sources and tutorials from established AI labs and industry partners to stay current with trends in CNN design, optimization, and deployment. The capsule networks discussion linked earlier remains a valuable reference for understanding alternate mechanisms to represent spatial relationships.
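Pruning, mentioned among the efficiency techniques, can be as simple as zeroing the smallest-magnitude weights. This sketch shows unstructured magnitude pruning on a flat weight list with an assumed 50% sparsity target; in practice pruning is usually followed by fine-tuning, and unstructured sparsity only speeds up inference when the runtime has sparse kernels.

```python
def magnitude_prune(weights, sparsity):
    """Zero the `sparsity` fraction of weights with the smallest |w|."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


w = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
print(magnitude_prune(w, 0.5))  # → [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
```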

| Direction | Focus | Trade-offs |
|---|---|---|
| Capsule networks | Pose-aware representations and part-whole relationships | Potential robustness vs. training complexity |
| Efficient CNNs | Pruning, quantization, depthwise convolutions | Lower latency and memory, possible accuracy impact |
| Edge AI | On-device inference with limited power | Balance of size, speed, and accuracy |

- Key research questions: Can capsule-inspired architectures consistently outperform traditional CNNs on viewpoint-variant tasks?

- Industry implications: How can edge deployments preserve reliability while reducing energy consumption?

- Platform considerations: What trade-offs arise when choosing between TensorFlow, PyTorch, or Keras for deployment on NVIDIA hardware?

As the field advances, practical integration with cloud and edge ecosystems remains essential. The use of Google AI, NVIDIA hardware, and AWS Machine Learning services demonstrates how organizations transform CNN research into scalable products. Meanwhile, OpenCV continues to provide practical tools for preprocessing and visualization that streamline real-world workflows. The ongoing dialogue around capsule networks and related concepts invites researchers to rethink how to represent and reason about spatial relations, with potential payoffs in areas like robotics and autonomous systems. For those who want to dive deeper into the latest experiments and case studies, the capsule networks article linked earlier is a useful starting point, and supplemental resources from industry leaders help bridge theory and practice in 2025 and beyond.

| Open Questions | Examples to Explore | Potential Impact |
|---|---|---|
| How to efficiently train capsule-inspired models | Benchmark datasets, ablation studies | Informs feasibility for production |
| Best practices for edge deployment | Quantization, pruning, hardware-aware design | Lower latency with minimal accuracy loss |
| Interpretability of spatial reasoning | Visualization, saliency, and part-based explanations | Increases trust and governance |

What makes CNNs particularly suited for image data?

CNNs exploit local patterns and hierarchical feature learning, enabling efficient representation of images through shared filters and spatially aware operations.

How do I decide between AlexNet-style architectures and modern deep CNNs?

Modern networks emphasize depth, skip connections, and efficiency, but the right choice depends on dataset size, computation, latency requirements, and deployment context.

Can capsule networks replace standard CNNs in practice?

Capsule networks offer potential benefits for pose-aware reasoning, but they are still an area of active research; hybrid approaches may provide pragmatic gains today.

Which tools should I learn for CNN deployment in 2025?

Familiarize yourself with TensorFlow, PyTorch, Keras, and hardware-accelerated toolchains from NVIDIA, complemented by OpenCV for data handling.

Where can I find practical examples and benchmarks?

Consult tutorials, official docs, and community benchmarks in AI labs and cloud providers’ documentation; example references include industry blogs and research papers.