In brief

- The 2025 robotics landscape blends AI-powered autonomy with industrial precision, enabling smarter factories, safer operations, and more capable consumer devices.

- Industrial players such as ABB Robotics, Fanuc, and KUKA Robotics are expanding collaborative automation while traditional pioneers evolve toward modular, adaptable systems.

- Consumer robotics—from vacuum robots to service bots—are becoming integrated into homes and public spaces, yet ethical considerations and workforce transitions require thoughtful governance.

- Advances in perception, learning, and decision-making—driven by NVIDIA AI acceleration, edge computing, and new neural architectures—are redefining what robots can understand and how they act.

- The dialogue around societal impact, safety, and governance blends insights from enterprises, researchers, and policymakers, calling for robust standards and responsible deployment.

The future of robotics sits at the intersection of engineering, data, and human-centered design. Robots are no longer merely programmed machines; they are increasingly autonomous agents capable of sensing, reasoning, and adapting to changing environments. This shift accelerates across sectors, linking industrial efficiency with everyday life, while inviting questions about safety, labor, and privacy. In 2025, a growing ecosystem of hardware, software, and services—ranging from Boston Dynamics’s mobile platforms to iRobot home assistants and DJI drones—illustrates a trend: robots are becoming more capable, more interconnected, and more embedded in daily decision-making. The following sections explore key threads shaping this trajectory, from factory floors to living rooms, and from data-driven perception to societal governance.

Autonomy and AI: Innovations Shaping the Future of Robotics and Its Impacts

Robotics is transitioning from predefined routines to adaptive, AI-assisted operation. The core driver is the integration of perception, reasoning, and control into compact, energy-efficient systems. A modern robot may combine high-precision actuators with tactile sensing, vision from cameras and depth sensors, and AI models that learn from experience. This combination enables tasks once thought too dynamic or dangerous for automation—industrial assembly lines that reconfigure themselves, service robots that tailor interactions to individual users, and autonomous machines that collaborate with humans in shared spaces. In practice, this means a shift in how work is organized, how safety is managed, and how value is created across sectors.

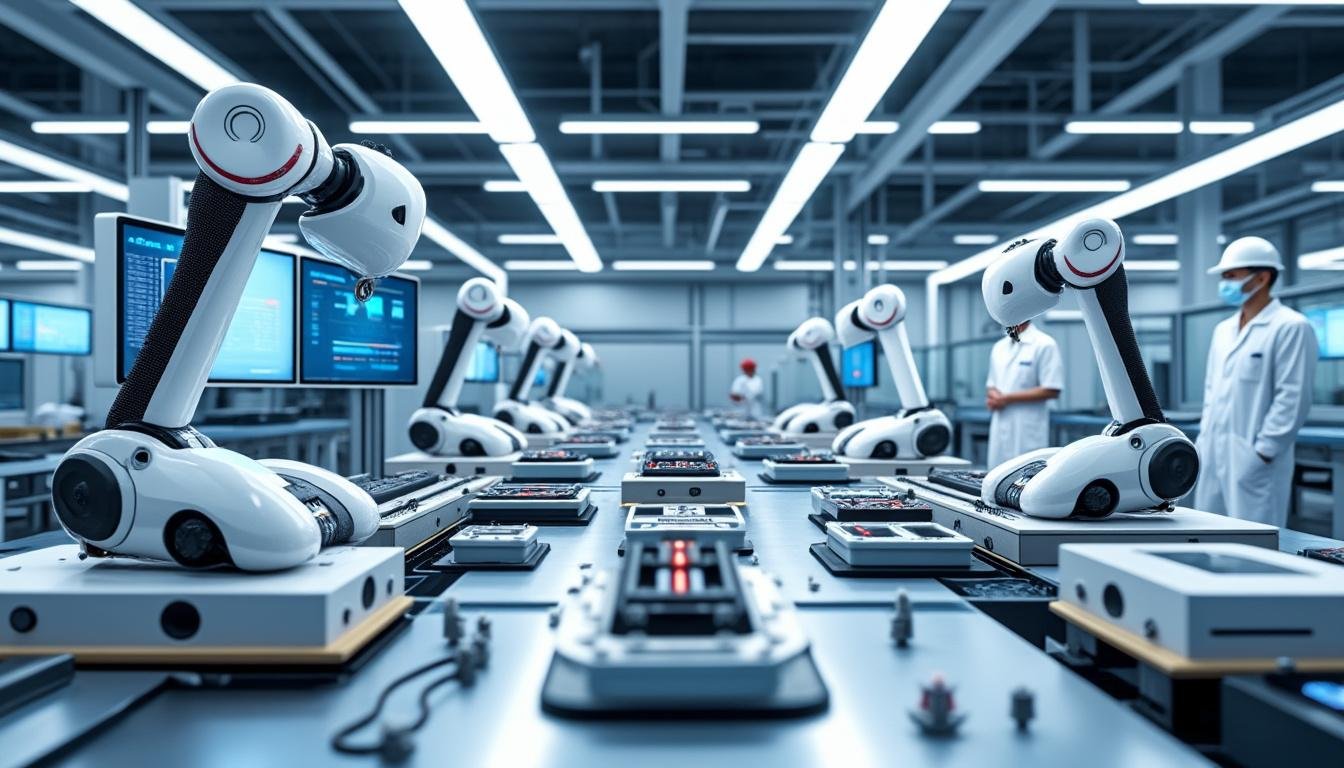

In workplaces, collaborative robots, often called cobots, have progressed far beyond simple payload handling. ABB Robotics, Fanuc, and KUKA Robotics have pushed cobots to operate side by side with humans, supported by advanced sensing and collaborative safety features. These systems typically integrate modular grippers, force sensors, and programmable chains that allow rapid reconfiguration for different products. The result is a production line that can switch from one model to another with minimal downtime. For companies, the payoff includes shorter time-to-market, reduced human risk, and a more flexible resource base. The trade-off is a need for strong governance: monitoring AI decisions, ensuring that automated decisions are explainable, and maintaining human-in-the-loop oversight when needed.

Breakthroughs in perception are converging with learning-based control. Vision systems are no longer constrained to simple color or edge detection; they incorporate depth sensing, 3D mapping, and object recognition in cluttered environments. This enables robots to identify objects, obstacles, and human intent. The practical implications are wide: warehouse robots that navigate crowded aisles, service robots that interpret social cues, and maintenance robots that detect anomalies in real time. For users, this translates into more intuitive interactions and safer autonomous behaviors, but it also raises questions about data privacy and the collection of behavioral signals in shared spaces. The literature and current industrial deployments point toward more robust autonomy paired with better safety guarantees and more transparent decision processes.

Key sub-topics to consider include:

- Adaptive control loops that adjust motion in response to changing payloads.

- Sensor fusion strategies that combine lidar, cameras, tactile data, and map-based localization for robust navigation.

- Edge AI processing that minimizes latency and preserves data privacy by keeping critical inferences on device.

- Modular hardware and software stacks enabling rapid reconfiguration for new tasks.

- Human-robot collaboration principles that prioritize safety, trust, and clear accountability frameworks.
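As a rough illustration of the sensor fusion item in the list above, the sketch below combines independent noisy estimates of the same quantity using inverse-variance weighting, a standard textbook technique. The function name `fuse_estimates` and the sample readings are assumptions made for this example only; production systems typically rely on richer methods, such as Kalman filters over full state vectors.

```python
def fuse_estimates(measurements):
    """Fuse independent scalar estimates by inverse-variance weighting.

    `measurements` is a list of (value, variance) pairs, one per sensor.
    Returns (fused_value, fused_variance). Sensors with lower variance
    (higher confidence) receive proportionally more weight.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    if any(variance <= 0 for _, variance in measurements):
        raise ValueError("variances must be positive")

    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(
        weight * value for weight, (value, _) in zip(weights, measurements)
    ) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings of the distance to an obstacle, in meters.
lidar = (2.0, 0.01)   # precise sensor, low variance
camera = (2.2, 0.04)  # noisier depth estimate
distance, variance = fuse_estimates([lidar, camera])
```

With these made-up readings the fused distance lands close to the lidar value (about 2.04 m), and the fused variance is smaller than either input variance, which is the practical reason for fusing sensors rather than picking one.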

| Aspect | Current State (2025) | Impact on Industry | Representative Examples |
|---|---|---|---|
| Autonomy level | Partially autonomous in structured environments; increasing autonomy in controlled contexts | Reduced downtime, higher throughput, safer operations near humans | Industrial cobots on assembly lines; autonomous mobile robots in warehouses |
| Perception | Multisensor fusion enabling 3D understanding and scene awareness | Improved navigation, object handling, and safety margins | Vision-based grasping in manufacturing; depth sensing in service robots |
| Learning | Learning from simulation-to-reality transfers; online adaptation | Faster deployment of new tasks; better resilience to variability | Capsule networks; CNNs accelerating perception tasks |
| Safety and governance | Formal safety standards evolving; human-in-the-loop remains common | Trustworthy deployments; regulatory alignment | Collaborative safety protocols; transparent AI decisions |

Industries that exemplify these shifts include automotive manufacturing, logistics, and consumer electronics with heavy logistics demands, where ABB Robotics, Fanuc, and KUKA Robotics lead with scalable, modular architectures. For readers exploring sources outside traditional engineering, two readings on AI governance and cognitive science are worth a look: "Abductive logic programming and reasoning" and "Intelligence and cognition in humans and machines". These readings help contextualize how automated systems interpret evidence and make decisions.

Emerging themes in autonomy

There is a rising emphasis on modularity, safety, and interoperability across platforms. A family of robots designed with shared interfaces and standardized communication protocols can be swapped and upgraded with minimal downtime. This is not only a technical challenge but a business strategy: it lowers vendor lock-in and accelerates the adoption curve for small and medium enterprises. In practical terms, firms adopt Universal Robots-type collaborative arms for flexible production, alongside heavy-duty industrial arms from Fanuc or KUKA to handle high-load tasks. When a factory retools for a new product line, the entire line can shift swiftly, while maintaining strict safety standards—an outcome that resonates with the broader push toward sustainable, resilient manufacturing ecosystems.

To illustrate the economic and operational implications, consider the following list of factors shaping deployment in 2025:

- Initial capital expenditure versus long-term operational savings, including energy efficiency and maintenance

- Time-to-value from pilot projects to full-scale rollout

- Workforce retraining and transition programs centered on upskilling employees

- Data governance policies governing sensor data and model updates

- Integration with existing IT/OT systems and ERP platforms
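The first factor in the list above, capital expenditure weighed against operational savings, can be made concrete with a simple payback calculation. The sketch below is a minimal illustration only: the function name and every figure in it are hypothetical, and it ignores discounting, financing, and downtime during rollout.

```python
def simple_payback_years(capex, annual_savings, annual_running_cost):
    """Return the years needed for net savings to repay the upfront cost.

    Returns None when running costs meet or exceed savings, because the
    investment never pays back under this simple model.
    """
    net_annual_benefit = annual_savings - annual_running_cost
    if net_annual_benefit <= 0:
        return None
    return capex / net_annual_benefit


# Hypothetical cobot cell: all figures are invented for illustration.
payback = simple_payback_years(
    capex=120_000,               # purchase and integration
    annual_savings=50_000,       # labor, scrap, and energy savings
    annual_running_cost=10_000,  # maintenance and software licenses
)
print(payback)  # 3.0
```

A fuller analysis would discount future savings and include retraining costs, but even this rough figure helps compare a pilot project against a full-scale rollout.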

For readers seeking a deeper dive into the underlying theory, analogies from linear algebra foundations and capsule networks offer a helpful bridge to practical robotics. The overarching message remains clear: autonomy is not a solitary feature but a system property that emerges from hardware, software, data, and human collaboration.

Key takeaway: As autonomy deepens, robots become more capable teammates, yet responsible governance and thoughtful workforce strategies are essential to harness their benefits without compromising safety or trust.

Industrial autonomy in 2025: case studies

Across regions, multi-vendor deployments illustrate both the value and the complexities of scaling autonomous robotics. In some facilities, a single line uses a mix of ABB Robotics cobots and CLI/NVIDIA-powered perception for seamless production. In others, KUKA Robotics and Fanuc systems coordinate heavy-lifting tasks with centralized monitoring dashboards. The common thread is the push toward data-driven optimization: real-time diagnostics, predictive maintenance, and performance analytics that inform operational decisions beyond the shop floor. The broader implication is a shift from purely hardware-centric investments to software-enabled, end-to-end automation ecosystems.

As a reader, you may wonder how such trends translate to small businesses or startups. The answer lies in modular architectures and services that scale with demand. A small enterprise could begin with a Universal Robots cobot for simple assembly, then incrementally add AI-driven perception and fleet management tools as needs evolve. Importantly, these advances are complemented by credible safety frameworks and workforce training programs designed to minimize disruption while maximizing productivity.

Finally, it is worth noting the ethical and societal context. The deployment of autonomous systems must consider privacy, safety, and potential job displacement. Stakeholders—ranging from local regulators to industry associations—are actively debating how to balance innovation with protections for workers and communities. In this sense, the innovations described here are as much about governance as they are about gears and sensors.

Industrial Robotics in 2025: Innovations Driving Global Manufacturing and Logistics

Industrial robotics has progressed from isolated, repetitive tasks to integrated, intelligent systems capable of learning from operating environments. The redesigns emphasize modularity, connectivity, and resilience. This has a direct impact on how companies structure production, inventory, and distribution. A core driver is the need to reduce cycle times while maintaining quality and safety, especially in high-mix, low-volume contexts where product changeovers occur frequently. In practice, this means robots that can reprogram themselves with minimal human intervention, sensors that anticipate wear or failure, and cloud-connected analytics that optimize entire value chains. The converging forces of hardware improvements, software innovations, and better data governance are redefining what is possible in factory and warehouse settings.
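To make the idea of sensors that anticipate wear or failure more tangible, here is a minimal sketch of one common approach: flagging readings that deviate sharply from a rolling baseline. The function name, window size, threshold, and vibration values are all assumptions chosen for illustration; real predictive-maintenance systems usually combine several signals and trained models.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings far from the rolling baseline.

    A reading is flagged when it lies more than `threshold` sample
    standard deviations from the mean of the previous `window` readings.
    No reading is flagged until the window is full.
    """
    history = deque(maxlen=window)
    flagged = []
    for index, value in enumerate(readings):
        if len(history) == window:
            baseline = mean(history)
            spread = stdev(history)
            if spread > 0 and abs(value - baseline) > threshold * spread:
                flagged.append(index)
        # The reading joins the baseline even when flagged (a simplification).
        history.append(value)
    return flagged


# Hypothetical vibration amplitudes from a motor bearing.
vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 5.0, 1.0]
print(flag_anomalies(vibration))  # [6]
```

Because flagged readings still enter the rolling window, a single spike temporarily widens the baseline; a production system might exclude flagged values or use a more robust statistic such as the median.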

Within this landscape, several brands have established leadership by offering robust platforms, reliable performance, and strong support ecosystems. ABB Robotics remains a frontrunner in industrial automation, known for scalable cobots, articulated arms, and supervisory control systems. Fanuc continues to push precision and speed in high-volume manufacturing, while KUKA Robotics emphasizes modular, configurable lines suitable for complex product families. On the collaborative side, Universal Robots and other lightweight cobots democratize automation for smaller teams, enabling fast ROI and iterative experimentation. The interplay between these players creates a diverse market where customers can mix and match components to suit unique production profiles.

A crucial part of the 2025 landscape is the software that binds hardware into an operating system for the factory. AI-powered planning, sensor fusion, and digital twins give managers confidence that their lines can adapt to demand fluctuations. The adoption of cloud-based analytics complements on-site computing, enabling continuous improvement without sacrificing data sovereignty. Investors and operators alike look for interoperability and security, with standards and certifications guiding safe, scalable deployments. The resulting ecosystem is more than a collection of machines; it is an integrated, intelligent network that extends from procurement to fulfillment, with real-time feedback loops guiding decisions at all levels of the organization.

In the context of education and workforce development, the 2025 industrial robotics trend emphasizes upskilling: engineers learn to program adaptive control, data scientists collaborate with maintenance teams, and technicians gain fluency in cyber-physical systems. The education-to-employment pipeline is essential for sustaining momentum and ensuring that human labor grows alongside automation. For stakeholders, this means sustained investment in training, apprenticeship programs, and industry-academia partnerships that translate research into practical capabilities on the factory floor.

Representative case studies highlight how ABB Robotics, Fanuc, and KUKA Robotics are enabling end-to-end automation with transparent maintenance support. In parallel, NVIDIA-accelerated edge devices are powering more capable perception stacks on factory floors, while Omron sensors contribute to safer, more reliable operation. For readers looking to deepen their understanding, several authoritative articles and datasets are linked in the resources below:

Further reading: Top business and finance trends for 2025 and Foundations of linear algebra in industrial AI.

Industry snapshot

| Area | Technology Spotlight (2025) | Key Benefits | Leading Players |
|---|---|---|---|
| Collaborative robots | Low-cost cobots with safety-rated monitored stops | Faster onboarding; safer human-robot collaboration | Universal Robots; ABB; KUKA |
| Industrial arms | High-speed, precision arms for assembly and welding | Improved yield; reduced cycle times | Fanuc; KUKA |
| Sensor ecosystems | Sensor fusion and predictive maintenance | Lower downtime; better asset life management | Omron; NVIDIA-enabled sensors |
| Analytics and planning | Digital twins and cloud-based optimization | End-to-end visibility; faster scenario testing | ABB; NVIDIA |


Real-world deployments: lessons learned

In practice, deploying industrial robotics requires careful planning around safety, maintenance, and integration. In several high-fidelity facilities, robotics upgrades were paired with continuous improvement programs that engaged shop-floor personnel as co-designers. This collaborative approach fosters a culture of experimentation in which teams test new configurations, measure impact, and iterate quickly. The outcome is not merely a more automated operation, but a more resilient organization that can weather demand swings, supply chain disruptions, and product complexity. The story of 2025 is one of harmonizing speed with reliability and of artfully coordinating people and machines.

For those who want to dive deeper into mechanistic underpinnings, the following resources offer complementary insights: Convolutional neural networks and robotics perception and Linear algebra foundations for robotics.

Consumer Robots and Everyday Life: From iRobot to Household AI Assistants

Consumer robotics sits at the nexus of convenience, safety, and privacy. In 2025, robots in homes and public spaces do more than perform repetitive chores; they learn user preferences, adapt to routines, and provide contextual assistance. The household robot market includes vacuum cleaners, window cleaners, social robots, and specialized devices designed for elder care or education. Companies like iRobot and SoftBank Robotics have refined their product lines, focusing on reliability, ease of use, and meaningful human-robot interactions. Meanwhile, drone platforms from DJI are expanding into delivery, inspection, and creative applications, illustrating how consumer and professional robotics overlap in the marketplace. The 2025 landscape also sees broader adoption of consumer robots in hotels, hospitals, and shopping centers, where service robots support staff and improve guest experiences while adhering to privacy and safety guidelines.

Beyond devices, the consumer robotics ecosystem includes app ecosystems, cloud services, and developer tools that enable customization, automation of routines, and data-driven personalization. The consumer space raises distinct concerns around data collection, consent, and user agency. Responsible deployment involves transparent data practices and clear signals for when a robot is collecting information, as well as robust safety features to prevent accidental harm. The interplay of design, ethics, and technology shapes how people perceive and interact with robotic assistants on a daily basis, influencing trust, acceptance, and ongoing adoption.

In 2025, notable lines of progress are visible in how robots understand social cues, manage energy, and coordinate with humans and other devices. The effort to create intuitive interfaces—such as voice, gesture, or simple on-device controls—reduces friction and accelerates adoption. This matters for household economies, as robots contribute to time savings and improved quality of life. However, it also requires a careful balance of convenience with privacy safeguards and clear user consent. As households increasingly rely on intelligent helpers, the need for reliable updates, secure data practices, and ongoing customer support becomes more important than ever.

Key features driving consumer robotics today include:

- Adaptive cleaning and maintenance routines tailored to home layouts

- Social and assistive capabilities to support aging-in-place and education

- Remote sensing and monitoring for safety and energy efficiency

- Seamless integration with smart home ecosystems and mobile apps

- Durable design and user-friendly maintenance workflows

In addition to the household arena, consumer robotics intersects with entertainment and content creation. Drones from DJI demonstrate how aerial robotics complements imaging, surveying, and creative production. The convergence of AI and robotics in the consumer space prompts ongoing discussions about ethics, safety, and the responsibility of manufacturers to provide clear disclosures and controls for users. For readers seeking broader context, the following articles offer complementary perspectives on cognition and perception in intelligent systems: Convolutional neural networks for vision in robots and Human-computer interaction dynamics.

Key brands and devices to watch include iRobot robotic vacuums, SoftBank Robotics social robots, and DJI aerial robots. Together they form a triad of consumer capabilities that continues to evolve with AI and sensor technology. In practice, a modern home robot suite might include floor-cleaning bots, a social companion, a drone for home security, and smart assistants that help manage daily routines, all coordinated through a unified app and cloud services.

Privacy, safety, and the user experience

As robots become more embedded in daily life, privacy and safety move from afterthoughts to design imperatives. Manufacturers implement on-device processing to limit data leaving devices, alongside user-friendly privacy dashboards that let individuals control what is collected and retained. The user experience hinges on intuitive interactions—natural language, gestures, and context-aware responses—that reduce friction and increase usefulness. Ethical considerations also include ensuring that service robots respect human autonomy and do not attempt to substitute critical decision-making in sensitive situations.

To broaden the discussion, see how ASI frontiers relate to consumer robotics, and how perception technologies intersect with user interfaces in everyday devices.

AI Integration in Perception, Navigation, and Manipulation: The Learning Robots Era

The convergence of perception, planning, and manipulation is revolutionizing how robots interpret the world and act within it. Perception now includes robust object recognition, depth estimation, semantic understanding, and real-time scene analysis. Navigation systems rely on multi-sensor fusion to map environments, predict dynamic changes, and maintain safe operation near people and obstacles. Manipulation—grasping, assembling, and manipulating diverse objects—has advanced through improvements in gripper design, tactile sensing, and learning-based control. Together, these capabilities enable robots to perform complex tasks with higher success rates and fewer human interventions. The learning loop—observe, plan, act, and learn—drives continual improvement, enabling robots to adapt to new environments and tasks without extensive reprogramming.
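The observe, plan, act, and learn loop described above can be sketched with a deliberately tiny example: a one-dimensional robot that moves toward a target while estimating an unknown actuator gain from its own motion. Everything here is an assumption for illustration, including the function name, the proportional planner, and the simulated actuator; it is a toy model, not how any product mentioned in this article works.

```python
def run_learning_loop(target, true_gain, steps=30,
                      gain_estimate=1.0, learning_rate=0.5):
    """Drive a simulated 1D robot to `target` while learning its gain.

    The actuator moves the robot by `true_gain * command`, but the
    controller only knows `gain_estimate`, which it refines each cycle.
    Returns the final (position, gain_estimate).
    """
    position = 0.0
    for _ in range(steps):
        # Observe: measure the remaining distance to the target.
        error = target - position
        # Plan: aim to close half of the remaining distance.
        desired_step = 0.5 * error
        # Act: convert the desired step into a command using the model.
        command = desired_step / gain_estimate
        displacement = true_gain * command  # simulated actuator response
        position += displacement
        # Learn: nudge the gain model toward what was actually observed.
        if abs(command) > 1e-6:
            observed_gain = displacement / command
            gain_estimate += learning_rate * (observed_gain - gain_estimate)
    return position, gain_estimate


# Hypothetical run: the actuator is 50% stronger than the initial model assumes.
final_position, learned_gain = run_learning_loop(target=10.0, true_gain=1.5)
```

In this toy run the robot initially overshoots its planned steps because its model is wrong, then settles near the target as the gain estimate approaches the true value. The same structure, with far richer perception and learned models, underlies adapting to new tasks without extensive reprogramming.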

At the heart of this era are modern AI accelerators and neural architectures that enable on-device inference and fast learning from limited data. NVIDIA and other AI stack providers play a critical role by delivering hardware-accelerated inference that makes real-time perception and control feasible on practical platforms. In addition, research into robust representations—such as capsule networks and advanced CNNs—offers improved generalization and faster adaptation to unseen objects or tasks. These advances translate into practical outcomes: robots that can pick unfamiliar objects of varying shapes, navigate unfamiliar rooms, and collaborate with humans in unstructured environments. The result is a new generation of robots that are not only precise but also perceptive and adaptable, able to function in homes, workplaces, and outdoor settings with increasing reliability.

To deepen your understanding, consult studies and articles on capsule networks and linear algebra as foundational elements for robust 3D perception and control: Capsule networks and linear algebra in robotics. These perspectives illuminate how numerical representations and structured learning drive practical robotic capabilities.

Examples of perception and action in 2025 include autonomous drones for inspection and search tasks, factory forklifts that coordinate with humans and other robots, and home care robots that adapt to household layouts while maintaining safety boundaries. Such examples illustrate the promise and challenges of creating machines that can truly understand complex environments and respond gracefully to human needs.

For more on how humans interact with intelligent systems, see HCI dynamics and ASI frontiers.

Ethics, Economy, and Society: The Responsible Deployment of Robotic Technologies

The rapid advance of robotics raises essential questions about the future of work, safety, privacy, and governance. As robots increasingly participate in decision-making processes—whether in manufacturing, healthcare, or consumer services—societal implications come to the forefront. Policymakers, researchers, and industry players must collaborate to establish cross-cutting standards that ensure safety, accountability, and fairness. This involves not only technical safeguards (such as fail-safe mechanisms, explainable AI, and secure data practices) but also social measures (like retraining programs, income diversification, and equitable access to automation’s benefits). In 2025, the conversation expands to include ESG considerations, workforce transition planning, and the ethical deployment of autonomous systems in public spaces and critical sectors.

Economically, robotics accelerates productivity while reshaping job roles. The adoption of automated systems often accompanies new opportunities in design, programming, maintenance, and data science. Yet there are risks of displacement in routine and manual tasks, especially without proactive retraining initiatives. Therefore, responsible deployment includes clear communication with workers, opportunities for upskilling, and pathways to new employment that leverage the strengths of both humans and machines. In addition, the governance of data generated by robots—sensor data, user interactions, and operational metrics—requires careful handling to protect privacy and ensure transparency. Stakeholders must align on governance frameworks that balance innovation with rights and protections for individuals and communities.

Incorporating the perspectives of diverse sources—ranging from AI ethics literature to practical safety standards—helps frame robust approaches to responsible robotics. For instance, exploring how abductive reasoning informs inference under uncertainty (see linked resources) and how human cognition interfaces with intelligent agents can illuminate how robots reason in ambiguous environments. See the following references for broader context:

- Abductive logic programming and robotics reasoning

- Cognition in humans and machines

- HCI dynamics and robotics interfaces

- Frontiers of artificial superintelligence

- Business and finance trends for 2025

As with any powerful technology, the success of robotics in 2025 and beyond will depend on stewardship. Companies such as Boston Dynamics and SoftBank Robotics pursue safety-forward designs, while NVIDIA and Omron contribute to robust sensing and reliable operation. The broader ecosystem—comprising drones from DJI, collaborative arms from Universal Robots, and a range of industrial bots—offers immense potential when guided by thoughtful policy, ethical considerations, and continuous learning.

For readers seeking practical takeaways, consider how governance, workforce planning, and education will evolve in response to robotics advancements. The future invites collaboration among engineers, business leaders, educators, and policymakers to craft environments where innovation thrives while protecting people and communities. The conversation is ongoing, and the stakes are high—yet the potential benefits in safety, efficiency, and quality of life are equally profound.

FAQ

What is the defining distinction between traditional robots and autonomous robots in 2025?

Autonomous robots operate with AI-driven perception and decision-making, enabling self-directed action in dynamic environments, whereas traditional robots follow pre-programmed sequences with limited adaptability.

Which companies are leading in industrial cobots and why?

ABB Robotics, Fanuc, KUKA Robotics, and Universal Robots lead due to a mix of reliable hardware, modular architectures, strong safety features, and comprehensive ecosystem software that supports rapid deployment and maintenance.

How does AI affect safety and governance in robotics?

AI enhances safety through better perception, planning, and error handling, but requires governance to ensure transparency, accountability, and privacy—especially when robots operate around people or collect data in public or semi-public spaces.

What role do perception and learning play in household robots?

Perception enables robots to recognize objects and understand scenes; learning allows them to adapt to new tasks and environments with less manual programming, improving effectiveness and user experience in homes.