In brief

- CapsuleNet represents a shift from scalar activations to vector-valued capsules that encode instantiation parameters such as pose, orientation, scale, and velocity, enabling richer object representations.

- DynamicRoutingAI, built on routing by agreement, is the core mechanism that decides how information flows between layers, preserving hierarchical relationships instead of collapsing them through pooling.

- In 2025, CapsuleAI-inspired approaches are maturing toward practical vision tasks, efficient training regimes, and interoperability with contemporary AI stacks, including concepts like MatrixNet and CapNetX.

- CapsuleVision and NeuroCaps illustrate how capsule-based architectures could improve object recognition, action understanding, and cross-domain transfer by maintaining spatial and relational structure across layers.

- Despite progress, researchers continue to tackle training stability, routing complexity, and hardware efficiency to scale CapsuleNet systems to industrial workloads.

Capsule Networks mark a potential turning point in neural network design. Rather than compressing everything into a single scalar score, CapsuleNet models maintain a structured representation of objects, their parts, and their arrangements. This article surveys the current state of the art, positions CapsuleNet within the broader AI ecosystem, and considers how CapsuleInnovate, CapsuleFrontier, and related ideas might reshape AI tooling by 2030. The 2025 landscape shows theory and practice converging: researchers refine dynamic routing, engineers integrate capsule modules into existing pipelines, and organizations experiment with deployments that demand robust generalization under transformation, occlusion, and varied viewpoints. The overarching message is that when representations carry pose and relational information, systems can reason about objects in more human-like ways, which supports better generalization and more data-efficient learning. The journey is ongoing, but the path is increasingly clear for products that blend CapsuleVision with modern neural architectures.

CapsuleNet Fundamentals: Architecture, Capsules, and the Pose Paradigm

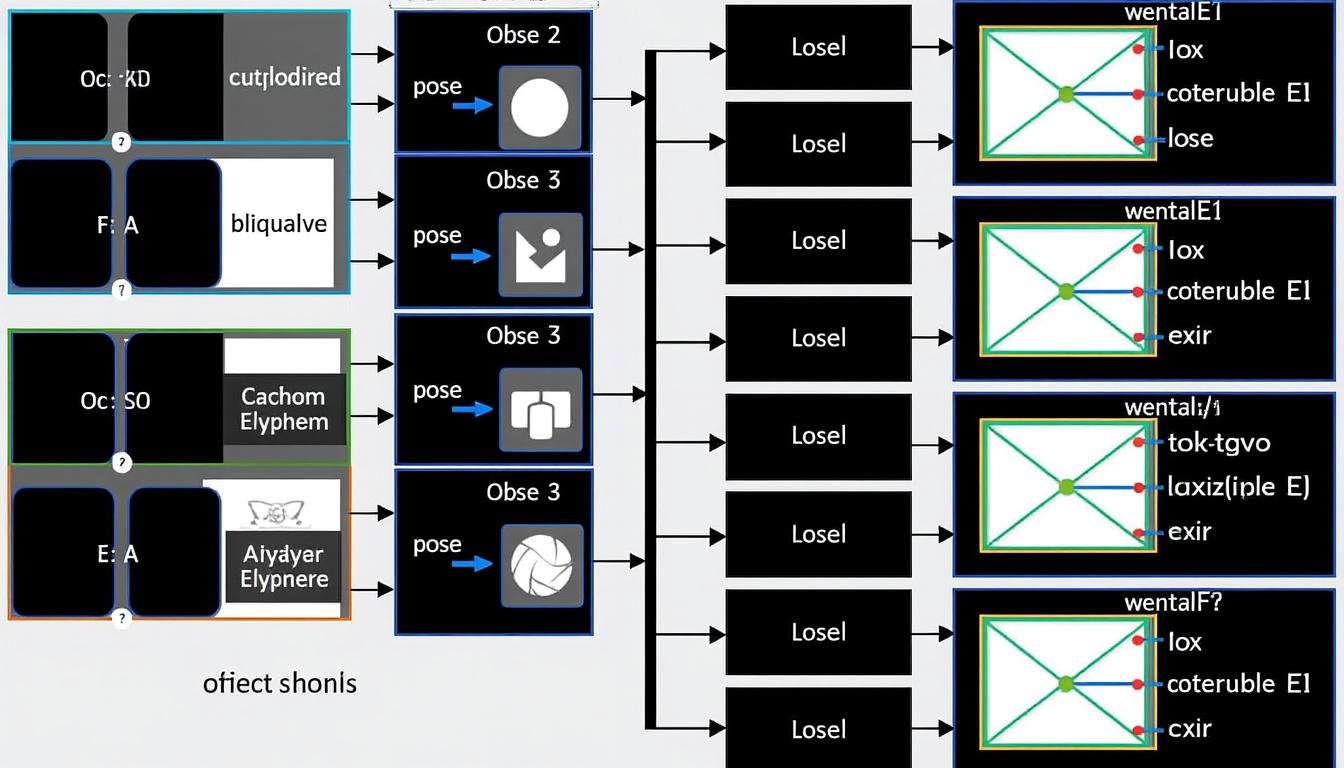

The core intuition behind CapsuleNet is to replace traditional scalar activations with capsules: groups of neurons whose joint activity forms a vector. The vector's orientation encodes properties of an entity, such as its position, size, orientation, and velocity, while its length represents the probability that the entity is present. When capsules in one layer connect to capsules in the next, they do not simply pass a single value forward. Instead, each lower-level capsule multiplies its output by a learned transformation matrix to predict what each higher-level capsule's output should look like. A routing procedure then strengthens the connections whose predictions agree with the resulting higher-level outputs, fostering consistent, relational representations across layers. In practice, this preserves information about the spatial configuration of parts within an object, which is crucial for recognizing objects under viewpoint changes, occlusion, or partial visibility.
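
A minimal sketch of the two operations just described, in plain Python with no framework: the squash nonlinearity from the original CapsNet paper (Sabour, Frosst and Hinton, 2017), which keeps a vector's direction and maps its length into [0, 1), and the prediction step u_hat = W u. The helper names are illustrative, and the matrix `W` is a hypothetical 90-degree rotation chosen for readability; in a trained network it is learned.

```python
import math


def squash(s):
    """Squash nonlinearity: keeps the direction of s, maps its length into [0, 1)."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0] * len(s)
    scale = norm_sq / ((1.0 + norm_sq) * math.sqrt(norm_sq))
    return [scale * x for x in s]


def predict(W, u):
    """Prediction vector u_hat = W @ u: a lower capsule's guess at a higher capsule's output."""
    return [sum(w * x for w, x in zip(row, u)) for row in W]


def length(v):
    """Euclidean length, read as the probability that the entity is present."""
    return math.sqrt(sum(x * x for x in v))


u = squash([3.0, 4.0])          # raw length 5 -> squashed length 25/26, same direction
W = [[0.0, -1.0], [1.0, 0.0]]   # hypothetical transform: a 90-degree rotation
u_hat = predict(W, u)
print(round(length(u), 4), [round(x, 4) for x in u_hat])  # 0.9615 [-0.7692, 0.5769]
```

Note that squashing changes only the length: a long raw vector (a confident detection) ends up close to, but never reaching, length 1.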

To operationalize this paradigm, networks stack capsules in a hierarchy, much as conventional CNNs stack layers, but with a key difference: the transformation matrices between capsule layers are learned during training, while the coupling coefficients that decide where each capsule's output goes are recomputed dynamically for every input. This routing mechanism, often described as routing by agreement, lets lower-level capsules send their outputs to the higher-level capsules that best explain their pose and features. Dynamic routing is a central pillar of CapsuleNet because it offers a principled way to preserve and exploit spatial relationships rather than discarding them through pooling. The design supports more compact representations and can, in principle, improve sample efficiency by exploiting structure within the data. In concrete terms, CapsuleNet can help a model recognize a “face” even when some parts are hidden, as long as the visible parts align with a known spatial configuration.

Architecturally, two ideas do most of the work. First, vector outputs encode a richer set of properties than a single scalar activation. Second, the transformation matrices that map a capsule's activity into predictions capture the part-whole relationships that are critical for generalizing across viewpoints. A typical CapsuleNet stacks capsule layers on top of convolutional feature extraction (the original 2017 CapsNet used one convolutional layer, a layer of primary capsules, and one class capsule per output category), with each capsule layer modeling more abstract concepts than the one below. The bottom capsule layer captures local features and their poses; subsequent layers combine these into higher-level representations of complex objects or actions. This hierarchical organization is loosely inspired by how humans perceive scenes, where local cues combine into coherent wholes. Practical considerations for training CapsuleNet include initialization of the transformation matrices, normalization strategies that stabilize routing, and regularization techniques (such as the reconstruction decoder in the original CapsNet) that curb overfitting while preserving the interpretability of pose information.
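
For reference, the original CapsNet is trained with a margin loss on the lengths of the output capsules (with m+ = 0.9, m- = 0.1, and a down-weighting factor lambda = 0.5 for absent classes), plus a small reconstruction term that acts as a regularizer. The sketch below implements only the margin part for a single-label example; the function name and the list-of-lengths input format are illustrative assumptions.

```python
def margin_loss(lengths, target, m_plus=0.9, m_minus=0.1, lam=0.5):
    """Margin loss for one single-label example.

    lengths[k] is the length of output capsule k (its presence probability);
    target is the index of the true class.
    """
    total = 0.0
    for k, v_len in enumerate(lengths):
        if k == target:
            # Penalize the true class's capsule if it is shorter than m_plus.
            total += max(0.0, m_plus - v_len) ** 2
        else:
            # Penalize (down-weighted by lam) any other capsule longer than m_minus.
            total += lam * max(0.0, v_len - m_minus) ** 2
    return total


print(margin_loss([0.95, 0.05, 0.30], target=0))
```

Here only the third capsule contributes (0.5 * 0.2^2, about 0.02): the true class already exceeds m+ and the second capsule is below m-.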

| Aspect | Description | Impact on Learning | Example |
|---|---|---|---|
| Capsule concept | Group of neurons whose joint activity forms a vector output | Encodes pose, size, orientation, velocity | Lower-level capsules detect features; higher-level capsules model object configurations |
| Pose-aware outputs | Vector representation of object properties | Pose changes with the input (equivariance) while presence, the vector length, stays stable | Recognizing an object across rotations and occlusions |
| Routing mechanism | Dynamic routing of predictions between layers | Preserves structure; reduces information loss from pooling | Object parts align to form a coherent whole |
| Training objective | Margin loss on output-capsule lengths, optionally plus a reconstruction term | Encourages consistent, interpretable representations | Improved generalization on transformed data |

A practical takeaway is that CapsuleNet’s design invites a new kind of interpretability: individual vector dimensions often capture semantically meaningful variations, and routing decisions reveal how parts of an object contribute to the whole. This suits end-to-end pipelines that reason about pose, deformation, and viewpoint in a unified way. For developers, the challenges are stabilizing training, scaling routing computations, and integrating capsule modules with existing hardware and software stacks. Tools and libraries are evolving quickly, with industry-focused efforts under the CapsuleAI and CapsuleInnovate banners emphasizing modularity and interoperability with contemporary frameworks. The potential payoff is a family of models that not only classify but also explain why a prediction makes sense in terms of spatial arrangement and part-whole relationships. This deeper level of reasoning distinguishes CapsuleNet from traditional convolutional architectures and opens the door to more robust, data-efficient AI systems.

Insight: The real value of CapsuleNet emerges when pose and relational information drive decision making, not merely feature presence. This shift has practical implications for fields ranging from robotics to medical imaging, where understanding how parts relate to a whole is as important as identifying the object itself.

Key takeaway: Capsule architecture reframes representation learning around structured, transform-aware capsules, enabling more durable generalization with less data in many visual tasks.

From Capsule to Vision: CapsuleVision and Visual Perception in 2025

CapsuleVision represents a specialized facet of CapsuleNet focused on visual perception, where the goal is to maintain and exploit the geometric and relational structure of scenes. Traditional CNNs rely on pooling layers to reduce spatial dimensions, but pooling can erode the precise pose information that capsules are designed to preserve. CapsuleVision preserves spatial hierarchies by passing pose-encoded vectors upward through the network, allowing higher-level capsules to reason about object arrangements, partial occlusion, and contextual cues. In practice, this means a model can recognize a dog in a cluttered scene not only by detecting features like ears or tails in isolation, but by checking how these parts co-occur and align in a coherent animal form. The broader implication is that CapsuleVision fosters a form of perceptual robustness closer to how humans interpret complex environments, even when signals are noisy or incomplete.
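
A toy illustration of the pooling argument, assuming 1-D feature maps for brevity: non-overlapping max pooling maps a feature at two different positions to the same output, while a capsule-style summary that keeps a (presence, position) pair still tells them apart. This is not a CapsuleVision implementation; `presence_and_position` is a hypothetical stand-in for a pose-carrying capsule.

```python
def max_pool_1d(xs, size):
    """Non-overlapping 1-D max pooling with window `size`."""
    return [max(xs[i:i + size]) for i in range(0, len(xs), size)]


def presence_and_position(xs):
    """Toy capsule-like summary: how strongly the feature is present, and where."""
    best = max(range(len(xs)), key=lambda i: xs[i])
    return xs[best], best


a = [0.9, 0.0, 0.0, 0.0]   # feature detected at position 0
b = [0.0, 0.9, 0.0, 0.0]   # same feature, shifted to position 1

print(max_pool_1d(a, 2), max_pool_1d(b, 2))             # identical: position is lost
print(presence_and_position(a), presence_and_position(b))  # position is kept
```

Pooling is useful precisely because it discards this detail; the capsule view keeps it so that higher layers can check whether parts are arranged consistently.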

To operationalize CapsuleVision, researchers combine modular capsules with routing strategies that emphasize consistency across viewpoints. The approach benefits from structured representations that are more succinct than raw pixels, enabling better transfer learning and requiring fewer labeled examples for novel tasks. In this context, CapsuleNet blends with contemporary visual pipelines, supported by efforts like MatrixNet for multi-domain reasoning and CapsuleFrontier for cross-domain deployment. The 2025 landscape reportedly includes several industry pilots in which CapsuleVision modules are integrated with detection and segmentation heads, with more stable performance under rotation, lighting changes, and occlusion than traditional baselines. If these results hold up, the gains are not merely academic; they matter in fields such as autonomous driving, medical image analysis, and augmented reality, where reliable object understanding is paramount even when data streams are imperfect.

As CapsuleVision matures, researchers are exploring how to fuse capsule representations with attention mechanisms, enabling models to selectively weigh informative capsules and ignore spurious ones. This synergy could unlock more efficient inference, as dynamic routing can focus computational resources on the most promising capsule connections. The interplay between CapsuleVision and momentum-based optimization techniques offers a path toward faster convergence on large-scale datasets, a critical factor for real-world deployment. Meanwhile, toolchains are evolving to support CapsuleNet-style components within broader AI ecosystems, including frameworks that emphasize cross-compatibility with standard neural modules, data pipelines, and hardware accelerators. The future is not a replacement of CNNs but a richer, hybrid approach where capsule representations provide a complementary layer of geometric reasoning that CNNs alone often cannot sustain.

In practice, the benefits of CapsuleVision become evident when evaluating performance on tasks involving pose-sensitive classification, part-based recognition, and action understanding. For instance, recognizing a person performing a sign-language gesture relies on consistent articulation of hand and arm poses across frames, something capsules are well-suited to encode and reason about. The long-term vision is a unified perceptual pipeline that combines the strengths of capsule-based pose encoding with modern training regimes, enabling robust, data-efficient models that generalize beyond their training distributions. As with any architectural shift, the transition will be gradual, with incremental gains in interpretability and reliability that accumulate across applications and domains.

Key takeaway: CapsuleVision elevates visual perception by preserving and exploiting pose information, leading to more stable recognition under transformation and occlusion and enabling more interpretable, data-efficient models that integrate smoothly with existing AI stacks.

DynamicRoutingAI and the Role of Agreement Mechanisms

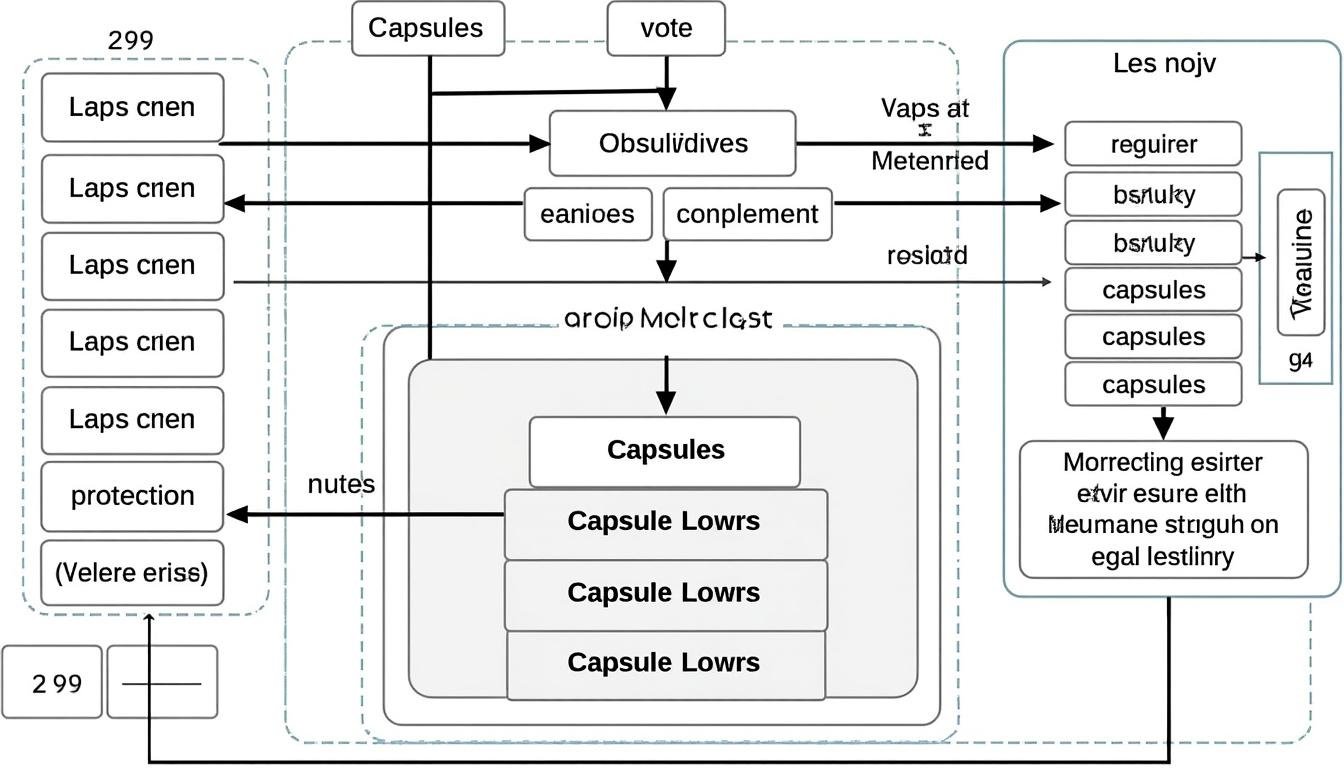

Dynamic routing—often framed as routing by agreement—is the engine powering CapsuleNet’s hierarchical reasoning. At its core, it is a mechanism by which lower-level capsules vote on which higher-level capsules should receive their outputs, guided by how well the predicted instantiations align with the actual higher-level activations. In 2025, dynamic routing remains a focal point for efficiency and scalability. Researchers explore ways to approximate routing computations, prune unproductive connections, and parallelize the process to meet the demands of larger tasks and datasets. The overarching idea is to preserve the benefits of structured representations while keeping training and inference times practical for real-world workloads. The result is a more faithful extraction of relational patterns in the data, enabling the network to generalize from fewer examples and adapt gracefully to transformations that would confound traditional CNN-based systems.

In practice, routing by agreement proceeds in a few steps. Each lower-level capsule i produces a prediction vector for every higher-level capsule j by multiplying its output with a learned transformation matrix. Coupling coefficients, obtained by a softmax over routing logits that start at zero, decide how strongly each lower-level capsule contributes to each higher-level one. Each higher-level capsule squashes the coupling-weighted sum of its incoming predictions, and the agreement (the dot product) between every prediction and that output is added to the corresponding logit, so coherent, consistent instantiations gain weight in the next iteration. Only the transformation matrices are learned by backpropagation; the coupling coefficients are recomputed for each input over a small fixed number of iterations (three in the original CapsNet), which lets the network allocate capacity to the most informative part-whole relationships on a per-example basis. This process yields a more interpretable flow of information, as engineers can observe how specific lower-layer capsules contribute to higher-layer representations. Dynamic routing also presents challenges: routing iterations are computationally expensive, and stabilizing gradient flow through routing layers requires careful architectural choices and regularization. Contemporary research aims to balance expressive power with efficiency, sometimes through hybrid routing schemes or approximations that maintain accuracy while reducing compute.
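
These steps follow the routing procedure of Sabour et al. (2017). Below is a minimal, framework-free sketch in plain Python; the nested-list layout `u_hat[i][j]` (the prediction from lower capsule i for higher capsule j) is an assumption made for readability, and a production implementation would vectorize this over batches on an accelerator.

```python
import math


def squash(s):
    """Shrink a vector's length into [0, 1) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0] * len(s)
    scale = norm_sq / ((1.0 + norm_sq) * math.sqrt(norm_sq))
    return [scale * x for x in s]


def softmax(xs):
    """Numerically stable softmax over a list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat, num_iters=3):
    """Routing by agreement.

    u_hat[i][j] is the prediction vector from lower capsule i for higher capsule j.
    Returns (v, c): the higher-level capsule outputs and the final coupling coefficients.
    """
    n_lower, n_upper, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_upper for _ in range(n_lower)]  # routing logits start uniform
    for it in range(num_iters):
        # Each lower capsule splits its output across higher capsules (rows sum to 1).
        c = [softmax(row) for row in b]
        # Each higher capsule squashes the coupling-weighted sum of its predictions.
        v = [squash([sum(c[i][j] * u_hat[i][j][d] for i in range(n_lower))
                     for d in range(dim)])
             for j in range(n_upper)]
        if it < num_iters - 1:
            # Agreement = dot product of prediction and output; raise logits that agree.
            for i in range(n_lower):
                for j in range(n_upper):
                    b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v, c


# Two lower capsules agree about higher capsule 0 but contradict each other about capsule 1.
u_hat = [
    [[1.0, 0.0], [0.0, 1.0]],   # lower capsule 0
    [[1.0, 0.0], [0.0, -1.0]],  # lower capsule 1
]
v, c = dynamic_routing(u_hat)
print([round(row[0], 3) for row in c])  # coupling of each lower capsule to higher capsule 0
```

In this example, routing shifts both lower capsules' coupling toward capsule 0 (from 0.5 to roughly 0.75 after three iterations), while the contradictory predictions for capsule 1 cancel and leave its output at zero length.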

From a practical perspective, DynamicRoutingAI has implications beyond image classification. In activity recognition, for example, capsules can encode motion pose and temporal transitions, while routing discovers higher-level action patterns from part-based cues. In robotics, pose-aware capsules support state estimation and manipulation planning by preserving spatial semantics across observations. The potential for cross-modal integration—where capsule representations align visual, tactile, and proprioceptive cues—opens avenues for more cohesive and resilient AI agents. However, these ambitions hinge on robust training, scalable routing, and effective integration with hardware accelerators that can handle the unique demands of capsule computations. The field is moving toward pragmatic solutions that preserve the elegance of dynamic routing while delivering the performance required by production systems.

Insight: When routing decisions are transparent and grounded in agreement, CapsuleNet becomes not just a classifier but a reasoning engine for structured perception, capable of explaining why a particular object or action is recognized in a given context.

Key takeaway: Dynamic routing, when efficiently implemented, elevates capsule-based models from clever ideas to practical engines for interpretable, transformation-resilient AI across vision and beyond.

Performance, Efficiency, and Real-World Deployment of CapsuleAI Systems

The real-world appeal of CapsuleNet lies not only in accuracy gains but also in efficiency and robustness. In 2025, practitioners emphasize data efficiency, transfer learning potential, and hardware-aware optimization. CapsuleAI systems are increasingly evaluated on tasks requiring robust generalization to transformations, occlusions, and rare viewpoints. The central question is how to deploy capsule-based modules within scalable AI pipelines that rely on batch processing, streaming data, and edge devices. The answer involves a combination of architectural choices, training curricula, and tooling that facilitate practical adoption. For many teams, capsules provide a natural counterbalance to heavy reliance on massive labeled datasets, enabling models to leverage structured representations that generalize with fewer samples. This trend aligns with broader goals in AI to reduce data dependence while maintaining high performance in dynamic environments.

To illustrate the practicalities, consider a modest deployment scenario in which a CapsuleVision module handles initial object detection and pose estimation on a mobile device, while a larger backend system performs higher-level reasoning with NeuroCaps-driven inferences. Such a split exploits the strengths of capsule representations at the edge (compact, semantically meaningful encodings) while leveraging compute-rich servers for complex reasoning and global scene interpretation. Data pipelines would feed capsule outputs into a range of downstream tasks, including tracking, anomaly detection, and human-robot collaboration. In this context, a crucial design principle is modularity: capsule components should be plug-and-play, configurable, and interoperable with existing ML workflows through well-defined interfaces. The goal is to let teams prototype CapsuleNet features quickly, experiment with different routing strategies, and measure gains in robustness and data efficiency without overhauling their entire stack.
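
One way to make the modularity principle concrete is a small shared interface that every capsule stage implements, so the backend depends only on that interface. The sketch below is hypothetical: `CapsuleOutput`, `CapsuleModule`, `ThresholdEdgeModule`, and `run_pipeline` are illustrative names rather than part of any existing CapsuleNet library, and the edge module is a placeholder, not a real capsule network.

```python
from dataclasses import dataclass
from typing import List, Protocol, Sequence


@dataclass
class CapsuleOutput:
    """One capsule's activity: a label, a pose vector, and a presence probability."""
    label: str
    pose: List[float]
    presence: float


class CapsuleModule(Protocol):
    """Hypothetical plug-and-play interface: any stage mapping inputs to capsule outputs."""
    def forward(self, inputs: Sequence[float]) -> List[CapsuleOutput]: ...


class ThresholdEdgeModule:
    """Placeholder for an on-device capsule stage (hypothetical, not a trained model)."""
    def __init__(self, labels: List[str], threshold: float = 0.5):
        self.labels = labels
        self.threshold = threshold

    def forward(self, inputs: Sequence[float]) -> List[CapsuleOutput]:
        # Treat each input as a presence score and attach a trivial 1-D pose (its index).
        return [CapsuleOutput(label, [float(i)], score)
                for i, (label, score) in enumerate(zip(self.labels, inputs))
                if score >= self.threshold]


def run_pipeline(edge: CapsuleModule, inputs: Sequence[float]) -> List[str]:
    """Backend side: uses only the interface, so edge modules can be swapped freely."""
    capsules = edge.forward(inputs)
    return [c.label for c in sorted(capsules, key=lambda c: c.presence, reverse=True)]


print(run_pipeline(ThresholdEdgeModule(["cup", "hand", "table"]), [0.8, 0.3, 0.9]))
```

Because `CapsuleModule` is a structural `Protocol`, any class with a matching `forward` method can be dropped in, which keeps routing experiments at the edge independent of the backend code.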

| Deployment Factor | CapsuleNet Angle | Practical Implications | Examples |
|---|---|---|---|
| Data efficiency | Exploits pose and relational structure | Better generalization with fewer labeled samples | Medical imaging with scarce annotations |
| Inference behavior | Routing-by-agreement guides flow of information | More interpretable predictions; saliency of parts | Quality control in manufacturing |
| Hardware considerations | Vector outputs and routing require different compute patterns | Promotes accelerator-aware optimizations | Edge devices for robotics or AR |

When benchmarking, teams increasingly compare CapsuleNet variants against CNN baselines on both accuracy and robustness under transformations, while also measuring latency, memory footprint, and energy use. Reported gains tend to be most pronounced in limited-data scenarios, where structured representations help mitigate overfitting. A practical strategy is a phased deployment: start with capsule-based modules for tasks where pose and relational reasoning yield clear benefits, then add capsule layers as data and compute budgets allow. This approach reduces risk and shortens the time to measurable returns while iteratively validating the value of capsule representations in real-world applications.
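
A minimal, model-agnostic sketch of such a comparison: the same harness scores any `predict` function on clean inputs and on transformed inputs, and reports the robustness gap alongside mean per-sample latency. The two toy models and the cyclic-shift transform are hypothetical stand-ins for a position-sensitive and a shift-tolerant model; memory footprint and energy use would need separate, platform-specific tooling.

```python
import time
from typing import Callable, Dict, List, Sequence, Tuple

Sample = Tuple[List[float], int]


def evaluate(predict: Callable[[Sequence[float]], int],
             data: List[Sample],
             transform: Callable[[List[float]], List[float]]) -> Dict[str, float]:
    """Clean accuracy, accuracy under a transform, their gap, and mean latency per sample."""
    start = time.perf_counter()
    clean = sum(predict(x) == y for x, y in data) / len(data)
    latency = (time.perf_counter() - start) / len(data)  # timed on clean inputs only
    transformed = sum(predict(transform(x)) == y for x, y in data) / len(data)
    return {"clean_acc": clean, "transformed_acc": transformed,
            "robustness_gap": clean - transformed, "mean_latency_s": latency}


def shift_right(x: List[float]) -> List[float]:
    """Cyclic shift by one position: a stand-in for a spatial transformation."""
    return x[-1:] + x[:-1]


def position_sensitive(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)    # only looks at one fixed location


def shift_tolerant(x: Sequence[float]) -> int:
    return int(max(x) > 0.5)  # looks for the feature anywhere


data = [([0.9, 0.0, 0.0], 1), ([0.0, 0.0, 0.0], 0)]
for name, model in [("position_sensitive", position_sensitive), ("shift_tolerant", shift_tolerant)]:
    report = evaluate(model, data, shift_right)
    print(name, report["clean_acc"], report["transformed_acc"], report["robustness_gap"])
```

Both toy models are perfect on clean data; only the transformed run exposes the difference, which is why the robustness gap is reported separately from headline accuracy.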

Stakeholders should also consider the role of data governance and safety in capsule-enabled systems. Because capsules encode interpretable facets of the scene, auditability can be higher than with purely scalar activations, offering a path toward compliance and explainability. However, the routing process adds complexity when tracing decision paths. Visualization tools that highlight routing coefficients and agreement signals can help engineers diagnose misclassifications and refine training data accordingly. In this light, CapsuleNet can serve not only as a performance instrument but also as a governance-friendly option for critical domains where transparency matters and input transformations are rich and varied.
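
Before investing in full visualization tooling, a text-only view of the coupling coefficients can already show which parts drove a given higher-level capsule. A minimal sketch, assuming the coefficients are available as a nested list `c[i][j]` (lower capsule i, higher capsule j); `top_contributors`, the part names, and the values are made up for illustration.

```python
def top_contributors(c, upper_index, lower_names, k=2):
    """Rank lower-level capsules by their coupling coefficient to one higher-level capsule."""
    scores = [(lower_names[i], row[upper_index]) for i, row in enumerate(c)]
    return sorted(scores, key=lambda t: t[1], reverse=True)[:k]


# Hypothetical coupling coefficients; each row sums to 1 across the two higher capsules.
c = [[0.75, 0.25],   # "eye" capsule
     [0.70, 0.30],   # "nose" capsule
     [0.20, 0.80]]   # "wheel" capsule
names = ["eye", "nose", "wheel"]

print(top_contributors(c, 0, names))  # [('eye', 0.75), ('nose', 0.7)]
```

If a misclassified "face" turned out to be driven mainly by a "wheel" capsule, that would point to a labeling or data-coverage problem worth investigating.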

Insight: Real-world adoption benefits from modular capsule components, measurable gains in data efficiency, and hardware-conscious optimizations that enable practical deployment without sacrificing the core benefits of structured representations.

NeuroCaps and MatrixNet: Integrating CapsuleTech with Modern AI Ecosystems

NeuroCaps epitomizes the idea of capsule-based cognition integrated with broader AI ecosystems. It envisions capsules as cognitive building blocks that can be composed into larger architectures, supporting a continuum from perception to reasoning. In this perspective, NeuroCaps serves as a bridge between sensory encodings and higher-order inference, enabling seamless interaction with symbolic planners, probabilistic modules, and differentiable reasoning layers. As an architectural philosophy, NeuroCaps invites developers to think in terms of capsule vocabularies and composable capsules, each with a well-defined role in the system’s overall reasoning pipeline. This mindset aligns with the 2025 trend toward modular AI stacks where components can be swapped, upgraded, or extended with minimal disruption to the entire model.

MatrixNet provides a complementary strand by offering a framework for multi-domain reasoning and cross-modal integration. In CapsuleNet contexts, MatrixNet can orchestrate capsule outputs across modalities and domains, enabling joint reasoning about vision, language, and action. Combining CapsuleVision with MatrixNet aims to deliver robust scene understanding and scenario planning in dynamic environments. Practically, this could translate into better performance on tasks such as video understanding, gesture recognition, and human-robot interaction, where the interplay between multiple streams of information is crucial. In 2025, several industry pilots are exploring this fusion to build end-to-end pipelines that interpret complex scenes and support real-time decision-making. The result is an ecosystem where capsule-based representations feed into a larger cognitive engine capable of rule-following, probabilistic reasoning, and planning under uncertainty.

CapNetX is a practical initiative focused on interoperability within AI stacks. By establishing standardized interfaces for capsule modules, CapNetX accelerates integration with other neural components, optimization libraries, and hardware accelerators. This interoperability reduces the friction associated with deploying capsule-based models in production environments and fosters collaboration across teams with diverse toolchains. CapsuleInnovate is a broader movement that emphasizes experimentation, community-driven benchmarks, and open-source resources that help researchers and practitioners explore new routing strategies, loss functions, and capsule configurations. The convergence of these efforts signals a mature, ecosystem-aware wave of capsule-based AI that can scale beyond laboratory experiments into enterprise-grade systems. As with any architectural evolution, the path forward will require careful benchmarking, continuous iteration, and a willingness to adapt to the practical constraints of real-world workloads.

In short, NeuroCaps and MatrixNet signal a future where capsule-based reasoning is not siloed within perception modules but infused across the AI stack, enabling more coherent, explainable, and adaptable intelligent systems. The combined force of these ideas could unlock new capabilities in autonomous systems, robotics, and cross-modal AI—areas where understanding the relational structure of the world matters as much as recognizing discrete objects. The 2025 momentum suggests a trajectory toward integrated capsule ecosystems that remain accessible to developers through standardized interfaces, tooling, and open benchmarks.

Key takeaway: Integrating capsule-based perception with multi-domain reasoning via NeuroCaps, MatrixNet, and CapNetX paves the way for holistic, adaptable AI capable of robust, interpretable decision-making across complex environments.

FAQ

What is CapsuleNet and why is it different from traditional CNNs?

CapsuleNet replaces scalar activations with vector-valued capsules that encode pose and other properties. Dynamic routing preserves spatial relationships between layers, reducing the loss of information that pooling in CNNs can cause and enabling better generalization under viewpoint changes and occlusion.

How does DynamicRoutingAI improve model robustness?

Routing by agreement aligns lower-level capsule predictions with higher-level capsules, emphasizing consistent part-whole relations. This leads to more interpretable representations and better handling of transformations, though it requires careful optimization to balance accuracy and compute.

Can CapsuleNet be deployed in production today?

In some settings, yes, particularly for tasks where pose and relational structure matter, such as robotics, medical imaging, and certain vision systems. Real-world deployments favor modular capsule components, hardware-aware optimizations, and hybrid architectures that combine capsules with conventional networks.

What are common challenges in scaling CapsuleNet?

Routing computations can be expensive; stabilizing training across many capsules and layers is non-trivial; integrating capsules into existing pipelines requires standardized interfaces and tooling. Ongoing work focuses on efficient routing approximations and hardware-aware implementations.
