In brief

- Prioritize a culture that embraces continuous learning, collaboration with AI, and ethical considerations across the entire organization.

- Build a scalable data and AI infrastructure that integrates seamlessly with existing ecosystems from Microsoft, Google Cloud, and AWS while leveraging specialized platforms like NVIDIA for acceleration and OpenAI for generative capabilities.

- Define a clear AI adoption roadmap with governance, KPIs, and risk controls to maximize return on investment (ROI) and maintain stakeholder trust.

- Invest in responsible AI practices, including fairness, transparency, accountability, and data privacy, to navigate regulatory and societal expectations in 2025.

- Realize value through concrete use cases, measurement, and iterative improvements, supported by case studies and industry benchmarks.

In 2025, artificial intelligence has evolved from a promising technology to a core operating model for modern businesses. The competitive landscape has shifted: organizations that treat AI as a strategic asset—rather than a separate IT project—outperform those that attempt to bolt it on later. Across sectors, AI is reshaping how work gets done, from automating routine tasks to augmenting decision-making with predictive insights and intelligent automation. The journey requires more than buying a tool; it demands a holistic transformation that touches people, processes, data, and governance. This article lays out essential steps for businesses to embrace the age of AI, with concrete paths, real-world examples, and practical guidance aligned to the realities of 2025. It highlights the importance of partnerships with technology leaders such as Microsoft, Google Cloud, IBM Watson, Amazon Web Services, Salesforce, NVIDIA, OpenAI, Oracle, SAP, and Adobe, and it points to a growing ecosystem of resources that can accelerate adoption.

AI-Driven Culture and Talent: Training the Workforce for the Age of AI

As artificial intelligence becomes more capable, the first and most enduring investment a business can make is in its people. AI is not merely a technology; it is a new way of working that requires new skills, mindsets, and collaborative practices. The best organizations in 2025 treat training as a continuous, strategic program rather than a one-off workshop. They design learning journeys that blend hands-on projects, internal mentorship, and access to external expertise, so employees can move from basic literacy to practical mastery of AI-enabled workflows. This section explores how to create an AI-ready culture, including concrete steps, exemplars, and the organizational mechanisms that sustain momentum over time.

Key to this transformation is a dual emphasis on upskilling and re-skilling. Upskilling focuses on enabling frontline workers to work more effectively with AI assistants, chatbots, and decision-support tools. Re-skilling targets roles that AI is likely to automate or augment, ensuring that employees can transition into more strategic tasks such as data interpretation, model governance, and human-in-the-loop design. An effective program blends formal training—online courses, bootcamps, and university partnerships—with experiential learning: project sprints, hackathons, and cross-functional problem solving. The aim is to cultivate a culture where experimentation is celebrated, failures are treated as learning opportunities, and ethical considerations are embedded in every pilot.

To operationalize this, organizations should establish a clear progression map for AI competencies that spans three levels: literacy, an intermediate literacy-plus level, and expert. A practical approach is to develop role-based learning tracks aligned to actual job activities. For example, customer service teams can graduate from scripted interactions to dynamic, AI-assisted conversations that handle escalating issues with context-aware responses. Data stewards, product managers, and developers should gain proficiency in data governance, model evaluation, and deployment best practices. A robust program includes governance structures that assign accountability and reward AI-enabled outcomes, ensuring that learning translates into measurable improvements.

In this section, we examine examples and case studies that illuminate what a successful AI-training program looks like in practice. A mid-sized manufacturing firm recently launched an AI literacy initiative that combined micro-learning modules with a hands-on “AI sandbox.” The result was a notable uptick in employee engagement, faster problem solving, and a reduction in manual data entry errors. In the financial services sector, a large bank rolled out a role-based AI-competency framework that linked training to promotions and performance reviews, reinforcing the value of AI capabilities in daily work. Such experiences illustrate that training is not a cost center but a strategic investment that unlocks compound value over time.

To support the learning journey, consider partnerships with major platforms and ecosystems. Microsoft, Google Cloud, IBM Watson, Amazon Web Services, and Adobe offer comprehensive training ecosystems, while Salesforce helps align AI skills with customer-centric outcomes. NVIDIA accelerates practical learning through hardware-conscious labs, and Oracle, SAP, and OpenAI provide domain-specific capabilities that speed up real-world deployments. For deeper reading and perspectives, these external resources offer valuable context on AI’s evolving role in business:

Is AI the New Electric Revolution or More Like the Invention of the Telephone?,

Top Soundtracks to Enhance Your Creativity While Writing AI Blogs,

Blogging Revolutionized: Harnessing the Power of AI.

- Define AI literacy goals by role and business outcome

- Create an AI sandbox with controlled data and guardrails

- Establish a cross-functional AI governance council

- Link training to measurable business metrics

- Invest in diverse talent pipelines and inclusive learning environments

| Aspect | Impact on Business | Example Initiative | Owner |
|---|---|---|---|
| Skills | Improved problem solving, faster decision-making | AI literacy curriculum | HR / L&D |
| Culture | Experimentation with ethical guardrails | AI ethics learning tracks | Senior leadership |
| Governance | Clear accountability and risk management | AI governance committee | Compliance / CTO |
| Measurement | Quantified ROI and impact | AI KPI dashboard | COO / Analytics |

Designing a Learning Journey

To make training effective, design a learning journey that moves learners from curiosity to mastery. Begin with foundational literacy—what AI can and cannot do, common terms, and the ethical implications. Progress to practical application—how to use AI tools in daily tasks, interpret outputs, and incorporate human oversight. Finally, elevate with mastery modules—model governance, data ethics, responsible experimentation, and cross-team collaboration. The journey should be time-bound yet flexible, allowing people to learn at their own pace while maintaining a sense of momentum across departments.

In practice, this means creating short, certificate-bearing modules that participants can complete in 15–30 minutes, followed by longer, project-based assignments that require collaboration with data scientists and domain experts. Integrating real-world pilots—such as automating a customer inquiry workflow or generating insights from a data lake—helps embed learning in daily routines. Moreover, leadership must model the behavior: openly discuss AI pilots, celebrate safe experimentation, and reinforce ethical decision-making.

Key takeaways for this section are:

- Training must align with business outcomes, not just technology capabilities.

- Learning should be experiential, collaborative, and iterative.

- Ethical considerations must be central to every training module.

- Leadership visibility accelerates adoption and trust.

| Learning Stage | Activities | Metrics | Timeline |
|---|---|---|---|
| Foundational | E-learning, reading groups, micro-lessons | Completion rates, knowledge checks | 0–3 months |
| Applied | Projects, pilots, and shadowing | Pilot outcomes, time-to-value | 3–9 months |
| Mastery | Governance, ethics, advanced tooling | Governance readiness, risk metrics | 9–18 months |

Architecting a Scalable AI Infrastructure: From Data to Deployment

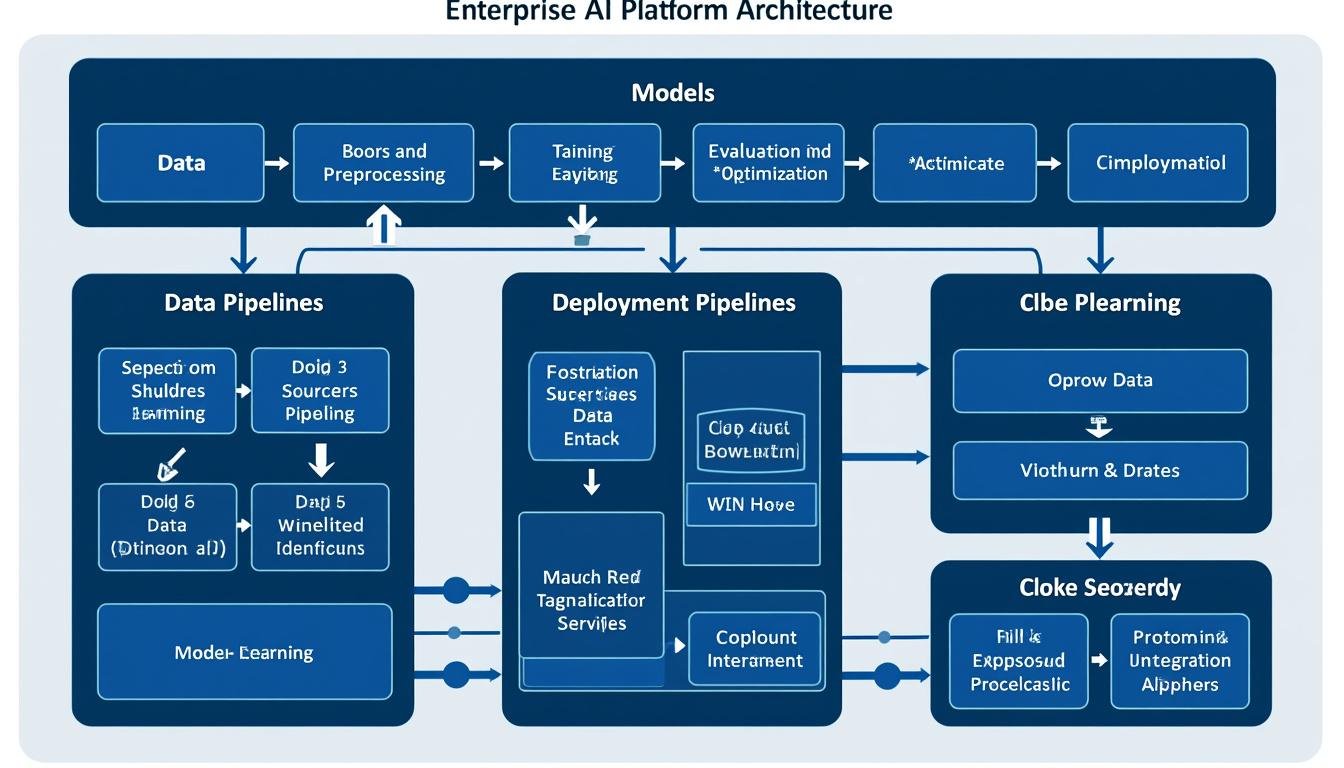

Success in AI hinges on a robust, scalable infrastructure that turns data into reliable, deployable models. An architecture designed for speed, security, and governance enables rapid experimentation while protecting sensitive information and complying with evolving regulations. The 2025 landscape favors hybrid and multi-cloud approaches, where providers such as Microsoft, Google Cloud, and AWS offer complementary capabilities. This section dissects how to build and operate an AI-ready platform, covering data strategy, model governance, deployment pipelines, and security controls.

One reality is that data quality and accessibility drive AI outcomes more than any single algorithm. Organizations must define data ownership, establish data catalogs, implement data lineage, and ensure privacy by design. For instance, customer data may span CRM systems, ERP records, and external signals from social and transactional channels. A unified data fabric with strong access controls reduces duplication, accelerates model training, and improves reproducibility. The right infrastructure supports both traditional analytics and modern generative AI, enabling teams to mix structured data with unstructured content such as text, images, and audio.

From a deployment perspective, modern AI ecosystems emphasize continuous integration and continuous delivery (CI/CD) for AI—often called MLOps. A well-structured pipeline includes data ingestion, feature engineering, model training, evaluation, deployment to production, monitoring, and retraining loops. Real-time inference may rely on edge devices or cloud-hosted services, depending on latency requirements and data sovereignty needs. Collaboration with leading platform providers—Microsoft Azure, Google Cloud, IBM Watson, Oracle Cloud, and SAP—helps align data, governance, and operations with enterprise standards. NVIDIA accelerates model training and inference, while OpenAI provides state-of-the-art models for rapid capability scaling.

To illustrate practical steps, consider a midsize retailer aiming to optimize pricing, demand forecasting, and customer support. The data strategy should integrate point-of-sale data, loyalty programs, inventory feeds, and website interactions. The architecture must support both batch and streaming analytics, enabling hourly demand signals and real-time price adjustments. A deployment plan might start with a pilot in a single category, then gradually extend to the entire catalog, ensuring that monitoring detects drift, bias, or degradation. This approach reduces risk and demonstrates early ROI to stakeholders.
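
One way to make the drift monitoring mentioned above concrete is the population stability index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below is illustrative only: the function name, the bin count, and the sample data are assumptions, not part of any specific platform.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one feature; a larger PSI means a larger shift."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    # Bin edges come from the baseline (training-time) sample.
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        eps = 1e-6  # floor so empty buckets do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical hourly demand signal: baseline versus a shifted production sample.
baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but the right threshold depends on the feature and the business context, so it should be tuned during the pilot.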

In terms of governance, establish a model registry, versioning, and approval workflows for production models. Security controls should enforce least privilege access, encryption at rest and in transit, and regular audits. Compliance considerations vary by industry but typically include data privacy, consent management, and explainability requirements, especially in regulated sectors. A practical example is using synthetic data for initial experimentation to minimize exposure of sensitive information while validating pipeline integrity.
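
The registry, versioning, and approval workflow described above can be sketched in a few lines. This is a minimal in-memory illustration under stated assumptions: the class names, stage names, and reviewer label are hypothetical, and a production setup would typically rely on a managed registry service rather than hand-rolled code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Stage(Enum):
    DRAFT = "draft"
    APPROVED = "approved"
    PRODUCTION = "production"


@dataclass
class ModelVersion:
    name: str
    version: int
    stage: Stage = Stage.DRAFT
    approved_by: Optional[str] = None


class ModelRegistry:
    """Tracks model versions and blocks promotion without an approval."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str) -> ModelVersion:
        # Each registration of the same model name gets the next version number.
        versions = self._versions.setdefault(name, [])
        model_version = ModelVersion(name=name, version=len(versions) + 1)
        versions.append(model_version)
        return model_version

    def approve(self, model_version: ModelVersion, reviewer: str) -> None:
        if model_version.stage is not Stage.DRAFT:
            raise ValueError("only draft versions can be approved")
        model_version.stage = Stage.APPROVED
        model_version.approved_by = reviewer  # kept for the audit trail

    def promote(self, model_version: ModelVersion) -> None:
        if model_version.stage is not Stage.APPROVED:
            raise PermissionError("version must be approved before production")
        model_version.stage = Stage.PRODUCTION


registry = ModelRegistry()
pricing_v1 = registry.register("pricing-model")
registry.approve(pricing_v1, reviewer="model-validator")
registry.promote(pricing_v1)
print(pricing_v1)
```

The design choice worth noting is that promotion fails loudly when no approval is recorded, which keeps the approval step from being skipped under deadline pressure.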

To deepen understanding, explore these resources on AI deployment and strategy:

Could Superhuman AI Pose a Threat to Human Existence?,

OpenAI Unveils GPT-4: A Pivotal Advancement in AI.

| Layer | Key Components | Examples/Tools | Owner |
|---|---|---|---|
| Data Layer | Data sources, catalogs, lineage | CRM, ERP, data lake, data mesh | DataOps |
| Model Layer | Training, evaluation, versioning | Feature stores, registries, drift detection | ML Engineering |
| Deployment Layer | CI/CD, serving, monitoring | CI/CD pipelines, real-time APIs | Platform Engineering |
| Security & Compliance | Access, encryption, audits | IAM, DLP, governance dashboards | Security / Compliance |

Data Strategy and Accessibility

Data is the fuel of AI momentum. Without a robust data strategy, AI efforts stall, regardless of promising models. A practical data strategy begins with data mapping: identify critical data domains, map data producers and consumers, and establish data quality metrics. Implement a data catalog that makes datasets discoverable, with clear owners and usage policies. Data lineage is essential to trace how data moves from source to model, which supports debugging, auditing, and regulatory compliance.

Organizations should invest in data virtualization and data fabric capabilities to enable agile access across departments while preserving governance. In the 2025 context, the interplay between governance and experimentation is critical: teams can run experiments with synthetic data or de-identified datasets, reducing risk while preserving the opportunity to learn. Cloud providers offer managed data services that simplify ingestion, transformation, and analysis, but successful enterprises also build internal data literacy so teams know how to interpret results, understand model outputs, and avoid misuse.

Examples and best practices include implementing a centralized feature store to reuse data across models, adopting data drift monitoring to catch performance changes, and using data contracts to formalize expectations between data producers and AI teams. The emphasis is on making data accessible to the right people, at the right time, with appropriate controls. As a result, teams can accelerate experimentation and deployment while maintaining trust and accountability.
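
A data contract, as mentioned above, can start as a small, explicit description of what a producer promises to deliver, checked automatically before data reaches a model. The following sketch assumes a hypothetical order feed; the field names and rules are illustrative and do not come from any particular tool.

```python
from typing import Any, Dict, List

# Hypothetical contract for an order feed: expected type, whether the field
# is required, and an optional minimum value.
ORDER_CONTRACT: Dict[str, Dict[str, Any]] = {
    "order_id": {"type": str, "required": True},
    "amount": {"type": float, "required": True, "min": 0.0},
    "loyalty_tier": {"type": str, "required": False},
}


def validate_record(
    record: Dict[str, Any], contract: Dict[str, Dict[str, Any]]
) -> List[str]:
    """Return a list of human-readable contract violations (empty if valid)."""
    violations: List[str] = []
    for field_name, rule in contract.items():
        value = record.get(field_name)
        if value is None:
            if rule.get("required"):
                violations.append(f"{field_name}: missing required field")
            continue
        if not isinstance(value, rule["type"]):
            violations.append(
                f"{field_name}: expected {rule['type'].__name__}, "
                f"got {type(value).__name__}"
            )
            continue
        if "min" in rule and value < rule["min"]:
            violations.append(
                f"{field_name}: {value} is below minimum {rule['min']}"
            )
    return violations


print(validate_record({"order_id": "A-100", "amount": 19.99}, ORDER_CONTRACT))
print(validate_record({"order_id": 100, "amount": -5.0}, ORDER_CONTRACT))
```

Returning a list of violations rather than raising on the first problem lets the producing team see every broken expectation in a single run.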

- Establish clear data ownership and stewardship roles

- Implement data catalogs, lineage, and quality metrics

- Use synthetic data cautiously to prototype and test

- Leverage scalable, secure data platforms from major cloud providers

| Data Readiness | Practice | Tooling | Impact |
|---|---|---|---|
| Discovery | Cataloging datasets and owners | Data catalogs, metadata management | Faster onboarding |
| Quality | QA checks, data profiling | Profilers, validators | Less model drift |
| Governance | Access controls, contracts | IAM, policy engines | Compliance and trust |

Strategic AI Adoption: Roadmaps, Metrics, and Governance

Adopting AI at scale requires a clearly defined strategy, a measurable roadmap, and strong governance. The strategic framework should articulate where AI adds the most value, the sequence of capabilities to build, and the expected business outcomes. In 2025, leading organizations align AI initiatives with corporate strategy, tying the initiative to customer value, operational efficiency, and risk management. A practical roadmap enumerates stages from discovery and pilot to production and maturity, with explicit milestones and decision gates.

Key governance components include a centralized AI steering committee, role-based access controls, and explainability for model decisions that affect customers or employees. Ethical guardrails, fairness checks, and data privacy controls must be embedded in every stage of development and deployment. The governance model should define who can approve data use, who is responsible for model reliability, and how incidents are escalated and remediated. It is essential to maintain an auditable trail that regulators or stakeholders can review.

Measuring success requires both leading and lagging indicators. Leading metrics track inputs and process health—data quality, model coverage, rate of experimentation, and time-to-value for AI pilots. Lagging metrics capture outcomes such as revenue uplift, cost savings, customer satisfaction, and reduced time-to-resolution for support inquiries. A balanced scorecard approach helps executives connect AI activities to strategic priorities. The table below presents a concise view of common metrics across stages of AI maturity.

To illustrate practical scenarios, a financial services firm might measure the impact of an AI-driven fraud-detection pilot by monitoring the false-positive rate, detection accuracy, and impact on customer trust. A manufacturing company could track yield improvements, maintenance cost reductions, and changeover times. Linking KPIs to business outcomes ensures leadership maintains visibility into ROI while teams stay focused on delivering tangible value.
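
For the fraud-detection example, the headline metrics follow directly from four counts: true positives, false positives, true negatives, and false negatives. The sketch below uses hypothetical pilot numbers. It reports recall and precision alongside the false-positive rate because plain accuracy can look flattering when fraud is rare; that choice is an assumption of this illustration rather than something the scenario prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    true_positives: int   # fraud correctly flagged
    false_positives: int  # legitimate transactions flagged as fraud
    true_negatives: int   # legitimate transactions passed
    false_negatives: int  # fraud that slipped through


def _ratio(numerator: int, denominator: int) -> float:
    # Return 0.0 instead of raising when a class is absent from the sample.
    return numerator / denominator if denominator else 0.0


def false_positive_rate(c: ConfusionCounts) -> float:
    return _ratio(c.false_positives, c.false_positives + c.true_negatives)


def recall(c: ConfusionCounts) -> float:
    return _ratio(c.true_positives, c.true_positives + c.false_negatives)


def precision(c: ConfusionCounts) -> float:
    return _ratio(c.true_positives, c.true_positives + c.false_positives)


# Hypothetical pilot results over 10,000 transactions.
pilot = ConfusionCounts(
    true_positives=80, false_positives=40, true_negatives=9860, false_negatives=20
)
print(f"False-positive rate: {false_positive_rate(pilot):.4f}")
print(f"Recall: {recall(pilot):.2f}")
print(f"Precision: {precision(pilot):.2f}")
```

The false-positive rate is the figure most closely tied to customer trust, since every false positive is a legitimate customer whose transaction was questioned.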

When it comes to cloud ecosystems and platform capabilities, alignment is crucial. Microsoft Azure, Google Cloud, IBM Watson, Amazon Web Services, and Oracle Cloud offer complementary strengths such as data services, model hosting, and governance tools. Used together, these platforms support a roadmap that scales from pilot to enterprise-wide adoption. OpenAI’s models can augment productivity in creative, customer-facing, and analytical workflows, while Salesforce, SAP, and Adobe provide domain-specific capabilities for CRM, ERP, and creative content generation.

Reading and reference points for governance and strategy include these resources:

Celebrating Excellence in Text-to-Image AI (2023) and

OpenAI GPT-4: Evolution in AI.

| Stage | Activities | KPIs | Owner |
|---|---|---|---|
| Discovery | Pilot scoping, use-case catalog | Number of vetted use-cases, regulatory readiness | Strategy / PMO |
| Pilot | Prototype development, ROI analysis | ROI, time-to-value | Product / Data |
| Production | Scaled deployment, governance enforcement | Uplift, reliability, ethical compliance | IT / Legal |

Roadmap Design and ROI Tracking

A practical roadmap translates strategic intent into concrete programs. Start with a curated list of high-value use cases, prioritized by potential impact and feasibility. For each use case, define a hypothesis, a success criterion, and a minimal viable product that can demonstrate value quickly. Track ROI with a simple model that accounts for cost savings, revenue impact, and intangible benefits like improved customer satisfaction and risk reduction. Use a dashboard that presents ongoing performance against milestones, with triggers for iteration or scale-up.
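
The simple ROI model described above can be written down explicitly so that every use case is scored the same way. The sketch below is one possible formulation with hypothetical figures; it covers only the financial terms, and intangible benefits such as customer satisfaction or risk reduction would need to be tracked separately.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UseCaseFinancials:
    annual_cost_savings: float
    annual_revenue_impact: float
    annual_run_cost: float       # hosting, licences, support
    one_time_investment: float   # build and rollout cost


def annual_net_benefit(u: UseCaseFinancials) -> float:
    return u.annual_cost_savings + u.annual_revenue_impact - u.annual_run_cost


def first_year_roi(u: UseCaseFinancials) -> float:
    """Net gain in year one divided by the up-front investment."""
    return (annual_net_benefit(u) - u.one_time_investment) / u.one_time_investment


def payback_months(u: UseCaseFinancials) -> Optional[float]:
    """Months until the investment is recovered, or None if it never is."""
    net = annual_net_benefit(u)
    return u.one_time_investment / (net / 12) if net > 0 else None


# Hypothetical figures for an invoice-automation pilot.
pilot = UseCaseFinancials(
    annual_cost_savings=300_000.0,
    annual_revenue_impact=150_000.0,
    annual_run_cost=90_000.0,
    one_time_investment=240_000.0,
)
print(f"First-year ROI: {first_year_roi(pilot):.0%}")
print(f"Payback: {payback_months(pilot):.1f} months")
```

With these illustrative inputs the pilot returns 50% in its first year and pays back in 8 months, which is the kind of figure a dashboard can compare against milestone triggers for iteration or scale-up.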

Governance should be instantiated early, with roles clearly defined: a Chief AI Officer or equivalent sponsor, data stewards, model validators, and a security/compliance lead. Ethical impact assessments should become part of the project intake process, not an afterthought. The key is to build trust through transparency: explainable AI, auditable data lineage, and robust incident response plans. When stakeholders see that AI initiatives deliver measurable value while adhering to ethical standards, resistance declines and adoption accelerates.

The next step is to align with ecosystem partners who can accelerate execution. Large platforms from Microsoft, Google Cloud, IBM Watson, Amazon Web Services, Oracle, SAP, and Adobe provide a spectrum of capabilities—from data services and model hosting to industry-specific workflows. Leveraging these ecosystems, including NVIDIA accelerators for training and inference, helps organizations realize faster time-to-benefit. Expand the collaboration with AI research centers and academic partnerships to stay ahead of the curve and to validate new approaches with independent benchmarks.

For further context and perspectives on AI adoption in a competitive market, consider these resources:

Bing’s Struggle in the AI Landscape,

Understanding AI NPCs—the Future of Interactive Characters in Gaming.

- Define clear milestones tied to business value

- Establish executive sponsorship and cross-functional teams

- Implement governance and ethical risk assessment from day one

- Measure ROI with a balanced set of financial and non-financial metrics

| Milestone | Expected Outcome | Timeline | Owner |
|---|---|---|---|
| Pilot to Production | Stable, governance-compliant deployment | 6–12 months | CTO / PMO |
| Value Realization | Quantified ROI and customer impact | 12–24 months | Finance / Sales |

Ethical AI and Responsible Innovation: Building Trust and Compliance

Ethics are not a checkbox but a core design principle for AI-enabled organizations. Responsible AI means designing models and systems that are fair, transparent, and accountable to the people they serve. In 2025, regulatory scrutiny around data privacy, bias, explainability, and accountability continues to intensify, and businesses that codify ethical practices tend to outperform those that delay. This section outlines how to embed ethics into every AI initiative—from initial concept to production and beyond.

Beyond compliance, ethical AI is about trust—trust with customers, employees, partners, and regulators. It means providing clear explanations for decisions that affect people, offering channels to contest outcomes, and implementing robust risk controls to prevent unintended harm. An effective ethical framework includes bias detection, impact assessments, and a governance protocol that requires human oversight where appropriate. It also requires careful data handling, consent management, and transparent data usage disclosures.

Practical steps involve creating an AI ethics charter that outlines guiding principles, such as fairness, accountability, and privacy by design. Establish a cross-disciplinary ethics council with representation from product, legal, compliance, engineering, and customer support. Implement ongoing bias audits, model monitoring for drift, and explainability tools that enable stakeholders to understand how a decision was reached. In customer-facing AI, provide recourse mechanisms for disputes and ensure accessibility for all users.
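
A bias audit can begin with a very simple measurement: compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and their ratio (often called the disparate impact ratio). The group labels and data are hypothetical, and this single metric is only a starting point, not a complete fairness assessment.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical audit sample: 100 decisions for each of two groups.
sample = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
rates = selection_rates(sample)
print(rates)
print(f"Disparate impact ratio: {disparate_impact_ratio(rates):.2f}")
```

A ratio below 0.8 is often used as a flag for further review (the "four-fifths" heuristic from US employment guidance), but the appropriate threshold and metric depend on jurisdiction and use case, so the ethics council should set them deliberately.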

Ethical AI demands alignment with broader societal values. Companies collaborating with cloud providers, including Microsoft and Google Cloud, can leverage their governance frameworks and compliance certifications to reinforce responsible practices. Demonstrating ethical alignment is not only about risk management; it is a competitive differentiator—customers prefer vendors they believe will protect their interests and respect their rights.

Readers can explore related perspectives on ethics, AI risk, and safety in the broader industry discourse:

Could Superhuman AI Pose a Threat to Human Existence?,

Text-in-Image AI Awards (2023).

| Ethical Area | Practice | Tools | Owner |
|---|---|---|---|
| Fairness | Bias audits, diverse data | Fairness toolkits | ML / Equity |
| Transparency | Explainability, documentation | Explainability dashboards | Product / Compliance |
| Privacy | Data minimization, consent | Data governance platforms | Security / Legal |

Ethics in Action: Real-World Scenarios

Ethical AI is not abstract; it manifests in concrete scenarios. For example, in customer service, AI can triage issues while preserving human oversight for sensitive cases. In hiring, AI recommendations must be audited to avoid biased patterns that could disadvantage certain groups. In finance, model risk management demands robust validation, explainability, and compliance with regulations. Each scenario requires explicit triggers for human intervention and continuous monitoring to detect drift or adverse outcomes.
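
The explicit triggers for human intervention mentioned above can be encoded as a small routing rule. The sketch below assumes a hypothetical customer-service classifier that returns a topic and a confidence score; the topic names and the 0.85 threshold are placeholders to be set by the governance process.

```python
from dataclasses import dataclass

# Hypothetical list of topics that always require a human, regardless of
# how confident the model is.
SENSITIVE_TOPICS = frozenset({"billing_dispute", "account_closure", "legal"})


@dataclass(frozen=True)
class Prediction:
    topic: str
    confidence: float  # model confidence between 0.0 and 1.0


def route(prediction: Prediction, min_confidence: float = 0.85) -> str:
    """Return 'human' or 'automated' for a single customer inquiry."""
    if prediction.topic in SENSITIVE_TOPICS:
        return "human"  # sensitive cases always keep human oversight
    if prediction.confidence < min_confidence:
        return "human"  # low confidence triggers escalation
    return "automated"


print(route(Prediction(topic="order_status", confidence=0.97)))
print(route(Prediction(topic="order_status", confidence=0.60)))
print(route(Prediction(topic="legal", confidence=0.99)))
```

Checking the sensitive-topic list before the confidence threshold means a highly confident model still cannot bypass human review on the cases that matter most.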

To implement these principles, organizations should couple risk management with culture. Build a learning loop that encourages employees to report concerns, challenges, and unintended consequences. Create transparent incident response plans that describe how issues will be investigated and resolved, and publish summaries of lessons learned to maintain trust across the organization.

- Establish ethics charter and governance processes

- Deploy monitoring and explainability tools for all critical models

- Provide clear recourse channels for customers and employees

- Engage external audits and independent reviews periodically

| Scenario | Controls | Impact | Lessons |
|---|---|---|---|
| Customer Support | Human-in-the-loop for escalations | Improved satisfaction, reduced errors | Always retain human oversight |
| Hiring | Bias auditing, diverse datasets | Fairer outcomes | Continuous monitoring required |

Realizing Value: Case Studies, Maturity, and the Path Forward

Ultimately, AI is a strategic engine that compounds value when adopted deliberately and iteratively. A mature AI program delivers measurable business outcomes (improved customer experiences, higher efficiency, and stronger competitive positioning) while maintaining ethical integrity and governance. This final section presents a practical view of how to progress along a maturity curve, with examples, metrics, and organizational changes that help sustain momentum over the medium to long term.

Many organizations begin with targeted use cases that demonstrate clear ROI, such as chatbots for common inquiries, automated invoice processing, or demand forecasting for inventory optimization. As confidence grows, they broaden the scope to more complex domains, including AI-assisted product design, personalized marketing, and autonomous decision support in operations. The move from pilot projects to enterprise-wide adoption requires aligning incentives, scaling data infrastructure, and refining governance processes.

In 2025, partnerships with leading technology vendors remain essential. Microsoft, Google Cloud, IBM Watson, Amazon Web Services, Salesforce, NVIDIA, OpenAI, Oracle, SAP, and Adobe provide a rich ecosystem of tools, platforms, and best practices that reduce time-to-value and increase reliability. These collaborations enable organizations to test, deploy, and scale AI with a higher degree of confidence. The broader business landscape is also shaped by the advent of more accessible AI models and platforms, which democratize AI capabilities and accelerate adoption across lines of business.

Real-world exemplars include retailers enhancing demand sensing with AI, manufacturers implementing predictive maintenance, and financial institutions deploying AI-driven risk analytics. Each case demonstrates that value emerges not from a single breakthrough but from disciplined execution across people, processes, and technology. The path forward is a cycle of learning, iteration, and governance that grows stronger as data assets mature and AI literacy expands. The result is an organization that can anticipate customer needs, respond rapidly to market shifts, and sustain competitive advantage as AI capabilities continue to evolve.

For readers seeking further context or inspiration, explore these resources:

Understanding AI NPCs: The Future of Interactive Characters in Gaming,

Display AI Art: Transform Your Walls with Digital Creativity.

| Value Channel | Example Impact | Measure | Owner |
|---|---|---|---|
| Customer Experience | Personalized interactions, faster resolution | NPS, CSAT, resolution time | CX / IT |
| Operations | Automation, efficiency gains | Throughput, cost per unit | Operations |