As artificial intelligence continues to evolve, the language of machines—how they understand, generate, and transform human communication—has become a central organizing principle for research and industry alike. By 2025, AI language systems have moved beyond shuffling words to mapping meanings, intents, and nuanced styles across diverse domains. This article explores the architecture, learning mechanisms, and real-world ecosystems that shape how machines decode language, the terminologies that practitioners rely on, and the ethical considerations guiding their deployment. It blends core concepts with concrete examples, industry trends, and practical references to keep readers oriented in a rapidly changing landscape.

- Key trend: language-enabled AI is now integrated into cloud platforms and developer toolchains, enabling scalable deployment across industries.

- Core idea: transformers and context-aware models turn vast text data into actionable understanding, not merely word replacement.

- Practical takeaway: cross-company collaborations and open ecosystems accelerate innovation while exposing new governance challenges.

- Reality check: tools from OpenAI, DeepMind, NVIDIA, IBM Watson, AWS AI, Google AI, Microsoft Azure AI, Anthropic, Hugging Face, and Cohere are shaping standard practices.

- Forward look: responsible AI, bias mitigation, and interpretability remain central to achieving trustworthy language systems.

Foundations of AI Language and Learning in 2025: From Data to Understanding

Artificial intelligence language capabilities sit at the intersection of data, computation, and human intention. By 2025, the field has coalesced around the principle that large-scale data—curated, diverse, and representative—serves as the substrate for models to learn patterns, syntax, and semantics. But true language understanding goes beyond memorizing co-occurrences; it requires building internal representations that map words to concepts, contexts, and goals. This shift has been accompanied by a growing emphasis on evaluation that captures comprehension, reasoning, and usefulness in real tasks rather than surface-level fluency alone.

At the operational level, most language models rely on neural networks trained with optimization objectives that balance predictive accuracy with generalization. The training pipeline typically involves three stages: pretraining on massive unlabeled text, fine-tuning on target domains or tasks, and continual learning updates that keep models current with new information. Early demonstrations of impressive text completion gave way to more sophisticated capabilities—summarization, translation, dialogue, code generation, and reasoning—driven by richer datasets and architectural innovations. In practice, this evolution has produced systems that can adapt their tone, deduce user intent, and maintain coherence over long interactions. Within major AI ecosystems, the work of OpenAI, DeepMind, NVIDIA, IBM Watson, AWS AI, Google AI, Microsoft Azure AI, Anthropic, Hugging Face, and Cohere has helped set benchmarks and integration patterns that others adopt and adapt across industries.
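
As a rough illustration of the kind of optimization objective used in pretraining, the sketch below computes a next-token cross-entropy loss for a single prediction. The probability table and the example context are made up for illustration; a real model would produce a distribution over its whole vocabulary.

```python
import math

def next_token_loss(probabilities, target):
    """Cross-entropy for one prediction: the negative log-probability of the true next token."""
    return -math.log(probabilities[target])

# Hypothetical model output for the context "the cat sat on the".
predicted = {"mat": 0.6, "sofa": 0.3, "moon": 0.1}

# The loss is small when the model assigns high probability to the true token
# and grows as that probability shrinks.
print(next_token_loss(predicted, "mat"))
print(next_token_loss(predicted, "moon"))
```

Training lowers the average of this loss over many contexts, which is what pushes the model toward better predictions of held-out text.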

One foundational idea is the concept of context windows: the amount of prior text a model can consider when predicting the next token. Expanding this window improves coherence but requires more memory and efficient attention mechanisms. The attention mechanism—core to transformer architectures—lets the model weigh different parts of the input differently, enabling nuanced interpretation of syntax, semantics, and discourse relations. The result is not just fluency but the ability to follow a thread of argument, resolve pronouns, and maintain consistency across turns in a conversation. This evolution is especially visible in multilingual settings, where models must align semantics across languages while preserving style and intent.
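
A minimal sketch of scaled dot-product attention for a single query may make the weighting idea concrete. It uses plain Python lists and hand-picked toy vectors; the function names and values are illustrative assumptions, not any particular library's API.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # One similarity score per token in the context window.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy 2-dimensional vectors for a three-token context (illustrative values only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 1.0]

weights, output = attend(query, keys, values)
print(weights)  # the third token gets the largest weight
print(output)
```

Each query scores every token in the window, so in standard self-attention the number of scores grows with the square of the context length. That is the memory pressure that longer context windows create.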

These technical advances have been accompanied by parallel developments in governance and safety. As language models become more capable, stakeholders demand transparency around data provenance, training objectives, and potential biases. Responsible AI practices now emphasize guardrails, auditing, and human oversight in high-stakes applications such as healthcare, finance, and law. These trends are reflected in policy discussions, industry standards, and the practical deployment decisions that product teams make every day. For readers curious about the lexicon, a growing body of accessible terminology explains the core ideas behind model architectures, training pipelines, and evaluation frameworks. To explore the terminology more deeply, consider resources such as "Understanding the jargon: a guide to AI terminology" and "Understanding the language of artificial intelligence" for a structured glossary.

The table that follows the list of key terms below frames the essential concepts that underpin AI language systems. It pairs each term with a definition and a concrete example, with emphasis on how the concept plays out in real-world deployments in 2025, so that abstract ideas connect to tangible products. For deeper context, you can consult industry sources and company blogs from OpenAI, DeepMind, and the broader ecosystem that aggregates best practices across experiments and production environments. Companies deploy these capabilities in consumer products, enterprise tools, and research platforms, often using cloud-native services to scale language features efficiently.

Key terms to know in this foundational domain

- Tokenization and embeddings

- Transformers and attention mechanisms

- Pretraining, fine-tuning, and continual learning

- Context windows and memory efficiency

- Evaluation metrics that capture reasoning and usefulness

| Term | Definition | 2025 Example |
|---|---|---|
| Token | A unit of text (word, subword, or character) used as the input to a model. | Decomposing a sentence into subwords to handle rare terms like “OpenAI” or “Cohere” across languages. |
| Embedding | A vector representation that encodes semantic meaning for words or tokens. | Capturing synonyms and contextual similarity between “language” and “linguistics” in a document. |
| Transformer | A neural network architecture that uses self-attention to model dependencies across input sequences. | Modeling long-range dependencies in a multi-turn conversation or a long article. |
| Attention | A mechanism that weighs different parts of the input to focus on the most relevant information. | Focusing on context when resolving pronouns in a narrative. |
| Fine-tuning | Adapting a pre-trained model to a specific task or domain with task-specific data. | Tailoring a general language model for customer support in banking or healthcare. |
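
The token and embedding rows can be sketched in a few lines. The example below assumes a tiny hand-written vocabulary and hand-picked 3-dimensional vectors; real tokenizers learn their vocabulary from a corpus and real embeddings are learned and far larger, so every value here is hypothetical.

```python
import math

# Hypothetical toy vocabulary; real tokenizers learn theirs from data.
VOCAB = {"open", "ai", "co", "here", "lang", "uage"}

def tokenize(word, vocab=VOCAB):
    """Greedy longest-match subword split; unmatched characters become '<unk>'."""
    word = word.lower()
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:  # nothing in the vocabulary starts here
            pieces.append("<unk>")
            start += 1
        else:
            pieces.append(word[start:end])
            start = end
    return pieces

def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up embeddings chosen by hand so that related words point the same way.
EMBEDDINGS = {
    "language": [0.9, 0.8, 0.1],
    "linguistics": [0.8, 0.9, 0.2],
    "banana": [0.1, 0.0, 0.9],
}

print(tokenize("OpenAI"))  # ['open', 'ai']
print(tokenize("Cohere"))  # ['co', 'here']
print(cosine(EMBEDDINGS["language"], EMBEDDINGS["linguistics"]))
print(cosine(EMBEDDINGS["language"], EMBEDDINGS["banana"]))
```

The greedy split is only one simple strategy; production systems typically use learned schemes such as byte-pair encoding, which this sketch does not implement.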

Subsection: The Industrial Context and Adoption Patterns

In practice, the 2025 landscape shows a rapid diffusion of language capabilities into enterprise tooling, developer platforms, and consumer services. Companies like OpenAI and Google AI lead API ecosystems that enable developers to build language-powered features with minimal setup, while the underlying hardware accelerators from NVIDIA enable real-time inference at scale. The synergy between model capability and infrastructure expands opportunities in areas such as customer support automation, content generation, and data extraction from unstructured sources. In parallel, major cloud providers (AWS AI, Microsoft Azure AI, and IBM Watson) offer governance layers, safety tooling, and auditing capabilities that help businesses deploy language models responsibly. For readers seeking real-world context, consider exploring the broader landscape through analyst reports and practitioner blogs that compare capabilities across ecosystems, as well as the guide "Understanding the language of artificial intelligence."

Industry case studies frequently highlight how organizations integrate language models to streamline operations, improve decision-making, and unlock new business models. For example, teams leveraging large-scale language tools in OpenAI-backed research pipelines have demonstrated improved reproducibility in scientific text generation, while enterprises using NVIDIA-accelerated deployment pipelines report lower latency and higher throughput for multilingual customer interactions. The practical takeaway is clear: the success of language-enabled AI hinges on aligning model capabilities with domain knowledge, data governance, and user experience design. See related discussions on the best ways to interpret AI outputs and to connect language understanding with actionable intelligence in industry resources linked elsewhere in this article.

References and further reading:

– Understanding the intricacies of neural networks: a deep dive into modern AI.

– Demystifying AI terminology: a glossary to reinforce the foundations for practitioners and curious readers alike.

The next sections shift from foundational concepts to the architectural engines that enable language processing, including how transformers encode context and how models reason about meaning in real time. The journey continues with a closer look at NLP tasks and evaluation methods that measure what matters to users and operators.

AI Model Architectures for Language: Transformers, Encoding, and Context

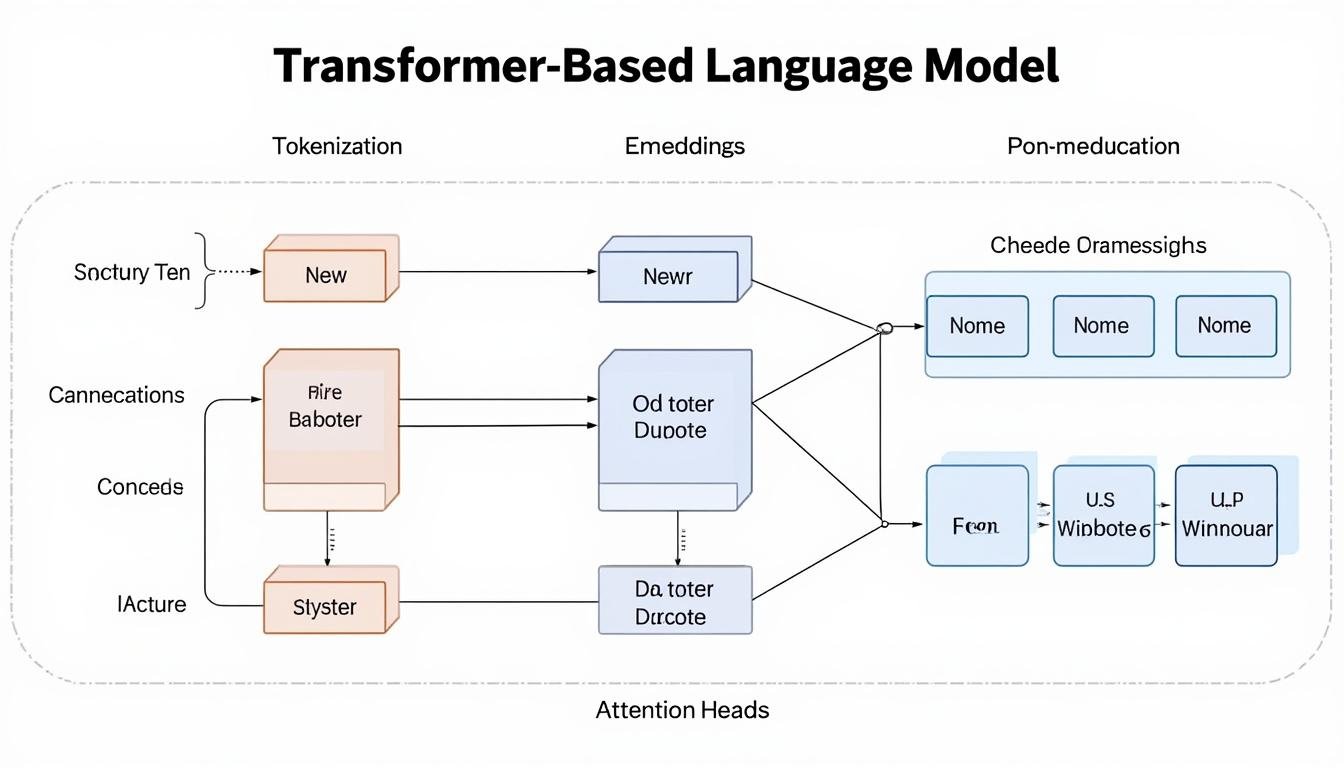

Between 2020 and 2025, transformer-based architectures redefined the capabilities and scalability of AI language systems. The essential insight is that attention mechanisms enable models to consider all parts of the input with varying weights, rather than relying solely on fixed-length representations. This shift allows models to capture syntax with precision while maintaining semantic coherence across longer passages. In practice, transformers power both encoder-decoder configurations used for tasks like translation and generation, and decoder-only models designed for autoregressive text synthesis. The distinctions matter when engineers decide how to apply these models to specific problems, such as real-time chat, document summarization, or code completion.
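
One way to see the difference between encoder-style and decoder-only attention is through the mask that decides which positions may look at which. The sketch below is a simplified illustration with hypothetical helper names, not a full model.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def full_mask(n):
    """Encoder-style mask: every position may attend to every position."""
    return [[True] * n for _ in range(n)]

# Print the causal pattern for a four-token sequence.
for row in causal_mask(4):
    print("".join("x" if allowed else "." for allowed in row))
# x...
# xx..
# xxx.
# xxxx
```

The triangular pattern is what makes generation autoregressive: each token is predicted from the tokens before it, never from those after it.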

Two core ideas underpin practical deployments: first, the way input is structured and consumed—tokenization strategies, positional encodings, and attention patterns; second, how models are trained to generalize from data to new domains—pretraining objectives, alignment with human preferences, and fine-tuning against domain-specific corpora. The interplay between these factors determines not only accuracy but also reliability, controllability, and the ability to avoid undesirable outputs in sensitive contexts. In 2025, the ecosystem around model architectures has matured to include efficient training regimes, quantization for edge devices, and hybrid systems that combine learned components with rule-based or symbolic reasoning for safety guarantees and interpretability.
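
To give a feel for quantization, here is a minimal sketch of symmetric 8-bit quantization applied to a short list of weights. The weight values are invented, and production schemes (per-channel scales, calibration, and so on) are more involved than this.

```python
def quantize(weights, bits=8):
    """Map floats to signed integers using a single shared scale."""
    qmax = 2 ** (bits - 1) - 1                       # 127 for 8 bits
    largest = max(abs(w) for w in weights) or 1.0    # avoid a zero scale
    scale = largest / qmax
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in quantized]

weights = [0.52, -1.27, 0.003, 0.9]  # made-up example weights
q, scale = quantize(weights)
restored = dequantize(q, scale)

print(q)         # small integers that fit in one byte each
print(restored)  # close to the originals, within half a scale step
```

The trade-off is visible even here: storage drops to one byte per weight, while very small values such as 0.003 are rounded away entirely.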

The practical implication for developers and researchers is that architecture choices shape latency, memory usage, and the kinds of tasks a model excels at. For instance, encoder-decoder configurations are particularly useful for translation and content editing, while autoregressive decoders shine in creative writing and code generation. Audio and visual modalities increasingly intersect with language as models learn to align textual outputs with images or audio signals, enriching user experiences across applications. The following table delineates the main architectural blocks, their roles, and representative examples from the field in 2025.

| Architectural Block | Role | Practical Example |
|---|---|---|
| Encoder | Transforms input into a latent representation that captures linguistic structure and context. | Sentence comprehension in a virtual assistant, extracting intent from user queries. |
| Decoder | Generates the output sequence, often conditioned on encoder representations or prior tokens. | Autoregressive text generation for drafting emails or writing code. |
| Attention | Weights input tokens to focus on relevant information when producing outputs. | Maintaining coherence in long-form responses by linking antecedents and pronouns. |
| Positional Encoding | Imparts a sense of order to tokens, enabling the model to understand sequence structure. | Grasping the temporal flow of a narrative in summarization tasks. |
| Pretraining Objective | Defines the learning signal used to train the model on broad language patterns. | Masked language modeling or next-token prediction on large multilingual corpora. |
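
As one concrete instance of the positional encoding row, the sketch below computes the sinusoidal encoding introduced with the original transformer. This is only one of several schemes in use (learned and rotary encodings are common alternatives), and the function name is an illustrative choice.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal encoding for one position; dim is assumed to be even."""
    vector = []
    for i in range(0, dim, 2):
        # Each pair of dimensions uses a different wavelength.
        angle = position / (10000 ** (i / dim))
        vector.extend([math.sin(angle), math.cos(angle)])
    return vector

print(positional_encoding(0, 4))  # [0.0, 1.0, 0.0, 1.0]
print(positional_encoding(1, 4))
print(positional_encoding(2, 4))
```

Because each position yields a distinct vector, adding it to the token embedding gives the model a signal about order that attention alone does not provide.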

In practical terms, practitioners frequently encounter a spectrum of design choices, such as whether to use a pure encoder-decoder stack or a decoder-only architecture for generation. The decision hinges on latency requirements, the need for controllability, and the desired interaction mode with users. For teams building production-grade language features, considerations around safety, bias, and governance are inseparable from performance metrics. Hardware accelerators, especially those from NVIDIA, and optimized software stacks from cloud providers keep training and inference scalable while reducing the energy footprint of large-scale language workloads. You can explore how these choices play out in industry reports and product notes from major players, including OpenAI and DeepMind, and in the related article "The Power of Action Language."

To illustrate concrete progress, consider how a typical enterprise uses an encoder-decoder model for multilingual customer support. The system ingests a user’s query in one language, encodes the semantic intent, and decodes it into a contextually accurate response in the same or another language, maintaining tone and customer service goals. In 2025, this workflow is integrated into customer platforms via cloud services from Google AI and Microsoft Azure AI, with governance supported by IBM Watson and partner solutions from Hugging Face and Cohere. For an accessible overview of how architectures translate into real-world features, the article Understanding the language of artificial intelligence provides practical context and glossary references.

Further exploration: for an accessible visual primer on transformer architectures, check out introductory videos from AI education channels and the ongoing debates about model interpretability and safety.

Natural Language Processing and Semantic Decoding: From Tokens to Meaning

Natural Language Processing (NLP) sits at the core of AI language systems, focusing on how computers understand, interpret, and manipulate human language. In 2025, NLP has matured into a suite of tasks that encompasses parsing, translation, sentiment analysis, summarization, question answering, and dialogue management. Each task has its own evaluation metrics, datasets, and best practices for deployment. The field’s practical value rests on combining linguistic insight with statistical learning to produce outputs that align with user intent, domain constraints, and ethical standards. The result is a practical pipeline that can be integrated into enterprise workflows, consumer apps, and research platforms.

One of the most powerful shifts in NLP is the move from surface-level text processing to semantically grounded decoding. Rather than treating words as discrete symbols, modern systems map language into rich representations that capture semantics, pragmatics, and discourse structure. This enables models to perform tasks such as summarizing a legal contract with emphasis on obligations and risk, translating a technical document with domain-specific terminology preserved, or answering questions that require multi-hop reasoning across documents. The practical impact is a more helpful and trustworthy interface between humans and machines, with outputs that reflect deeper understanding rather than rote pattern matching.

The following table presents common NLP tasks alongside typical evaluation metrics and a brief example of how they are used in 2025 deployments. The entries are framed to help practitioners and decision-makers compare approaches across different ecosystems, including those from major AI platforms and research groups. For readers seeking a glossary of AI terms, the linked articles in this section offer accessible explanations and examples. The goal is to connect conceptual understanding with concrete outcomes you can observe in real-world products.

| NLP Task | What It Means | Common Metrics |
|---|---|---|
| Machine Translation | Converting text from one language to another while preserving meaning and style. | BLEU, METEOR, TER |
| Sentiment Analysis | Determining the emotional tone or opinion expressed in text. | Accuracy, F1, AUC |
| Summarization | Creating concise representations that retain essential information and structure. | ROUGE, BLEU, BERTScore |
| Question Answering | Providing direct answers to questions based on given texts or knowledge bases. | Exact Match (EM), F1 |
| Dialogue Management | Maintaining coherent, context-aware conversations with users over multiple turns. | Perplexity, Human Evaluation, Dialog Success Rate |
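
To show how two of these metrics behave, the sketch below computes Exact Match and token-level F1 for question answering. The normalization is deliberately simplified (lowercasing and punctuation removal only), so scores may differ slightly from those of official benchmark scripts.

```python
import string
from collections import Counter

def normalize(text):
    """Lowercase, drop punctuation, and split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return text.split()

def exact_match(prediction, reference):
    """True only when the normalized answers are identical."""
    return normalize(prediction) == normalize(reference)

def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall."""
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

print(exact_match("Paris.", "paris"))           # True
print(token_f1("the city of Paris", "Paris"))   # 0.4
```

Exact Match rewards only perfect answers, while F1 gives partial credit when the prediction contains the reference along with extra words.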

In practice, NLP tasks are often supported by evaluation suites and benchmarks that reflect real-world usage. For developers, the challenge is to balance accuracy with latency, memory usage, and safety considerations. The field also increasingly emphasizes alignment with human values, ensuring that language outputs are not only correct but also appropriate for the user context. This is where governance and human-in-the-loop systems play a vital role, combining automated evaluation with human oversight to prevent biased or harmful outputs. For readers seeking a deeper glossary, the article Understanding the language of artificial intelligence provides a concise reference to terms discussed here and elsewhere in this guide.

To illustrate practical application, consider how an enterprise might deploy a multi-task NLP pipeline that translates internal documentation, analyzes sentiment in customer feedback, and generates executive summaries for leadership. This multi-faceted approach benefits from a unified data strategy, shared representations, and standardized evaluation to ensure consistency across tasks. Advanced NLP leverages tooling from leading AI platforms, including OpenAI, Google AI, and Hugging Face, while staying mindful of governance and privacy considerations in regulated industries. For additional context, explore the article on understanding AI terminology for a compact glossary of terms that underpin these tasks.

In addition to text processing, a growing set of multimodal capabilities blends language with vision, audio, and other signals. This expansion enables applications like image-grounded descriptions, audio-aware chat, and interactive assistants that respond to user actions in a more natural and intuitive way. For insights into these cross-modal trends, browse resources and case studies linked throughout this article and in related sections.

AI Ecosystems and Terminology for 2025: Platforms, Partners, and Open Ecosystems

The AI landscape in 2025 is characterized by a vibrant ecosystem that blends cloud platforms, research institutions, and open-source communities. Large technology companies, including Microsoft Azure AI, Google AI, AWS AI, IBM Watson, and NVIDIA, provide scalable infrastructure, model hosting, safety tooling, and governance frameworks. Meanwhile, research-driven initiatives from OpenAI and DeepMind push the boundaries of what’s possible, and community-oriented platforms such as Hugging Face, alongside model providers such as Cohere, foster collaboration and rapid iteration. The result is a dynamic balance between proprietary platforms that offer robust production-grade capabilities and open ecosystems that accelerate experimentation, sharing, and responsible development.

To navigate this landscape, teams often rely on a combination of cloud-native services, community-driven libraries, and vendor-specific best practices. The synergy between these elements accelerates time-to-value for language-enabled solutions, from prototypes to production deployments. The field also emphasizes careful consideration of data governance, model governance, and bias mitigation as integral components of architecture and product design. For readers seeking practical, up-to-date references, several curated resources and term glossaries are widely used to align vocabulary across teams and industries. A featured glossary and terminology guide can be found in the linked articles, including the straightforward explainer on AI terminology and the in-depth glossary of language-related AI terms.

Key ecosystems and players to watch in 2025 include:

- OpenAI and DeepMind driving research frontiers and APIs for developers

- NVIDIA powering the hardware backbone for training and inference at scale

- IBM Watson and AWS AI offering enterprise-grade governance and safety tooling

- Google AI and Microsoft Azure AI enabling seamless integration with productivity suites and cloud services

- Anthropic, Hugging Face, and Cohere fostering open collaboration, model sharing, and community experimentation

| Platform | Focus | Typical Use Cases |
|---|---|---|
| OpenAI | Generative models, API-based integration, alignment research | Text generation, coding assistants, chatbots |
| Google AI | Search-quality language models, multilingual capabilities, tooling | Translation, content understanding, enterprise integrations |
| Microsoft Azure AI | Cloud-native ML services, governance, enterprise security | Model hosting, compliance-ready deployments, AI for business processes |
| AWS AI | Scalable inference, data pipelines, analytics integration | Model training at scale, NLP pipelines, real-time inference |
| IBM Watson | Industry-specific solutions, governance, explainability | Healthcare, finance, regulated sectors |

In addition to the big players, the ecosystem thrives on a network of alliances and communities. The following links provide accessible overviews and glossaries to help practitioners and executives align on terminology and strategy:

- Understanding the jargon: AI terminology

- Understanding the language of artificial intelligence: Key terminology

- Understanding the language of AI

- Outpainting and creative AI techniques

- Top AI-powered apps for entrepreneurs (2025)

For a concise exploration of neural networks, you can consult the in-depth guide linked above and the glossary of AI terms referenced throughout this section. These resources help teams standardize language, measure progress, and communicate effectively with stakeholders. The next section turns to the ethical and practical implications of language-enabled AI, focusing on governance, safety, and future prospects.

Ethics, Regulation, and The Future of AI Language

The rapid maturation of AI language systems raises important questions about safety, fairness, accountability, and governance. In 2025, the field has moved beyond purely technical performance toward responsible deployment across diverse contexts. Ethical considerations include mitigating bias in training data and outputs, ensuring transparency about model limitations, and designing systems that respect user privacy and data ownership. Regulators, industry groups, and corporate governance bodies are increasingly active in defining standards for risk assessment, red-teaming practices, and auditability. The practical aim is to enable AI language technologies to deliver value while minimizing harm, particularly in high-stakes domains such as healthcare, finance, and public policy.

From a business perspective, responsible AI involves building guardrails into product design, maintaining clear lines of accountability, and providing users with understandable explanations of how outputs are generated. This approach is complemented by ongoing research in model interpretability, adversarial robustness, and feedback-based alignment with human preferences. The interplay of technical innovation and governance shapes how organizations deploy language models, the levels of automation they permit, and the safeguards they implement to preserve user trust. For readers seeking broader context, the article Understanding the language of AI terminology provides essential vocabulary for discussions about ethics, risk, and governance in AI systems.

To illustrate practical governance patterns, many teams implement a layered approach to safety. This includes filter-based content controls, policy-driven response modes, and human-in-the-loop review for uncertain or sensitive outputs. The goal is to strike a balance between enabling powerful capabilities and protecting users from harm. In corporate environments, these controls are often integrated with enterprise risk management, data governance frameworks, and regulatory compliance programs. The landscape is dynamic, with ongoing debates about transparency, algorithmic accountability, and the responsible sharing of models and data across organizations. As you navigate this space, the following references offer practical guidance and case studies from leading AI platforms and researchers, including Anthropic, Hugging Face, and Cohere.
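
The layered approach can be sketched as a small decision function. The term lists, the confidence threshold, and the `Decision` type below are all hypothetical placeholders; a real deployment would rely on dedicated classifiers and organization-specific policies rather than keyword matching.

```python
from dataclasses import dataclass

BLOCKED_TERMS = {"password", "ssn"}          # hypothetical content-filter list
SENSITIVE_TOPICS = {"diagnosis", "lawsuit"}  # hypothetical policy topics
REVIEW_THRESHOLD = 0.7                       # hypothetical confidence cutoff

@dataclass
class Decision:
    action: str  # "block", "escalate", or "send"
    reason: str

def review(draft, confidence):
    """Apply the layers in order: filter, policy, then confidence check."""
    words = set(draft.lower().split())  # naive split; punctuation is not stripped
    if words & BLOCKED_TERMS:
        return Decision("block", "content filter")
    if words & SENSITIVE_TOPICS:
        return Decision("escalate", "sensitive topic")
    if confidence < REVIEW_THRESHOLD:
        return Decision("escalate", "low confidence")
    return Decision("send", "passed all layers")

print(review("Your password is hunter2", 0.95))
print(review("A likely diagnosis is flu", 0.95))
print(review("Your order ships Monday", 0.40))
print(review("Your order ships Monday", 0.95))
```

Ordering the checks from cheapest and strictest to most permissive means that a human reviewer sees only the drafts that automated layers could not clearly accept or reject.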

For readers who want to connect governance with practice, consider reviewing example policies and best practices in the linked resources below. These include glossaries and guides designed to help teams reason about risk, safety, and ethical considerations in AI systems. The broader objective is to make AI language technologies trustworthy, explainable, and aligned with human values while still delivering transformative value in business, science, and daily life. To close with a practical example: a company might integrate an AI language assistant across its customer journey, but with explicit disclosure about model-generated content, a robust feedback loop for errors, and an escalation path for sensitive issues.

| Key Consideration | Practices | Practical Outcome |
|---|---|---|
| Bias Mitigation | Assess data sources, monitor outputs, apply debiasing techniques | More fair and representative responses across user groups |
| Transparency | Explain outputs, reveal limitations, provide model provenance | User trust and informed usage decisions |
| Safety Guardrails | Content filters, human oversight, escalation protocols | Prevention of harmful or inappropriate outputs |
| Privacy and Data Governance | Data minimization, access controls, audit trails | Compliance with regulations and user privacy protections |
| Accountability | Clear ownership, auditability, external governance reviews | Responsible deployment with traceable decision-making |

Connecting governance to practice requires ongoing education and collaboration across teams. Resources that explain AI terminology, like the glossary linked in earlier sections, help ensure everyone speaks a common language when discussing risk, safety, and ethics. For readers who want a compact, practical overview of AI terms and their implications for governance, the linked glossary entries offer accessible explanations and examples.

In closing, the AI language landscape in 2025 is marked by a convergence of technical prowess, practical deployment, and principled governance. The field continues to move toward systems that are not only powerful and versatile but also transparent, fair, and controllable. As you explore the resources and case studies referenced throughout this article, you’ll gain a clearer sense of how language-enabled AI can be harnessed responsibly to create value while safeguarding people and ecosystems. For further reading, the following links provide concrete, implementable guidance and up-to-date terminology:

- The power of action language shaping communication and behavior

- Understanding neural networks in modern AI

- Guide to AI terminology

- Outpainting and expansion techniques

- Guide to AI terminology (language-focused)

FAQ

What is the core difference between pretraining and fine-tuning in AI language models?

Pretraining teaches broad language patterns from large, diverse datasets; fine-tuning tailors the model to a specific task or domain using task-specific data, often increasing performance and relevance in that domain.

Why is the attention mechanism so central to language models?

Attention allows models to weigh different parts of the input differently, which lets them capture long-range dependencies and context-dependent meaning. This improves coherence and context retention, and in some tasks the attention weights offer a partial view into what the model focused on.

How do governance and safety practices affect practical deployment of AI language systems?

Governance introduces guardrails, auditing, and human oversight to ensure outputs are safe, fair, and compliant with regulations, balancing innovation with risk management.

What resources help teams stay current with AI terminology and best practices?

Glossaries, industry reports, vendor documentation, and community-driven tutorials—such as those linked throughout this article—provide a shared vocabulary and up-to-date guidance.