In Brief

- The launch of GPT-4o marks a pivotal expansion of OpenAI’s multimodal capabilities, enabling real-time interactions across text, audio, and vision with unprecedented speed and fluidity.

- GPT-4o, where the “o” stands for “omni,” integrates text, audio, and visual inputs within a single neural network, delivering faster responses and broader applicability than its predecessors.

- Industry impact spans healthcare, education, customer service, and creative industries, within a broader AI ecosystem that includes Microsoft (OpenAI’s principal cloud partner), Google, Nvidia, Meta, IBM, Anthropic, Amazon Web Services, DeepMind, Cohere, and others.

- Economic and safety dimensions are balanced through improved efficiency (lower API costs) and reinforced guardrails, while tokenization and multilingual capabilities broaden accessibility across languages and regions.

- Practical demonstrations and real-world use cases suggest a future where AI assistants blend seamlessly into daily professional life, transforming workflows, learning, and communication.

OpenAI’s announcement arrives at a moment when AI technologies are maturing toward more natural human-computer interaction. GPT-4o expands beyond text to embrace audio and vision without sacrificing linguistic fluency or reasoning depth. The “o” in GPT-4o stands for omni, signaling the unification of modalities within a single model architecture. In practical terms, a user can speak, show an image, and read a response, all within one cohesive conversation. On OpenAI’s reported benchmarks, GPT-4o matches or exceeds GPT-4 Turbo on English text and code, improves substantially on non-English text, vision, and audio understanding, and costs roughly half as much in the API. The result is not merely faster or smarter responses; it is a more natural, context-aware dialogue that spans multiple senses. As organizations explore deployment, the impact ripples across enterprise software, cloud platforms, and developer ecosystems shaped by major players such as Microsoft, Google, AWS, Nvidia, and IBM. This creates a multi-vendor landscape in which OpenAI competes and interoperates through its APIs and tooling. For readers following the AI economy, the GPT-4o roll-out signals both a technical milestone and a strategic invitation to reimagine what intelligent assistance can mean in daily work and life.

GPT-4o Emergence: Multimodal Breakthrough and Real-Time Responsiveness

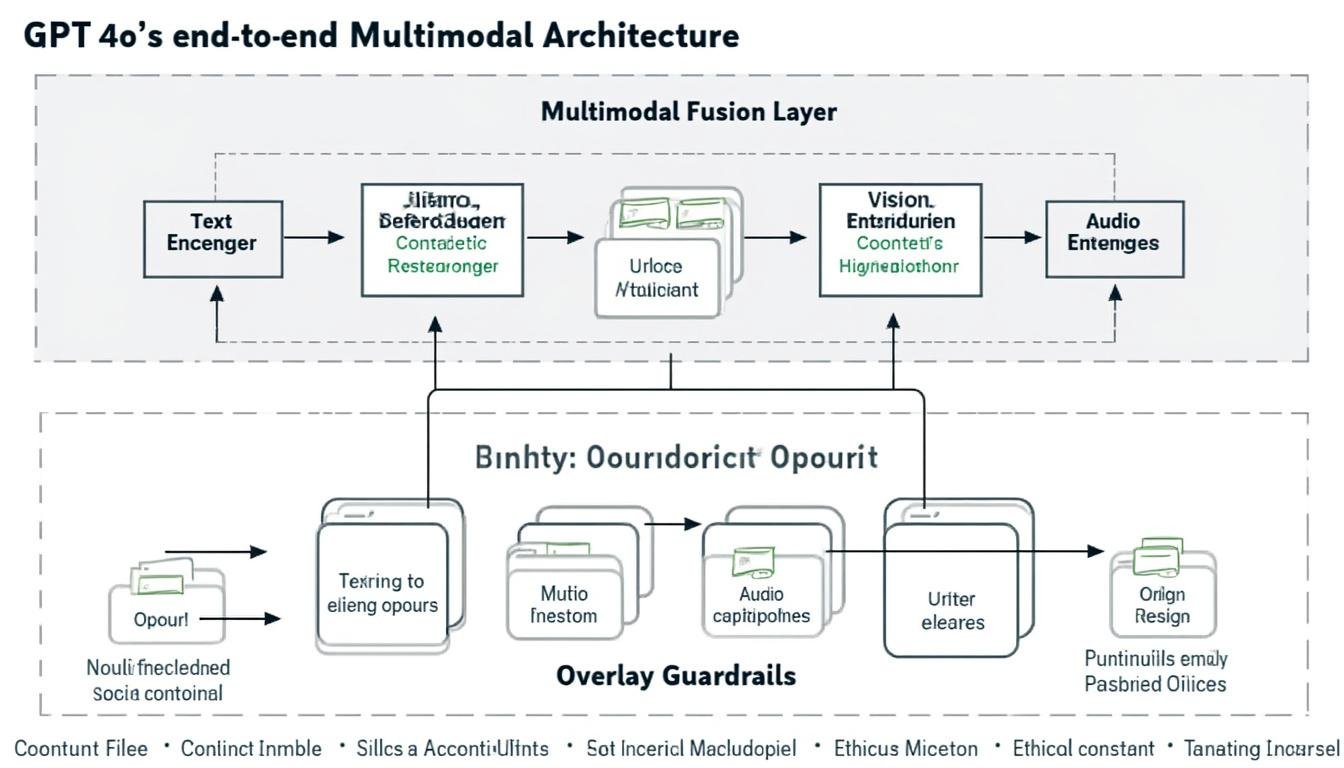

The emergence of GPT-4o represents more than an incremental upgrade; it is a deliberate reconfiguration of how artificial intelligence processes, understands, and produces information across channels. The core advancement is end-to-end training across text, audio, and visual inputs within a single neural network, enabling unified reasoning and output generation. This replaces the pipeline used by earlier voice modes, in which separate models transcribed speech, generated a text reply, and converted that reply back to audio. Removing those hand-offs reduces latency and preserves context such as tone, multiple speakers, and background sounds that a plain transcript discards. In real-world terms, a customer-service bot can listen to a caller, read a support ticket, and analyze a screenshot concurrently, delivering a solution that reflects a holistic understanding of the situation. Retaining cross-modal context in one model improves accuracy, reduces misinterpretation, and supports more naturalistic interactions. The surrounding ecosystem, with Microsoft Azure as OpenAI’s main cloud platform and Nvidia hardware widely used for training and inference, helps translate the technology into scalable business solutions, from enterprise chatbots to educational platforms. The sections that follow explore how these real-time capabilities translate into tangible outcomes through practical demonstrations and use cases.
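To see why removing the pipeline matters for latency, here is a minimal arithmetic sketch. It assumes, purely for illustration, that a cascaded voice assistant runs three stages in strict sequence (speech-to-text, a text model, text-to-speech) and that a single end-to-end model replaces them with one pass. All stage names and timings are hypothetical placeholders, not measurements of any OpenAI system.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One step in a cascaded voice pipeline (timings are hypothetical)."""
    name: str
    latency_ms: float


def cascaded_latency(stages: list[Stage]) -> float:
    """Stages run one after another, so their latencies add up."""
    return sum(stage.latency_ms for stage in stages)


def speedup(cascaded_ms: float, end_to_end_ms: float) -> float:
    """How many times faster a single end-to-end pass is than the cascade."""
    if end_to_end_ms <= 0:
        raise ValueError("end_to_end_ms must be positive")
    return cascaded_ms / end_to_end_ms


# Hypothetical placeholder timings, chosen only to illustrate the arithmetic.
pipeline = [
    Stage("speech_to_text", 600.0),
    Stage("text_model", 1500.0),
    Stage("text_to_speech", 700.0),
]
end_to_end_ms = 320.0  # hypothetical single-model response time

total_ms = cascaded_latency(pipeline)
print(f"cascaded: {total_ms:.0f} ms, end-to-end: {end_to_end_ms:.0f} ms, "
      f"speedup: {speedup(total_ms, end_to_end_ms):.1f}x")
```

The additive model is a simplification: real pipelines can stream partial results between stages, so measured gaps may be smaller than this arithmetic suggests. The point it illustrates is only that every hand-off between separate models adds delay.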

Unified Multimodal Processing: From Theory to Practice

The unification of text, audio, and images within one model is more than a clever trick; it is a practical design choice that preserves context across modalities. A spoken sentence and a written paragraph can be reconciled in a single pass, reducing information loss and enabling more coherent responses. In addition, a new tokenizer represents many languages with fewer tokens, which translates into faster processing and lower costs. The effects are measurable: performance on non-English tasks improves markedly, and the model balances linguistic nuance with cross-modal reasoning in a way that previous generations struggled to match. In business terms, organizations can use a single API to handle diverse interaction modes, simplifying integration and accelerating time-to-value. This capability builds on and complements existing AI platforms from major cloud providers and hardware vendors, creating a robust ecosystem for developers and enterprises alike. For teams deploying through cloud platforms such as Microsoft’s Azure OpenAI Service, the practical implications include streamlined deployment pipelines, improved translation and accessibility features, and richer analytics from multi-channel conversations.

| Feature | GPT-4o | Predecessors (GPT-4 / GPT-3.5) | Business Impact |
|---|---|---|---|
| Modalities | Text, audio, and vision in one model | Text (plus image input in GPT-4 with vision); voice only through a separate speech pipeline | Expanded use cases (translation, assistive tech, media analysis) |
| Latency (audio) | As low as 232 ms (about 320 ms on average) | About 2.8 s (GPT-3.5) to 5.4 s (GPT-4) on average in the earlier Voice Mode pipeline | Real-time conversation, better customer experiences |
| Cost | About 50% lower API price than GPT-4 Turbo | GPT-4 Turbo: roughly twice GPT-4o’s per-token price | Lower TCO for enterprise deployments |
| Output Quality | Expressive audio, nuanced text, robust visuals | Text-centric, limited audio/visual fidelity | More natural interactions; broader audience reach |
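The cost row can be made concrete with a small estimate. This sketch assumes the reader supplies per-million-token prices from the current pricing page; the prices and traffic figures in the usage example are hypothetical placeholders that simply encode a 50% reduction, and the `Pricing` type and `monthly_cost` helper are illustrative names, not part of any SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per one million tokens (placeholder values supplied by the caller)."""
    input_per_million: float
    output_per_million: float


def monthly_cost(pricing: Pricing, requests: int,
                 input_tokens: int, output_tokens: int) -> float:
    """Estimated monthly spend for `requests` calls of a fixed average size."""
    per_request = (input_tokens * pricing.input_per_million
                   + output_tokens * pricing.output_per_million) / 1_000_000
    return per_request * requests


# Hypothetical prices: the "new" tier is exactly half the "old" tier.
old = Pricing(input_per_million=10.0, output_per_million=30.0)
new = Pricing(input_per_million=5.0, output_per_million=15.0)

before = monthly_cost(old, requests=100_000, input_tokens=800, output_tokens=300)
after = monthly_cost(new, requests=100_000, input_tokens=800, output_tokens=300)
print(f"before: ${before:,.2f}  after: ${after:,.2f}  saving: {1 - after / before:.0%}")
```

In practice the saving can compound: for languages where the newer tokenizer needs fewer tokens for the same text, `input_tokens` and `output_tokens` also shrink, so the effective reduction may exceed the price cut alone.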

- Real-time multimodal interactions enable use cases like live translation with visual context and ready-to-use dashboards for executives.

- Voice and emotion detection enable adaptive responses in customer service, education, and therapy-support tools.

- Unified processing reduces integration complexity, since one API can replace separate speech, vision, and text services.

Beyond the technical novelty, the practical implications are clear: developers can build richer assistants, educators can craft immersive learning experiences, and healthcare professionals can collaborate with AI that understands spoken language, patient notes, and medical imagery in a single conversation. The efficiency gains—especially in non-English contexts—help democratize access to cutting-edge AI tooling across regions and industries. For enterprises, this means reduced development overhead, faster prototyping, and the ability to scale multimodal flows across customer channels. As with any transformative technology, responsible deployment and safety controls are essential to ensure accurate interpretation of inputs, appropriate handling of sensitive data, and avoidance of bias in cross-cultural contexts.

Real-Time Multimodal Capabilities: Text, Audio, and Vision in Action

Practically, GPT-4o’s real-time multimodal capabilities unlock a spectrum of applications that meld everyday interaction with sophisticated analysis. In healthcare, clinicians can discuss patient data while the model analyzes radiology images in the background, offering preliminary interpretations and suggested next steps for the clinician to verify. In education, students can pose a math problem verbally, upload a diagram, and receive a step-by-step explanation accompanied by illustrative visuals. Live translation and transcription across languages help bridge language barriers in multinational teams, conferences, and customer-support centers. In creative industries, storytellers can compose scenes while the AI provides audio cues, generates synthetic characters, or suggests visual compositions that align with the narrative arc. The synergy across modalities enhances both productivity and creativity, letting users accomplish tasks that previously required several separate tools. The scenarios below illustrate how different sectors can apply GPT-4o for measurable outcomes, while acknowledging the need for governance and safety.

- Healthcare: multi-channel triage, image-assisted diagnosis, patient communication in multiple languages

- Education: interactive tutoring, visual problem-solving, multilingual materials

- Customer Service: omnichannel support combining chat, voice calls, and image-based troubleshooting

- Entertainment: dynamic storytelling with audience-driven inputs

- Accessibility: live captions, sign-language style visual summaries, and real-time translations

| Use Case | Inputs | Outputs | Impact |
|---|---|---|---|
| Real-time translation | Text, audio | Text, audio, captions | Faster cross-lingual communication in global teams |
| Visual-assisted explanations | Images, text | Annotated images, step-by-step guides | Enhanced understanding in education and medical imaging |
| Voice-enabled tutoring | Speech, screen content | Vocal responses, on-screen hints | Personalized learning experiences at scale |
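The Inputs column maps naturally onto a single request that mixes content types. The sketch below only builds a request body as a Python dict and makes no network call. It assumes the Chat Completions message shape with `text` and `image_url` content parts, which should be checked against OpenAI’s current API reference before use; the helper names `build_tutoring_message` and `build_request` and the sample URL are hypothetical.

```python
import json


def build_tutoring_message(question: str, diagram_url: str) -> dict:
    """Combine a typed question and a diagram into one user message.

    Assumes the Chat Completions content-part format (verify against the
    current API reference); nothing is sent over the network here.
    """
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": diagram_url}},
        ],
    }


def build_request(messages: list[dict], model: str = "gpt-4o") -> dict:
    """Wrap messages in a request body for a single multimodal call."""
    return {"model": model, "messages": messages}


request = build_request([
    {"role": "system", "content": "Explain solutions step by step."},
    build_tutoring_message(
        "What is the area of the shaded triangle?",
        "https://example.com/diagram.png",  # hypothetical image URL
    ),
])
print(json.dumps(request, indent=2))
```

Keeping the question and the diagram in one message, rather than describing the image in a separate step, is what lets the model reason over both together.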

In the broader AI ecosystem, OpenAI’s work on multimodal AI intersects with major technology players and cloud platforms. OpenAI works with Microsoft to bring GPT-4o capabilities into enterprise software and Azure services, while independent labs such as Google and Anthropic contribute to the wider body of safety research, standards, and interoperability work. Demand for scalable, cloud-accelerated inference also matters to Amazon Web Services customers seeking compliant AI deployments. On the hardware side, NVIDIA’s GPUs and software stacks remain central to low-latency inference and efficient training, while research and production work at Meta, IBM, DeepMind, and Cohere advances the data processing pipelines the field relies on. Industry observers note that such an ecosystem helps accelerate adoption by providing robust tooling, governance frameworks, and diverse benchmarks. For further context on AI tooling and on how GPT-4o compares to earlier models, see the related analyses Exploring Innovative AI Tools and Software Solutions and GPT-3.5 vs GPT-4o: The Money-Making Machine.

Economic and Industry Impact: From Healthcare to Education

The economic implications of GPT-4o are significant, driven by improved performance, broader multimodal reach, and lower per-transaction costs. In finance and operations, consolidating text, audio, and visual processing within a single model reduces the need for separate AI stacks, cutting development time and enabling faster go-to-market for AI-powered products. For instance, an enterprise can deploy one conversational agent across its customer-support channels (chat, phone, and email) without maintaining a parallel pipeline for each modality. Potential downstream effects include faster issue resolution, higher customer-satisfaction scores, and lower operational overhead. These gains matter most for large-scale global operations, where multilingual translation, real-time transcription, and image-based troubleshooting can unlock new revenue streams and service capabilities. The technology, while powerful, must be deployed with clear governance and compliance measures to manage privacy, data handling, and bias. As OpenAI and its ecosystem partners move toward broader adoption, responsible innovation is gaining prominence alongside economic benefits.
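A minimal sketch of the one-agent, many-channels idea: each channel is normalized into the same list of content parts, so a single handler serves chat, phone, and email. The `Ticket` schema, the channel names, and the stubbed `answer` function are hypothetical. The content-part shape mirrors the Chat Completions format but should be verified against the API reference, and phone audio is assumed here to arrive as a transcript (an audio-capable model could accept it directly, which this sketch does not show).

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    """A support request from any channel (hypothetical schema)."""
    channel: str  # "chat", "phone", or "email"
    text: str     # message body, email body, or call transcript
    attachments: list[str] = field(default_factory=list)  # image URLs


def to_content_parts(ticket: Ticket) -> list[dict]:
    """Normalize any channel into one list of content parts."""
    parts = [{"type": "text", "text": f"[{ticket.channel}] {ticket.text}"}]
    for url in ticket.attachments:
        parts.append({"type": "image_url", "image_url": {"url": url}})
    return parts


def answer(parts: list[dict]) -> str:
    """Stub standing in for one multimodal model call (hypothetical)."""
    n_images = sum(1 for part in parts if part["type"] == "image_url")
    return f"handled {len(parts)} part(s), {n_images} image(s)"


tickets = [
    Ticket("chat", "App crashes on login"),
    Ticket("email", "Invoice looks wrong", ["https://example.com/invoice.png"]),
    Ticket("phone", "Caller reports error code 42"),
]
for ticket in tickets:
    print(ticket.channel, "->", answer(to_content_parts(ticket)))
```

The design choice is that channel-specific logic stays in a thin adapter, while everything downstream (prompting, guardrails, logging) is shared.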

- Healthcare providers can use GPT-4o to synthesize patient records, interpret medical images, and deliver patient-facing explanations in multiple languages.

- Educational platforms can offer adaptive, multimodal lessons that combine spoken language, written content, and visuals to accommodate diverse learning styles.

- Customer-service organizations can standardize omnichannel experiences, boosting efficiency while maintaining high quality of service.

- Content creators can design interactive experiences where audiences influence the narrative in real time, expanding engagement opportunities.

- Cloud providers and hardware vendors co-create optimized deployment paths, enabling cost-efficient AI at scale.

| Sector | Multimodal Advantage | Examples | Expected Outcomes |
|---|---|---|---|
| Healthcare | Simultaneous text, audio, and image analysis | Radiology reviews, patient communication, telemedicine | Faster diagnostics and more personalized care |
| Education | Interactive visuals integrated with language | Real-time problem-solving, language learning, accessibility | Improved engagement; reduced attainment gaps |
| Enterprise IT | Unified AI workflows across channels | Omnichannel support, automated documentation, compliance tasks | Lower TCO; higher productivity |

For readers following industry shifts, a key takeaway is that the AI ecosystem is becoming a multi-vendor alliance rather than a single-vendor monopoly. OpenAI’s strategic engagement with cloud providers and hardware companies creates a durable platform for AI-powered services that can scale across regions and industries. The conversation around OpenAI’s role in shaping this ecosystem continues to evolve, with ongoing coverage of how major players such as Microsoft, Google, and AWS contribute to capabilities, safety, and deployment best practices. See related analyses discussing AI tooling and conversational experiments with GPT-4o: Engaging in Conversation with GPT-4o Using an Invented Language and GPT-4o the AI Comedian: Humorous Tales.

Technical Architecture and Safety: End-to-End Training and Guardrails

The technical backbone of GPT-4o embodies a shift from multi-pipeline processing to a single, end-to-end trained system that can reason across text, audio, and image streams. This architectural choice helps preserve context, reduce latency, and improve the fidelity of outputs, particularly in cross-modal tasks. Tokenization improvements encode languages with complex scripts, such as Gujarati and Hindi, much more compactly (OpenAI reported roughly 4.4 times fewer tokens for Gujarati and 2.9 times fewer for Hindi on sample text), which translates into faster inference and lower operational costs. While the gains are substantial, they are matched by a rigorous approach to safety and governance. OpenAI has built safety into every modality through data filtering during training, post-training refinement of the model’s behavior, and new systems that place guardrails on voice outputs. The safety framework is not static; it evolves through continuous red-teaming, user feedback, and formal evaluations that identify and mitigate potential risks. This dynamic approach aims to minimize bias, preserve user privacy, and ensure robust handling of sensitive content in multilingual contexts. As AI systems become more capable, the balance between capability and responsibility becomes central to long-term adoption by enterprises and public institutions.
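The effect of a more compact tokenizer can be worked through with simple arithmetic: if, for the same text, a new tokenizer produces `new_tokens` instead of `old_tokens`, the compression factor is `old_tokens / new_tokens`, and at an unchanged per-token price the spend falls by the fraction `1 - new_tokens / old_tokens`. The token counts below are hypothetical placeholders for two unnamed languages; real counts should come from running a tokenizer library (for example, OpenAI’s `tiktoken` package) on the reader’s own text.

```python
def compression_factor(old_tokens: int, new_tokens: int) -> float:
    """How many times fewer tokens the new tokenizer needs for the same text."""
    if new_tokens <= 0:
        raise ValueError("new_tokens must be positive")
    return old_tokens / new_tokens


def cost_saving(old_tokens: int, new_tokens: int) -> float:
    """Fraction of per-token spend saved, assuming the price per token is unchanged."""
    if old_tokens <= 0:
        raise ValueError("old_tokens must be positive")
    return 1 - new_tokens / old_tokens


# Hypothetical token counts for one sample sentence in each language.
samples = {
    "language_a": (120, 30),
    "language_b": (60, 24),
}
for lang, (old, new) in samples.items():
    print(f"{lang}: {compression_factor(old, new):.1f}x fewer tokens, "
          f"{cost_saving(old, new):.0%} lower token cost")
```

Because generation time grows with the number of tokens produced, fewer tokens for the same content also tend to mean quicker responses, though actual speed depends on the serving infrastructure.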

- End-to-end training across text, audio, and images enables unified context handling.

- New safety layers address voice outputs, profanity filtering, and multi-speaker settings.

- Continuous evaluation and red-teaming help identify and remediate vulnerabilities before wide release.

- Improved multilingual tokenization expands access without sacrificing precision.

| Safety Layer | Purpose | Examples | Impact |
|---|---|---|---|
| Data Filtering | Keeps harmful content out of training data | Curated datasets, bias auditing | Reduces model amplification of harmful patterns |
| Voice Output Guardrails | Prevents unsafe or inappropriate vocal expressions | Context-aware tone control, profanity detection | Safer user interactions in voice modes |
| Post-Training Fine-Tuning | Refines behavior based on user feedback | Human-in-the-loop assessments; red-teaming | Improved alignment with user intent |
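The layered idea in the table can be illustrated at the application level: a request passes an input check, then the model, then an output check, and any layer can stop or alter it. This is a toy sketch, not OpenAI’s internal safety system. The term lists and the `fake_model` stub are hypothetical placeholders, and a production system would use trained classifiers or a moderation service rather than keyword matching.

```python
from typing import Callable, Optional

# Hypothetical placeholder term lists; real systems use trained classifiers.
BLOCKED_INPUT_TERMS = {"credit card dump"}
BLOCKED_OUTPUT_TERMS = {"badword"}


def input_filter(text: str) -> Optional[str]:
    """Return a refusal message if the request should not reach the model."""
    lowered = text.lower()
    for term in sorted(BLOCKED_INPUT_TERMS):
        if term in lowered:
            return f"input blocked: {term!r}"
    return None


def output_filter(text: str) -> str:
    """Mask blocked words in the reply before it is shown or spoken."""
    masked = []
    for word in text.split():
        core = word.lower().strip(".,!?")
        masked.append("***" if core in BLOCKED_OUTPUT_TERMS else word)
    return " ".join(masked)


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Input check, then the model, then an output check."""
    refusal = input_filter(prompt)
    if refusal is not None:
        return refusal
    return output_filter(model(prompt))


def fake_model(prompt: str) -> str:
    """Stub standing in for a real model call."""
    return f"Badword! Here is an answer about {prompt}."


print(guarded_call("tokenizers", fake_model))
print(guarded_call("Please share a credit card dump", fake_model))
```

For voice output, the same output check would run on the text before speech synthesis, or on a transcript of generated audio; this sketch handles text only.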

From a governance perspective, the safety architecture of GPT-4o is designed to scale with deployment. Enterprises considering integration benefit from clearer boundaries around data handling, privacy, and regulatory compliance. The model’s expansion into non-English languages also raises considerations around cultural context and bias that the safety framework seeks to address through diverse evaluation cohorts and multilingual testing. The broader AI landscape, including players like Meta and IBM, contributes to a parallel track of safety research and standardization. As a practical matter, developers should implement guardrails and monitoring dashboards to ensure that multimodal capabilities are used responsibly and transparently. This is not only about risk reduction; it is also about building trust with users who rely on AI for critical decisions in healthcare, education, and public services. For those seeking deeper technical perspectives on GPT-4o’s architecture and safety mechanisms, the following article series offers a rigorous, research-oriented look, connected to the broader ecosystem: GPT-4o Safety and Expressiveness and Multilingual and Multimodal Interaction Study.
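As a starting point for the monitoring dashboards mentioned above, a team might log each request with its modality and whether any guardrail intervened, then track the flag rate per modality. The `Event` schema, the sample log, and the 5% alert threshold are hypothetical choices for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One logged request (hypothetical schema)."""
    modality: str  # e.g. "text", "audio", or "image"
    flagged: bool  # True if any guardrail intervened


def flag_rates(events: list[Event]) -> dict[str, float]:
    """Share of requests, per modality, on which a guardrail fired."""
    totals: dict[str, int] = defaultdict(int)
    flagged: dict[str, int] = defaultdict(int)
    for event in events:
        totals[event.modality] += 1
        flagged[event.modality] += int(event.flagged)
    return {m: flagged[m] / totals[m] for m in totals}


def alerts(rates: dict[str, float], threshold: float = 0.05) -> list[str]:
    """Modalities whose flag rate exceeds a hypothetical review threshold."""
    return sorted(m for m, rate in rates.items() if rate > threshold)


# Hypothetical sample log: 100 text requests and 20 audio requests.
log = ([Event("text", False)] * 97 + [Event("text", True)] * 3
       + [Event("audio", False)] * 18 + [Event("audio", True)] * 2)
rates = flag_rates(log)
print(rates, alerts(rates))
```

Tracking rates per modality, rather than one global number, makes it easier to spot a problem that appears only in voice or image traffic.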

Industry Ecosystem and Partners: OpenAI, Microsoft, Google, and Beyond

The ecosystem around GPT-4o is shaped by a network of collaborations, integrations, and co-developed capabilities with leading tech ecosystems. OpenAI’s collaboration with Microsoft continues to anchor enterprise adoption through Azure integrations, productivity tools, and enterprise-grade governance. This synergy accelerates time-to-value for organizations seeking scalable AI-powered workflows, while ensuring compliance and security alignment with enterprise IT environments. In parallel, the broader tech landscape—featuring Google, Amazon Web Services, Nvidia, Meta, IBM, and DeepMind—contributes to a vibrant research and deployment ecosystem. Each partner brings complementary strengths: cloud infrastructure, model deployment optimization, hardware acceleration, research into safety and alignment, and industry-specific use cases. The net effect is a dynamic market where developers can access robust APIs, optimized tooling, and cross-platform interoperability. For developers and teams evaluating GPT-4o, the pathway often involves prototyping in sandbox environments, validating multilingual and multimodal scenarios, and then scaling through cloud partners that provide governance, data residency, and performance tuning. Readiness for real-world deployment frequently hinges on factors such as latency, reliability, data privacy, and cost control, all of which are areas where the OpenAI ecosystem continues to invest.
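The readiness factors listed above (latency, reliability, data privacy, and cost) can be turned into an explicit go/no-go check at the end of a pilot. In this sketch the `PilotStats` fields, the thresholds, and the choice of a nearest-rank 95th percentile for latency are all hypothetical decisions a team would set for itself.

```python
import math
from dataclasses import dataclass


@dataclass
class PilotStats:
    """Measurements collected during a pilot (hypothetical schema)."""
    latencies_ms: list[float]    # observed end-to-end response times
    errors: int                  # failed or unusable responses
    requests: int
    cost_per_1k_requests: float  # USD, measured during the pilot
    data_residency_ok: bool      # confirmed with the cloud provider


def p95(values: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def readiness(stats: PilotStats, max_p95_ms: float = 1000.0,
              max_error_rate: float = 0.02, max_cost: float = 20.0) -> list[str]:
    """Return the failed checks; an empty list means ready to scale."""
    failures = []
    if p95(stats.latencies_ms) > max_p95_ms:
        failures.append("latency")
    if stats.errors / stats.requests > max_error_rate:
        failures.append("reliability")
    if not stats.data_residency_ok:
        failures.append("privacy")
    if stats.cost_per_1k_requests > max_cost:
        failures.append("cost")
    return failures


pilot = PilotStats([300.0] * 19 + [1500.0], errors=1, requests=200,
                   cost_per_1k_requests=12.5, data_residency_ok=True)
print(readiness(pilot))
```

Returning the list of failed checks, rather than a single boolean, tells the team which area to fix before the next pilot round.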

- OpenAI and Microsoft collaborate on Azure integrations, enterprise tooling, and governance.

- Google, Anthropic, and Cohere contribute to safety research, benchmarking, and multilingual capabilities.

- AWS, Nvidia, Meta, IBM, and DeepMind extend the hardware, software, and research ecosystems for scalable deployment.

- Independent developers benefit from a rich API marketplace, sample apps, and open datasets to accelerate experimentation.

For readers seeking real-world narratives and demonstrations of GPT-4o in action, several case studies and creative explorations are available. Examples include articles on conversational experiments with invented languages, AI-driven storytelling, and comparisons between GPT-3.5 and GPT-4o as a basis for monetization strategies. Explore these perspectives to understand how multimodal AI evolves from theory to practice: Invented Language Conversations with GPT-4o and GPT-4o as a Comedian: Humorous Tales.

FAQ

What sets GPT-4o apart from GPT-4 and GPT-3.5?

GPT-4o introduces real-time, end-to-end multimodal processing that unifies text, audio, and vision in a single neural network. It delivers faster response times, enhanced multilingual capabilities, and more expressive outputs, especially in audio. Because it no longer chains separate transcription, language, and speech models, it avoids the latency that such pipelines added and broadens use cases across industries.

How can organizations begin deploying GPT-4o safely and cost-effectively?

Start with prototype deployments on trusted cloud platforms, implement guardrails for data privacy and content safety, and track token usage per request to forecast and control costs, which benefit from the more efficient tokenizer in many non-English languages. Validate multilingual performance and cross-modal reliability in controlled pilots before scaling. Where enterprise governance is required, ecosystem partners such as Microsoft (through Azure OpenAI Service) provide deployment and governance tooling.
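A controlled multilingual pilot can be as simple as a fixed set of test prompts per language, each graded pass or fail by reviewers, with wider rollout allowed only for languages that clear an agreed pass rate. The grading data and the 90% threshold below are hypothetical.

```python
from collections import Counter


def pass_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Per-language share of test cases that reviewers marked as passing."""
    total = Counter(lang for lang, _ in results)
    passed = Counter(lang for lang, ok in results if ok)
    return {lang: passed[lang] / total[lang] for lang in total}


def ready_languages(rates: dict[str, float], threshold: float = 0.9) -> list[str]:
    """Languages cleared for wider rollout under a hypothetical threshold."""
    return sorted(lang for lang, rate in rates.items() if rate >= threshold)


# Hypothetical reviewer grades: (language code, passed?)
results = ([("en", True)] * 19 + [("en", False)]
           + [("hi", True)] * 17 + [("hi", False)] * 3
           + [("gu", True)] * 20)
rates = pass_rates(results)
print(rates, ready_languages(rates))
```

Languages that miss the threshold stay in the pilot for further prompt or workflow adjustments instead of blocking the whole rollout.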

What industries are most likely to benefit first from GPT-4o?

Healthcare for imaging and multilingual patient communication; education for immersive, multimodal lessons; customer service for omnichannel interactions; and media/creativity for interactive, audience-driven experiences. The shared advantages include faster workflows, improved accessibility, and richer user engagement.

How does GPT-4o handle safety and bias across languages and modalities?

Safety is embedded across data curation, model alignment, and specialized guardrails for voice outputs. Continuous red-teaming and user feedback cycles identify risks and guide updates. Multilingual testing and diverse datasets help reduce language-specific biases, while governance tools support compliant deployment across regions.