Summary

In 2025, the AI landscape is defined by a growing vocabulary that blends foundational concepts with real-world deployments. This guide navigates key terms—from data, models, and learning paradigms to the ethical and operational considerations that accompany modern AI systems. Readers will discover how terminology translates into practice across prominent platforms and vendors such as OpenAI, Google AI, DeepMind, IBM Watson, Microsoft Azure AI, Amazon Web Services AI, NVIDIA AI, Hugging Face, DataRobot, and Anthropic. The aim is not merely to memorize words, but to connect terms to tangible use cases, governance frameworks, and decision-making processes in today’s technology ecosystem.

Brief

This article is structured into five in-depth sections, each exploring a facet of AI terminology with concrete examples, case studies, and practical tips for practitioners, leaders, and students. Expect rich explanations, searchable definitions, and actionable guidance that reflects the AI developments shaping industry in 2025. Throughout, terms are linked to current contexts—cloud platforms, research labs, and industry deployments—so readers can relate vocabulary to what they actually see in the market, from predictive analytics to responsible AI governance. References to industry players such as OpenAI, Google AI, and NVIDIA AI illustrate how terminology surfaces in tools, services, and partnerships across the AI stack. For deeper dives, you can follow the linked articles and resources embedded in the text.

In brief

- Terminology spans data, models, training, evaluation, deployment, and governance.

- Practical contexts connect terms to platforms like OpenAI, Google AI, and NVIDIA AI.

- Ethics and transparency are integral to understanding modern AI practice, not afterthoughts.

- Cross-industry relevance means terms appear in finance, healthcare, manufacturing, and digital media.

- Curated links and case studies provide pathways to deepen knowledge beyond definitions.

Foundations of AI Terminology: From Data to Models

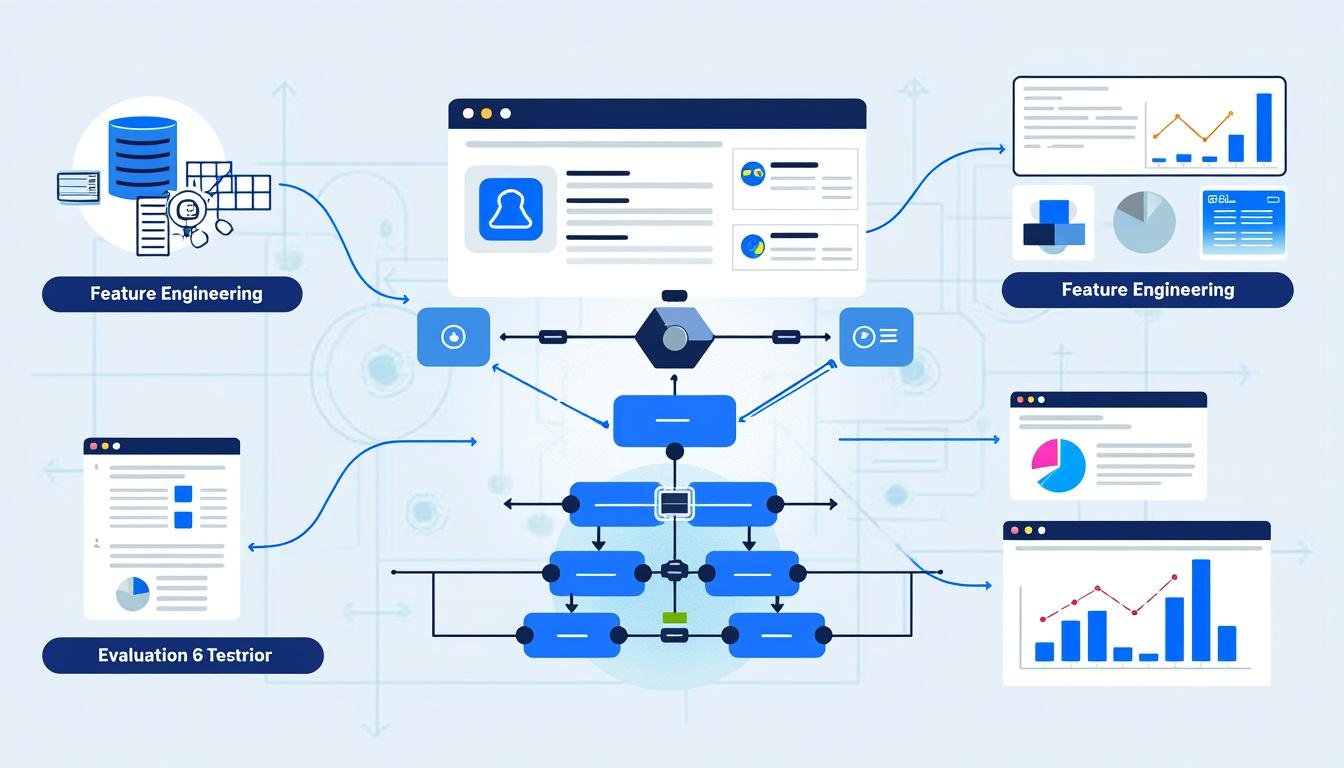

In the realm of artificial intelligence, terms coevolve with practice. The basic axis runs from data and features to models that learn from those data, producing predictions, decisions, or recommendations. A solid grasp of this axis helps professionals parse proposals, audits, and vendor claims with greater confidence. At the core, data represents measurements or observations collected from real-world processes, sensors, or human input. Features are the attributes extracted from raw data that feed learning algorithms. This extraction process, often automated, transforms messy, high-dimensional inputs into a form that is more amenable to pattern discovery. The quality of data and feature engineering directly influences the performance of any subsequent model.
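The step from raw data to features can be illustrated with a minimal sketch. It assumes a hypothetical list of temperature readings from a single sensor and uses z-score normalization as one common way to turn raw measurements into a feature; the function name `zscore` and the sample values are illustrative, not taken from any particular platform.

```python
from statistics import mean, pstdev


def zscore(values):
    """Rescale readings to zero mean and unit variance (population std)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All readings identical: no variation to encode.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical raw data: temperature readings (degrees Celsius) from one sensor.
raw_temperatures = [20.0, 22.0, 24.0, 26.0, 28.0]

# Derived feature: the same readings on a scale a model can compare across sensors.
features = zscore(raw_temperatures)
print([round(f, 3) for f in features])  # [-1.414, -0.707, 0.0, 0.707, 1.414]
```

Normalization is only one form of feature engineering; which transformation is appropriate depends on the data and the model.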

Beyond data and features lie the models, which are mathematical or statistical constructs that map inputs to outputs. In practice, models may take many shapes: from linear models that capture straightforward trends to complex deep neural networks capable of recognizing subtle patterns in unstructured data such as images or text. The choice of model architecture is guided by task requirements, data availability, and computational constraints. In 2025, pre-trained models and transfer learning have become ubiquitous, enabling practitioners to adapt general-purpose foundations to specialized tasks with less labeled data than before. This shift has important implications for practitioners who must understand not only model capabilities but also biases and failure modes that can emerge when applying broad architectures to specific contexts.

To navigate this landscape effectively, it helps to view AI terminology through a few recurring families of terms that surface across literature and vendor documentation. The first family centers on learning paradigms such as supervised, unsupervised, semi-supervised, and reinforcement learning. Each paradigm represents a different relationship between data, labels, and feedback. A second family concerns evaluation metrics—accuracy, precision, recall, F1 score, ROC-AUC, perplexity, BLEU, or human-in-the-loop evaluations—that quantify how well a model performs on a given task. A third family covers deployment concerns—inference efficiency, latency, scalability, and monitoring for drift or anomalous behavior. Finally, governance terms such as explainability, transparency, and fairness define the expectations for how models justify their outputs and how risk is managed in production.

In practice, teams continually translate these terms into workflows: data pipelines, feature stores, model registries, evaluation dashboards, and monitoring systems. The landscape is shaped by the actions of influential players and communities, including OpenAI and Google AI, which push breakthroughs in model capabilities, as well as DeepMind and Anthropic, which emphasize alignment and safety. The ecosystem also features platforms that accelerate development and deployment, such as Microsoft Azure AI, Amazon Web Services AI, and NVIDIA AI tooling, alongside frameworks from Hugging Face that democratize access to state-of-the-art architectures. To ground these concepts, consider a real-world scenario: a retail company builds a predictive maintenance model using historical sensor data. Data quality, feature engineering, and careful selection of a learning paradigm determine whether the model delivers reliable maintenance alerts or sporadic false positives. The model’s lifecycle—from training to deployment to monitoring—illustrates how terminology translates into value and risk management.

Below is a compact table that anchors the principal terms introduced in this section, with a quick reference to what they mean and how they appear in practice.

| Term | Definition | Practical Context |

|---|---|---|

| Data | Raw measurements or observations used to train models. | Sensor logs, transaction records, images, text corpora. |

| Features | Attributes extracted from data that enable learning. | Normalized sensor readings, word embeddings, pixel intensities. |

| Model | Mathematical structure that maps inputs to outputs. | Linear regression, convolutional networks, transformer models. |

| Supervised Learning | Learning from labeled examples to predict outcomes. | Image classification, sentiment analysis with labeled data. |

| Unsupervised Learning | Infers structure from unlabeled data (clustering, dimensionality reduction). | Customer segmentation, anomaly detection without labels. |

| Reinforcement Learning | Learning by interacting with an environment to maximize rewards. | Game playing, robotics, optimization under uncertainty. |

In this section, readers gain a vocabulary map that ties terms to concrete concepts and workflows. The landscape is dynamic, with ongoing advancements from OpenAI and Google AI redefining what is possible and how quickly. As you become proficient with these foundations, you’ll be better equipped to assess proposals, engage with vendors, and structure projects that emphasize data quality, appropriate modeling choices, and robust governance. For a deeper dive into broad AI terminology, you may explore resources like Understanding the Language of Artificial Intelligence and The Lexicon of Artificial Intelligence.

Key subtopics: learning paradigms and model evaluation

The learning paradigm chosen for a project shapes not only data requirements but also the way success is measured. In supervised learning, labeled examples teach the model to map inputs to desired outputs, a process often augmented by cross-validation and test sets to ensure generalization. In unsupervised learning, the absence of labels pushes algorithms to uncover latent structure—think clustering customers by behavior patterns or reducing dimensionality to visualize complex data. Reinforcement learning introduces an agent that learns through trial and error, guided by reward signals, a framework highly relevant for dynamic decision-making tasks such as robotic control or strategy games. Evaluation metrics provide the yardstick by which performance is judged, including accuracy, F1 score, ROC-AUC for classification tasks, perplexity for language models, or BLEU scores for translation. These metrics are subject to bias, thresholds, and context; thus, practitioners must design evaluation protocols that reflect real-world use and user expectations.
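To make the classification metrics above concrete, here is a minimal sketch that computes accuracy, precision, recall, and F1 from scratch for a binary task. The labels and predictions are made-up sample data, and the helper name `classification_metrics` is an assumption for illustration; production code would more likely rely on an established library.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this sample there are three true positives, one false positive, and one false negative, so all four metrics come out to 0.75. On imbalanced data the metrics usually diverge, which is why accuracy alone can mislead.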

In practice, a successful project blends data quality, feature engineering, and an appropriate learning paradigm with rigorous evaluation. The decisions made in the early stages—data collection strategies, labeling quality, and the choice of model architecture—have long-lasting consequences for bias, fairness, and interpretability. Industry players like IBM Watson and Anthropic emphasize alignment and safety alongside capability, while cloud platforms such as Microsoft Azure AI and Amazon Web Services AI provide end-to-end pipelines that support teams through the entire lifecycle from data ingestion to monitoring. The practical takeaway is clear: terminology is a bridge between theoretical constructs and real-world outcomes. By understanding the terms, you can design better experiments, communicate clearly with stakeholders, and implement governance practices that reduce risk while enabling innovation.

Related reading can illuminate the terminology further. For example, see discussions on AI terminology and jargon, which connect language to practice in accessible ways: Understanding the Jargon: A Guide to AI Terminology and Understanding the Language of Artificial Intelligence. The evolving vocabulary continues to be shaped by industry milestones and research breakthroughs across OpenAI, Google AI, and beyond.

Practical takeaway: Start with a data-centric mindset, define clear evaluation criteria, and select learning paradigms aligned with your data realities and business constraints. This approach minimizes overpromising and anchors your team in a robust terminology framework.

Table: principal terms and quick references

| Term | Context | Why it matters |

|---|---|---|

| Data | Any information used for training or evaluation. | Quality drives model performance and trust. |

| Feature | Processed attributes that feed models. | Good features simplify learning and improve accuracy. |

| Model | Mathematical mapping from inputs to outputs. | Choice of architecture affects capability and bias. |

| Supervised | Learning from labeled examples. | Clear targets accelerate learning, but labeling cost matters. |

| Reinforcement Learning | Learning via interaction with an environment. | Well-suited for sequential decision tasks with delayed rewards. |


Transition to core algorithms

With a solid grounding in data and models, the next step is to understand the learning paradigms that drive AI systems. The main families—supervised, unsupervised, semi-supervised, and reinforcement learning—offer different mechanisms for extracting value from data, each with its own set of trade-offs and use cases. As the industry grows more interconnected, you’ll often hear about transfer learning, fine-tuning, and prompt engineering, which enable rapid adaptation of large models to specific tasks. The practical implication is that teams must articulate not only what model to build, but how to adapt and maintain it over time, given evolving data and user feedback. Vendors such as OpenAI, Google AI, and Anthropic continually refine these approaches, providing developers with robust tooling to accelerate experimentation while enabling governance and safety controls.

Core Algorithms and Learning Paradigms in AI

Modern AI stands on a portfolio of algorithms and learning paradigms that collectively empower a broad range of applications. The breadth of these approaches reflects the diversity of data, tasks, and performance criteria encountered in real-world settings. This section surveys the primary families—supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning—while also touching on less traditional but increasingly relevant methods such as self-supervised learning and generative modeling. The intent is to equip readers with a clear taxonomy that can guide project planning, vendor selection, and risk assessment in 2025. Each paradigm is illustrated with examples, practical caveats, and pointers to notable platforms and players in the AI ecosystem, including IBM Watson and NVIDIA AI, which provide hardware-accelerated and software-enabled pathways for experimentation and deployment.

Supervised learning relies on labeled data to map inputs to outputs. This approach remains the backbone of many business tasks, from fraud detection to healthcare diagnostics. A critical factor is the labeling quality and the representativeness of the labeled set. The consequences of biased labeling are real: biased models can amplify existing disparities if not carefully managed. In contrast, unsupervised learning seeks to uncover latent structure in unlabeled data. Clustering and dimensionality reduction are classic techniques that help organizations discover patterns such as customer segments or semantic groupings in documents. Semi-supervised learning lies between these extremes, leveraging a small labeled subset to guide learning on a larger unlabeled corpus, a strategy that often reduces labeling costs while preserving performance. Reinforcement learning emphasizes sequential decision-making, where an agent learns policy by interacting with an environment and receiving feedback signals or rewards. This paradigm shines in robotics, game playing, and operational optimization where the right sequence of actions yields long-term benefits.
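As an illustration of unsupervised learning, the sketch below clusters unlabeled values with a bare-bones one-dimensional k-means. The monthly spend figures are hypothetical, the function names are illustrative, and the deterministic initialization (spreading starting centroids across the sorted data) is a simplification chosen for reproducibility rather than a standard recommendation.

```python
def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            for p in points]


def kmeans_1d(points, k, iterations=20):
    """Very small k-means for one-dimensional data."""
    ordered = sorted(points)
    # Simplified deterministic init: spread centroids across the sorted data.
    step = (len(ordered) - 1) / max(k - 1, 1)
    centroids = [float(ordered[round(i * step)]) for i in range(k)]

    for _ in range(iterations):
        labels = assign(points, centroids)
        updated = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            # Keep the old centroid if a cluster ends up empty.
            updated.append(sum(members) / len(members) if members else centroids[i])
        if updated == centroids:
            break  # converged
        centroids = updated
    return centroids, assign(points, centroids)


# Hypothetical unlabeled data: monthly spend per customer.
monthly_spend = [12, 15, 14, 95, 102, 99, 13, 101]

centroids, labels = kmeans_1d(monthly_spend, k=2)
print(centroids)  # [13.5, 99.25]
print(labels)     # [0, 0, 0, 1, 1, 1, 0, 1]
```

No labels were supplied, yet the algorithm separates low spenders from high spenders. Interpreting what each segment means remains a human task.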

To connect theory with practice, consider how cloud providers facilitate these paradigms. For instance, Microsoft Azure AI and Amazon Web Services AI offer managed services for training and deployment, while specialized research ecosystems from DeepMind and Anthropic advance safety and alignment in increasingly capable models. Critical evaluation metrics—such as accuracy, precision, recall, F1 score, and area under the ROC curve for classification tasks—are essential in comparing models and communicating performance to stakeholders. For researchers and engineers, a practical playbook includes robust data governance, careful feature engineering, and explicit consideration of bias, fairness, and interpretability throughout the model lifecycle. The goal is to balance capability with responsibility, ensuring models deliver meaningful value without compromising user trust or safety.

In this section, we highlight a compact table summarizing paradigms, typical tasks, and representative examples in 2025. This serves as a quick reference for those who want to align terminology with tangible outcomes in projects or procurement conversations.

| Paradigm | Core Idea | Representative Tasks |

|---|---|---|

| Supervised | Learning from labeled pairs to map inputs to outputs | Image classification, medical image analysis, fraud detection |

| Unsupervised | Discovering structure from unlabeled data | Clustering, anomaly detection, dimensionality reduction |

| Semi-supervised | Combines limited labels with large unlabeled data | Text classification with few labeled samples, semi-supervised segmentation |

| Reinforcement Learning | Agent learns via interaction to maximize cumulative reward | Robotics control, game-playing, autonomous systems |

| Self-supervised | Creates supervision from the data itself | Masked language modeling, contrastive learning, representation learning |
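The self-supervised row can be made concrete with a small sketch of how masked language modeling derives training pairs from unlabeled text. This is a deliberate simplification: it splits on whitespace and masks each word in turn, whereas real systems typically mask a random subset of subword tokens. The `[MASK]` token string and the function name are assumptions for illustration.

```python
def masked_examples(sentence, mask_token="[MASK]"):
    """Build (masked input, target word) pairs from one unlabeled sentence."""
    tokens = sentence.split()
    examples = []
    for i, target in enumerate(tokens):
        # Hide one word; the hidden word itself becomes the label.
        masked = tokens[:i] + [mask_token] + tokens[i + 1:]
        examples.append((" ".join(masked), target))
    return examples


pairs = masked_examples("models learn from data")
for masked_input, target in pairs:
    print(f"{masked_input!r} -> {target!r}")
```

The supervision signal comes entirely from the text itself, so no human labeling is required.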

As you consider these paradigms, remember that the practical deployment often requires a blend of approaches, domain-specific adjustments, and thoughtful instrumentation for monitoring. Notable AI platforms—such as Hugging Face for accessible transformer models, DataRobot for automated machine learning, and enterprise-grade systems from IBM Watson and AWS—provide pipelines that help teams implement these paradigms with governance, auditability, and scalability. For readers who want a broader tour of the field, a set of curated readings and tutorials is available through linked resources, including deep dives into language models, vision systems, and multimodal architectures.

Practical takeaway: Map your task to a learning paradigm, ensure label quality where required, and design evaluation schemes that reflect real-world use. In addition, engage with knowledge bases and communities maintained by the AI ecosystem, and keep an eye on safety and alignment research from leading labs and organizations to anticipate future regulatory and ethical expectations.

Open access resources and vendor whitepapers can provide quick orientation on terminology. For example, the glossaries noted earlier, such as the AI terminology guides and the AI Lexicon, offer concise overviews that connect theoretical terms to practical applications.

Key takeaway: Terminology serves as a shared language to align expectations among data scientists, business stakeholders, and customers while guiding responsible and effective AI deployments.

Subsection: learning paradigms and evaluation mechanics

Understanding the interplay between data quality, labels, model choice, and evaluation criteria is crucial for project success. In 2025, the boundaries between paradigms are increasingly permeable thanks to transfer learning, fine-tuning of large models, and prompt-based systems that adapt to a wide array of tasks with minimal task-specific data. Practitioners must be careful to distinguish capabilities from guarantees: a model might perform well on benchmark metrics but fail in deployed environments due to distribution shift or unseen bias. This awareness informs governance practices that require continuous monitoring, bias audits, and transparent reporting. Vendors and platforms such as Google AI, OpenAI, and Microsoft Azure AI offer tooling to help teams implement these practices at scale, while Hugging Face fosters community-driven sharing of models and evaluation datasets. The journey from data to deployed AI is iterative, with all terminology serving as a compass to navigate risk and opportunity.
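Distribution shift can be monitored with simple statistics. The sketch below computes a Population Stability Index (PSI) between a training sample and a production sample of one feature. The data, the bin count, and the 0.2 alert threshold are assumptions for illustration; thresholds of this kind are rules of thumb that teams should calibrate for their own context.

```python
import math


def psi(expected, actual, bins=4):
    """Population Stability Index between two samples of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width) if width else 0
            # Clamp values outside the training range into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values seen at training time and in production.
training = [1, 2, 3, 4, 5, 6, 7, 8]
production = [5, 6, 6, 7, 7, 8, 8, 9]

score = psi(training, production)
ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb value, not a universal standard
print(f"PSI = {score:.2f}, drift alert: {score > ALERT_THRESHOLD}")
```

A score near zero means the two samples fall into the bins in similar proportions; larger values suggest the production data has moved away from what the model saw during training.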

For readers seeking to connect terminology with a broader ecosystem, consider exploring additional resources that unpack AI concepts from multiple perspectives, including practical tutorials on intimidating terms and their real-world meanings. The journey involves mapping theory to practice, while staying mindful of ethical implications and governance requirements that are growing more prominent in today’s deployments.

Models, Tools, and Platforms Shaping the Landscape

The AI landscape around 2025 is characterized by a constellation of platforms, models, and tooling ecosystems that accelerate development and scale performance. This section surveys notable players and how their contributions intersect with terminology. Vendors and communities not only supply models but also provide abstractions—APIs, pipelines, and governance dashboards—that translate terminology into deployable capabilities. Among the major actors, familiar names such as OpenAI and Google AI frequently push capabilities forward, while enterprises rely on cloud-native services from Microsoft Azure AI and Amazon Web Services AI to operationalize models at scale. For on-device and edge scenarios, hardware-accelerated ecosystems from NVIDIA AI intersect with software tooling to enable real-time inference. Across this spectrum, Hugging Face and DataRobot serve as hubs for community models, automated ML workflows, and model evaluation resources. The ultimate objective is to translate dense academic terminology into actionable configuration, deployment, and governance decisions that align with business goals and user expectations.

In practical terms, consider how an enterprise might choose between a hosted API from OpenAI or a custom model built with libraries from Hugging Face. Decision factors include latency requirements, data privacy constraints, cost structures, and governance needs. A retailer using IBM Watson or Anthropic for safety-focused language tasks may rely on alignment tools that reduce conversation risks, while a manufacturing company might lean on Microsoft Azure AI for integrated monitoring, telemetry, and automated ML pipelines. The merging of terms with tangible tools is evident in daily development workflows, where a table of terms becomes a configuration checklist: model names, endpoints, evaluation metrics, and drift monitoring thresholds are all expressed through a shared vocabulary that engineers, data scientists, and business leaders can act upon.
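The idea of a configuration checklist can be sketched as a small data structure. Everything here is hypothetical: the class name `DeploymentChecklist`, the model name, the placeholder endpoint URL, and the threshold values are illustrative assumptions, not any vendor's schema.

```python
from dataclasses import dataclass


@dataclass
class DeploymentChecklist:
    """Shared vocabulary expressed as configuration (hypothetical schema)."""
    model_name: str
    endpoint: str
    evaluation_metrics: dict  # metric name -> minimum acceptable value
    drift_threshold: float    # maximum tolerated drift score

    def failing_metrics(self, observed):
        """Return names of metrics that fall below their minimum."""
        return sorted(
            name for name, minimum in self.evaluation_metrics.items()
            if observed.get(name, 0.0) < minimum
        )

    def needs_review(self, observed, drift_score):
        """Flag the deployment if any metric fails or drift is too high."""
        return bool(self.failing_metrics(observed)) or drift_score > self.drift_threshold


checklist = DeploymentChecklist(
    model_name="churn-classifier-v3",               # hypothetical name
    endpoint="https://example.invalid/v1/predict",  # placeholder URL
    evaluation_metrics={"f1": 0.80, "roc_auc": 0.85},
    drift_threshold=0.2,
)

observed = {"f1": 0.83, "roc_auc": 0.81}
failing = checklist.failing_metrics(observed)
review = checklist.needs_review(observed, drift_score=0.05)
print(failing, review)  # ['roc_auc'] True
```

Encoding thresholds this way gives engineers and business stakeholders one artifact to review, rather than separate, informal understandings of what "good enough" means.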

To ground these ideas, the following table provides a snapshot of platform strengths and typical use cases as of 2025. It reflects how terminology translates into concrete decisions about architecture, data handling, and governance across the AI landscape.

| Platform | Strengths | Typical Use Cases |

|---|---|---|

| OpenAI | Advanced natural language capabilities, API access | Text generation, chatbots, content reasoning |

| Google AI | Scale, research depth, interoperability | Search-integrated AI, multimodal tasks |

| DeepMind | Research-first approach, safety and alignment | Strategic decision-making, game-like simulations |

| IBM Watson | Industry-grade governance, explainability | Healthcare analytics, enterprise decision support |

| Microsoft Azure AI | End-to-end pipelines, enterprise security | Model deployment, monitoring, MLOps integrations |

| Amazon Web Services AI | Scalability, data lake integrations | Batch training, real-time inference in cloud |

| NVIDIA AI | Hardware acceleration, optimized training | Large-scale training, real-time inference |

| Hugging Face | Community models, accessibility | Model discovery, rapid experimentation |

| DataRobot | Automated ML workflows, governance | Business analytics, rapid prototyping |

| Anthropic | Alignment-focused safety features | Responsible AI, user-facing assistants with guardrails |

In this section, the emphasis is on how terminology informs tool choices and implementation patterns. For readers seeking deeper context, the following sources offer essential perspectives on AI tooling and terminology as used by industry leaders: Power of Action Language, and Understanding the Language of AI. Industry players such as OpenAI, Google AI, DeepMind, and IBM Watson frequently publish updates that nuance terminology and its application in real-world projects. This ecosystem also includes hardware and software ecosystems from NVIDIA AI and Microsoft Azure AI, which bridge theory and production through optimized environments.

Key takeaway: Terminology is not abstract jargon but a practical map; it guides design decisions, risk assessments, and collaborative communication across teams and partners. When you read a proposal or a case study, use the vocabulary to check alignment between claimed capabilities, data handling, and governance controls. For further exploration, you can consult AI vocabulary and technical glossaries linked above, along with vendor resources describing how to operationalize terminology in scalable architectures.

Further practical reading: quick references to the vendor ecosystems that frequently surface in 2025 discussions include the AI terminology guide and Choosing the Right Course of Action.

Transition note: The next section dives into governance, ethics, and responsible AI, tying terminology to regulatory and organizational expectations that shape how models are built and deployed in the real world.

Ethics, Transparency, and Responsible AI in Practice

The rapid growth of AI capabilities elevates the importance of ethics, transparency, and accountability. In 2025, organizations increasingly adopt governance frameworks that translate abstract principles into concrete policies, audits, and technical controls. This section examines how terminology is operationalized to address bias, fairness, explainability, safety, privacy, and accountability. Real-world deployments must navigate trade-offs between model performance and societal impact, and terminology serves as a scaffold for communicating these trade-offs to stakeholders, regulators, and end-users. The integration of governance into the AI lifecycle is not optional; it is a core design criterion that informs data collection practices, model selection, and monitoring strategies across industries—from healthcare and finance to retail and manufacturing. Vendors such as IBM Watson, Anthropic, and OpenAI have dedicated safety and governance programs, while cloud platforms provide integrated tools for policy enforcement and auditing. The literature and practice emphasize transparency, traceability, and human oversight as essential components of responsible AI.

One guiding frame is the triad of fairness, explainability, and robustness. Fairness focuses on minimizing biases that lead to unequal outcomes across protected groups. Explainability seeks to elucidate how a model reaches a decision, which is critical when models affect high-stakes domains like credit, hiring, or medical treatment. Robustness emphasizes resilience to data shifts, adversarial inputs, and noisy environments. The integration of these aspects requires a blend of data governance, model auditing, and user-centric design. In practice, teams establish checklists that include data provenance, bias audits, model cards, and dashboards for monitoring drift and performance degradation. The ethical considerations are not confined to laboratories; they become part of procurement discussions, vendor contracts, and internal risk management protocols. The collaboration between researchers and industry practitioners—through labs like DeepMind and the safety initiatives at Anthropic—shapes how terminology translates into governance artifacts that guide decisions about deployment, updates, and discontinuation.

Practical examples illustrate how terminology influences governance in real-world settings. A financial institution using AI for credit scoring must define fairness metrics and implement explainability tools to comply with regulatory expectations. A healthcare provider deploying diagnostic assistance must ensure privacy protections and minimize biases that could affect patient outcomes. An online platform deploying content moderation models must balance free expression with the protection of users from harmful content, supported by transparency about moderation policies and model behavior. These scenarios underscore how the terminology map informs policy decisions, technical controls, and stakeholder communications.
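For the credit-scoring scenario, one widely used fairness metric is the disparate impact ratio: each group's approval rate divided by a reference group's approval rate. The sketch below uses made-up decisions for two hypothetical groups and the four-fifths (0.8) heuristic as a screening threshold. It is a first-pass check under stated assumptions, not a legal or regulatory determination.

```python
def selection_rate(decisions):
    """Share of positive decisions (1 = approved, 0 = declined)."""
    return sum(decisions) / len(decisions)


def disparate_impact(decisions_by_group, reference_group):
    """Ratio of each group's selection rate to the reference group's rate."""
    reference_rate = selection_rate(decisions_by_group[reference_group])
    return {
        group: selection_rate(decisions) / reference_rate
        for group, decisions in decisions_by_group.items()
    }


# Hypothetical loan decisions for two applicant groups.
decisions_by_group = {
    "group_a": [1, 1, 1, 0, 1, 0, 1, 1, 0, 1],  # 7 of 10 approved
    "group_b": [1, 0, 0, 1, 0, 0, 1, 0, 0, 0],  # 3 of 10 approved
}

ratios = disparate_impact(decisions_by_group, reference_group="group_a")

# Four-fifths heuristic: ratios below 0.8 are flagged for closer review.
flagged = sorted(group for group, ratio in ratios.items() if ratio < 0.8)
print({g: round(r, 3) for g, r in ratios.items()})  # {'group_a': 1.0, 'group_b': 0.429}
print(flagged)  # ['group_b']
```

A flagged ratio is a prompt for investigation, for example through the bias audits and explainability tools mentioned above, rather than proof of unfair treatment on its own.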

The domain is continually evolving. OpenAI's policy work and Anthropic's safety research illustrate how terminology shifts as safety and alignment concerns gain prominence in product design. Meanwhile, enterprise-grade offerings from Microsoft Azure AI and IBM Watson provide governance features such as model registries, lineage tracking, and monitoring dashboards to operationalize responsible AI. The evolving vocabulary thus reflects both technical advances and the governance infrastructure that ensures AI serves users ethically and safely.

Key action items for practitioners include establishing a formal bias and fairness assessment plan, documenting model explainability features, and building a governance board to oversee deployments. For readers seeking further reading, the linked resources and glossaries provide additional perspectives on how to operationalize terminology within governance programs, with case studies and practical checklists to support decision-making in 2025 and beyond.

Ethical checklists and governance artifacts

- Bias dashboards and fairness metrics in model evaluation

- Model cards and risk assessments for each deployment

- Explainability reports for high-stakes decisions

- Data provenance records and privacy impact analyses

Table: governance dimensions and example controls

| Dimension | Controls/Artifacts | Examples |

|---|---|---|

| Fairness | Bias audits, fairness metrics | Disparate impact analysis, counterfactual evaluation |

| Explainability | Model cards, SHAP/LIME explanations | Feature contribution explanations for loan decisions |

| Privacy | Data minimization, differential privacy | Protected data handling, privacy-preserving inference |

| Safety | Alignment tests, guardrails | Content moderation policies, harmful output detection |

Case references and links provide concrete guidance on responsible AI practices. For a broader view of terminology tied to governance and policy, check resources such as the AI jargon guide and related discussions, which bridge conceptual terms with governance actions. Also, consider how platforms like Microsoft Azure AI and Google AI integrate governance capabilities into their services, enabling organizations to implement safety and transparency controls at scale.

Key takeaway: Ethical and governance considerations are inseparable from AI terminology. The vocabulary you use to discuss risk, accountability, and control shapes the design of policies, the selection of tools, and the governance posture of your organization.

Practical Reading of AI Terminology for 2025 and Beyond

For professionals who want to stay current, this section distills how to read AI terminology in a rapidly changing landscape. The pace of advancement means terms can gain new meaning as technology, policy, and market demands evolve. A practical approach is to build a living glossary within your team, anchored to a handful of trusted sources and updated regularly as models and governance requirements mature. An important aspect of this process is understanding not only the definitions, but their implications for deployment, risk, and user experience. In addition to formal glossaries, developers and decision-makers should engage with vendor documentation, white papers, and community-driven resources that reflect real-world usage across industries. The interface between terminology and practice becomes more tangible when you see how terms map to concrete actions—how to label data effectively, how to instrument evaluation, and how to design governance reviews that reflect stakeholder concerns.

One practical pathway is to explore open resources that connect AI terminology to concrete tasks, such as language models, computer vision, and multimodal systems. A robust reading list can include a mix of foundational texts and current analyses that address how terminology is used in product development, evaluation, and governance. For example, the linked articles discuss how to interpret and apply terminology in business contexts, and how to leverage cloud platforms to operationalize AI while maintaining governance and safety standards. It’s helpful to track updates from OpenAI, Google AI, DeepMind, and Anthropic to understand evolving definitions and new categories as the field advances.

To facilitate ongoing learning, a curated set of concrete resources is offered here. Readers can access practical guidance through these links, which tie terminology to actionable insights and examples: AI terminology guide (key terms), AI lexicon overview, and Exploring the world of computer science. Additionally, learning pathways and decision-making frameworks are outlined in Choosing the right course of action.

Table: recommended resources and how to use them

| Resource | Focus | How to use |

|---|---|---|

| Glossaries and Jargon Guides | Definitions of core terms, common confusions | Glossary-first approach before deep dives |

| Vendor Documentation | Platform-specific terminology and best practices | Use as a reference during design and procurement |

| Case Studies | Applied terminology in real projects | Informs risk management and governance plans |

| Industry Reports | Trends in AI terms and regulatory context | Strategic planning and policy alignment |

Throughout your reading, you will encounter explicit mentions of the major players and platforms that consistently shape terminology in 2025, including OpenAI, Google AI, DeepMind, IBM Watson, Microsoft Azure AI, Amazon Web Services AI, and NVIDIA AI. Each term you encounter can be linked to examples from these ecosystems, which reinforces the practical relevance of vocabulary in real-world deployments. For a broader cultural and historical context, consider how AI terminology mirrors the evolution of computation, data science, and human-machine collaboration across decades.

Final takeaway: A disciplined, practice-oriented approach to terminology, paired with ongoing learning, helps organizations stay aligned with rapid technological change while maintaining safety, fairness, and accountability in AI deployments. The vocabulary is a living instrument; use it to shape strategy, governance, and everyday work across teams.

The FAQ below provides quick clarifications on common questions about AI terminology and usage.

What is the difference between data and features?

Data are the raw inputs (numbers, text, images) collected from real-world processes. Features are representations derived from data that make learning easier for models. Feature engineering transforms raw data into more informative signals for the model.

Why is explainability important in AI?

Explainability helps users and stakeholders understand how a model makes decisions, which is critical for trust, debugging, and regulatory compliance. It often involves techniques that reveal feature contributions or model decisions in context.

How do learning paradigms affect project planning?

The choice of supervised, unsupervised, semi-supervised, or reinforcement learning shapes data labeling needs, evaluation metrics, and deployment strategies. Projects should align paradigm choice with data availability, task requirements, and governance goals.

What role do platforms like OpenAI and Google AI play in terminology?

They drive terminology through new models, APIs, and safety guidelines. Their documentation, research papers, and product announcements help practitioners understand current definitions, capabilities, and governance expectations.