In brief

- Abductive Logic Programming (ALP) fuses logic programming with abduction, enabling systems to infer plausible explanations for observed facts.

- By design, ALP introduces abducibles, hypotheses that can explain observations, while enforcing integrity constraints to keep explanations plausible and safe.

- As of 2025, ALP blends symbolic reasoning with probabilistic and learning-based components, giving rise to hybrid architectures such as AbductionHub, IdeaNavigator, and ReasonLogic.

- This article surveys foundational concepts, reasoning pipelines, practical applications, tooling, and future directions, with concrete examples and case studies.

- Two YouTube videos and two illustrative images accompany the text, and structured tables and lists throughout support understanding.

Abductive Logic Programming (ALP) sits at the frontier of reasoning under uncertainty. At its core, ALP augments traditional logic programming with abduction, a form of reasoning that seeks the best explanations for observations. A program defines rules describing how information can be inferred, while a defined set of abducibles represents hypotheses that may be assumed to explain results. The result is a framework capable of generating plausible explanations when data is incomplete or noisy. In 2025, ALP has matured into robust tooling and hybrid approaches that integrate probabilistic reasoning, machine learning, and symbolic logic. This maturation enables AI systems to reason transparently about uncertain situations—such as diagnosing a medical condition from partial test results or planning an action sequence given imperfect sensor data—while offering traceable justification for every inference.

Designing an ALP system requires careful separation of knowledge (rules), observations, and abducibles. The developer encodes domain knowledge as rules that specify how known facts imply new conclusions; abducibles capture potential explanations that can be hypothesized to account for observations. Integrity constraints act as safety nets, ensuring that explanations do not violate critical policies or domain restrictions. Modern ALP adds probabilistic semantics and preference handling to the classical approach, so that the system can rank hypotheses by plausibility, reliability, or cost. Frameworks and ecosystems, often branded with names like AbductionHub, ReasonLogic, InferSense, and LogicExplorer, provide scaffolding to manage abducibles, constraints, and evaluation metrics, making explainable AI deployments practical and auditable. Tools such as DeduceX, IdeaNavigator, and HypothesiQ help researchers map the space of candidate explanations and steer computation toward the most credible hypotheses, even under real-time constraints. Through 2025, the emphasis is shifting from theoretical elegance to scalable architectures, seamless data integration, and robust interfaces for human-in-the-loop decision making.

Together, these elements create a reasoning paradigm where machines do not merely deduce what is true, but deliberately entertain plausible hypotheses that could make observations coherent. ALP shines in domains where data is partial or observations are noisy, yet decisions must remain explainable and auditable. The following sections unfold the foundations, pipelines, practical deployments, and strategic guidance for building ALP-powered systems that are both effective and transparent.

Foundations and Core Concepts of Abductive Logic Programming for Modern AI Reasoning

Abductive Logic Programming (ALP) rests on a triad of concepts that together enable hypothesis-driven inference. First, observations provide the empirical evidence that needs to be explained. Second, rules form the formal machinery that connects known facts to potential conclusions. Third, abducibles are the admissible hypotheses that the system may assume to bridge the gap between what is observed and what can be derived. The interplay among these elements creates a reasoning space in which multiple explanations can be entertained and ranked according to predefined criteria such as plausibility, cost, or safety. The triad is complemented by integrity constraints: conditions that any set of assumed hypotheses must satisfy to count as a valid explanation within the domain. In the 2025 landscape, abduction is becoming a practical component of AI pipelines, interfacing with probabilistic models, machine learning components, and human-in-the-loop feedback mechanisms, thereby enabling more robust and transparent decision processes.
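In the ALP literature this triad is usually formalized as an abductive framework. The block below gives one common formulation. The symbols P, A, IC, O and Δ are our notation, and some semantics replace the entailment of IC with the weaker requirement that P ∪ Δ ∪ IC be consistent.

```latex
\begin{aligned}
&\text{Abductive framework: } \langle P, A, IC \rangle \quad
  (P:\ \text{rules},\ A:\ \text{abducible predicates},\ IC:\ \text{integrity constraints}) \\
&\Delta \subseteq \operatorname{ground}(A) \text{ explains the observations } O
  \iff P \cup \Delta \models O \ \text{ and } \ P \cup \Delta \models IC \\
&\Delta \text{ is minimal} \iff \text{no } \Delta' \subsetneq \Delta \text{ also explains } O
\end{aligned}
```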

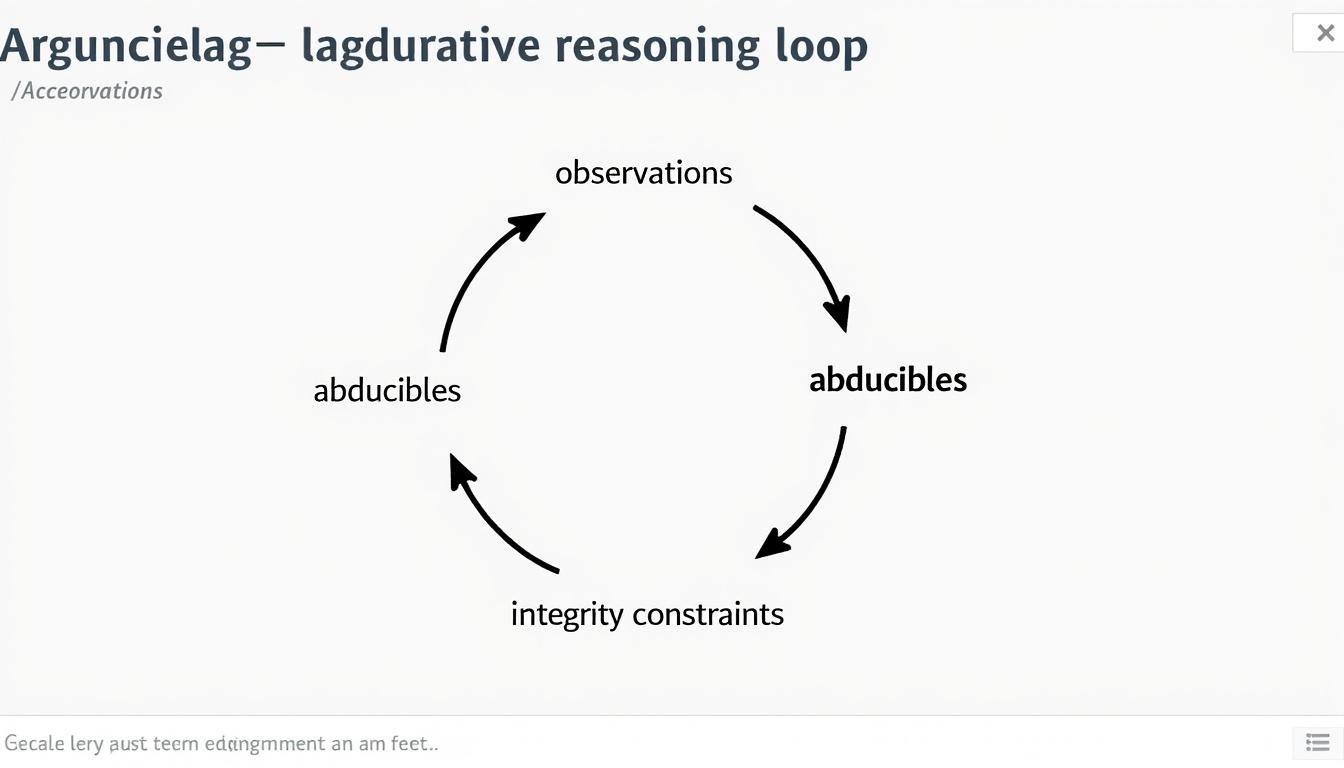

At the operational level, ALP implements a workflow that begins with translating domain knowledge into a set of logical rules. Then, when a set of observations is available, the system searches for a subset of abducibles whose assumed truth values would explain the observations while satisfying integrity constraints. The result is a collection of candidate explanations, often accompanied by justification traces that show which rules and abducibles contributed to each explanation. These traces are essential for interpretability and for updating beliefs when new evidence arrives. In practice, teams combine ALP with DeduceX-like scoring mechanisms and InsightInfer metrics to compare hypotheses, pick the most credible explanation, and present it in an auditable format. In real-world deployments, this process is iterative: as new data becomes available, explanations are revised, weakened, or discarded in favor of more plausible alternatives. The net effect is a dynamic reasoning loop that preserves explainability without sacrificing adaptability.
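The workflow above can be sketched in Python for the propositional case. This is a minimal illustration, not the implementation of any framework named here. The rules are hypothetical (head, body) pairs about a household power fault. Integrity constraints are assumed to be denials: sets of atoms that must not all hold together. The search tries abducible subsets from smallest to largest and keeps only subset-minimal explanations, together with the rule bodies that derived each observation. Exhaustive enumeration like this is exponential, so it only suits toy rule bases.

```python
from itertools import combinations

# Hypothetical rule base: (head, body) means "head holds if every atom in body holds".
RULES = [
    ("lamp_dark", ("bulb_broken",)),
    ("lamp_dark", ("no_power",)),
    ("tv_off", ("no_power",)),
    ("tv_off", ("tv_unplugged",)),
]
ABDUCIBLES = ["bulb_broken", "no_power", "tv_unplugged"]
# Denials: no explanation may make every atom of one of these sets true.
DENIALS = [{"no_power", "fridge_running"}]


def forward_chain(facts, rules):
    """Derive all atoms reachable from `facts`, recording the body that derived each head."""
    derived, trace = set(facts), {}
    changed = True
    while changed:
        changed = False
        for head, body in rules:
            if head not in derived and all(atom in derived for atom in body):
                derived.add(head)
                trace[head] = body
                changed = True
    return derived, trace


def explain(observations, rules, abducibles, denials, facts=()):
    """Return (hypothesis set, trace) pairs for minimal explanations satisfying all denials."""
    found = []
    for size in range(len(abducibles) + 1):
        for delta in combinations(abducibles, size):
            hyp = set(delta)
            if any(prev <= hyp for prev, _ in found):
                continue  # a smaller explanation already exists, so this one is not minimal
            derived, trace = forward_chain(set(facts) | hyp, rules)
            if not set(observations) <= derived:
                continue  # does not account for every observation
            if any(set(d) <= derived for d in denials):
                continue  # violates an integrity constraint
            found.append((hyp, trace))
    return found


# Observed: the lamp is dark and the TV is off, but the fridge is still running.
for hyp, trace in explain(["lamp_dark", "tv_off"], RULES, ABDUCIBLES, DENIALS,
                          facts=["fridge_running"]):
    print(sorted(hyp), trace)
```

The running fridge rules out a power cut, so the only minimal explanation left is a broken bulb plus an unplugged TV. If the fridge fact is dropped, `{no_power}` reappears as a smaller alternative explanation.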

Key structural elements in an ALP system include:

- The rule base, encoding domain knowledge and inferential patterns.

- The abducible set, comprising hypotheses that may be assumed to explain observations.

- The integrity constraint layer, which enforces domain-specific safety and coherence rules.

- The observation layer, representing current data, sensor inputs, or user-provided facts.

- The inference engine, responsible for generating and scoring explanations based on the above inputs.

In the 2025 AI ecosystem, ALP is increasingly viewed as a complementary technology to machine learning. Rather than replacing statistical models, ALP provides a transparent, structured layer for hypothesis generation and reasoning about uncertainty, which can then be integrated with data-driven components. This synergy is central to projects that value explainability and control throughout the decision-making pipeline. The following table summarizes core concepts and their roles in contemporary ALP practice.

| Key Concept | Description | Illustrative Example | Relevance in 2025 AI |
|---|---|---|---|
| Observations | Facts or data collected from the environment that require explanation. | Patient symptom set S and test results T. | Ground truth for hypothesis generation in healthcare, robotics, and security. |
| Abducibles | Hypotheses that can be assumed to explain observations. | Possible diseases D or fault conditions F. | Basis for explanatory reasoning, enabling multiple competing explanations. |
| Integrity Constraints | Rules preventing explanations that violate safety or domain constraints. | “If D is disease X, ensure treatment Y is appropriate.” | Critical for safety, compliance, and trustworthy AI outcomes. |
| Rules/Inference | Logical clauses that derive new conclusions from known facts and abducibles. | Rule: If symptom A and test B are present, infer possibility C. | Foundation of the reasoning engine; drives explanation generation. |
| Abduction Pipeline | Sequential process of observations → abducibles → constraints → explanations. | Diagnosing a problem by hypothesizing unseen root causes. | How real systems manage uncertainty and produce justifiable narratives. |

Abduction vs Deduction vs Induction

ALP is best understood against the three fundamental reasoning patterns in the AI toolbox. Deduction derives consequences that necessarily follow from known premises; given true premises, its conclusions are certain. Induction generalizes from observed instances to broader rules, often trading certainty for predictive power. Abduction, by contrast, seeks the best explanations for observed data by proposing plausible hypotheses that, if true, would account for the observations. This makes abduction especially valuable when data is incomplete or noisy, since it explicitly entertains multiple hypotheses and keeps them open to explanation and revision. In 2025 practice, researchers blend these modes in hybrid systems: abduction generates candidate explanations, deduction verifies their logical consistency, and induction-inspired learning refines the abducible set or adjusts rule weights based on feedback. The net effect is a reasoning framework that handles uncertainty robustly while preserving interpretable narratives for human oversight.
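A toy contrast of the three modes may help. The sketch below assumes a single hypothetical rule base in which either rain or a sprinkler makes the grass wet. Deduction runs the rules forward from known causes. Abduction runs them backward from an observed effect to candidate causes. Induction estimates how well a rule is supported by examples. All names are illustrative only.

```python
# Hypothetical rule base: each effect follows from any one of its listed causes.
RULES = {"wet_grass": ["rain", "sprinkler"]}


def deduce(facts):
    """Deduction: effects that necessarily follow from the known causes."""
    return {effect for effect, causes in RULES.items() if any(c in facts for c in causes)}


def abduce(observation):
    """Abduction: candidate causes that would explain an observed effect, if assumed."""
    return [{cause} for cause in RULES.get(observation, [])]


def induce(examples, cause, effect):
    """Induction: fraction of examples containing `cause` that also contain `effect`."""
    with_cause = [ex for ex in examples if cause in ex]
    if not with_cause:
        return None
    return sum(effect in ex for ex in with_cause) / len(with_cause)


EXAMPLES = [{"rain", "wet_grass"}, {"rain", "wet_grass"}, {"sprinkler", "wet_grass"}, {"rain"}]
print(deduce({"rain"}))                       # certain consequence of a known cause
print(abduce("wet_grass"))                    # competing explanations for one observation
print(induce(EXAMPLES, "rain", "wet_grass"))  # empirical support for rain -> wet_grass
```

Abduction returns two competing hypotheses rather than a single answer. Choosing between them is where integrity constraints and scoring come in.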

Illustrative examples abound in domains such as medical triage, industrial maintenance, and autonomous navigation. Consider a patient presenting with a subset of symptoms and a subset of test results. A purely deductive system might fail to explain the data if no rule directly connects the observations to a diagnosis. Abduction allows the system to posit one or more plausible conditions that would explain the observations, then test these hypotheses against constraints (e.g., safety, cost, likelihood). Hypotheses that violate a constraint are pruned or revised. This dynamic is central to explainable AI and to systems that must operate on real-time data streams. As ALP matures, so do its design patterns, with standardized templates for abducible sets, constraint layers, and scoring metrics that support consistency, performance, and user trust.
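The prune-and-revise step can be sketched as follows. This is a deliberately simplified, hypothetical example. The condition and test names are placeholders rather than clinical knowledge, hypotheses are restricted to single conditions, and each integrity constraint is modeled as a (condition, finding) pair that may not co-occur.

```python
# Placeholder knowledge: the findings each hypothetical condition would account for.
EXPLAINS = {
    "condition_a": {"fever", "cough"},
    "condition_b": {"fever", "rash"},
    "condition_c": {"cough"},
}
# Hypothetical integrity constraints: a condition is ruled out if this finding is present.
EXCLUDES = {("condition_a", "test_x_negative"), ("condition_b", "test_y_negative")}


def candidates(observations, facts):
    """Single-condition hypotheses that cover every observation and violate no constraint."""
    return sorted(
        cond
        for cond, findings in EXPLAINS.items()
        if observations <= findings and not any((cond, f) in EXCLUDES for f in facts)
    )


print(candidates({"fever"}, set()))                # two competing hypotheses
print(candidates({"fever"}, {"test_x_negative"}))  # new evidence prunes condition_a
print(candidates({"fever", "cough"}, set()))       # a new symptom narrows the field
```

Rerunning the same function as evidence accumulates is the simplest form of revision. A production system would also need multi-condition hypotheses and graded, rather than all-or-nothing, constraints.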

In summary, ALP offers a powerful, transparent mechanism for generating hypotheses that explain observed phenomena while maintaining control over the explanations through constraints and evaluation criteria. As 2025 unfolds, practitioners increasingly adopt ALP as part of hybrid AI stacks, leveraging both symbolic reasoning and statistical learning to achieve reliable, auditable, and human-friendly AI systems.

Applications and Case Studies in 2025: From Healthcare to Robotics

Abductive reasoning is no longer a niche academic curiosity; it has become a practical tool across high-stakes domains. In healthcare, ALP-based systems assist clinicians by proposing plausible diagnoses or treatment pathways when data is incomplete or conflicting. In robotics, abduction helps plan actions that accommodate uncertain sensor inputs, while in cybersecurity, ALP supports forensics by suggesting likely attack vectors given partial evidence. 2025 saw a surge in hybrid architectures where ALP is used to generate explainable hypotheses that accompany data-driven predictions, enabling healthcare professionals, engineers, and operators to understand the rationale behind AI-driven decisions. The following sections outline notable use cases, typical outcomes, and the considerations that practitioners weigh when deploying ALP in real-world settings.

Domain-specific case studies illustrate how AbductionHub and related ecosystems enable end-to-end reasoning pipelines. In medicine, an ALP-enabled decision support system might observe symptoms S and lab results L, hypothesize diseases D1, D2, and D3 as abducibles, and then check integrity constraints (such as contraindications or resource limits) to prune implausible explanations. The resulting explanations are richer and more transparent than the output of a black-box classifier, giving clinicians traceable narratives and actionable insights. In robotics, an ALP run may hypothesize environmental factors E that explain sensor discrepancies, guiding safe navigation and fault handling. In cybersecurity, ALP can reason about potential intrusions from partial logs, proposing plausible intrusion steps I that explain anomalous network behavior, with integrity constraints capturing policy and legal boundaries. Across these domains, the IdeaNavigator and InsightInfer frameworks help teams organize hypotheses, evaluate trade-offs, and communicate conclusions effectively to stakeholders.

Table: Application-oriented view of ALP in 2025

| Application Domain | Problem tackled | ALP approach | Outcome and impact |
|---|---|---|---|
| Healthcare | Partial lab results and unclear symptom presentation | Abducibles include possible diseases; integrity constraints ensure safe therapies | Improved diagnostic explainability and faster triage decisions |
| Robotics | Uncertain sensor data in dynamic environments | Hypotheses about obstacles and map features; constraints enforce safety | Robust planning with interpretable action rationales |
| Cybersecurity | Fragmented log data, need for attribution | Abducibles represent attacker models; integrity constraints bound policy | Explainable incident narratives and faster containment decisions |
| Industrial maintenance | Partial telemetry with potential component faults | Candidate fault hypotheses; constraints reflect maintenance windows | Proactive interventions with auditable justification |

In practice, teams often mix narrative explanations with structured data to support decision making. A typical workflow begins with the observation layer collecting data, followed by a reasoning step that generates several candidate abducibles with associated likelihoods. These hypotheses are then filtered via integrity constraints and ranked using an InsightInfer-driven scoring system. The highest-ranked explanation becomes the recommended action, accompanied by a trace that shows which rules and abducibles led to the conclusion. This approach aligns well with human cognitive processes and fosters trust in AI systems, particularly in regulated industries where transparency is critical. The industry trend in 2025 favors modular architectures, where ALP components plug into broader AI pipelines through well-defined interfaces, enabling teams to swap learning-based components or symbolic modules without destabilizing the entire system.
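The filter, rank, and explain workflow described above might look like this in Python. It is a sketch under stated assumptions, not InsightInfer's actual scoring. The priors are invented illustrative numbers. Abducibles are assumed independent, so a hypothesis scores the sum of their log-priors. Integrity constraints are reduced to a set of forbidden abducibles.

```python
import math
from dataclasses import dataclass

# Hypothetical prior probabilities for each abducible (illustrative numbers only).
PRIORS = {"bearing_wear": 0.2, "misalignment": 0.1, "loose_mount": 0.3,
          "imbalance": 0.15, "sensor_fault": 0.5}


@dataclass(frozen=True)
class Candidate:
    abducibles: frozenset
    trace: tuple = ()  # human-readable rules linking the abducibles to the observations


def log_score(candidate):
    """Log-probability of the hypothesis set, assuming the abducibles are independent."""
    return sum(math.log(PRIORS[a]) for a in candidate.abducibles)


def rank(candidates, forbidden):
    """Filter out candidates that use a forbidden abducible, then sort best-first."""
    admissible = [c for c in candidates if not (c.abducibles & forbidden)]
    return sorted(admissible, key=log_score, reverse=True)


# Observation: abnormal motor vibration. Candidates come from an earlier abduction step.
candidates = [
    Candidate(frozenset({"misalignment"}), ("vibration <- misalignment",)),
    Candidate(frozenset({"sensor_fault"}), ("vibration <- sensor_fault",)),
    Candidate(frozenset({"bearing_wear"}), ("vibration <- bearing_wear",)),
    Candidate(frozenset({"loose_mount", "imbalance"}),
              ("vibration <- loose_mount, imbalance",)),
]
# Hypothetical fact: the sensor was recalibrated today, so sensor_fault is ruled out.
ranked = rank(candidates, forbidden={"sensor_fault"})
best = ranked[0]
print("recommended:", sorted(best.abducibles), "because", best.trace)
```

The sensor fault has the highest prior, yet it never reaches the ranking because the constraint removes it first. The recommendation is returned together with its trace, which is what makes it auditable.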

Two illustrative videos illuminate these ideas in practice and provide concrete demonstrations of ALP in action. The first video introduces the core concepts of abductive reasoning and shows a simple healthcare scenario. The second video surveys multiple domains, including robotics and cybersecurity, where ALP-based explanations improve decision making and user trust.

The following section adds a visual map of the reasoning flow and a concise set of best practices for deploying ALP in real-world projects.

Strategies for Building Intelligent Systems with Abduction: Tooling, Frameworks, and Best Practices

Constructing effective ALP-powered systems requires careful tooling choices and disciplined design practices. In 2025, several ecosystems—often branded around the core concepts of AbductionHub, IdeaNavigator, ReasonLogic, and LogicExplorer—provide templates, libraries, and tooling to manage abducibles, constraints, and evaluation pipelines. A common strategy is to treat the abducible set as a managed garden: curate candidate explanations, track their provenance, and prune hypotheses that consistently fail under real-world data streams. This is where DeduceX and HypothesiQ contribute scoring schemas and hypothesis ranking, helping practitioners converge toward explanations that balance explanatory power with practical constraints.

Key best practices include:

- Explicitly separate knowledge representation (rules) from dynamic data (observations) and from hypothesis management (abducibles).

- Adopt modular design: isolate the inference engine, the constraints layer, and the explanation generator as pluggable components.

- Implement a transparent tracing mechanism that documents the rationale behind each suggested explanation.

- Integrate probabilistic extensions to quantify uncertainty where data is noisy or incomplete.

- Engage domain experts in annotating abducibles and validating integrity constraints to improve safety and relevance.

- Leverage hybrid architectures that combine symbolic ALP with data-driven components, ensuring seamless interoperability.
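As one possible reading of the modular-design advice, the sketch below uses Python's `typing.Protocol` to define hypothetical interfaces for a constraint checker and a scorer. Either component can then be swapped without touching the explanation selector. The class and method names are illustrative assumptions, not the API of any framework mentioned in this article.

```python
import math
from typing import Optional, Protocol


class ConstraintChecker(Protocol):
    def admissible(self, hypothesis: frozenset) -> bool: ...


class Scorer(Protocol):
    def score(self, hypothesis: frozenset) -> float: ...


class DenialChecker:
    """Rejects any hypothesis that contains every atom of a forbidden set."""

    def __init__(self, denials):
        self.denials = [frozenset(d) for d in denials]

    def admissible(self, hypothesis: frozenset) -> bool:
        return not any(d <= hypothesis for d in self.denials)


class SizeScorer:
    """Parsimony: fewer assumed abducibles score higher."""

    def score(self, hypothesis: frozenset) -> float:
        return -len(hypothesis)


class PriorScorer:
    """Sum of log-priors, assuming independent abducibles."""

    def __init__(self, priors):
        self.priors = priors

    def score(self, hypothesis: frozenset) -> float:
        return sum(math.log(self.priors[a]) for a in hypothesis)


def select(candidates, checker: ConstraintChecker, scorer: Scorer) -> Optional[frozenset]:
    """Explanation selector: works with any checker and scorer matching the protocols."""
    admissible = [h for h in candidates if checker.admissible(h)]
    return max(admissible, key=scorer.score, default=None)


CANDIDATES = [frozenset({"a", "b"}), frozenset({"c", "d"}), frozenset({"e"})]
checker = DenialChecker([{"a", "b"}])
print(select(CANDIDATES, checker, SizeScorer()))
print(select(CANDIDATES, checker, PriorScorer({"c": 0.9, "d": 0.9, "e": 0.1})))
```

Swapping `SizeScorer` for `PriorScorer` changes the preferred explanation from `{e}` to `{c, d}`. Neither `select` nor the checker needs to change.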

In practice, teams rely on a set of core patterns. Pattern one is the “explanation-first” approach, where the system prioritizes generating human-readable explanations before committing to a single decision. Pattern two is the “constraint-guided pruning” approach, which uses integrity constraints to limit the hypothesis space early, reducing computational cost. Pattern three is the “probabilistic abduction” approach, adding likelihoods to abducibles and using Bayesian-like scoring to rank explanations. The 2025 software landscape also emphasizes interoperability with existing AI stacks; ALP modules are designed to sit atop data processing pipelines and connect through standardized interfaces. The result is a versatile toolkit that supports explainable decision making in domains ranging from clinical decision support to autonomous systems. The practical takeaway is clear: ALP is not a single algorithm but a design pattern for reasoning under uncertainty—one that benefits greatly from modular architectures, explicit provenance, and human-in-the-loop refinement.
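Pattern two, constraint-guided pruning, can be illustrated with a depth-first search over abducibles. The sketch assumes constraints are denials over assumed atoms. Adding hypotheses can never repair a violated denial, so the search may abandon a branch as soon as it violates one. It enumerates constraint-satisfying hypothesis sets only; a full system would then check each against the observations. The abducible names are placeholders, and the visit counter exists only to make the savings visible.

```python
def search(abducibles, denials, prune=True):
    """Depth-first enumeration of abducible subsets that violate no denial.

    Returns (consistent subsets, number of search nodes visited).
    """
    denials = [frozenset(d) for d in denials]
    results, visited = [], 0

    def violates(hyp):
        return any(d <= hyp for d in denials)

    def visit(i, hyp):
        nonlocal visited
        visited += 1
        if prune and violates(hyp):
            return  # every extension of hyp also violates the denial: skip the subtree
        if i == len(abducibles):
            if not violates(hyp):
                results.append(hyp)
            return
        visit(i + 1, hyp)                    # branch 1: do not assume abducibles[i]
        visit(i + 1, hyp | {abducibles[i]})  # branch 2: assume abducibles[i]

    visit(0, frozenset())
    return results, visited


ABDUCIBLES = ["a", "b", "c", "d"]
DENIALS = [{"a", "b"}]  # hypothetical constraint: a and b may not both be assumed
full, full_nodes = search(ABDUCIBLES, DENIALS, prune=False)
pruned, pruned_nodes = search(ABDUCIBLES, DENIALS, prune=True)
print(len(full), full_nodes)      # same answers...
print(len(pruned), pruned_nodes)  # ...from fewer visited nodes
```

With four abducibles the saving is small. Because each early cut removes an entire subtree, the gap grows quickly as the abducible set and the number of constraints increase.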

To illustrate the tooling landscape and decision criteria, consider the following table that contrasts representative frameworks and capabilities.

| Framework / Tool | Core Capability | Best-use Scenarios | Trade-offs |
|---|---|---|---|
| AbductionHub | Structured abduction management, constraints, and explanation traces | Healthcare decision support, fault diagnosis, planning under uncertainty | May require substantial domain-specific constraint modeling |
| ReasonLogic | Probabilistic extensions and hypothesis scoring | Hybrid AI systems with ML components | Balance between symbolic rigor and probabilistic calibration |
| InferSense | Explainability-focused explanation generation and narrative reports | Regulated industries needing auditable decisions | May introduce overhead in trace construction |
| LogicExplorer | Visualization and exploration of the abductive hypothesis space | Training and education, hypothesis testing in research | Visualization complexity for large hypothesis spaces |

Within this ecosystem, teams often pair IdeaNavigator with a pragmatic data pipeline. The navigator helps to organize hypotheses into streams that reflect business priorities and safety policies. As in any live system, the key is to maintain a feedback loop: user feedback, ground-truth updates, and post-hoc analyses refine the abducible set and the rules to improve performance over time. This process ensures that ALP remains an adaptive, transparent, and trustworthy component of modern AI architectures.
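The feedback loop could be approximated by tracking how often experts confirm each proposed abducible and retiring those that keep failing, in the spirit of the "managed garden" described earlier. The class, thresholds, and Laplace-smoothed rate below are assumptions made for illustration. They are not part of IdeaNavigator or any other named tool.

```python
from collections import defaultdict


class AbducibleRegistry:
    """Hypothetical registry that retires abducibles which experts keep rejecting."""

    def __init__(self, abducibles, min_rate=0.2, min_trials=5):
        self.active = set(abducibles)
        self.proposed = defaultdict(int)   # times each abducible was offered to a reviewer
        self.confirmed = defaultdict(int)  # times the reviewer accepted it
        self.min_rate = min_rate
        self.min_trials = min_trials

    def rate(self, abducible):
        """Laplace-smoothed confirmation rate: (confirmed + 1) / (proposed + 2)."""
        return (self.confirmed[abducible] + 1) / (self.proposed[abducible] + 2)

    def record(self, abducible, confirmed):
        """Log one round of expert feedback and retire the abducible if it keeps failing."""
        self.proposed[abducible] += 1
        self.confirmed[abducible] += int(confirmed)
        if (self.proposed[abducible] >= self.min_trials
                and self.rate(abducible) < self.min_rate):
            self.active.discard(abducible)


registry = AbducibleRegistry(["bearing_wear", "sensor_fault"])
for _ in range(5):
    registry.record("sensor_fault", confirmed=False)
for verdict in (True, True, False, True):
    registry.record("bearing_wear", confirmed=verdict)
print(sorted(registry.active), round(registry.rate("bearing_wear"), 2))
```

The `min_trials` guard keeps a hypothesis from being retired after a single unlucky rejection. The smoothed rate keeps early estimates away from 0 and 1.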

Two more videos expand on tooling and practical deployments, illustrating how ALP concepts translate into real-world systems and workflows. The second video builds on healthcare and robotics case studies, offering demonstrations of the complete reasoning pipeline in action, from data ingestion to explanation delivery.

Between sections, a concise visual reference helps readers connect the theory to practice. The images below depict the abductive reasoning loop and a modern ALP-enabled pipeline.

Future Trends, Challenges, and the Role of Abduction in AI Safety

Looking ahead, ALP faces several pivotal challenges and opportunities as AI systems become more integrated into essential decision-making processes. Chief among these are scalability, interoperability with learning-based models, evaluation metrics for explanations, and governance concerns. As of 2025, researchers and engineers are exploring hybrid architectures that pair ALP with probabilistic models, deep learning components, and interactive interfaces that enable humans to steer the reasoning process without sacrificing transparency. This evolution is driven by the need to handle noisy data, partial signals, and evolving domains where static rule sets become brittle. In parallel, regulators and practitioners emphasize the importance of explainability, auditability, and safety, placing ALP in a central role for trustworthy AI.

Open challenges include:

- Scaling abductive search in large, dynamic knowledge bases without compromising response time.

- Defining quantitative metrics for explanation quality and user trust.

- Managing the trade-offs between expressiveness of the rule base and computational feasibility.

- Integrating probabilistic abductive semantics with machine learning models.

- Ensuring privacy and ethics when abducibles touch sensitive domains.

Potential solutions and ongoing research focus on:

- Incremental abduction that updates explanations as new data streams in.

- Hybrid inference engines that delegate uncertain aspects to probabilistic modules while preserving symbolic justification.

- Standards for explanation narratives, including schemas for traceability and reproducibility.

- Human-in-the-loop mechanisms that enable domain experts to steer abduction, prune hypotheses, and provide corrections.

- Tooling improvements that reduce boilerplate in modeling abducibles and constraints.

In practice, organizations are adopting ALP to complement data-driven models, particularly where safety, compliance, and interpretability matter most. Frameworks such as HypothesiQ and CognitiveWays are increasingly integrated into enterprise AI stacks to provide audit trails and human-friendly explanations. The trajectory suggests a future where abductive reasoning is a standard component of AI systems designed for high reliability, resilience, and user trust. As the field matures, we can expect richer interfaces for hypothesis management, better interoperability with ML pipelines, and more streamlined deployment patterns that bring ALP from research labs into everyday applications.

FAQ

What is Abductive Logic Programming (ALP) and why is it useful?

ALP is a logic programming paradigm that derives explanations for observations as sets of assumed hypotheses (abducibles), while respecting integrity constraints. It is especially valuable when data is incomplete, because it provides transparent, testable hypotheses and justification traces that support explainable AI and informed decision making.

How does ALP differ from traditional logic programming?

Traditional logic programming focuses on deduction: deriving conclusions strictly from rules and facts. ALP adds the abductive component, allowing plausible hypotheses to be assumed to explain observations. This enables reasoning under uncertainty and supports auditable explanations.

What role do frameworks like AbductionHub play in practice?

Frameworks such as AbductionHub provide structured management of abducibles, integrity constraints, and explanation traces, along with scoring and ranking of hypotheses. They help teams build scalable, auditable, explainable AI systems in domains like healthcare, robotics, and cybersecurity.

Can ALP be combined with machine learning?

Yes. ALP can operate as the symbolic reasoning layer that complements data-driven models. Probabilistic extensions, scoring of hypotheses, and integration with learning components enable hybrid systems that benefit from both explainability and predictive power.